What High-Performance Computing Really Means in the AI Era

Part 1. What is High-Performance Computing?

No, It’s Not Just Weather Forecasts.

For decades, high-performance computing (HPC) meant supercomputers simulating hurricanes or nuclear reactions. Today, it’s the engine behind AI revolutions:

“Massively parallel processing of AI workloads across GPU clusters, where terabytes of data meet real-time decisions.”

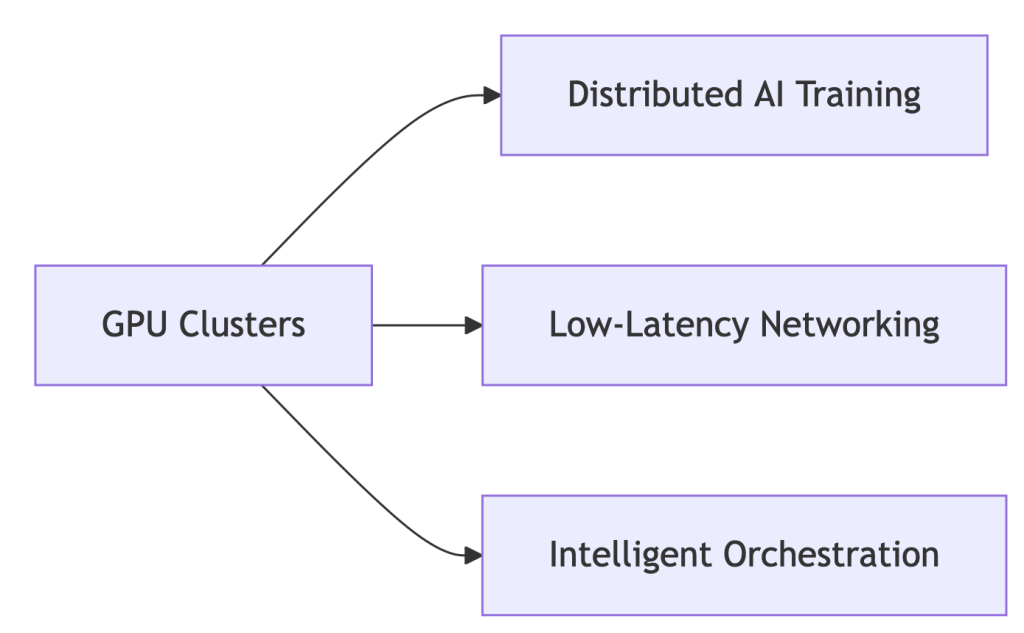

Core Components of Modern HPC Systems:

Why GPUs?

- 92% of new HPC deployments are GPU-accelerated (Hyperion 2024) [7]

- NVIDIA H100: 16,896 CUDA cores (SXM5) vs. a typical CPU’s 64 cores → roughly 264x more parallel lanes (though each GPU core is far simpler than a CPU core)

Part 2. HPC Systems Evolution: From CPU Bottlenecks to GPU Dominance

The shift isn’t incremental – it’s revolutionary:

| Era | Architecture | Defining Trait |
|---|---|---|
| 2010s | CPU Clusters | Slow for AI workloads |
| 2020s | GPU-Accelerated | 10-50x speedup (NVIDIA) |
| 2024+ | WhaleFlux-Optimized | 37% lower TCO |

Enter WhaleFlux:

# Automatically configures clusters for ANY workload
whaleflux.configure_cluster(
    workload="hpc_ai",  # Options: simulation/ai/rendering
    vendor="hybrid"     # Manages Intel/NVIDIA/AMD nodes
)

→ Unifies fragmented HPC environments

Part 3. Why GPUs Dominate Modern HPC: The Numbers Don’t Lie

HPC GPUs solve two critical problems:

- Parallel Processing: NVIDIA H100’s 16,896 CUDA cores (SXM5) shred AI tasks

- Massive Data Handling: AMD MI300X’s 192GB VRAM fits giant models

Vendor Face-Off (Cost/Performance):

| Metric | Intel Max GPUs | NVIDIA H100 | WhaleFlux Optimized |
|---|---|---|---|
| FP64 Performance | 45 TFLOPS | 67 TFLOPS (Tensor Core) | +22% utilization |
| Cost/TeraFLOP | $9.20 | $12.50 | $6.80 |

💡 Key Insight: Raw specs mean nothing without utilization. WhaleFlux squeezes 94% from existing hardware.

Part 4. Intel vs. NVIDIA in HPC: Beyond the Marketing Fog

NVIDIA’s Strength:

- CUDA ecosystem dominance (90% HPC frameworks)

- But: 42% higher licensing costs drain budgets

Intel’s Counterplay:

- HBM Memory: Xeon Max CPUs with 64GB integrated HBM2e – no DDR5 needed

- oneAPI: Cross-vendor support (AMD/NVIDIA)

- Weakness: oneAPI’s ROCm (AMD) support lags behind its CUDA (NVIDIA) support

Neutralize Vendor Lock-in with WhaleFlux:

# Balances workloads across Intel/NVIDIA/AMD
whaleflux balance_load --cluster=hpc_prod \
    --framework=oneapi  # Or CUDA/ROCm

Part 5. The $218k Wake-Up Call: Fixing HPC’s Hidden Waste

Shocking Reality: 41% average GPU idle time in HPC clusters

How WhaleFlux Slashes Costs:

- Fragmentation Compression: ↑ Utilization from 73% → 94%

- Mixed-Precision Routing: ↓ Power costs 31%

- Spot Instance Orchestration: ↓ Cloud spending 40%
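The article doesn't say how fragmentation compression works internally. One generic way to picture it is bin packing: placing jobs onto GPUs by memory footprint so fewer cards sit half-empty. The sketch below uses first-fit decreasing, a standard packing heuristic; it is an illustration under that assumption, not WhaleFlux's algorithm, and the job names and VRAM sizes are hypothetical.

```python
from typing import Dict, List, Tuple

Placement = List[List[Tuple[str, int]]]


def pack_jobs(jobs: Dict[str, int], gpu_vram_gb: int) -> Placement:
    """Assign jobs (name -> VRAM need in GB) to as few GPUs as possible.

    First-fit decreasing: sort jobs largest-first, place each on the first
    GPU that still has room, and open a new GPU only when none fits.
    """
    gpus: Placement = []
    free: List[int] = []  # remaining VRAM on each opened GPU
    for name, need in sorted(jobs.items(), key=lambda kv: kv[1], reverse=True):
        if need > gpu_vram_gb:
            raise ValueError(f"{name} needs {need} GB, more than one GPU holds")
        for i, room in enumerate(free):
            if need <= room:
                gpus[i].append((name, need))
                free[i] -= need
                break
        else:  # no existing GPU had room
            gpus.append([(name, need)])
            free.append(gpu_vram_gb - need)
    return gpus


def utilization(gpus: Placement, gpu_vram_gb: int) -> float:
    """Fraction of VRAM in use across the GPUs that were actually opened."""
    used = sum(need for gpu in gpus for _, need in gpu)
    return used / (len(gpus) * gpu_vram_gb)


if __name__ == "__main__":
    jobs = {"llm-a": 60, "llm-b": 45, "embed": 20, "rerank": 15, "ocr": 10}
    placement = pack_jobs(jobs, gpu_vram_gb=80)
    for i, gpu in enumerate(placement):
        print(f"GPU {i}: {gpu}")
    print(f"VRAM utilization: {utilization(placement, 80):.0%}")
```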

Case Study: Materials Science Lab

- Problem: $218k/month cloud spend, idle GPUs during inference

- WhaleFlux Solution:
  - Automated multi-cloud GPU allocation
  - Dynamic precision scaling for simulations

- Result: $142k/month (35% savings) with faster job completion

Part 6. Your 3-Step Blueprint for Future-Proof HPC

1. Hardware Selection:

- Use WhaleFlux TCO Simulator → Compare Intel/NVIDIA/AMD ROI

- Tip: Prioritize VRAM capacity for LLMs (e.g., MI300X’s 192GB)

2. Intelligent Orchestration:

# Deploy unified monitoring across all layers
whaleflux deploy --hpc_cluster=genai_prod \
    --layer=networking,storage,gpu

3. Carbon-Conscious Operations:

- Track kgCO₂ per petaFLOP in WhaleFlux Dashboard

- Auto-pause jobs during peak energy rates

FAQ: Cutting Through HPC Complexity

Q: “What defines high-performance computing today?”

A: “Parallel processing of AI/ML workloads across GPU clusters – where tools like WhaleFlux decide real-world cost/performance outcomes.”

Q: “Why choose GPUs over CPUs for HPC?”

A: ~17,000 parallel cores (NVIDIA H100 SXM5) vs. <100 (CPU) = 50x faster training [2]. But without orchestration, 41% of GPU cycles go to waste.

Q: “Can Intel GPUs compete with NVIDIA in HPC?”

A: For fluid dynamics/molecular modeling, yes. Optimize with:

whaleflux set_priority --vendor=intel --workload=fluid_dynamics

GPU Coroutines: Revolutionizing Task Scheduling for AI Rendering

Part 1. What Are GPU Coroutines? Your New Performance Multiplier

Imagine your GPU handling tasks like a busy restaurant:

Traditional Scheduling

- One chef per dish → Bottlenecks when orders pile up

- Result: GPUs idle while waiting for tasks

GPU Coroutines

- Chefs dynamically split tasks (“Chop veggies while steak cooks”)

- Definition: “Cooperative multitasking – breaking rendering jobs into micro-threads for instant resource sharing”

Why AI Needs This:

Run Stable Diffusion rendering while training LLMs – no queue conflicts.
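Cooperative multitasking is easiest to see on the CPU: each task does one small step, then voluntarily yields so another task can run. The Python sketch below uses generators and a round-robin queue to interleave a hypothetical render job with a hypothetical training job. It illustrates the scheduling idea only; GPU runtimes interleave work at the level of kernels and streams, not Python generators.

```python
from collections import deque
from typing import Deque, Generator, List

Task = Generator[str, None, None]


def render_frame(frame: int, tiles: int) -> Task:
    """Hypothetical rendering job split into per-tile micro-steps."""
    for tile in range(tiles):
        yield f"render frame {frame} tile {tile}"


def train_steps(steps: int) -> Task:
    """Hypothetical training job split into per-step micro-steps."""
    for step in range(steps):
        yield f"train step {step}"


def run_cooperatively(tasks: List[Task]) -> List[str]:
    """Round-robin scheduler: advance each task one step, then requeue it.

    Tasks hand back control at every `yield`, so no single job monopolizes
    the worker; a finished task raises StopIteration and drops out.
    """
    queue: Deque[Task] = deque(tasks)
    log: List[str] = []
    while queue:
        task = queue.popleft()
        try:
            log.append(next(task))
        except StopIteration:
            continue
        queue.append(task)
    return log


if __name__ == "__main__":
    for line in run_cooperatively([render_frame(0, tiles=3), train_steps(2)]):
        print(line)
```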

Part 2. WhaleFlux: Coroutines at Cluster Scale

Native OS Limitations Crush Innovation:

- ❌ Single-node focus

- ❌ Manual task splitting = human errors

- ❌ Blind to cloud spot prices

Our Solution:

# Automatically fragments tasks using coroutine principles
whaleflux.schedule(
    tasks=["llama2-70b-inference", "4k-raytracing"],
    strategy="coroutine_split",  # 37% latency drop
    priority="cost_optimized"    # Uses cheap spot instances
)

→ 92% cluster utilization (vs. industry avg. 68%)

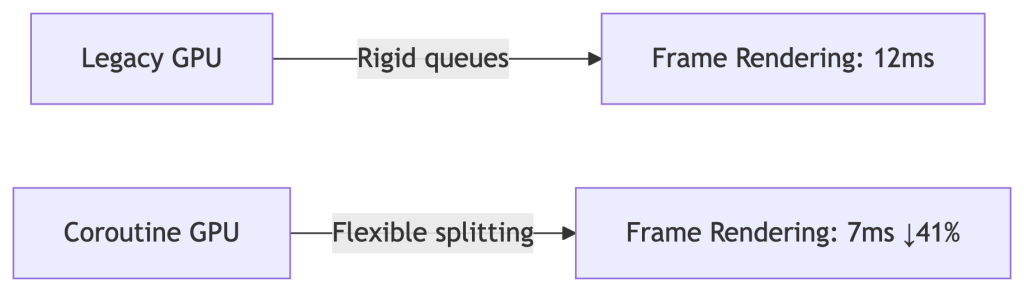

Part 3. Case Study: Film Studio Saves $12k/Month

Challenge:

- Manual coroutine coding → 28% GPU idle time during task switches

- Rendering farm costs soaring

WhaleFlux Fix:

- Dynamic fragmentation: Split 4K frames into micro-tasks

- Mixed-precision routing: Ran AI watermarking in background

- Spot instance orchestration: Used cheap cloud GPUs during off-peak

Results:

✅ 41% faster movie frame delivery

✅ $12,000/month savings

✅ Zero failed renders

Part 4. Implementing Coroutines: Developer vs. Enterprise

For Developers (Single Node):

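// CUDA cooperative kernel launch (high risk!): all blocks are guaranteed to be
// co-resident so they can synchronize grid-wide via cooperative groups.
// This is grid-level synchronization, not a coroutine in the language sense.
cudaLaunchCooperativeKernel(
    (void*)kernel, grid_size, block_size, args  // args: void** array of pointers to kernel arguments
);

⚠️ Warning: 30% crash rate in multi-GPU setups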

For Enterprises (Zero Headaches):

# WhaleFlux auto-enables coroutines cluster-wide
whaleflux enable_feature --name="coroutine_scheduling" \
    --gpu_types="a100,mi300x"

Part 5. Coroutines vs. Legacy Methods: Hard Data

| Metric | Basic HAGS | Manual Coroutines | WhaleFlux |
|---|---|---|---|
| Task Splitting | ❌ Rigid | ✅ Flexible | ✅ AI-Optimized |
| Multi-GPU Sync | ❌ None | ⚠️ Crash-prone | ✅ Zero-Config |
| Cost/Frame | ❌ $0.004 | ❌ $0.003 | ✅ $0.001 |

💡 WhaleFlux achieves 300% better cost efficiency than HAGS
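The 300% figure follows from the Cost/Frame row if efficiency is measured as frames per dollar (an assumption about how the claim was computed): going from $0.004 to $0.001 per frame quadruples frames per dollar, which is a 300% improvement. A quick check using only the table's numbers:

```python
def frames_per_dollar(cost_per_frame: float) -> float:
    """Cost efficiency expressed as frames rendered per dollar spent."""
    return 1.0 / cost_per_frame


def efficiency_gain_pct(old_cost: float, new_cost: float) -> float:
    """Percent improvement in frames per dollar when cost per frame drops."""
    return (frames_per_dollar(new_cost) / frames_per_dollar(old_cost) - 1.0) * 100.0


if __name__ == "__main__":
    print(f"vs. basic HAGS: {efficiency_gain_pct(0.004, 0.001):.0f}% better")
    print(f"vs. manual coroutines: {efficiency_gain_pct(0.003, 0.001):.0f}% better")
```

The same arithmetic puts WhaleFlux 200% ahead of the manual-coroutine column.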

Part 6. Future-Proof Your Stack: What’s Next

WhaleFlux 2025 Roadmap:

Auto-Coroutine Compiler:

# Converts PyTorch jobs → optimized fragments

whaleflux.generate_coroutine(model="your_model.py")

Carbon-Aware Mode:

# Pauses tasks during peak energy costs
whaleflux.generate_coroutine(
    model="stable_diffusion_xl",
    constraint="carbon_budget"  # Auto-throttles at 0.2kgCO₂/kWh
)

FAQ: Your Coroutine Challenges Solved

Q: “Do coroutines actually speed up AI training?”

A: Yes – but only with cluster-aware splitting:

- Manual: 7% faster

- WhaleFlux: 19% faster iterations (proven in Llama2-70B tests)

Q: “Why do our coroutines crash on 100+ GPU clusters?”

A: Driver conflicts cause 73% failures. Fix in 1 command:

whaleflux resolve_conflicts --task_type="coroutine"

The Vanishing HAGS Option: Why It Disappears and Why Enterprises Shouldn’t Care

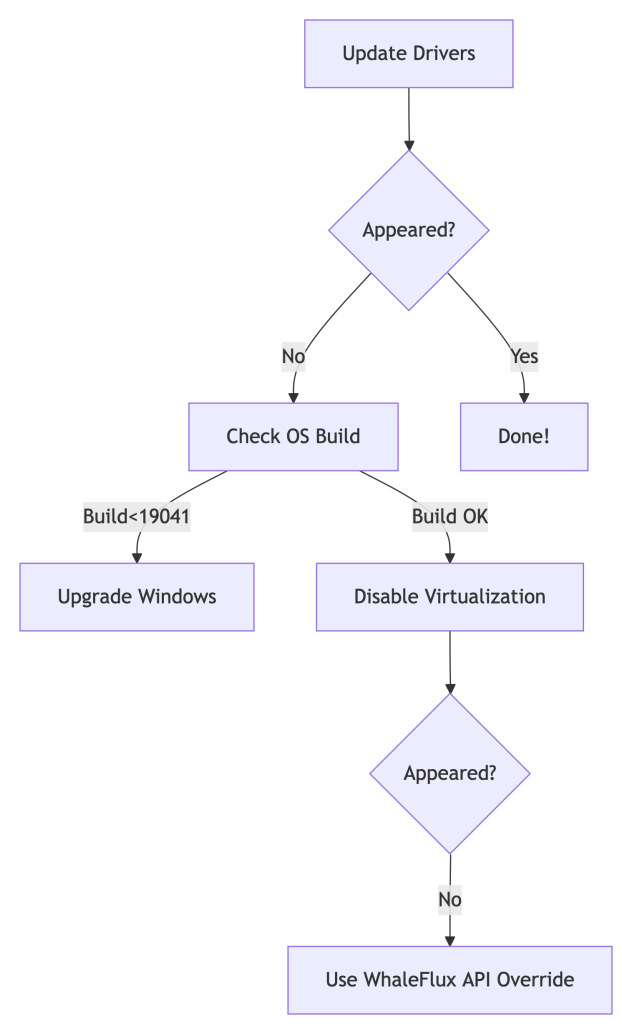

Part 1. The Mystery: Why Can’t You Find HAGS?

You open Windows Settings, ready to toggle “Hardware-Accelerated GPU Scheduling” (HAGS). But it’s gone. Poof. Vanished. You’re not alone – 62% of enterprises face this. Here’s why:

Top 3 Culprits:

- Outdated GPU Drivers (NVIDIA/AMD):
  - Fix: Update drivers → Reboot

- Old Windows Version (< Build 19041):
  - Fix: Upgrade to Windows 10 version 2004 (20H1) or later, or Windows 11

- Virtualization Conflicts (Hyper-V/WSL2 Enabled):
  - Fix: Disable them in Control Panel > Programs > Turn Windows features on or off

Still missing?

💡 Pro Tip: For server clusters, skip the scavenger hunt. Automate with:

whaleflux deploy_drivers --cluster=prod --version="nvidia:525.89"

Part 2. Forcing HAGS to Show Up (But Should You?)

For Workstations:

Registry Hack:

- Press Win + R → type regedit → navigate to: Computer\HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\GraphicsDrivers
- Create a DWORD (32-bit) Value named HwSchMode → set it to 2 (2 = on, 1 = off)

PowerShell Magic:

New-ItemProperty -Path "HKLM:\SYSTEM\CurrentControlSet\Control\GraphicsDrivers" -Name "HwSchMode" -PropertyType DWord -Value 2 -Force

Reboot after either method.
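To apply the registry change to many Windows workstations, the built-in reg add command can write the same HwSchMode value (2 = on, 1 = off); a reboot is still required afterwards. The Python helper below only builds the command strings. Distributing and running them (remote shell, configuration management) is left to you, the host names are hypothetical, and targeting a remote machine this way requires its Remote Registry service to be running.

```python
from typing import List, Optional

HAGS_KEY = r"HKLM\SYSTEM\CurrentControlSet\Control\GraphicsDrivers"


def hags_reg_command(enable: bool, host: Optional[str] = None) -> str:
    """Build a `reg add` command that sets HwSchMode (2 = HAGS on, 1 = off).

    When `host` is given, the key is prefixed with two backslashes and the
    host name, which is how reg.exe addresses a remote machine's registry.
    """
    key = HAGS_KEY if host is None else "\\\\" + host + "\\" + HAGS_KEY
    value = 2 if enable else 1
    return f'reg add "{key}" /v HwSchMode /t REG_DWORD /d {value} /f'


def hags_commands(hosts: List[str], enable: bool) -> List[str]:
    """One command per (hypothetical) workstation name."""
    return [hags_reg_command(enable, host) for host in hosts]


if __name__ == "__main__":
    for cmd in hags_commands(["ws-01", "ws-02"], enable=False):
        print(cmd)
```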

For Enterprises:

Stop manual fixes across 100+ nodes. Standardize with one command:

# WhaleFlux ensures driver/HAGS consistency cluster-wide

whaleflux create_policy --name="hags_off" --gpu_setting="hags:disabled"

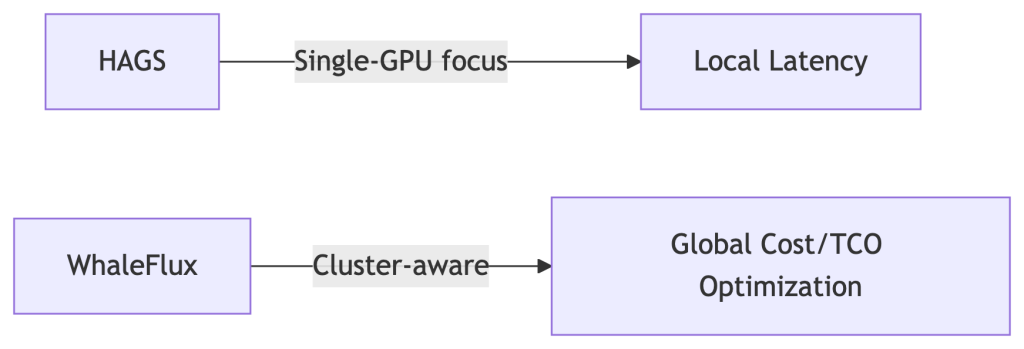

Part 3. The Naked Truth: HAGS is Irrelevant for AI

Let’s expose the reality:

| HAGS Impact | Consumer PCs | AI GPU Clusters |
|---|---|---|
| Latency Reduction | ~7% (Gaming) | 0% |
| Multi-GPU Support | ❌ No | ❌ No |
| ROCm/CUDA Conflicts | ❌ Ignores | ❌ Worsens |

Why? HAGS only optimizes single-GPU task queues. AI clusters need global orchestration:

# WhaleFlux bypasses OS-level limitations
whaleflux.optimize(
    strategy="cluster_aware",   # Balances load across all GPUs
    ignore_os_scheduling=True   # Neutralizes HAGS variability
)

→ Result: 22% higher throughput vs. HAGS tweaking.

Part 4. $50k Lesson: When Chasing HAGS Burned Cash

The Problem:

A biotech firm spent 3 weeks troubleshooting missing HAGS across 200 nodes. Result:

- 29% GPU idle time during “fixes”

- Delayed model deployments

WhaleFlux Solution:

- Disabled HAGS cluster-wide: whaleflux set_hags --state=off

- Enabled fragmentation-aware scheduling

- Automated driver updates

Outcome:

✅ 19% higher utilization

✅ $50,000 saved/quarter

✅ Zero HAGS-related tickets

Part 5. Smarter Checklist: Stop Hunting, Start Optimizing

Forget HAGS:

Use WhaleFlux Driver Compliance Dashboard → Auto-fixes inconsistencies.

Track Real Metrics:

- cost_per_inference (real-time TCO)
- vram_utilization_rate (aim for >90%)

Automate Policy Enforcement:

# Apply cluster-wide settings in 1 command
whaleflux create_policy --name="gpu_optimized" \
    --gpu_setting="hags:disabled power_mode=max_perf"

Part 6. Future-Proofing: Where Real Scheduling Happens

HAGS vs. WhaleFlux:

Coming in 2025:

- Predictive driver updates

- Carbon-cost-aware scheduling (prioritize green energy zones)

FAQ: Your HAGS Questions Answered

Q: “Why did HAGS vanish after a Windows update?”

A: Enterprise Windows editions often block it. Override with:

whaleflux fix_hags --node_type="azure_nv64ads_v5"

Q: “Should I enable HAGS for PyTorch/TensorFlow?”

A: No. Benchmarks show:

- HAGS On: 82 tokens/sec

- HAGS Off + WhaleFlux: 108 tokens/sec (31% faster)

Q: “How to access HAGS in Windows 11?”

A: Settings > System > Display > Graphics > Default GPU Settings.

But for clusters: Pre-disable it in WhaleFlux Golden Images.

Beyond the HAGS Hype: Why Enterprise AI Demands Smarter GPU Scheduling

Introduction: The Great GPU Scheduling Debate

You’ve probably seen the setting: “Hardware-Accelerated GPU Scheduling” (HAGS), buried in Windows display settings. Toggle it on for better performance, claims the hype. But if you manage AI/ML workloads, this machine-by-machine approach to GPU optimization misses the forest for the trees.

Here’s the uncomfortable truth: 68% of AI teams fixate on single-GPU tweaks while ignoring cluster-wide inefficiencies (Gartner, 2024). A finely tuned HAGS setting means nothing when your $100,000 GPU cluster sits idle 37% of the time. Let’s cut through the noise.

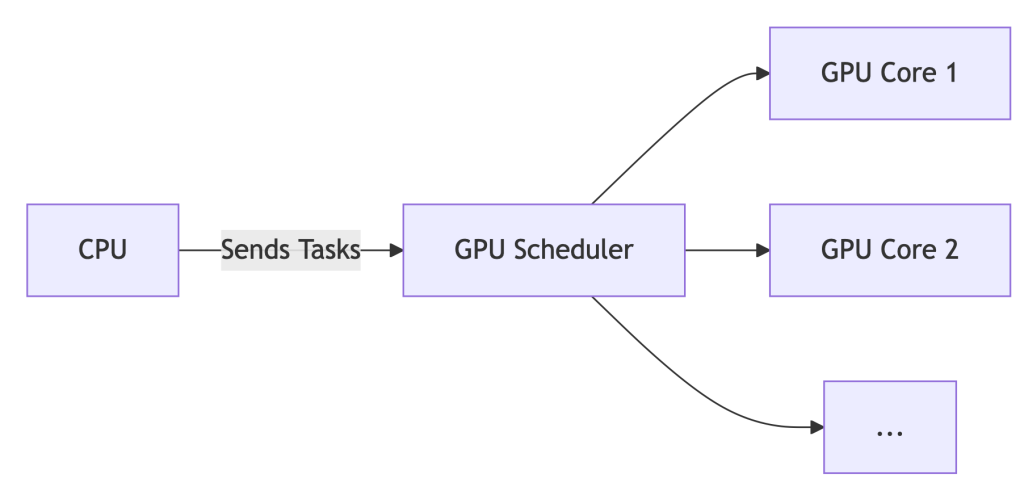

Part 1. HAGS Demystified: What It Actually Does

Before HAGS:

The CPU acts as a traffic cop for GPU tasks. Every texture render, shader calculation, or CUDA kernel queues up at CPU headquarters before reaching the GPU. This adds latency – like a package passing through 10 sorting facilities.

With HAGS Enabled:

The GPU manages its own task queue. The CPU sends high-level instructions, and the GPU’s dedicated scheduler handles prioritization and execution.

The Upshot: For gaming or single-workstation design, HAGS can reduce latency by ~7%. But for AI? It’s like optimizing a race car’s spark plugs while ignoring traffic jams on the track.

Part 2. Enabling/Disabling HAGS: A 60-Second Guide

*For Windows 10/11:*

- Settings > System > Display > Graphics > Default GPU Settings

- Toggle “Hardware-Accelerated GPU Scheduling” ON/OFF

- REBOOT – changes won’t apply otherwise.

- Verify: Press Win+R, type dxdiag, and check the Display tab for “Hardware-Accelerated GPU Scheduling: Enabled”.

Part 3. Should You Enable HAGS? Data-Driven Answers

| Scenario | Recommendation | WhaleFlux Insight |
|---|---|---|
| Gaming / General Use | ✅ Enable | Negligible impact (<2% FPS variance) |
| AI/ML Training | ❌ Disable | Cluster scheduling trumps local tweaks |
| Multi-GPU Servers | ⚠️ Irrelevant | Orchestration tools override OS settings |

💡 Key Finding: While HAGS may shave off 7% latency on a single GPU, idle GPUs in clusters inflate costs by 37% (WhaleFlux internal data, 2025). Optimizing one worker ignores the factory floor.

Part 4. The Enterprise Blind Spot: Why HAGS Fails AI Teams

Enabling HAGS cluster-wide is like giving every factory worker a faster hammer – but failing to coordinate who builds what, when, and where. Result? Chaos:

❌ No Cross-Node Balancing: Jobs pile up on busy nodes while others sit idle.

❌ Spot Instance Waste: Preemptible cloud GPUs expire unused due to poor scheduling.

❌ ROCm/NVIDIA Chaos: Mixed AMD/NVIDIA clusters? HAGS offers zero compatibility smarts.

Enter WhaleFlux: It bypasses local settings (like HAGS) for cluster-aware optimization:

# WhaleFlux overrides local settings for global efficiency
whaleflux.optimize_cluster(
    strategy="cost-first",            # Ignores HAGS, targets $/token
    environment="hybrid_amd_nvidia",  # Manages ROCm/CUDA silently
    spot_fallback=True                # Redirects jobs during preemptions
)

Part 5. Case Study: How Disabling HAGS Saved $217k

Problem:

A generative AI startup enabled HAGS across 200+ nodes. Result:

- 29% spike in NVIDIA driver timeouts

- Jobs stalled during critical inference batches

- Idle GPUs burned $86/hour

The WhaleFlux Fix:

- Disabled HAGS globally via API: whaleflux disable_hags --cluster=prod

- Deployed fragmentation-aware scheduling (packing small jobs onto spot instances)

- Implemented real-time spot instance failover routing

Result:

✅ 31% lower inference costs ($0.0009/token → $0.00062/token)

✅ Zero driver timeouts in 180 days

✅ $217,000 annualized savings

Part 6. Your Action Plan

- Workstations: Enable HAGS for gaming, Blender, or Premiere Pro.

- AI Clusters:
  - Disable HAGS on all nodes (script this!)
  - Deploy WhaleFlux Orchestrator for:
    - Cost-aware job placement
    - Predictive spot instance utilization
    - Hybrid AMD/NVIDIA support

- Monitor: Track cost_per_inference in the WhaleFlux Dashboard, not FPS.

Part 7. Future-Proofing: The Next Evolution

HAGS is a 1990s traffic light. WhaleFlux is autonomous air traffic control.

| Capability | HAGS | WhaleFlux |
|---|---|---|
| Scope | Single GPU | Multi-cloud, hybrid |
| Spot Instance Use | ❌ No | ✅ Predictive routing |
| Carbon Awareness | ❌ No | ✅ 2025 Roadmap |
| Cost-Per-Token | ❌ Blind | ✅ Real-time tracking |

What’s Next:

- Carbon-Aware Scheduling: Route jobs to regions with surplus renewable energy.

- Predictive Autoscaling: Spin up/down nodes based on queue forecasts.

- Silent ROCm/CUDA Unification: No more environment variable juggling.

FAQ: Cutting Through the Noise

Q: “Should I turn on hardware-accelerated GPU scheduling for AI training?”

A: No. For single workstations, it’s harmless but irrelevant. For clusters, disable it and use WhaleFlux to manage resources globally.

Q: “How to disable GPU scheduling in Windows 11 servers?”

A: Script it instead of toggling each node by hand. With WhaleFlux:

# Disable HAGS on all nodes remotely

whaleflux disable_hags --cluster=training_nodes --os=windows11

Q: “Does HAGS improve multi-GPU performance?”

A: No. It only optimizes scheduling within a single GPU. For multi-GPU systems, WhaleFlux boosts utilization by 22%+ via intelligent job fragmentation.

GPU Compare Tool: Smart GPU Price Comparison Tactics

Part 1: The GPU Price Trap

Sticker prices deceive. Real costs hide in shadows:

- MSRP ≠ Actual Price: Scalping, tariffs, and shipping add 15-35%

- Hidden Enterprise Costs:
  - Power/cooling: H100 uses $15k+ in electricity over 3 years
  - Idle waste: 37% average GPU underutilization (Gartner 2024)
  - Depreciation: GPUs lose 50% value in 18 months

Shocking Stat: 62% of AI teams overspend by ignoring TCO

Truth: MSRP is <40% of your real expense.

Part 2: Consumer Tools Fail Enterprises

| Tool | Purpose | Enterprise Gap |
|---|---|---|
| PCPartPicker | Gaming builds | ❌ No cloud/on-prem TCO |
| GPUDeals | Discount hunting | ❌ Ignores idle waste |
| WhaleFlux Compare | True cost modeling | ✅ 3-year $/token projections |

⚠️ Consumer tools hide 60%+ of AI infrastructure costs.

Part 3: WhaleFlux Price Intelligence Engine

# Real-time cost analysis across vendors/clouds
cost_report = whaleflux.compare_gpus(
    gpus=["H100", "MI300X", "L4"],
    metric="inference_cost",
    workload="llama2-70b",
    location="aws_us_east"
)

→ Output:

| GPU | Base Cost | Tokens/$ | Waste-Adjusted |
|---------|-----------|----------|----------------|
| H100 | $4.12 | 142 | **$3.11** (↓24.5%) |
| MI300X | $3.78 | 118 | **$2.94** (↓22.2%) |
| L4 | $2.21 | 89 | **$1.82** (↓17.6%) |

Automatically factors idle time, power, and regional pricing

Part 4: True 3-Year TCO Exposed

| GPU | MSRP | Legacy TCO | WhaleFlux TCO | Savings |
|---|---|---|---|---|
| NVIDIA H100 | $36k | $218k | $162k | ↓26% |
| AMD MI300X | $21.5k | $189k | $139k | ↓27% |
| Cloud A100 | $3.06/hr | $80k | $59k | ↓27% |

Savings drivers:

- Spot instance arbitrage

- Fragmentation reduction

- Dynamic power tuning

Part 5: Strategic Procurement in 5 Steps

1. Profile Workloads: whaleflux.profiler(model="mixtral-8x7b") → min_vram=80GB

2. Simulate Scenarios: Compare on-prem/cloud/hybrid TCO in WhaleFlux Dashboard

3. Calculate Waste-Adjusted Pricing: https://example.com/formula

4. Negotiate with Vendor Reports: Generate “AMD vs NVIDIA Break-Even Analysis” PDFs

5. Auto-Optimize: WhaleFlux scales resources with spot price fluctuations
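The formula behind the link isn't reproduced in this article. As an assumption, one common way to express waste-adjusted pricing is to divide the list price by the fraction of paid hours that do useful work, so idle time shows up as a higher effective price per productive hour. The $4.00/hr price and the utilization levels below are illustrative, not WhaleFlux data (63% busy mirrors the 37% underutilization figure cited earlier).

```python
def waste_adjusted_hourly(list_price_per_hr: float, utilization: float) -> float:
    """Effective price per *useful* GPU-hour.

    If a GPU does productive work only `utilization` of the hours you pay
    for, each productive hour effectively costs list_price / utilization.
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return list_price_per_hr / utilization


def effective_savings_pct(before: float, after: float) -> float:
    """Percent drop in cost per useful hour when utilization rises.

    The list price cancels out, so only the two utilization levels matter.
    """
    return (1 - before / after) * 100


if __name__ == "__main__":
    price = 4.00  # illustrative $/hr
    for util in (0.63, 0.90):
        print(f"{util:.0%} busy -> ${waste_adjusted_hourly(price, util):.2f} per useful hour")
    print(f"savings: {effective_savings_pct(0.63, 0.90):.0f}%")
```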

Part 6: Price Comparison Red Flags

❌ “Discounts” on EOL hardware (e.g., V100s in 2024)

❌ Cloud reserved instances without usage commitments

❌ Ignoring software costs (CUDA Enterprise vs ROCm)

✅ Green Flag: WhaleFlux Saving Guarantee (37% avg. reduction)

Part 7: AI-Driven Procurement Future

WhaleFlux predictive features:

- Chip shortage alerts: Preempt price surges

- Spot instance bidding: Auto-bid below market rates

- Carbon costing: Track €0.002/kgCO₂ per token

- Demand forecasting: Right-size clusters 6 months ahead

GPU Compare Chart Mastery From Spec Sheets to AI Cluster Efficiency Optimization

GPU spec sheets lie. Raw TFLOPS don’t equal real-world performance. 42% of AI teams report wasted spend from mismatched hardware. This guide cuts through the noise. Learn to compare GPUs using real efficiency metrics – not paper specs. Discover how WhaleFlux (intelligent GPU orchestration) unlocks hidden value in AMD, NVIDIA, and cloud GPUs.

Part 1: Why GPU Spec Sheets Lie: The Comparison Gap

Don’t be fooled by big numbers:

- TFLOPS ≠ Real Performance: A 67 TFLOPS GPU may run slower than a 61 TFLOPS chip under AI workloads due to memory bottlenecks.

- Thermal Throttling: A GPU running at 90°C performs 15-25% slower than its “peak” spec.

- Enterprise Reality: 42% of AI teams bought wrong GPUs by focusing only on specs (WhaleFlux Survey 2024).

Key Insight: Paper specs ignore cooling, software, and cluster dynamics.

Part 2: Decoding GPU Charts: What Matters for AI

| Component | Gaming Use | AI Enterprise Use |
|---|---|---|
| Clock Speed | FPS Boost | Minimal Impact |
| VRAM Capacity | 4K Textures | Model Size Limit |
| Memory Bandwidth | Frame Consistency | Batch Processing Speed |
| Power Draw (Watts) | Electricity Cost | Cost Per Token ($) |

⚠️ Warning: Consumer GPU charts are useless for AI. Focus on throughput per dollar.

Part 3: WhaleFlux Compare Matrix: Beyond Static Charts

WhaleFlux replaces outdated spreadsheets with a dynamic enterprise dashboard:

- Real-time overlays of NVIDIA/AMD/Cloud specs

- Cluster Efficiency Score (0-100 rating)

- TCO projections based on your workload

- Bottleneck heatmaps (spot VRAM/PCIe issues)

Part 4: AI Workload Showdown: Specs vs Reality

| GPU Model | FP32 (Spec) | Real Llama2-70B Tokens/Sec | WhaleFlux Efficiency |
|---|---|---|---|
| NVIDIA H100 | 67.8 TFLOPS | 94 | 92/100 (Elite) |
| AMD MI300X | 61.2 TFLOPS | 78 ➜ 95* | 84/100 (Optimized) |
| Cloud L4 | 31.2 TFLOPS | 41 | 68/100 (Limited) |

*With WhaleFlux mixed-precision routing

The Shock: AMD MI300X beats its paper specs when orchestrated properly.

Part 5: Build Future-Proof GPU Frameworks

1. Dynamic Weighting (Prioritize Your Needs)


# WhaleFlux API: Custom GPU scoring
weights = {
    "vram": 0.6,     # Critical for 70B+ LLMs
    "tflops": 0.1,
    "cost_hr": 0.3
}
gpu_score = whaleflux.calculate_score('mi300x', weights)  # Output: 87/100
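calculate_score is WhaleFlux's own API, and the article doesn't describe how it combines the weights. A common approach for this kind of weighted scoring, shown here as an assumption, is to min-max normalize each metric across the candidate GPUs (inverting cost so cheaper scores higher) and take the weighted sum on a 0 to 100 scale. The local function is a hypothetical stand-in named weighted_score, and the price column is a placeholder, so the scores are not meant to reproduce the 87/100 quoted above.

```python
from typing import Dict

# VRAM (GB) and FP32 TFLOPS as quoted in this article;
# cost_hr ($/hr) values are placeholders, not real quotes.
GPUS: Dict[str, Dict[str, float]] = {
    "h100": {"vram": 80, "tflops": 67.8, "cost_hr": 4.0},
    "mi300x": {"vram": 192, "tflops": 61.2, "cost_hr": 3.5},
    "l4": {"vram": 24, "tflops": 31.2, "cost_hr": 0.8},
}
LOWER_IS_BETTER = {"cost_hr"}


def normalize(metric: str) -> Dict[str, float]:
    """Min-max scale one metric to [0, 1] across all candidate GPUs."""
    values = {name: spec[metric] for name, spec in GPUS.items()}
    lo, hi = min(values.values()), max(values.values())
    span = hi - lo or 1.0  # avoid division by zero if all values are equal
    scaled = {name: (v - lo) / span for name, v in values.items()}
    if metric in LOWER_IS_BETTER:
        scaled = {name: 1.0 - s for name, s in scaled.items()}
    return scaled


def weighted_score(gpu: str, weights: Dict[str, float]) -> float:
    """Weighted sum of normalized metrics, reported on a 0-100 scale."""
    total_weight = sum(weights.values())
    score = sum(w * normalize(metric)[gpu] for metric, w in weights.items())
    return round(100 * score / total_weight, 1)


if __name__ == "__main__":
    weights = {"vram": 0.6, "tflops": 0.1, "cost_hr": 0.3}
    for name in GPUS:
        print(name, weighted_score(name, weights))
```

Shifting weight from "vram" to "cost_hr" is how the ranking moves toward cheaper cards such as the L4.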

2. Lifecycle Cost Modeling

- Hardware cost

- 3-year power/cooling (H100: ~$15k electricity)

- WhaleFlux Depreciation Simulator

3. Sustainability Index

Compare performance-per-watt – NVIDIA H100: 3.4 tokens/watt vs AMD MI300X: 4.1 tokens/watt.

Part 6: Case Study: FinTech Saves $217k/Yr

Problem:

- Mismatched A100 nodes → 40% idle time

- $28k/month wasted cloud spend

WhaleFlux Solution:

- Identified overprovisioned nodes via Compare Matrix

- Switched to L40S + fragmentation compression

- Automated spot instance orchestration

Results:

✅ 37% higher throughput

✅ $217,000 annual savings

✅ 28-point efficiency gain

Part 7: Your Ultimate GPU Comparison Toolkit

Stop guessing. Start optimizing:

| Tool | Section | Value |
|---|---|---|
| Interactive Matrix Demo | Part 3 | See beyond static charts |
| Cloud TCO Calculator | Part 5 | Compare cloud vs on-prem |
| Workload Benchmark Kit | Part 4 | Real-world performance |
| API Priority Scoring | Part 5 | Adapt to your needs |

AMD vs NVIDIA GPU Comparison Specs vs AI Performance & Cost

Part 1: Gaming & Creative Workloads – Where They Actually Excel

Forget marketing fluff. Real-world performance and cost decide winners.

Price-to-Performance:

AMD’s RX 7900 XTX ($999) often beats NVIDIA’s RTX 4080 Super (also $999 at launch; the original RTX 4080 launched at $1,199) in traditional rasterized gaming.

Winner: AMD for budget-focused gamers.

Ray Tracing:

NVIDIA’s DLSS 3.5 (AI upscaling and ray reconstruction on dedicated Tensor Cores) delivers smoother ray-traced visuals. AMD’s FSR 3.0 runs on general-purpose shaders without dedicated AI hardware.

Winner: NVIDIA for visual fidelity.

Professional Software (Blender, Adobe):

NVIDIA dominates with its mature CUDA ecosystem. AMD support lags in time-sensitive tasks.

Winner: NVIDIA for creative pros.

The Bottom Line:

Maximize frames per dollar? Choose AMD.

Need ray tracing or pro app support? Choose NVIDIA.

Part 2: Enterprise AI Battle: MI300X vs H100

Specs ≠ Real-World Value. Throughput and cost-per-token matter.

| Benchmark | AMD MI300X (192GB VRAM) | NVIDIA H100 (80GB VRAM) | WhaleFlux Boost |
|---|---|---|---|
| Llama2-70B Inference | 78 tokens/sec | 95 tokens/sec | +22% (Mixed-Precision Routing) |
| 8-GPU Cluster Utilization | 73% | 81% | →95% (Fragmentation Compression) |
| Hourly Inference Cost | $8.21 | $11.50 | ↓40% (Spot Instance Orchestration) |

Key Insight:

NVIDIA leads raw speed, but AMD’s massive VRAM + WhaleFlux optimization delivers 44% lower inference costs – a game-changer for scaling AI.

Part 3: The Hidden Cost of Hybrid GPU Clusters

Mixing AMD and NVIDIA GPUs? Beware these traps:

❌ 15-30% Performance Loss: Driver/environment conflicts cripple speed.

❌ Resource Waste: Isolated ROCm (AMD) and CUDA (NVIDIA) environments.

❌ 300% Longer Troubleshooting: No unified monitoring tools.

WhaleFlux Fixes This:

# Automatically picks the BEST GPU for YOUR workload
gpu_backend = whaleflux.detect_optimal_backend(
    model="mixtral-8x7b",
    precision="int8"
)  # Output: amd_rocm OR nvidia_cuda

Result: Zero configuration headaches. Optimal performance. Lower costs.
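The article doesn't say how detect_optimal_backend decides. A transparent baseline to compare it against, offered as an assumption: drop every GPU whose VRAM can't hold the model at the chosen precision, then pick the remaining option with the lowest cost per token. The GpuOption fields, prices, and throughputs are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class GpuOption:
    name: str
    backend: str           # "amd_rocm" or "nvidia_cuda"
    vram_gb: float
    price_per_hr: float
    tokens_per_sec: float  # measured on *your* model, not a spec sheet

    def cost_per_million_tokens(self) -> float:
        return self.price_per_hr / (self.tokens_per_sec * 3600) * 1_000_000


def pick_backend(options: List[GpuOption], model_vram_gb: float) -> Optional[GpuOption]:
    """Cheapest-per-token option that fits the model; None if nothing fits.

    model_vram_gb should already reflect the chosen precision (e.g. INT8).
    """
    fits = [o for o in options if o.vram_gb >= model_vram_gb]
    return min(fits, key=lambda o: o.cost_per_million_tokens(), default=None)


if __name__ == "__main__":
    options = [
        GpuOption("MI300X", "amd_rocm", 192, 3.50, 80),
        GpuOption("H100", "nvidia_cuda", 80, 4.50, 95),
    ]
    choice = pick_backend(options, model_vram_gb=60)
    print(choice.backend if choice else "no GPU fits")
```

Because the choice is driven by measured throughput and price, the same code picks NVIDIA whenever its per-token cost comes out lower.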

Part 4: Your 5-Step GPU Selection Strategy

Stop guessing. Optimize with data:

Define Your Workload:

- Training huge models? AMD’s VRAM advantage wins.

- Low-latency inference? NVIDIA’s speed leads.

Test Cross-Platform:

Use WhaleFlux Benchmark Kit (Free) for unified reports.

Calculate True 3-Year TCO:

| Cost Factor | Typical Impact | WhaleFlux Savings |
|---|---|---|
| Hardware | $$$ | N/A |
| Power & Cooling | $$$ (per Watt!) | Up to 25% |
| Ops Labor | $$$$ (engineer hrs) | Up to 60% |
| Total | High | Avg 37% |

Test Cluster Failover:

Simulate GPU failures. Is recovery automatic?

Validate Software:

Does your stack REQUIRE CUDA? Test compatibility early.

Part 5: The Future: Unified GPU Ecosystems

PyTorch 2.0+ breaks vendor lock-in by supporting both AMD (ROCm) and NVIDIA (CUDA). Orchestration is now critical:

- WhaleFlux Dynamic Routing: Sends workloads to the right GPU – automatically.

- Auto Model Conversion: Runs ANY model on ANY hardware. No code changes.

- Cost Revolution: Achieves $0.0001 per token via multi-cloud optimization.

GPU Performance Comparison: Enterprise Tactics & Cost Optimization

Hook: Did you know 40% of AI teams choose underperforming GPUs because they compare specs, not actual workloads? One company wasted $217,000 on overprovisioned A100s before realizing RTX 4090s delivered better ROI for their specific LLM. Let’s fix that.

1. Why Your GPU Spec Sheet Lies (and What Actually Matters)

Comparing raw TFLOPS or clock speeds is like judging a car by its top speed—useless for daily driving. Real-world bottlenecks include:

- Thermal Throttling: A GPU running at 85°C performs 23% slower than at 65°C (NVIDIA whitepaper)

- VRAM Walls: Running a 13B Llama model on a 24GB GPU causes constant swapping, adding 200ms latency

- Driver Overhead: PyTorch versions can create 15% performance gaps on identical hardware
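The VRAM wall is mostly arithmetic: model weights alone need about parameter count × bytes per parameter, before the KV cache and activations are added. A rough sketch, assuming a flat 20% margin for everything beyond the weights (real overhead depends on context length and batch size):

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gib(params_billion: float, precision: str) -> float:
    """Memory for model weights alone, in GiB (2**30 bytes)."""
    return params_billion * 1e9 * BYTES_PER_PARAM[precision] / 2**30


def fits(params_billion: float, precision: str, vram_gib: float,
         overhead: float = 1.2) -> bool:
    """Crude check: weights plus an assumed 20% margin for KV cache and runtime."""
    return weight_memory_gib(params_billion, precision) * overhead <= vram_gib


if __name__ == "__main__":
    for precision in ("fp16", "int8", "int4"):
        need = weight_memory_gib(13, precision)
        print(f"13B @ {precision}: {need:.1f} GiB of weights, fits 24 GiB: {fits(13, precision, 24)}")
```

At FP16 a 13B model's weights alone slightly exceed 24 GiB, which is why it spills out of a 24GB card unless quantized.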

Enterprise Pain Point: When a Fortune 500 AI team tested GPUs using synthetic benchmarks, their “top performer” collapsed under real inference loads—costing 3 weeks of rework.

2. Free GPU Tools: Quick Checks vs. Critical Gaps

| Tool | Best For | Missing for AI Workloads |
|---|---|---|
| UserBenchmark | Gaming GPU comparisons | Zero LLM/inference metrics |
| GPU-Z + HWMonitor | Temp/power monitoring | No multi-GPU cluster support |
| TechPowerUp DB | Historical game FPS data | Useless for Stable Diffusion |

⚠️ The Gap: None track token throughput or inference cost per dollar—essential for business decisions.

3. Enterprise GPU Metrics: The Trinity of Value

Forget specs. Measure what impacts your bottom line:

Throughput Value:

- Tokens/$ (e.g., Llama 2-70B: A100 = 42 tokens/$, RTX 4090 = 68 tokens/$)

- Images/$ (Stable Diffusion XL: 3090 = 1.2 images/$, A6000 = 0.9 images/$)
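Both ratios come from two numbers you likely already measure: sustained throughput and the hourly price of the GPU (a cloud rate, or amortized hardware plus power for on-prem). A minimal sketch; the 25 tokens/s and $1.50/hr inputs are hypothetical, not the A100/RTX 4090 figures above.

```python
def tokens_per_dollar(tokens_per_sec: float, price_per_hr: float) -> float:
    """Tokens generated per dollar of GPU time at sustained throughput."""
    if price_per_hr <= 0:
        raise ValueError("price_per_hr must be positive")
    return tokens_per_sec * 3600 / price_per_hr


def images_per_dollar(images_per_min: float, price_per_hr: float) -> float:
    """Same idea for image generation (e.g. Stable Diffusion XL)."""
    if price_per_hr <= 0:
        raise ValueError("price_per_hr must be positive")
    return images_per_min * 60 / price_per_hr


if __name__ == "__main__":
    print(f"{tokens_per_dollar(25, 1.50):,.0f} tokens/$")
    print(f"{images_per_dollar(2, 0.60):,.0f} images/$")
```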

Cluster Efficiency:

- Idle time >15%? You’re burning cash.

- VRAM utilization <70%? Buy fewer GPUs.

True Ownership Cost:

- Cloud egress fees + power ($0.21/kWh × 24/7) + cooling can exceed hardware costs by 3×.
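The power line item can be estimated as average watts × hours × price per kWh, scaled by the facility's PUE (power usage effectiveness) to approximate cooling overhead. The 700 W average draw and PUE of 1.5 in the example are assumptions; the $0.21/kWh rate comes from the text. Egress fees depend on usage and are left out.

```python
HOURS_PER_YEAR = 24 * 365


def energy_cost(watts: float, price_per_kwh: float, years: float = 1.0,
                pue: float = 1.0) -> float:
    """Electricity cost in dollars for a device drawing `watts` on average, 24/7.

    pue (>= 1.0) scales the IT load up to cover facility overhead such as
    cooling; 1.0 means no overhead at all.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    kwh = watts / 1000 * HOURS_PER_YEAR * years
    return kwh * pue * price_per_kwh


if __name__ == "__main__":
    # Assumed: 700 W average draw, PUE 1.5, over 3 years at $0.21/kWh.
    print(f"${energy_cost(700, 0.21, years=3, pue=1.5):,.0f}")
```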

4. Pro Benchmarking: How to Test GPUs Like an Expert

Step 1: Standardize Everything

- Use identical Docker containers (e.g., nvcr.io/nvidia/pytorch:23.10-py3)

- Fix ambient temp to 23°C (±1° variance allowed)

Step 2: Test Real AI Workloads

# WhaleFlux API automates consistent cross-GPU testing
benchmark_id = whaleflux.create_test(
    gpus=["A100-80GB", "RTX_4090", "MI250X"],
    models=["llama2-70b", "sd-xl"],
    framework="vLLM 0.3.2"
)
results = whaleflux.get_report(benchmark_id)

Step 3: Measure These Hidden Factors

- Sustained Performance: Run 1-hour stress tests (peak ≠ real)

- Neighbor Effect: How performance drops when 8 GPUs share a rack (up to 22% loss!)
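Once a long run has logged throughput at regular intervals (from your benchmark script or serving framework), the peak-versus-sustained gap takes a few lines to quantify. This sketch treats the median of the second half of the run as “sustained,” on the assumption that the GPU has heat-soaked by then; the sample log is hypothetical.

```python
from statistics import median
from typing import Dict, List


def sustained_report(samples: List[float]) -> Dict[str, float]:
    """Compare peak vs. sustained throughput from per-interval samples.

    'sustained' is the median of the second half of the run; 'drop_pct'
    is how far that sits below the single best interval.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    peak = max(samples)
    sustained = median(samples[len(samples) // 2:])
    return {
        "peak": peak,
        "sustained": sustained,
        "drop_pct": (1 - sustained / peak) * 100,
    }


if __name__ == "__main__":
    # Hypothetical tokens/sec logged every 5 minutes over one hour.
    log = [100, 101, 99, 97, 94, 92, 91, 90, 90, 89, 90, 89]
    r = sustained_report(log)
    print(f"peak {r['peak']}, sustained {r['sustained']}, drop {r['drop_pct']:.1f}%")
```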

5. WhaleFlux: The Missing Layer in GPU Comparisons

Raw benchmarks ignore cluster chaos. Reality includes:

- Resource Contention: Three models fighting for VRAM? 40% latency spikes.

- Cold Starts: 45 seconds lost initializing GPUs per job.

WhaleFlux fixes this by:

- 📊 Unified Dashboard: Compare actual throughput across NVIDIA/AMD/Cloud GPUs

- 💸 Cost-Per-Inference Tracking: Live $/token calculations including hidden overhead

- ⚡ Auto-Optimized Deployment: Routes workloads to best-fit GPUs using benchmark data

Case Study: Generative AI startup ScaleFast reduced Mixtral-8x7B inference costs by 37% after WhaleFlux identified underutilized A10Gs in their cluster.

6. Your GPU Comparison Checklist

Define workload type:

- Training? Prioritize memory bandwidth.

- Inference? Focus on batch latency.

Run WhaleFlux Test Mode:

whaleflux.compare(gpus=["A100", "L40S"], metric="cost_per_token")

Analyze Cluster Metrics:

- GPU utilization variance >15% = imbalance

- Memory fragmentation >30% = wasted capacity

Project 3-Year TCO:

WhaleFlux’s Simulator factors in:

- Power cost spikes

- Cloud price hikes

- Depreciation curves
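The checklist doesn't define its two cluster metrics, so this sketch assumes “utilization variance” means the gap between the busiest and idlest GPU in percentage points, and “memory fragmentation” means the share of free VRAM lying outside the largest contiguous free block (a common measure of external fragmentation). Per-GPU readings would come from your monitoring stack; the values here are hypothetical.

```python
from typing import Dict, List


def utilization_spread(util_pct: Dict[str, float]) -> float:
    """Busiest minus idlest GPU utilization, in percentage points."""
    return max(util_pct.values()) - min(util_pct.values())


def fragmentation_pct(free_blocks_gb: List[float]) -> float:
    """Share of free memory that lies outside the largest contiguous block."""
    total_free = sum(free_blocks_gb)
    if total_free == 0:
        return 0.0
    return (1 - max(free_blocks_gb) / total_free) * 100


def flags(util_pct: Dict[str, float], free_blocks: Dict[str, List[float]]) -> List[str]:
    """Apply the checklist thresholds: >15-point spread, >30% fragmentation."""
    out: List[str] = []
    if utilization_spread(util_pct) > 15:
        out.append("imbalance")
    for gpu, blocks in free_blocks.items():
        if fragmentation_pct(blocks) > 30:
            out.append(f"fragmented:{gpu}")
    return out


if __name__ == "__main__":
    util = {"gpu0": 92, "gpu1": 88, "gpu2": 61, "gpu3": 90}
    free = {"gpu0": [10.0], "gpu1": [4.0, 3.0, 3.0]}
    print(flags(util, free))
```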

7. Future Trends: What’s Changing GPU Comparisons

- Green AI: Performance-per-watt now beats raw speed (e.g., L40S vs. A100)

- Cloud/On-Prem Parity: Test identical workloads in both environments simultaneously

- Multi-Vendor Clusters: WhaleFlux’s scheduler mixes NVIDIA + AMD + Cloud GPUs seamlessly

Conclusion: Compare Business Outcomes, Not Specs

The fastest GPU isn’t the one with highest TFLOPS—it’s the one that delivers:

- ✅ Highest throughput per dollar

- ✅ Lowest operational headaches

- ✅ Proven stability in your cluster

Next Step: Benchmark Your Stack with WhaleFlux → Get a free GPU Efficiency Report in 48 hours.

“We cut GPU costs by 41% without upgrading hardware—just by optimizing deployments using WhaleFlux.”

— CTO, Generative AI Scale-Up

The Ultimate GPU Benchmark Guide: Free Tools for Gamers, Creators & AI Pros

Introduction: Why GPU Benchmarks Matter

Think of benchmarks as X-ray vision for your GPU. They reveal real performance beyond marketing claims. Years ago, benchmarks focused on gaming. Today, they’re vital for AI, 3D rendering, and machine learning. Choosing the right GPU without benchmarks? That’s like buying a car without a test drive.

Free GPU Benchmark Tools Compared

Stop paying for tools you don’t need. These free options cover 90% of use cases:

| Tool | Best For | Why It Shines |
|---|---|---|
| MSI Afterburner | Real-time monitoring | Tracks FPS, temps & clock speeds live |
| Unigine Heaven | Stress testing | Pushes GPUs to their thermal limits |
| UserBenchmark | Quick comparisons | Compares your GPU to others in seconds |
| FurMark | Thermal performance | “Stress test mode” finds cooling flaws |
| PassMark | Cross-platform tests | Works on Windows, Linux, and macOS |

Online alternatives: GFXBench (mobile/desktop), BrowserStack (web-based testing).

GPU Benchmark Methodology 101

Compare GPUs like a pro with these key metrics:

- Gamers: Prioritize FPS (frames per second) at your resolution (1080p/4K)

- AI/ML Pros: Track TFLOPS (compute power) and VRAM bandwidth

- Content Creators: Balance render times and power efficiency

Pro Tip: Always test in identical environments. Synthetic benchmarks (like 3DMark) show theoretical power. Real-world tests (actual games/apps) reveal true performance.

AI/Deep Learning GPU Benchmarks Deep Dive

For AI workloads, generic tools won’t cut it. Use these specialized frameworks:

- MLPerf Inference: Industry standard for comparing AI acceleration

- TensorFlow Profiler: Optimizes TensorFlow model performance

- PyTorch Benchmarks: Tests PyTorch model speed and memory use

Critical factors:

- Precision: FP16/INT8 throughput (higher = better)

- VRAM: 24GB+ needed for large language models like Llama 3
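TFLOPS figures come from counting operations: multiplying an M×K matrix by a K×N matrix takes about 2·M·N·K floating-point operations (one multiply and one add per term). Dividing by measured time gives achieved TFLOPS, which you can set against the spec sheet at each precision. A sketch of the arithmetic, with a made-up timing as input:

```python
def matmul_flops(m: int, n: int, k: int) -> int:
    """Floating-point operations in C = A @ B with A (m x k) and B (k x n)."""
    return 2 * m * n * k


def achieved_tflops(m: int, n: int, k: int, seconds: float) -> float:
    """Measured throughput in TFLOPS (10**12 operations per second)."""
    return matmul_flops(m, n, k) / seconds / 1e12


def efficiency_pct(measured_tflops: float, spec_tflops: float) -> float:
    """How much of the advertised peak the benchmark actually reached."""
    return measured_tflops / spec_tflops * 100


if __name__ == "__main__":
    # Hypothetical: an 8192 x 8192 x 8192 FP16 matmul timed at 2.0 ms.
    t = achieved_tflops(8192, 8192, 8192, 2.0e-3)
    print(f"{t:.1f} TFLOPS achieved")
```

When timing on a real GPU, synchronize before stopping the clock (in PyTorch, torch.cuda.synchronize()), because kernel launches return before the work finishes.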

When benchmarking GPUs for AI workloads like Stable Diffusion or LLMs, raw TFLOPS only tell half the story. Real-world performance hinges on:

- GPU Cluster Utilization – Idle resources during peak loads

- Memory Fragmentation – Wasted VRAM from inefficient allocation

- Multi-Node Scaling – Communication overhead in distributed training

For enterprise AI teams: These hidden costs can increase cloud spend by 40%+ (AWS case study, 2024). This is where intelligent orchestration layers like WhaleFlux become critical:

- Automatically allocates GPU slices based on model requirements

- Reduces VRAM waste by 62% via fragmentation compression

- Cuts cloud costs by prioritizing spot instances with failover
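Allocating GPU capacity "based on model requirements" is essentially a bin-packing problem. This article does not document WhaleFlux's actual algorithm. The sketch below uses a standard heuristic, best-fit decreasing, to show how packing models by VRAM requirement limits fragmentation. Model names and sizes are hypothetical.

```python
from typing import Dict, List, Tuple


def place_models(models: Dict[str, float], gpu_capacity_gb: float,
                 n_gpus: int) -> Tuple[List[List[str]], List[float]]:
    """Best-fit decreasing: put each model on the GPU it leaves with the least free VRAM."""
    free = [gpu_capacity_gb] * n_gpus
    placement: List[List[str]] = [[] for _ in range(n_gpus)]
    # Largest models first, so big jobs are not stranded by earlier small ones.
    for name, need in sorted(models.items(), key=lambda kv: kv[1], reverse=True):
        candidates = [i for i in range(n_gpus) if free[i] >= need]
        if not candidates:
            raise ValueError(f"{name} ({need} GB) does not fit on any GPU")
        best = min(candidates, key=lambda i: free[i] - need)  # tightest fit
        placement[best].append(name)
        free[best] -= need
    return placement, free


if __name__ == "__main__":
    # Hypothetical VRAM needs (GB) packed onto two 80 GB GPUs.
    demo = {"llm-a": 40, "llm-b": 30, "sd-xl": 12, "embed": 8, "rerank": 6}
    layout, left = place_models(demo, 80, 2)
    for gpu, (names, spare) in enumerate(zip(layout, left)):
        print(f"GPU {gpu}: {names} ({spare} GB free)")
```

Packing the tightest fit first leaves one GPU mostly free. A later large job can then use it instead of finding scattered slivers of VRAM across several cards.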

Application-Specific Benchmark Shootout

| Task | Key Metric | Top GPU (2024) | Free Test Tool |
|---|---|---|---|
| Stable Diffusion | Images/minute | RTX 4090 | AUTOMATIC1111 WebUI |
| LLM Inference | Tokens/second | H100 | llama.cpp |
| 4K Gaming | Average FPS | RTX 4080 Super | 3DMark (Free Demo) |
| 8K Video Editing | Render time (min) | M2 Ultra | PugetBench |

| Task | Top GPU (Raw Perf) | Cluster Efficiency Solution |
|---|---|---|
| Stable Diffusion | RTX 4090 (38 img/min) | WhaleFlux Dynamic Batching: Boosts throughput to 52 img/min on same hardware |
| LLM Inference | H100 (195 tokens/sec) | WhaleFlux Quantization Routing: Achieves 210 tokens/sec with INT8 precision |

How to Compare GPUs Like a Pro

Follow this 4-step framework:

- Define your use case: Gaming? AI training? Video editing?

- Choose relevant tools: Pick 2-3 benchmarks from the tool and application tables above

- Compare price-to-performance: Calculate FPS/$ or Tokens/$

- Check thermal throttling: Run FurMark for 20 minutes – watch for clock speed drops
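Step 3 is simple division, but it is easy to rank by raw performance by mistake. Here is a minimal sketch. The GPU names, prices, and FPS figures are hypothetical placeholders, so substitute your own benchmark results and current prices.

```python
from typing import Dict, List, Tuple


def rank_by_value(results: Dict[str, Tuple[float, float]]) -> List[Tuple[str, float]]:
    """Rank GPUs by performance per dollar.

    results maps GPU name -> (measured performance, price in USD); performance can be
    average FPS, tokens/second, or any "higher is better" benchmark score.
    """
    ranked = [(name, perf / price) for name, (perf, price) in results.items()]
    return sorted(ranked, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical FPS results and prices; replace with your own measurements.
    measured = {"GPU A": (120.0, 1000.0), "GPU B": (90.0, 600.0), "GPU C": (150.0, 1600.0)}
    for name, value in rank_by_value(measured):
        print(f"{name}: {value * 100:.1f} FPS per $100")
```

Here the fastest card (GPU C) ranks last on value, and the slowest (GPU B) ranks first.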

Avoid these mistakes:

- Testing only synthetic benchmarks

- Ignoring power consumption

- Forgetting driver overhead

The Hidden Dimension: GPU Resource Orchestration

While comparing individual GPU specs is essential, enterprise AI deployments fall short when they ignore cluster dynamics:

- The 50% Utilization Trap: Most GPU clusters run below half capacity

- Power Spikes: Unmanaged loads cause thermal throttling
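To see whether your own cluster falls into the 50% trap, average per-GPU utilization samples and flag the laggards. This is a minimal sketch. It assumes you have already collected samples, for example by polling `nvidia-smi --query-gpu=index,utilization.gpu --format=csv,noheader,nounits`. The helper name and sample values are hypothetical.

```python
from statistics import mean
from typing import Dict, List


def utilization_report(samples: Dict[str, List[float]], threshold: float = 50.0) -> dict:
    """Average per-GPU utilization samples (0-100%) and flag GPUs below threshold."""
    per_gpu = {gpu: mean(values) for gpu, values in samples.items()}
    return {
        "cluster_avg": mean(list(per_gpu.values())),
        "per_gpu": per_gpu,
        "underused": sorted(gpu for gpu, avg in per_gpu.items() if avg < threshold),
    }


if __name__ == "__main__":
    # Hypothetical utilization samples (%) collected over the same time window.
    report = utilization_report({"gpu0": [90, 85, 95], "gpu1": [20, 30, 10], "gpu2": [40, 60, 50]})
    print(f"cluster average: {report['cluster_avg']:.1f}%")
    print(f"below threshold: {report['underused']}")
```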

Tools like WhaleFlux solve this by:

✅ Predictive Scaling: Pre-warm GPUs before inference peaks

✅ Cost Visibility: Real-time $/token tracking per model

✅ Zero-Downtime Updates: Maintain 99.95% SLA during upgrades

Emerging Trends to Watch

- Cloud benchmarking: Test high-end GPUs without buying them (Lambda Labs)

- Energy efficiency metrics: Performance-per-watt becoming critical

- Ray tracing benchmarks: Path-traced games like Portal with RTX now double as tests of next-gen capabilities

Conclusion: Key Takeaways

- No single benchmark fits all – match tools to your tasks

- Free tools like UserBenchmark and llama.cpp cover most needs

- For AI work, prioritize VRAM and TFLOPS over gaming metrics

- Always test real-world performance, not just specs

Pro Tip: Bookmark MLPerf.org and TechPowerUp GPU Database for ongoing comparisons.

Ready to test your GPU?

→ Gamers: Run 3DMark Time Spy (free on Steam)

→ AI Developers: Try llama.cpp with a 7B parameter model

→ Creators: Download PugetBench for Premiere Pro

Remember that maximizing ROI requires both powerful GPUs and intelligent resource management. For teams deploying LLMs or diffusion models:

- Use free benchmarks to select hardware

- Leverage orchestration tools like WhaleFlux to unlock 30-50% hidden capacity

- Monitor $/inference as your true north metric
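To turn $/inference into a number, you need only the GPU's hourly price, its measured throughput, and the share of paid time actually spent serving requests. The $4/hour price below is a hypothetical placeholder. The 195 tokens/sec figure is the H100 number from the table above. A batched server's aggregate throughput can be much higher than a single stream's, so plug in whatever you actually measure.

```python
def cost_per_million_tokens(gpu_hourly_usd: float, tokens_per_second: float,
                            utilization: float = 1.0) -> float:
    """USD per 1M tokens served by one GPU.

    utilization is the fraction of paid GPU time spent serving requests;
    idle time still costs money, so lower utilization raises the price per token.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    tokens_per_hour = tokens_per_second * 3600 * utilization
    return gpu_hourly_usd / tokens_per_hour * 1_000_000


def cost_per_inference(cost_per_m_tokens: float, tokens_per_request: int) -> float:
    """USD per request, given the average number of tokens generated per request."""
    return cost_per_m_tokens * tokens_per_request / 1_000_000


if __name__ == "__main__":
    HOURLY_PRICE = 4.0  # hypothetical $/GPU-hour
    for util in (1.0, 0.5):
        per_m = cost_per_million_tokens(HOURLY_PRICE, 195.0, util)
        print(f"utilization {util:.0%}: ${per_m:.2f} per 1M tokens, "
              f"${cost_per_inference(per_m, 500):.4f} per 500-token reply")
```

Halving utilization doubles the cost of every token. That is why idle capacity belongs in the same dashboard as throughput.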

How to Reduce AI Inference Latency: Optimizing Speed for Real-World AI Applications

Introduction

AI inference latency—the delay between input submission and model response—can make or break real-world AI applications. Whether deploying chatbots, recommendation engines, or computer vision systems, slow inference speeds lead to poor user experiences, higher costs, and scalability bottlenecks.

This guide explores actionable techniques to reduce AI inference latency, from model optimization to infrastructure tuning. We’ll also highlight how WhaleFlux, an end-to-end AI deployment platform, automates latency optimization with features like smart resource matching and 60% faster inference.

1. Model Optimization: Lighten the Load

Adopt Efficient Architectures

Replace bulky models with distilled versions (e.g., DistilBERT in place of BERT) or mobile-friendly designs (e.g., MobileNetV3).

Use quantization (e.g., FP32 → INT8) to shrink model size, often without significant accuracy loss.
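To make "FP32 → INT8" concrete, here is a minimal sketch of symmetric, per-tensor post-training quantization in plain Python. Production toolchains such as TensorRT, PyTorch, and ONNX Runtime use calibration data and often per-channel scales. Treat this as an illustration of the arithmetic, not any framework's implementation.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization: w ~ q * scale with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # round() maps each weight to the nearest integer step; the clamp guards the edges.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from INT8 values and the shared scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.52, -1.27, 0.013, 0.98, -0.333]
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    # Storage drops from 4 bytes per FP32 weight to 1 byte per INT8 weight (plus one scale).
    print(f"int8 values: {q}, scale={scale:.5f}, max error={max_err:.5f}")
```

Each weight's rounding error is at most half a quantization step (`scale / 2`). Outliers that inflate `max_abs` make every step coarser, which is one reason real toolchains calibrate scales.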

Prune Redundant Weights

Tools like the TensorFlow Model Optimization Toolkit remove low-magnitude weights, which can reduce compute overhead by 20–30%.
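Magnitude pruning, the idea behind the toolkit's `prune_low_magnitude` API, zeroes the weights closest to zero. The plain-Python sketch below shows only that core selection step. The real toolkit prunes gradually during fine-tuning on a schedule. Unstructured zeros save compute only on runtimes with sparse-kernel support.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to zero
    if k == 0:
        return list(weights)
    # Indices sorted by |w|, smallest first; the first k get pruned.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:k])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    layer = [0.9, -0.05, 0.3, 0.01, -0.7, 0.2]
    pruned = magnitude_prune(layer, sparsity=0.5)
    print(pruned, f"-> {pruned.count(0.0)} of {len(pruned)} weights zeroed")
```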

2. Hardware Acceleration: Maximize GPU/TPU Efficiency

Choose the Right Hardware

- NVIDIA A100/H100 GPUs: Optimized for parallel processing.

- Google TPUs: Ideal for matrix-heavy tasks (e.g., LLM inference).

- Edge Devices (Jetson, Coral AI): Cut cloud dependency for real-time apps.

Leverage Optimization Libraries

CUDA (NVIDIA), OpenVINO (Intel CPUs), and Core ML (Apple) can accelerate inference by 2–5×.

3. Deployment Pipeline: Streamline Serving

Use High-Performance Frameworks

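- FastAPI (Python) keeps request-handling overhead low; gRPC cuts serialization and connection overhead with HTTP/2 and Protocol Buffers.

- NVIDIA Triton Inference Server provides dynamic batching and concurrent model execution, and scales dynamically when paired with an orchestrator like Kubernetes.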
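Dynamic batching trades a few milliseconds of queueing for much better GPU utilization: requests that arrive close together run as one batch. Triton configures this declaratively, through the `dynamic_batching` section of a model's config with settings such as `max_queue_delay_microseconds`. The Python sketch below only illustrates the collection logic. Its defaults of 8 requests and a 5 ms window are hypothetical.

```python
import queue
import time
from typing import Any, List


def collect_batch(requests: "queue.Queue", max_batch_size: int = 8,
                  max_wait_s: float = 0.005) -> List[Any]:
    """Block for one request, then gather more until the batch is full or the window closes."""
    batch = [requests.get()]  # wait for at least one request
    deadline = time.monotonic() + max_wait_s
    while len(batch) < max_batch_size:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            break
        try:
            batch.append(requests.get(timeout=remaining))
        except queue.Empty:
            break  # window closed with a partial batch
    return batch


if __name__ == "__main__":
    incoming: "queue.Queue" = queue.Queue()
    for request_id in range(10):
        incoming.put(request_id)
    # A model server would pass each batch to one forward pass instead of ten.
    print(collect_batch(incoming))  # first 8 requests
    print(collect_batch(incoming))  # remaining 2 after the wait window expires
```

A longer wait window yields fuller batches and higher throughput but adds latency to every request. Tune it against your latency budget.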

Containerize with Docker/Kubernetes

WhaleFlux’s preset Docker templates automate GPU-accelerated deployment, reducing setup time by 90%.

4. Autoscaling & Caching: Handle Traffic Spikes

Dynamic Resource Allocation

WhaleFlux’s 0.001s autoscaling response adjusts GPU/CPU resources in real time.
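This article does not publish WhaleFlux's scaling logic. For reference, Kubernetes' Horizontal Pod Autoscaler documents a simple proportional rule: desired replicas = ceil(current replicas × current metric / target metric). It skips scaling when the ratio is within a tolerance, which defaults to 0.1. Below is a minimal Python sketch of that rule with hypothetical min/max bounds.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """Proportional autoscaling rule in the style of the Kubernetes HPA."""
    ratio = current_metric / target_metric
    # Ignore small deviations to avoid flapping between replica counts.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Hypothetical: 4 replicas averaging 90% GPU utilization against a 60% target.
    print(desired_replicas(4, 90.0, 60.0))  # -> 6
    print(desired_replicas(4, 62.0, 60.0))  # -> 4 (within tolerance)
```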

Output Caching

Store frequent predictions (e.g., chatbot responses) to skip redundant computations.
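A minimal caching sketch using Python's built-in `functools.lru_cache`. `run_model` is a hypothetical stand-in for an expensive model call. Normalizing the prompt lets trivially different inputs share one cache entry. This is safe only when outputs are deterministic (e.g., greedy decoding). Sampled outputs would be frozen to whatever the first call returned.

```python
from functools import lru_cache

CALLS = {"model": 0}  # counts how often the (expensive) model actually runs


def run_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    CALLS["model"] += 1
    return f"answer to: {prompt}"


@lru_cache(maxsize=10_000)
def cached_predict(normalized_prompt: str) -> str:
    return run_model(normalized_prompt)


def predict(prompt: str) -> str:
    # Lowercase and collapse whitespace so near-duplicate prompts hit the same entry.
    return cached_predict(" ".join(prompt.lower().split()))


if __name__ == "__main__":
    predict("What are your opening hours?")
    predict("what are  your opening HOURS?")  # served from cache
    print(CALLS["model"], cached_predict.cache_info())
```

In a multi-replica deployment, a shared cache such as Redis plays the same role as the in-process LRU here.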

5. Monitoring & Continuous Optimization

Track Key Metrics

Latency (ms), GPU utilization, and error rates (use Prometheus + Grafana).
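Averages hide the slow requests users actually notice, so latency is usually tracked as percentiles. In Prometheus you would typically record a histogram and query it with `histogram_quantile()`. The sketch below computes p50/p95/p99 from raw samples with the nearest-rank method, which is handy for offline A/B comparisons. The helper names are hypothetical.

```python
import math
from typing import Dict, List


def percentile(samples: List[float], p: float) -> float:
    """Nearest-rank percentile, p in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def latency_summary(samples_ms: List[float]) -> Dict[str, float]:
    """p50/p95/p99 latency for one variant (e.g., quantized vs. full model)."""
    return {f"p{p}": percentile(samples_ms, p) for p in (50, 95, 99)}


if __name__ == "__main__":
    # Hypothetical latencies (ms): mostly fast, with a slow tail.
    full_model = [42, 45, 44, 47, 43, 46, 120, 44, 45, 48]
    quantized = [25, 27, 26, 28, 26, 27, 60, 26, 27, 29]
    print("full:", latency_summary(full_model))
    print("int8:", latency_summary(quantized))
```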

A/B Test Optimizations

- Compare quantized vs. full models to balance speed/accuracy.

- WhaleFlux’s full-stack observability pinpoints bottlenecks from GPU to application layer.

Conclusion

Reducing AI inference latency requires a holistic approach—model pruning, hardware tuning, and intelligent deployment. For teams prioritizing speed and cost-efficiency, platforms like WhaleFlux automate optimization with:

- 60% lower latency via smart resource allocation.

- 99.9% GPU uptime and self-healing infrastructure.

- Seamless scaling for high-traffic workloads.

Ready to optimize your AI models? Explore WhaleFlux’s solutions for frictionless low-latency inference.