Imagine standing at a technology crossroads. One path is paved with freely available, modifiable tools backed by a global community of innovators. The other offers polished, powerful, and ready-to-use solutions from industry giants, accessible for a fee. This is the fundamental choice businesses face today between open-source and proprietary (or closed-source) AI models. It’s a decision that goes beyond mere technical preference, shaping your cost structure, control over technology, speed of innovation, and long-term strategic autonomy.

This guide will demystify both paths, providing a clear framework to help you make an informed strategic choice based on your company’s unique needs, resources and goals.

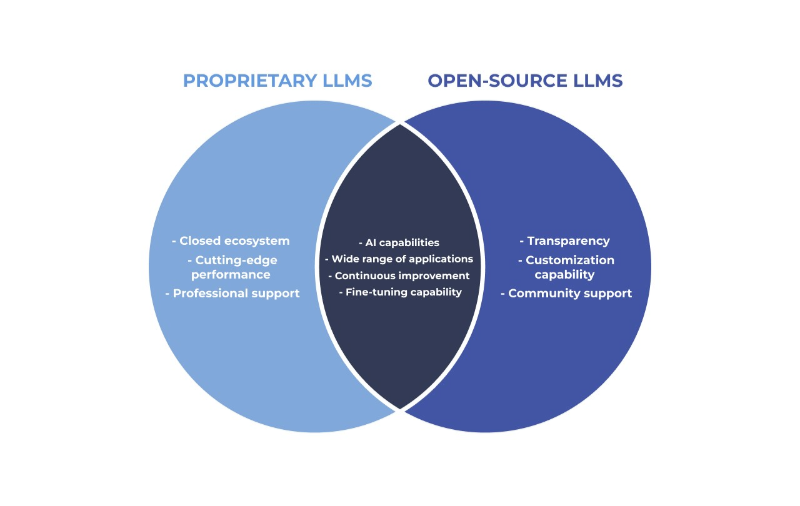

Defining the Contenders

Open-Source Models (like Llama 2/3, Mistral, BERT):

These are publicly released by their creators (often research institutions or companies like Meta) under permissive licenses. You can download, use, modify, and even deploy them commercially without paying licensing fees to the model’s originator. The “source code” of the model—its architecture and, critically, its weights—is open for inspection and alteration. Think of it as buying a fully transparent car where you’re given the blueprints and the keys to the factory.

Proprietary/Closed-Source Models (like GPT-4, Claude, Gemini):

These are developed and owned by companies (OpenAI, Anthropic, Google). You access them exclusively through APIs or managed interfaces. You pay for usage (per token or per call) but cannot see the model’s inner workings, modify its architecture, or host it yourself. It’s like hiring a premium chauffeur service: you get a fantastic ride but don’t own the car, can’t see the engine, and must follow the service’s routes and rules.

The Strategic Breakdown: A Multi-Dimensional Comparison

Let’s break down the comparison across the dimensions that matter most for a business.

1. Cost & Economics

Open-Source: Variable Capex, Predictable Opex.

- Upfront: No licensing fees. The primary costs are infrastructure and expertise (developers, MLOps engineers).

- Ongoing: Costs are tied to your own compute (cloud or on-premise). This can be highly predictable and often lower at scale, but you bear all optimization burdens. As Hugging Face’s 2024 report notes, the community-driven innovation around efficient fine-tuning (like LoRA) and inference optimization continues to push the cost-performance frontier.

Proprietary: Low Capex, Variable Opex.

- Upfront: Typically low to zero. You just sign up for an API key.

- Ongoing: Pay-as-you-go based on usage. This is excellent for prototyping and low-volume applications but can become prohibitively expensive at high scale. Costs are opaque and controlled by the vendor, subject to change.

Verdict: Open-source favors long-term, high-scale control over expenses. Proprietary favors short-term, low-volume predictability and low initial investment.

2. Control, Customization & Privacy

Open-Source: Maximum Control.

- You can fine-tune the model on your sensitive data without sending it to a third party—a critical advantage for finance, healthcare, or legal sectors.

- You can customize every layer for extreme performance on your specific task.

- You control the deployment environment, ensuring data never leaves your perimeter. This is a complete data privacy solution.

Proprietary: Minimal Control.

- Customization is limited to prompt engineering, retrieval-augmented generation (RAG), and sometimes light fine-tuning offered by the vendor (often at a premium and still on their cloud).

- Your prompts and data are often sent to the vendor’s servers, posing potential privacy and compliance risks (though vendors offer increasing enterprise data policies).

Verdict: Open-source is the clear winner for applications requiring deep customization, full data sovereignty, and strict compliance.

3. Performance & Capabilities

Proprietary: The High-Water Mark (for now).

- Models like GPT-4 have set benchmarks in general reasoning, creativity, and task versatility. They benefit from massive, proprietary training datasets and vast computational resources.

- They offer simplicity: you’re accessing the best possible version of that model.

Open-Source: Rapidly Catching Up & Specializing.

- While the largest open models may still trail in some broad benchmarks, they excel in specific domains when fine-tuned (e.g., CodeLlama for coding, medical models for healthcare).

- The “best” model is context-dependent. A fine-tuned 7B-parameter open model can drastically outperform a giant generalist proprietary model on its specific task.

Verdict: Proprietary leads in general-purpose intelligence. Open-source wins in cost-effective, task-specific superiority and offers more performance transparency.

4. Reliability, Support & Vendor Lock-in

Proprietary: Managed Service.

- The vendor handles uptime, scaling, and hardware updates. You get SLAs and dedicated enterprise support.

- The major risk is profound vendor lock-in. Your application logic, data workflows, and costs are tied to one provider’s ecosystem and pricing power. API changes or outages directly halt your business.

Open-Source: Self-Supported Freedom.

- You are responsible for your own infrastructure, monitoring, and performance.

- However, you gain portability and freedom from lock-in. You can run models on any cloud or your own servers. Support comes from the community and commercial vendors (like consulting firms or the platforms you use to manage them).

Verdict: Proprietary reduces operational burden but creates strategic dependency. Open-source increases operational responsibility but ensures long-term independence.

The Strategic Decision Framework: How to Choose

Your choice shouldn’t be ideological. It should be strategic, based on answering these key questions:

1.What is our Core Application?

Choose Proprietary if:

You need a general-purpose chatbot, a creative content brainstorming tool, or a rapid prototype where development speed and versatility are paramount, and volume is low.

Choose Open-Source if:

You are building a product feature that requires specific tone/style, operates on sensitive data, needs deterministic output, or will be used at very high scale. Fine-tuning is your best path.

2.What are our Data Privacy and Compliance Requirements?

Healthcare, Legal, Government, Finance:

The compliance scale almost always tips towards open-source or locally hosted proprietary solutions where you maintain full data custody.

3.What is our In-House Expertise?

Do you have strong ML engineering and MLOps teams? If yes, open-source unlocks its full value. If no, proprietary APIs lower the skill barrier to entry, though you may eventually need engineers to build robust applications around them anyway.

4.What is our Long-Term Vision?

Is AI a supporting feature or the core intellectual property of your product? If it’s core, relying on a closed external API can be an existential risk. Building expertise around open-source models creates a defensible moat.

The Hybrid Path and the Platform Enabler

The most sophisticated enterprises are not choosing one over the other. They are adopting a hybrid, pragmatic strategy.

- Use proprietary models for rapid prototyping, exploring new ideas, and handling non-sensitive, variable tasks.

- Use open-source models for core, scaled, sensitive, and customized production workloads.

Managing this hybrid landscape—with different models, deployment environments, and cost centers—is complex. This is where an integrated AI platform like WhaleFlux becomes a strategic asset.

WhaleFlux provides the control plane for a hybrid model strategy:

Unified Gateway:

It can act as a single endpoint that routes requests intelligently—sending appropriate tasks to cost-effective open-source models and others to powerful proprietary APIs, all while managing API keys and costs.

Simplified Open-Source Ops:

It abstracts away the infrastructure complexity of hosting and fine-tuning open models. WhaleFlux’s integrated compute, model registry, and observability tools turn open-source from an engineering challenge into a manageable resource.

Cost & Performance Observability:

It gives you a single pane of glass to compare the cost and performance of different models (open and closed) for the same task, enabling data-driven decisions on where to allocate resources.

With a platform like WhaleFlux, the question shifts from “open or closed?” to “which tool is best for this specific job, and how do we manage our toolbox efficiently?“

Conclusion

The open-source vs. proprietary model debate is not a war with one winner. It’s a spectrum of trade-offs between convenience and control, between short-term speed and long-term sovereignty.

For businesses, the winning strategy is one of informed pragmatism. Start by ruthlessly assessing your application needs, compliance landscape, and team capabilities. Use proprietary models to experiment and accelerate, but invest in open-source capabilities for your mission-critical, differentiated, and scaled applications.

By leveraging platforms that simplify the management of both worlds, you can build a resilient, cost-effective, and future-proof AI strategy that keeps you in the driver’s seat, no matter which road you choose to travel.

FAQs: Open-Source vs. Proprietary AI Models

Q1: Is open-source always cheaper than proprietary in the long run?

Not automatically. While open-source avoids per-token API fees, its total cost includes development, fine-tuning, deployment, and maintenance. For low or variable usage, proprietary APIs can be cheaper. For high, predictable scale and with good MLOps, open-source typically becomes more cost-effective. The key is to model your total cost of ownership (TCO) based on projected usage.

Q2: Are proprietary models more secure and aligned than open-source ones?

They are often more filtered against harmful outputs due to intensive post-training (RLHF). However, “security” also means data privacy. Sending data to a vendor’s API can be a risk. Open-source models run in your environment offer superior data security. Alignment is a mixed bag; open models allow you to perform your own alignment fine-tuning to match your specific ethical guidelines.

Q3: Can we switch from a proprietary API to an open-source model later?

Yes, but it requires work. Applications built tightly around a specific API’s quirks (like OpenAI’s function calling) will need refactoring. A best practice is to abstract the model calls in your code from the start, making it easier to switch the backend model—a pattern that platforms like WhaleFlux inherently support.

Q4: How do we evaluate the quality of an open-source model vs. a closed one?

- Create a representative evaluation dataset of prompts and expected outputs.

- Test both types of models on this set, using both automated metrics (like accuracy) and human evaluation for quality, tone, and safety.

- For open-source models, test them after fine-tuning on your data, as their out-of-the-box performance may be misleading.

Q5: What is a “hybrid” strategy in practice?

A hybrid strategy means using multiple models. For example:

- Use GPT-4 for initial draft generation of creative marketing copy.

- Use a fine-tuned, open-source Llama model to rewrite all internal documents into your brand voice.

- Use a small, distilled open model running on-edge for real-time, low-latency classification in your mobile app.

The goal is to match the right tool to each task based on cost, performance, and data requirements.