The Role of Data Centers in Powering AI’s Future

Introduction

Data centers, the beating heart of the digital world, now supply the infrastructure that AI’s voracious appetite for data and computational power demands. They have moved well beyond traditional setups to become the silent engines powering the AI revolution.

Data centers have transcended their initial role as mere repositories of information. They have evolved into dynamic and highly sophisticated centers of computation, powering not just enterprise-level IT operations but also the complex algorithms that AI systems demand. These facilities, with their racks of humming servers and sprawling webs of networking cables, are critical in teaching machines how to learn, analyze, and act.

This blog casts a spotlight on the symbiotic relationship between AI and data centers, exploring how this partnership is critical not only to the present state of AI but also to its future horizons. The journey will walk through history, the demanding infrastructure requirements, the operational efficiencies, the pressing energy concerns, and real-world case studies that underline the synergy of data centers and AI.

Historical Context of Data Centers and Computing

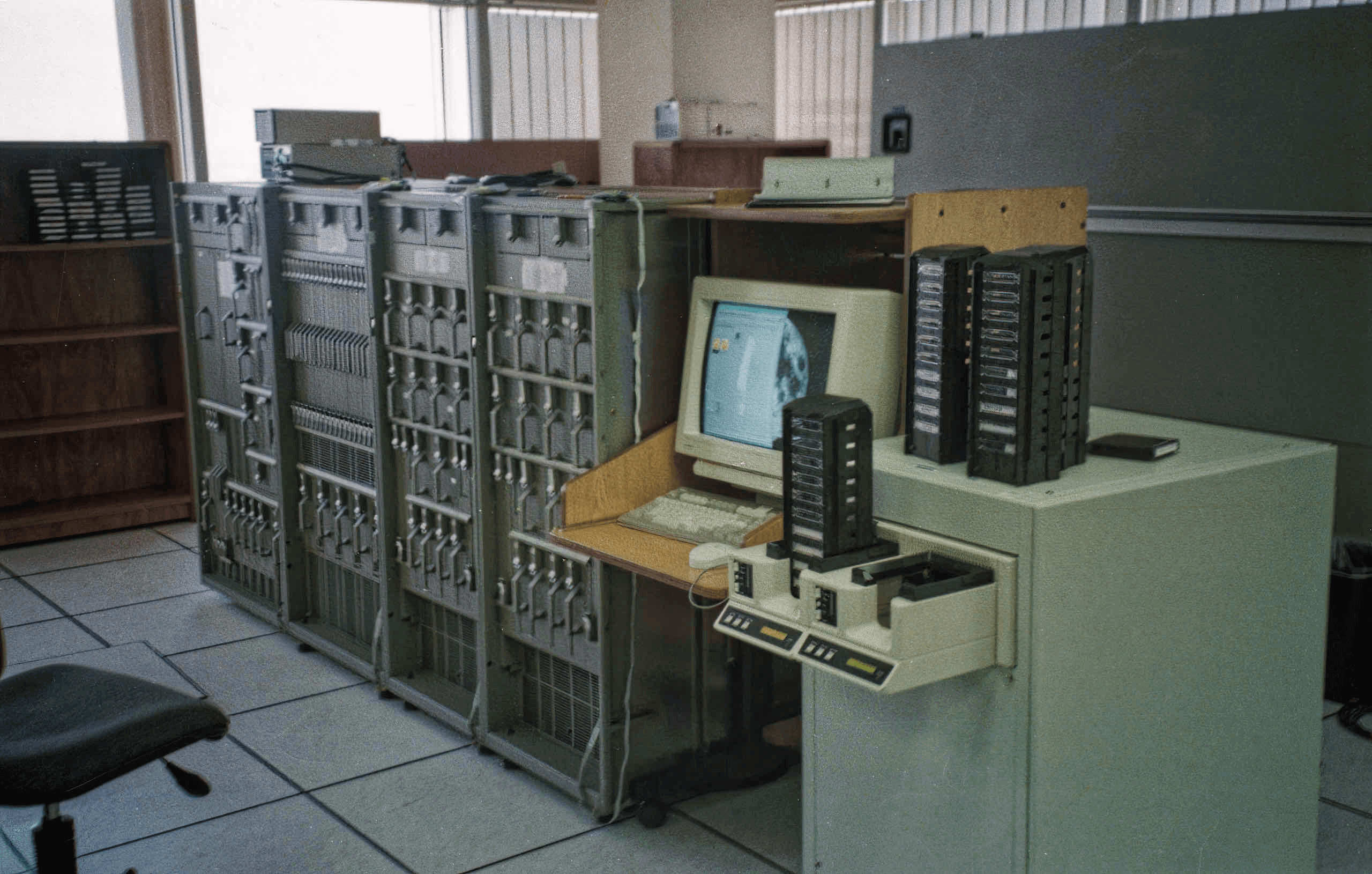

Before we can fully appreciate the role of data centers in the AI era, we must look back at their genesis and evolution. Initially, they were conceived as large rooms dedicated to housing the mainframe computers of the 20th century. These were substantial machines that required significant space and a controlled environment. Over time, as technology advanced, so too did their design and operation. They became repositories for servers that stored and managed the burgeoning volumes of data the internet age brought forth.

The advent of cloud computing further redefined data centers, transforming them from static storage facilities to dynamic, networked systems essential for delivering a range of services over the internet. They adapted to support a multitude of applications, web hosting, and data analytics, enabling businesses and consumers to leverage powerful computing resources remotely.

The progression from mere data storage to complex computing hubs is inextricably linked with advances in computer technology. As processors shrank in size but expanded in capability, the concentration of computing power within data centers increased. The efficiencies gained in processing, storage, and networking paved the way for today’s highly interconnected and cloud-dependent world. With these advances, they have become agile support systems for a variety of computing needs, including the computationally intensive tasks of AI.

As the digital revolution unfolded, data centers evolved to become not just keepers of information but also sophisticated nerve centers of computational activity. They are now poised at the frontier of the AI revolution, offering the infrastructure that is indispensable to machine learning’s progression.

This look back lays a foundation for understanding how data centers have transformed from their origins to the present day, a transformation that has mirrored the trajectory of computing itself. The next sections delve into the synergistic relationship between AI and these evolved data centers, one that is vital for powering AI’s future.

The Symbiosis between AI and Data Centers

Catalyzing AI’s Operating Power

The growth of artificial intelligence has been inextricably linked to the evolution of data center capabilities. Powering AI requires more than just strong algorithms; it necessitates an infrastructure capable of handling vast amounts of data and lightning-fast computations. Within the controlled environments of modern data centers, AI has found the fertile ground needed for its complex workloads, all thanks to high-performance computing systems that are the linchpin of AI operations.

AI’s Brain and Brawn

In turn, the advancement and widespread implementation of artificial intelligence have profoundly influenced the architectural design and operational procedures of data centers. Through robust analytics and pattern recognition, AI bolsters the efficiency and reliability of data center operations, enhancing everything from workload distribution to energy use. The alignment of AI’s growth with these technological meccas ensures that as AI models become more sophisticated, data center designs continue to adapt and advance in response.

The Reciprocal Evolution

The data center and AI advancement cycle is reciprocal—data centers provide AI the environment it needs to flourish, while AI continually redefines what is required of that environment. As we witness enterprises like Google apply AI to optimize data center energy efficiency or Amazon Web Services (AWS) offer accessible machine learning services through its vast cloud infrastructure, this intertwined progression becomes increasingly evident. AI and data centers are not just growing side by side; they are co-evolving, united by a vision of a smarter future.

Refining with AI’s Own Tools

Perhaps one of the most intriguing aspects of this symbiosis is that AI has begun to refine the very data centers it relies on. Machine learning algorithms now predictively manage infrastructure load and maintenance, turning data centers into self-optimizing entities. Such applications exemplify the dynamic potential of AI to revitalize the operations within data centers, ensuring they remain at the forefront of technological innovation, ready to power AI’s relentless advancement.

There are several AI tools and technologies that have been instrumental in refining data center operations. Here are some examples:

Google’s DeepMind AI for Data Center Cooling

Google has applied its DeepMind machine learning algorithms to the problem of energy consumption in data centers. Their system uses historical data to predict future cooling requirements and dynamically adjusts cooling systems to improve energy efficiency. In practice, this AI-powered system has achieved a reduction in the amount of energy used for cooling by up to 40 percent, according to Google.

Emerging AI for Computing Power Management

The Emerging AI computing power management platform is a multi-tenant platform solution for computing power cluster management, designed to optimize and automate the allocation, scheduling, and management of computing resources.

The platform enables private cloud management, multi-GPU cloud management and GPU cluster deep observation.

NVIDIA’s AI Platform for Predictive Maintenance

NVIDIA has developed AI platforms that integrate deep learning and predictive analytics to perform predictive maintenance within data centers. These tools analyze operational data in real-time to predict hardware failures before they happen, significantly reducing downtime and maintenance costs.
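
To make the idea of spotting trouble in operational data concrete, here is a minimal sketch of a rolling z-score check in Python. It is not NVIDIA’s method or any vendor’s API; the function name `flag_anomalies`, the window size, the threshold, and the temperature readings are all assumptions made up for illustration.

```python
from statistics import mean, stdev

def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices whose value deviates from the trailing-window mean
    by more than `threshold` standard deviations."""
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: any change at all counts as a deviation.
            if readings[i] != mu:
                flagged.append(i)
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged

# Hypothetical fan-temperature trace in degrees Celsius (made-up values).
temps = [41.0, 41.5, 40.8, 41.2, 41.1, 41.3, 40.9, 55.0, 41.2, 41.0]
print(flag_anomalies(temps))
```

A production system would typically learn from many signals at once and predict failures ahead of time; this sketch only shows the simplest version of the underlying idea, comparing each new reading with recent history.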

IBM Watson for Data Center Management

IBM’s Watson uses AI to proactively manage IT infrastructure. By analyzing data from various sources within the data center, Watson can identify trends, anticipate outages, and optimize workloads across the data center environment, thereby enhancing operational efficiency and resilience.

Infrastructure: The Backbone of AI Operations

A New Class of Hardware

Data centers have become the proving grounds for AI’s most demanding workloads, reshaping the landscape of computational hardware. Cutting-edge GPUs are now the mainstay in these environments, accelerating complex mathematical computations at the core of machine learning tasks. With dedicated AI processors, such as Google’s TPUs, data centers are pushing beyond traditional computation limits, vastly improving the efficiency and speed of AI training and inferencing phases.

High-Speed Networking: The Connective Tissue

None of this computational power could be fully harnessed without advancements in networking technology. High-speed networks within data centers facilitate the rapid transmission of data, crucial for collaborative AI processing. Technological marvels like NVIDIA’s Mellanox networking solutions exemplify the significant leaps made in ensuring data centers can operate at the speed AI demands.

Advances in Storage: The Data Storehouses

AI’s insatiable demand for data necessitates not merely large storage capacities but also swift data retrieval systems. Innovations in solid-state drive technology and software-defined storage have transformed data centers into highly efficient data storehouses, capable of feeding AI models with the necessary data volumes at unprecedented speeds.

Conclusion: Data Centers and AI

The intertwining of AI and data centers marks a pivotal shift in our digital epoch. Data centers, once the silent sentinels of data and servers, have evolved into the lifeblood of AI’s advancement, offering the computational might and data processing prowess necessary for AI to thrive. The transformative influence of AI, in turn, is streamlining these hubs of technology into smarter, more efficient operations.

Crafting Intelligence: A Step-by-Step Guide to Building Your AI Application

Introduction

Welcome to the future, where artificial intelligence (AI) is not just a buzzword but an accessible reality for innovators and entrepreneurs across the globe. The realm of AI applications is vast, ranging from simple chatbots to complex predictive analytics systems. But how does one take the first steps towards building an AI application?

In this guide, we’ll walk through the pivotal phases of crafting your AI application, highlight the tools you’ll need, and offer insights to set you on the path to AI success. Whether you’re a seasoned developer or a curious newcomer, this guide promises to unravel the mysteries of AI development and put the power of intelligent technology in your hands.

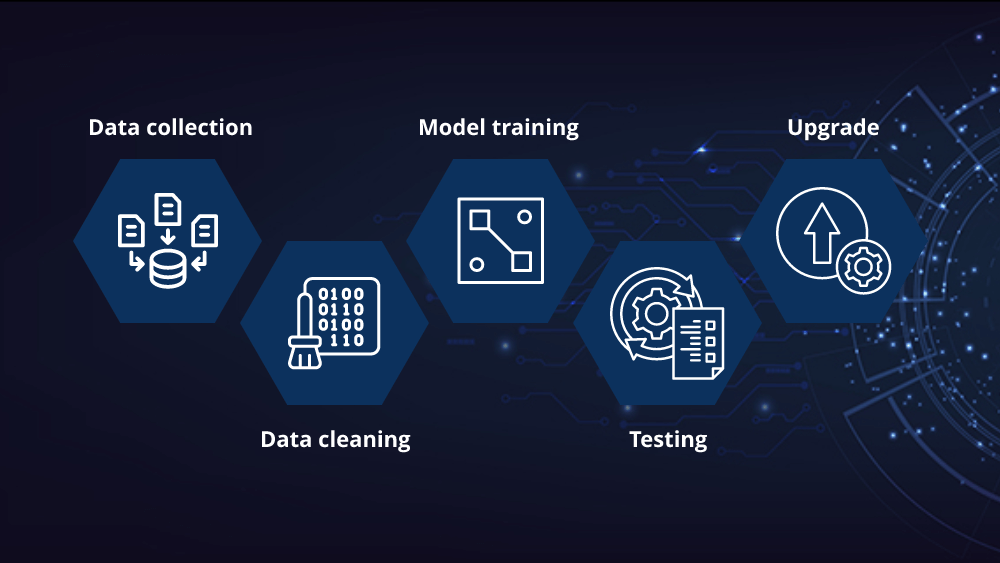

Creating an AI Application

Creating an AI application is an exciting venture that requires careful planning, a clear understanding of objectives, and the right technical skills and resources. Here’s a step-by-step guide to help you get started:

- Define Your Objective: Determine what problem you want to solve with your AI application. Identify the needs of your target users and how your AI solution will address those needs.

- Conceptualize the AI Model: Decide on the type of AI you want to create. Will it involve natural language processing, computer vision, machine learning, or another AI discipline? Sketch out a high-level design of how the model will work, its inputs and outputs, and the user interactions.

- Gather Your Dataset: Collect relevant data that your AI model will learn from. The quality and quantity of data can significantly impact the accuracy and performance of your AI application. Ensure that you have the right to use the data and that it’s free of biases to the best of your ability.

- Choose Your Tools and Technologies: Select programming languages that are commonly used for AI, such as Python or R. Choose appropriate AI frameworks and libraries, like TensorFlow, PyTorch, Keras, or scikit-learn.

- Develop a Prototype: Start coding your AI model based on the frameworks and datasets you’ve selected. Develop a minimum viable product (MVP) or prototype to test the feasibility of your concept.

- Train and Test Your Model: Use machine learning techniques to train your AI model with your dataset. Test your AI model rigorously to evaluate its performance, accuracy, and reliability.

- Incorporate Feedback: Gather feedback by testing your prototype with potential end-users. Make iterative improvements based on the feedback and continue refining your AI model.

- Ensure Ethical Considerations and Compliance: Consider the ethical implications of your AI application and ensure that it complies with relevant AI ethics guidelines and regulations. Include privacy measures and data security to protect user information.

- Deploy the Application: Choose a cloud platform or in-house servers to deploy your AI application. Ensure that you have the appropriate infrastructure to support the AI application’s computational and storage needs.

- Monitor and Maintain: After the deployment, monitor how the application performs in the real world. Set up processes for ongoing maintenance, updates, and performance tuning.

- Scale Your AI Application: As your user base grows and your application proves successful, consider scaling your infrastructure. Explore possibilities for expanding your AI application’s features and reach.
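
The dataset, prototype, and train-and-test steps above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not a recommended architecture: the data is synthetic, the "model" is a simple nearest-centroid classifier, and every function name here is made up for the example.

```python
import random

def make_dataset(n_per_class=100, seed=0):
    """Synthetic 2-D points: class 0 near (0, 0), class 1 near (3, 3)."""
    rng = random.Random(seed)
    data = []
    for label, center in enumerate([(0.0, 0.0), (3.0, 3.0)]):
        for _ in range(n_per_class):
            point = (rng.gauss(center[0], 1.0), rng.gauss(center[1], 1.0))
            data.append((point, label))
    rng.shuffle(data)
    return data

def train(samples):
    """'Training' here just computes the mean point (centroid) per class."""
    sums = {}
    for (x, y), label in samples:
        sx, sy, n = sums.get(label, (0.0, 0.0, 0))
        sums[label] = (sx + x, sy + y, n + 1)
    return {label: (sx / n, sy / n) for label, (sx, sy, n) in sums.items()}

def predict(model, point):
    """Assign the class whose centroid is closest to the point."""
    def squared_distance(label):
        cx, cy = model[label]
        return (point[0] - cx) ** 2 + (point[1] - cy) ** 2
    return min(model, key=squared_distance)

def accuracy(model, samples):
    hits = sum(predict(model, p) == label for p, label in samples)
    return hits / len(samples)

data = make_dataset()
split = int(0.8 * len(data))          # 80% for training, 20% held out
train_set, test_set = data[:split], data[split:]
model = train(train_set)
print(f"held-out accuracy: {accuracy(model, test_set):.2f}")
```

The point to take away is the shape of the workflow: keep a held-out test set that the model never sees during training, and judge the prototype on that set before gathering user feedback.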

Throughout this process, it may be beneficial to collaborate with AI experts, data scientists, and developers, especially if you’re new to the field of artificial intelligence. Remember that creating a successful AI application is not just about technical excellence; it’s also about understanding and delivering value to users in a responsible and ethical way.

Tools and Software Options That Can Assist You

Data Collection and Processing:

Web Scraping Tools: Octoparse, Import.io

Data Cleaning Tools: OpenRefine, Trifacta Wrangler

Programming Languages:

Python: Widely used for AI due to libraries like NumPy, Pandas, and a supportive community.

R: Great for statistical analysis and data visualization.

AI Frameworks and Libraries:

TensorFlow: An end-to-end open-source platform for machine learning.

PyTorch: An open-source machine learning library based on the Torch library.

Keras: A high-level neural networks API written in Python. Keras 3 runs on top of TensorFlow, JAX, or PyTorch; earlier versions supported TensorFlow, CNTK, and Theano.

scikit-learn: A Python library for machine learning and data mining.

AI Development Platforms:

Google AI Platform: Offers a managed service for deploying ML models.

IBM Watson: Hosts a suite of AI tools for building applications.

Microsoft Azure AI: A collection of services and infrastructure for building AI applications.

Data Storage and Computation:

Cloud Services: AWS, Google Cloud Platform, Microsoft Azure

Big Data Platforms: Apache Hadoop, Apache Spark

Data Visualization:

Tableau: A powerful business intelligence and data visualization tool.

Power BI: A business analytics service by Microsoft.

Version Control:

Git: Widely used for code version control.

GitHub/GitLab: Online platforms that provide hosting for software development and version control using Git.

Machine Learning Model Training and Evaluation:

MLflow: An open-source platform for the machine learning lifecycle.

Weights & Biases: Tools for tracking experiments in machine learning.

Deployment and Monitoring:

Emerging AI WhaleFlux: An autoscaling scheduling service for stable and economical serverless LLM serving. It automates deployment for efficient LLM service management without manual intervention.

Docker: A tool designed to make it easier to create, deploy, and run applications by using containers.

Kubernetes: An open-source system for automating deployment, scaling, and management of containerized applications.

Prometheus & Grafana: For monitoring deployed applications and visualizing metrics.

Ethics and Compliance:

AI Fairness 360: An extensible open-source toolkit for detecting and mitigating algorithmic bias.

Collaboration and Project Management:

JIRA: An agile project management tool.

Slack: For team communications and collaboration.

These tools serve different aspects of the AI development lifecycle, from planning and building models to deploying and monitoring your application. It’s important to choose the right set of tools to match the specific requirements of your AI project and your team’s skills.

Conclusion

Congratulations on completing your explorative expedition into the world of AI application development. By now, you should have a road map etched in your mind, punctuated by the landmarks of defining your project’s goals, selecting the appropriate tools, training your model, and ultimately, watching your AI solution come to life. The journey might seem arduous, marked with challenges and the need for continual learning, but the rewards are equally great—bringing forth an application that harnesses the power of AI to solve real-world problems.

Remember, your journey doesn’t end with deployment; the iterative process of refining your application based on user feedback and advancing technology is what will keep your AI application not just functional but formidable. So venture forth with confidence, knowing you are now armed with the knowledge to transform the seeds of your AI aspirations into the fruits of innovation. Keep innovating, keep iterating, and let your AI application be a testament to the intelligence and ingenuity you possess.

Enhancing LLM Inference with GPUs: Strategies for Performance and Cost Efficiency

How to Run Large Language Models (LLMs) on GPUs

LLMs (Large Language Models) have caused revolutionary changes in the field of deep learning, especially showing great potential in NLP (Natural Language Processing) and code-based tasks. At the same time, HPC (High Performance Computing), as a key technology for solving large-scale complex computational problems, also plays an important role in many fields such as climate simulation, computational chemistry, biomedical research, and astrophysical simulation. The application of LLMs to HPC tasks such as parallel code generation has shown a promising synergistic effect between the two.

Why Use GPUs for Large Language Models?

GPUs (Graphics Processing Units) are crucial for accelerating LLMs due to their massive parallel processing capabilities. They can handle the extensive matrix operations and data flows inherent in LLM training and inference, significantly reducing computation time compared to CPUs. GPUs are designed with thousands of cores that enable them to perform numerous calculations simultaneously, which is ideal for the complex mathematical computations required by deep learning algorithms. This parallelism allows LLMs to process large volumes of data efficiently, leading to faster training and more effective performance in various NLP tasks and applications.

Key Differences Between GPUs and CPUs

GPUs and CPUs (Central Processing Units) differ primarily in their design and processing capabilities. CPUs have fewer but more powerful cores (typically two to eight in consumer devices), optimized for sequential tasks and complex instructions. They are suited to jobs that require strong single-threaded performance and can execute a wide variety of operations with fine-grained control.

In contrast, GPUs are designed with thousands of smaller cores, making them excellent for parallel processing. They can perform the same operation on multiple data points at once, which is ideal for tasks like rendering images, simulating environments, and training deep learning models including LLMs. This architecture allows GPUs to process large volumes of data much faster than CPUs, giving them a significant advantage in handling parallelizable workloads.
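
Why matrix work parallelizes so well can be seen in a small Python sketch: each row of a matrix product depends only on its own inputs, so rows can be handed to separate workers in any order. The function names are made up for this example, and Python threads will not deliver a real speedup here; the sketch only demonstrates the independence that GPU cores exploit at far larger scale.

```python
from concurrent.futures import ThreadPoolExecutor

def row_times_matrix(row, matrix):
    """Compute one output row; it depends only on `row` and `matrix`."""
    cols = len(matrix[0])
    return [sum(row[k] * matrix[k][j] for k in range(len(row))) for j in range(cols)]

def matmul_sequential(a, b):
    """One row after another, as a single core would do it."""
    return [row_times_matrix(row, b) for row in a]

def matmul_parallel(a, b, workers=4):
    """Each row is an independent task, so execution order does not matter."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: row_times_matrix(row, b), a))

a = [[1, 2], [3, 4], [5, 6]]
b = [[7, 8, 9], [10, 11, 12]]

# Both strategies must agree, because no row depends on another.
assert matmul_parallel(a, b) == matmul_sequential(a, b)
print(matmul_parallel(a, b))
```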

How GPUs Power LLM Training and Inference

Leveraging Parallel Processing for LLMs

LLMs leverage GPU architecture for model computation by utilizing the massive parallel processing capabilities of GPUs. GPUs are equipped with thousands of smaller cores that can execute multiple operations simultaneously, which is ideal for the large-scale matrix multiplications and tensor operations inherent in deep learning. This parallelism allows LLMs to process vast amounts of data efficiently, accelerating both training and inference phases. Additionally, GPUs support features like half-precision computing, which can further speed up computations while maintaining accuracy, and they are optimized for memory bandwidth, reducing the time needed to transfer data between memory and processing units.
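
One practical effect of half precision is easy to estimate with back-of-the-envelope arithmetic: the memory needed for weights is the parameter count times the bytes per parameter. The sketch below assumes a hypothetical model with a round 6 billion parameters and counts weights only, ignoring activations, the KV cache, and framework overhead.

```python
# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}

def weight_memory_gb(num_params, dtype):
    """Memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

NUM_PARAMS = 6_000_000_000  # hypothetical round figure

for dtype in ("fp32", "fp16"):
    print(f"{dtype}: {weight_memory_gb(NUM_PARAMS, dtype):.1f} GB")
```

Halving the bytes per parameter halves the weight footprint, which also halves the data that has to move between memory and the processing units for each pass over the weights.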

GPU-Accelerated Inference for Real-time Applications

Inference for large-scale models like LLMs is accelerated on GPUs through strategies such as parallel processing, quantization, layer and tensor fusion, kernel tuning, precision optimization, batch processing, multi-GPU and multi-node support, FP8 support, operator fusion, and custom plugin development. Advancements like FP8 training and tensor scaling techniques further improve performance and efficiency.

These techniques, as highlighted in the comprehensive guide to TensorRT-LLM, enable GPUs to deliver dramatic improvements in inference performance, with speeds up to 8x faster than traditional CPU-based methods. This optimization is crucial for real-time applications such as chatbots, recommendation systems, and autonomous systems that require quick responses.

Key Optimization Techniques for LLMs on GPUs

Quantization and Fusion Techniques for Faster Inference

Quantization speeds up inference by reducing the numerical precision of weights and activations (for example, from 16-bit floating point to 8-bit integers), which shrinks the model and improves speed. Layer and tensor fusion, where multiple operations are merged into a single operation, also contributes to faster inference by reducing the overhead of managing separate operations.
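
To show what reducing precision means in practice, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. It is one common scheme among several and is not taken from TensorRT-LLM or any other library; the function names and the sample weights are assumptions for illustration.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale

def dequantize(q, scale):
    """Recover approximate floats from the stored integers."""
    return [x * scale for x in q]

weights = [0.02, -0.51, 0.33, 1.27, -1.0]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 values:", q)
print("scale:", scale)
print("largest round-trip error:", max_error)
```

Each weight is stored as one byte plus a single shared scale, and the round-trip error stays within half a quantization step. Real deployments usually quantize per channel or per group and calibrate on sample data to keep accuracy loss small.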

NVIDIA TensorRT-LLM and GPU Optimization

NVIDIA’s TensorRT-LLM is a tool that optimizes LLM inference by applying these and other techniques, such as kernel tuning and in-flight batching. According to NVIDIA’s tests, applications based on TensorRT can show up to 8x faster inference speeds compared to CPU-only platforms.

GPU Performance Benchmarks in LLM Inference

Token Processing Speed on GPUs

Benchmarks have shown that GPUs can significantly improve inference speed across various model sizes. For instance, using TensorRT-LLM, a GPT-J-6B model can process 34,955 tokens per second on an NVIDIA H100 GPU, while a Llama-3-8B model can process 16,708 tokens per second on the same platform. These performance improvements highlight the importance of GPUs in accelerating LLM inference.
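
Throughput numbers are easier to reason about once converted into time. The short sketch below does that conversion for the two figures quoted above, assuming the quoted throughput is sustained for the whole run; the function name is made up for the example.

```python
def seconds_to_generate(num_tokens, tokens_per_second):
    """Time needed to produce `num_tokens` at a steady throughput."""
    return num_tokens / tokens_per_second

# Throughput figures quoted in this post (tokens per second on one H100).
throughput = {"GPT-J-6B": 34_955, "Llama-3-8B": 16_708}

for model_name, tps in throughput.items():
    seconds = seconds_to_generate(1_000_000, tps)
    print(f"{model_name}: {seconds:.1f} s per million tokens")
```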

Challenges in Using GPUs for LLMs

The High Cost of Power Consumption and Hardware

High power consumption, high purchase prices, and the cost of cloud GPU rentals are significant considerations for organizations utilizing GPUs for deep learning and high-performance computing tasks. GPUs, particularly those designed for high-end applications like deep learning, can consume substantial amounts of power, leading to increased operational costs. The upfront cost of purchasing GPUs is also substantial, especially for the latest models that offer the highest performance.

Cloud GPU Rental Costs

Additionally, renting GPUs in the cloud can be costly, as it often involves paying for usage by the hour, which can accumulate quickly, especially for large-scale projects or ongoing operations. However, cloud GPU rentals offer the advantage of flexibility and the ability to scale resources up or down as needed without the initial large capital outlay associated with purchasing hardware.
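
A simple way to compare renting with buying is to ask how many rented hours add up to the purchase price. The sketch below uses hypothetical prices that are not real quotes, and it deliberately ignores power, hosting, staffing, and depreciation, all of which shift the answer in practice.

```python
def break_even_hours(purchase_price, hourly_rental_rate):
    """Hours of rental after which cumulative rent equals the purchase price."""
    return purchase_price / hourly_rental_rate

# Hypothetical figures for illustration only.
PURCHASE_PRICE_USD = 30_000.0
RENTAL_USD_PER_HOUR = 2.50

hours = break_even_hours(PURCHASE_PRICE_USD, RENTAL_USD_PER_HOUR)
print(f"break-even after {hours:,.0f} GPU-hours "
      f"(about {hours / 24:.0f} days of continuous use)")
```

If your expected usage is well below the break-even point, renting tends to make more sense; if the GPU would be busy around the clock for longer than that, ownership starts to look attractive.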

Cost Mitigation Strategies for GPU Usage

Balancing Costs with Performance

It’s important for organizations to weigh these costs against the benefits that GPUs provide, such as accelerated processing times and the ability to handle complex computational tasks more efficiently. Strategies for mitigating these costs include optimizing GPU utilization, considering energy-efficient GPU models, and carefully planning cloud resource usage to ensure that GPUs are fully utilized when needed and scaled back when not in use.
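
The effect of utilization on cost can be made explicit with a short calculation. The sketch below is an assumption-laden illustration: the hourly price and throughput are hypothetical, and `cost_per_million_tokens` is a made-up helper, not part of any billing API.

```python
def cost_per_million_tokens(usd_per_gpu_hour, tokens_per_second, utilization):
    """Effective serving cost when the GPU is busy only a fraction of the time."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    tokens_per_hour = tokens_per_second * 3600 * utilization
    return usd_per_gpu_hour / tokens_per_hour * 1_000_000

# Hypothetical price and throughput, for illustration only.
for utilization in (1.0, 0.5, 0.25):
    cost = cost_per_million_tokens(2.50, 10_000, utilization)
    print(f"utilization {utilization:.0%}: ${cost:.4f} per million tokens")
```

Because you pay for the hour whether or not the GPU is busy, halving utilization doubles the effective cost per token, which is why scaling back idle capacity matters so much.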

Challenges of Cost Control in Real-World GPU Applications

Real-world cost control challenges in the application of GPUs for deep learning and high-performance computing are multifaceted. High power consumption is a primary concern, as GPUs, especially those used for intensive tasks, can consume significant amounts of electricity. This not only leads to higher operational costs but also contributes to a larger carbon footprint, which is a growing concern for many organizations.

The initial purchase cost of GPUs is another significant factor. High-end GPUs needed for cutting-edge deep learning models are expensive, and organizations must consider the return on investment when purchasing such hardware.

Optimizing Efficiency: Key Strategies for Success

Advanced GPU Cost Optimization Techniques

Recent advancements in GPU technology for cost control in deep learning applications include vectorization for enhanced data parallelism, model pruning for reduced computational requirements, mixed precision computing for faster and more energy-efficient computations, and energy efficiency improvements that lower electricity costs. Additionally, adaptive layer normalization, specialized inference parameter servers, and GPU-driven visualization technology further optimize performance and reduce costs associated with large-scale deep learning model inference and analysis.
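
Of the techniques listed above, model pruning is the easiest to show in a few lines. The sketch below implements unstructured magnitude pruning, one simple variant among many: it zeroes the weights with the smallest absolute values. The function name and sample weights are made up for the example.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    if not 0 <= sparsity < 1:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to remove
    # Indices of the k weights with the smallest absolute value.
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    to_zero = set(smallest)
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]

weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]  # made-up example weights
print(magnitude_prune(weights, 0.5))
```

Zeroed weights only save compute when the runtime or hardware can skip them, so in practice pruning is usually paired with sparse kernels or structured patterns, followed by a short round of retraining to recover accuracy.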

WhaleFlux: An Open-Source Service for Optimizing GPU Costs

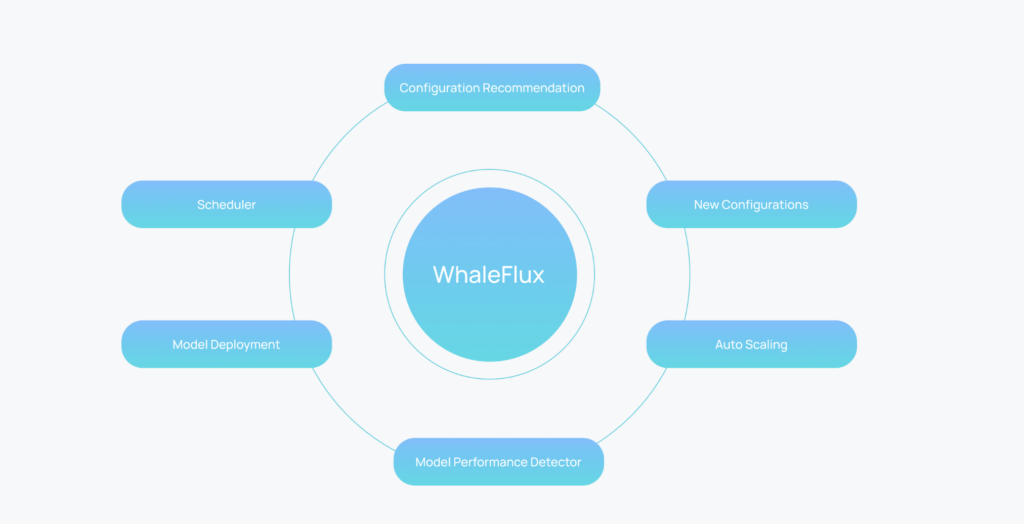

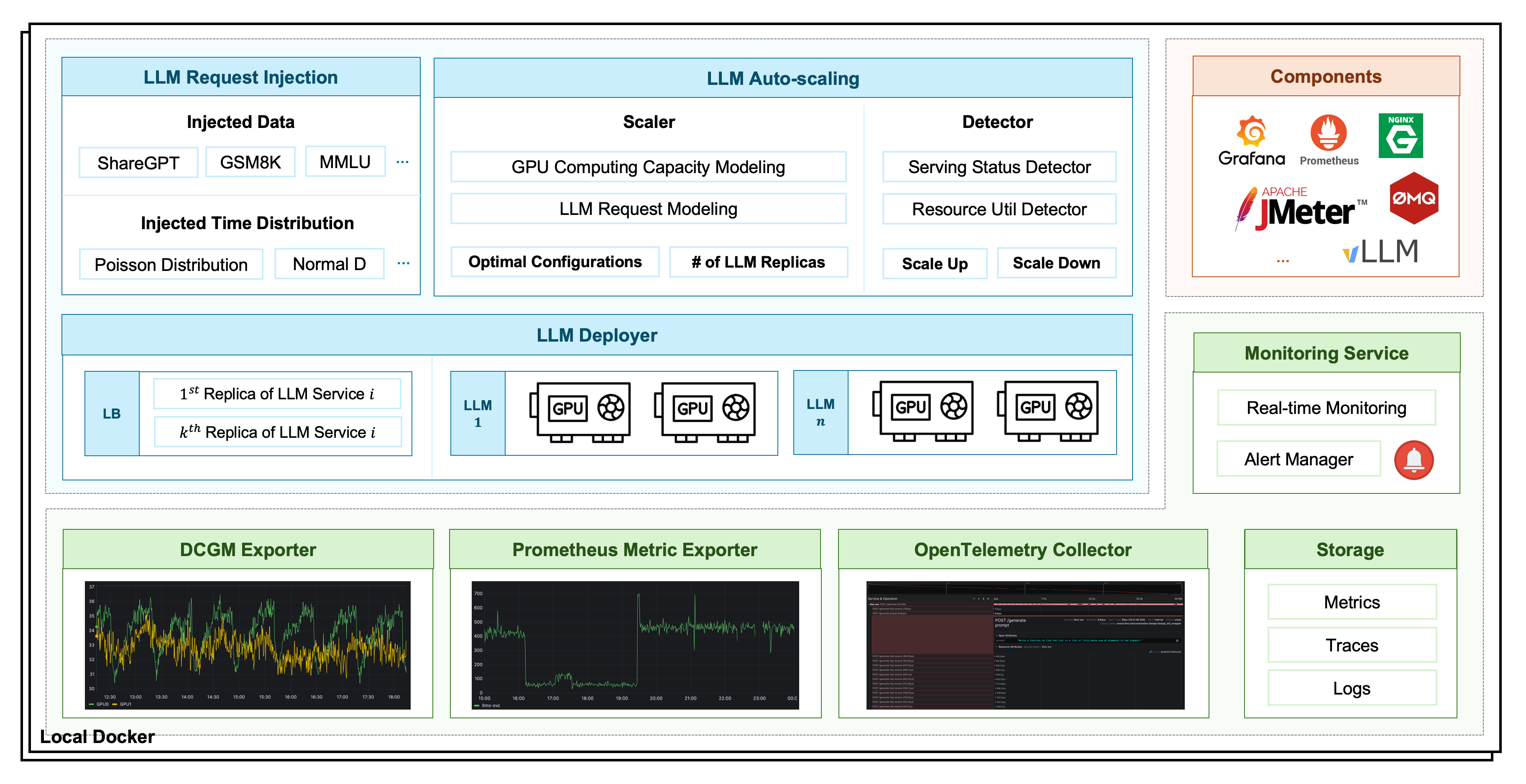

WhaleFlux is a service designed to optimize the deployment, monitoring, and autoscaling of LLMs on multi-GPU clusters. It addresses the challenges of diverse and co-located applications in multi-GPU clusters that can lead to low service quality and GPU utilization. WhaleFlux comprehensively deconstructs the execution process of LLM services and provides a configuration recommendation module for automatic deployment on any GPU cluster. It also includes a performance detection module for autoscaling, ensuring stable and cost-effective serverless LLM serving.

The service configuration module in Enova is designed to determine the optimal configurations for LLM services, such as the maximal number of sequences handled simultaneously and the allocated GPU memory. The performance detection module monitors service quality and resource utilization in real-time, identifying anomalies that may require autoscaling actions. Enova’s deployment execution engine manages these processes across multi-GPU clusters, aiming to reduce the workload for LLM developers and provide stable and scalable performance.
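
To give a feel for what an autoscaling decision can look like, here is a minimal sketch of a proportional scaling rule, similar in spirit to the one used by the Kubernetes Horizontal Pod Autoscaler. It is not the algorithm used by WhaleFlux or Enova, which this post does not spell out; the function name, target utilization, tolerance, and replica limits are all assumptions.

```python
import math

def desired_replicas(current_replicas, gpu_utilization, target_utilization=0.7,
                     tolerance=0.1, min_replicas=1, max_replicas=8):
    """Size the fleet so that average GPU utilization approaches the target."""
    ratio = gpu_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: avoid flapping between sizes.
        wanted = current_replicas
    else:
        wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))

print(desired_replicas(2, 0.95))  # overloaded: scale out
print(desired_replicas(4, 0.20))  # mostly idle: scale in
print(desired_replicas(4, 0.72))  # near target: hold steady
```

A real service would also watch request latency and queue length, and would smooth the signal over time so that a brief spike does not trigger a resize.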

Future Innovations in GPU Efficiency

Emerging Technologies for Better GPU Performance

WhaleFlux’s approach to autoscaling and cost-effective serving is innovative as it specifically targets the needs of LLM services in multi-GPU environments. The service is designed to be adaptable to various application agents and GPU devices, ensuring optimal configurations and performance across diverse environments. The implementation code for WhaleFlux is publicly available for further research and development.

Future Outlook

Emerging technologies such as application-transparent frequency scaling, advanced scheduling algorithms, energy-efficient cluster management, deep learning job optimization, hardware innovations, and AI-driven optimization enhance GPU efficiency and cost-effectiveness in deep learning applications.

Fine-Tuning vs. Pre-Training: How to Choose for Your AI Application

Imagine you are standing in a grand library, where the books hold centuries of human thoughts. But you are tasked with a singular mission: find the one book that contains the precise knowledge you need. Do you dive deep and explore from scratch? Or do you pick a book that’s already been written, and tweak it, refining its wisdom to suit your needs?

This is the crossroads AI businesses and developers face when deciding between pre-training and fine-tuning. Both paths have their own fun and challenges. In this blog, we explore what lies at the heart of each approach: definitions, pros and cons, and then a strategy for choosing wisely.

Introduction

What is Pre-Training?

Pre-training refers to the process of training an AI model from scratch on a large dataset to learn general patterns and representations. Typically, this training happens over many iterations, requiring substantial computational resources, time, and data. The model, in essence, develops a deep understanding of the general features within the data and can then be used for inference as needed.

The outcome of pre-training is usually a relatively stable, effective model adapted to the application scenarios it was designed for. It may be specialized for a certain data domain and particular tasks, or suited to general use. Typical examples of pre-trained models include large language models like ChatGPT, Llama, and Claude, and large vision models such as CLIP.

What is Fine-Tuning?

The fine-tuning process usually takes a pre-trained model and adjusts it to perform a specific task. This involves updating the weights of the pre-trained model using a smaller, task-specific dataset. Since the model already understands general patterns in the data (from pre-training), fine-tuning further improves it to specialize in your particular problem while reusing the knowledge from pre-training.

This process is often quicker and less resource-intensive than pre-training, as the pre-trained model already captures a wide range of useful features. More importantly, the task-specific dataset is usually small. Fine-tuning is widely used, especially for domain specialization; recent applications span finance, education, science, medicine, and more.
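
The benefit of starting from pre-trained weights can be illustrated with a deliberately tiny model. The sketch below fits a straight line with gradient descent: it "pre-trains" on many points from one relationship, then "fine-tunes" on five points from a related one, and compares the result with training from scratch for the same number of steps. All data, names, and hyperparameters are assumptions chosen for illustration; real fine-tuning involves far larger models and datasets.

```python
def mse(params, data):
    """Mean squared error of the line y = w*x + b on the data."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(params, data, steps, lr=0.05):
    """Plain batch gradient descent on mean squared error."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

# "Pre-training": many points from a general relationship y = 2x + 1.
general = [(x / 100, 2 * (x / 100) + 1) for x in range(-200, 201)]
pretrained = train((0.0, 0.0), general, steps=500)

# "Fine-tuning": only five points from a related task y = 2x + 1.5.
task = [(x, 2 * x + 1.5) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]
fine_tuned = train(pretrained, task, steps=20)
from_scratch = train((0.0, 0.0), task, steps=20)

print("fine-tuned loss:  ", mse(fine_tuned, task))
print("from-scratch loss:", mse(from_scratch, task))
```

With the same small budget of 20 steps, the fine-tuned line ends up much closer to the target, because the slope learned during pre-training already transfers and only the offset needs adjusting.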

A Little More on the History

The relationship between fine-tuning and pre-training did not first emerge in the era of LLMs. In fact, these two “routines” have been part of deep learning throughout its development. We can view the brief history of deep learning as three stages with respect to pre-training and fine-tuning.

- First stage (20th century): supervised fine-tuning only

- Second stage (early 21st century to 2020): supervised layer-wise pretraining + supervised fine-tuning

- Third stage (2020 to date): unsupervised layer-wise pretraining + supervised fine-tuning

Indeed, as defined above, and especially for recent foundation models, the pre-training phase on large datasets is unsupervised and targets general knowledge, while the fine-tuning phase tailors the model to specific applications. Even before this stage, however, and at a smaller scale, supervised pre-training followed by fine-tuning was already in use for typical deep learning tasks.

Pros and Cons

If pre-training is the great journey across general knowledge, then fine-tuning is the delicate craft of specialization. We promised fun and challenges for each approach; now it’s time to check them out.

Pros of Pre-Training

- Full control over the model: Pre-training gives you complete flexibility in designing the architecture and learning objectives, enabling the model to suit your specific needs.

- Task-generalized learning: Since pre-trained models learn from vast, diverse datasets, they develop a rich and generalized understanding that can transfer across multiple tasks.

- Potential for state-of-the-art performance: Starting from scratch allows for new innovations in the model structure, potentially pushing the boundaries of what AI can achieve.

Cons of Pre-Training

- High resource cost: Pre-training is computationally intensive, often requiring large-scale infrastructure (like cloud servers or specialized hardware) and extensive time.

- Vast amounts of data required: For meaningful pre-training, you need enormous datasets, which can be difficult or expensive to acquire for niche applications.

- Extended development time: Pre-training models from scratch can take weeks or even months, significantly slowing down the time to market.

The pros and cons of fine-tuning largely mirror those of pre-training. Beyond that, we also mention a few nuances.

Pros of Fine-Tuning

- Straightforward: lower resource requirements and less strict data requirements.

- Faster to market: This becomes critical if you are running an AI business and want to stay a step ahead of competitors.

- Flexible with target domains and tasks: Your AI applications and business may change over time, or simply require a refinement of functionality. Fine-tuning makes that much easier.

Cons of Fine-Tuning

- Straightforward: less architectural flexibility and potentially suboptimal performance.

- Model bias inheritance: Pre-trained models can sometimes carry biases from the datasets they were trained on. If your fine-tuned task is sensitive to fairness or requires unbiased predictions, this could be a concern. In general, the quality of a fine-tuned model depends largely on the pre-trained model it starts from.

When to Choose Fine-tuning

Fine-tuning is ideal when you want to leverage the power of large pre-trained models without the overhead of training from scratch. It’s particularly advantageous when your problem aligns with the general patterns already learned by the pre-trained model but requires some degree of customization to achieve optimal results. We list several factors in order of priority:

- Limited resources: If computational power or data is hard to acquire, fine-tuning a pre-trained model is the more realistic option.

- Sensitive timeline: Fine-tuning is usually faster than pre-training, and not only because it avoids training from scratch. There is always a risk that a pre-trained model turns out sub-optimal and requires further refinement of the model architecture and other design choices.

- Not necessarily the best model: If you do not need state-of-the-art (SOTA) performance, just a reliable, stable application of a foundation model, then fine-tuning is usually enough to achieve the goal. Beating all other models through fine-tuning alone, however, is considered much harder.

- Leverage existing frameworks: If your application fits well within existing frameworks (such as system compatibility), fine-tuning offers a simpler, more efficient solution.
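To make the idea of fine-tuning concrete, here is a minimal sketch of one common variant: keep the pre-trained part of the model frozen and train only a small task-specific head on a small dataset. Everything in it is an illustrative assumption: `pretrained_features` is a hypothetical stand-in for a real pre-trained backbone, and the data is a toy regression task, not a real workload.

```python
def pretrained_features(x):
    """Hypothetical stand-in for a frozen pre-trained backbone.

    It maps a scalar input to a fixed feature vector and is never updated.
    """
    return [x, x * x]


def fine_tune_head(data, lr=0.05, epochs=500):
    """Train only a linear head (w, b) on top of the frozen features.

    Uses plain per-sample gradient descent on squared error.
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            feats = pretrained_features(x)
            pred = sum(wi * fi for wi, fi in zip(w, feats)) + b
            err = pred - y
            # The update touches the head parameters only; the backbone is fixed.
            w = [wi - lr * err * fi for wi, fi in zip(w, feats)]
            b -= lr * err
    return w, b


# A small task-specific dataset: y = 3x^2 - 2x + 1 on x in [-1, 1].
data = [(i / 10, 3 * (i / 10) ** 2 - 2 * (i / 10) + 1) for i in range(-10, 11)]
w, b = fine_tune_head(data)
```

Only three numbers are trained here, which is why this style of fine-tuning needs far less data and compute than learning the features themselves. Real fine-tuning may also update some or all of the backbone weights.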

Your Product’s Role

Inference acceleration refers to techniques that optimize the speed and efficiency of model predictions once the model is trained or fine-tuned. Whichever approach you choose, faster inference is always beneficial, both during development and in production. We highlight one major reason why the impact of inference acceleration is more immediate for fine-tuning.

- A Matter of Priority: During pre-training, the model’s complexity and computational demands are very high, and the primary concern is optimizing the learning process. The fine-tuning process, on the other hand, will soon move forward to the evaluation or deployment phase, when inference acceleration saves significant resources.

Inference Process of LLM

LLMs, particularly decoder-only models, use an auto-regressive method to generate output sequences. This method generates tokens one at a time, and each step requires the model to process the entire token history, both the input tokens and the previously generated tokens. As the sequence length increases, the computational time required for each new token grows rapidly, making the process less efficient.
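The growth described above can be illustrated with a simple counting model. This is a sketch under a stated assumption: the model re-reads the full history at every step and reuses nothing from earlier steps. The function names are made up for illustration and do not describe any particular inference engine.

```python
def tokens_processed_per_step(prompt_len, num_new_tokens):
    """For each generated token, count how many history tokens must be read.

    Step 0 reads only the prompt; each later step also reads every
    token generated so far.
    """
    return [prompt_len + step for step in range(num_new_tokens)]


def total_tokens_processed(prompt_len, num_new_tokens):
    """Total tokens read across the whole generation."""
    return sum(tokens_processed_per_step(prompt_len, num_new_tokens))


per_step = tokens_processed_per_step(prompt_len=100, num_new_tokens=4)
total = total_tokens_processed(prompt_len=100, num_new_tokens=4)
```

Under this model the per-step cost grows linearly with the sequence length, so the total work grows roughly quadratically with the number of generated tokens. That is the inefficiency the acceleration methods below try to reduce.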

Inference Acceleration Methods

- Data-level acceleration: improves efficiency by optimizing the input prompts (i.e., input compression) or better organizing the output content (i.e., output organization). This category of methods typically does not change the original model.

- Model-level acceleration: designs an efficient model structure or compresses the pre-trained model to make inference more efficient. This category of methods (1) often requires costly pre-training or a smaller amount of fine-tuning to retain or recover the model’s ability, and (2) is typically lossy in model performance.

- System-level acceleration: optimizes the inference engine or the serving system. This category of methods (1) does not involve costly model training, and (2) is typically lossless in model performance. The challenge is that it requires more effort on system design.

An Example of System-level Acceleration: WhaleFlux

WhaleFlux falls into the system-level acceleration, with a focus on GPU scheduling optimization. It is an open-source service for LLM deployment, monitoring, injection, and auto-scaling. Here are the major technical features and values:

- Automatic configuration recommendation.

- Real-time performance monitor.

- Stable, efficient and scalable. Increases resource utilization by over 50% and raises overall GPU memory utilization from 40% to 90%.

The figure above shows the components and functionalities of WhaleFlux. For more details, we refer to the GitHub repo and the official website of WhaleFlux.

Conclusion

Choosing between fine-tuning and pre-training, at the end of the day, depends on your specific project’s needs, resources, and goals. Fine-tuning is the go-to option for most businesses and developers who require fast, cost-effective solutions. On the other hand, pre-training is the preferred approach when developing novel AI applications that require deep customization, or when working with unique, domain-specific data. Though pre-training is more resource-intensive, it can lead to state-of-the-art performance and open the door to new innovations.

In practice, most applications benefit from inference acceleration whichever route they take, especially for real-time predictions or deployment on edge devices. Data-level and model-level acceleration are more widely studied in academia, while system-level acceleration tends to pay off more immediately. We hope this blog helps you choose the right approach for your AI applications.

The Evolution of NVIDIA GPUs: A Deep Dive into Graphics Processing Innovation

In the world of computing and gaming, NVIDIA’s GPUs (Graphics Processing Units) have played a pivotal role in shaping the future of technology. From the early days of basic graphical rendering to the cutting-edge AI and deep learning advancements of today, NVIDIA’s GPU innovations have revolutionized multiple industries. In this blog post, we’ll explore the history and evolution of NVIDIA GPUs, analyzing how they have transformed the gaming, professional visualization, and AI landscapes.

The Birth of NVIDIA and the First Generation of GPUs

NVIDIA was founded in 1993 by Jensen Huang, Chris Malachowsky, and Curtis Priem. The company’s primary goal was to create high-performance graphics technology for the rapidly growing personal computer (PC) market. The first major breakthrough came in 1997 with the introduction of the RIVA series. This early line of graphics cards laid the groundwork for what would eventually become one of the most dominant players in the GPU market.

The RIVA 128, released in 1997, was NVIDIA’s first true success, offering both 2D and 3D graphics acceleration. It wasn’t until 1999, however, that NVIDIA truly made its mark with the release of the GeForce 256, touted as the world’s first “GPU.” This groundbreaking chip featured hardware transformation and lighting (T&L), a feature that would go on to become a standard in modern graphics rendering. The GeForce 256 set the stage for NVIDIA’s future dominance in the GPU space.

- RIVA series (1997): Early 2D and 3D graphics acceleration, marking NVIDIA’s first successful product.

- GeForce 256 (1999): World’s first GPU with hardware transformation and lighting (T&L), revolutionizing 3D graphics rendering.

The GeForce Era: Dominating the Gaming Market

As NVIDIA continued to refine its graphics technology, the GeForce brand became synonymous with high-performance gaming. The release of the GeForce 2 in 2000 was a significant milestone, offering enhanced performance, better visual effects, and support for DirectX 7. NVIDIA continued this trajectory with the GeForce 3 in 2001, which introduced support for programmable shaders, allowing developers to create more complex and realistic visuals.

One of the most important leaps in GPU technology came with the introduction of the GeForce 8800 GTX in 2006, which was powered by the Tesla architecture. This GPU not only offered groundbreaking performance in gaming but also laid the foundation for future advancements in general-purpose computing, including AI, deep learning, and parallel processing.

- GeForce 2 & 3 (2000-2001): Enhanced performance, programmable shaders, and better graphics effects for gaming.

- GeForce 8800 GTX (2006): Powered by Tesla architecture, it introduced GPUs optimized for high-performance computing (HPC) and laid the groundwork for AI and deep learning.

- Tesla Architecture (2006): Shifted focus to general-purpose GPU computing, supporting applications like scientific simulations and medical research.

The Tesla and Fermi Architectures: Expanding Beyond Gaming

NVIDIA’s Tesla architecture, introduced in 2006, marked the company’s first serious foray into high-performance computing (HPC) and scientific applications. With the Tesla series, NVIDIA began to design GPUs optimized for general-purpose computation, giving birth to the field of GPGPU (general-purpose computing on graphics processing units). This allowed GPUs to be used for tasks far beyond gaming, including scientific simulations, medical research, and financial modeling.

In 2010, NVIDIA released the Fermi architecture, which was a significant upgrade over the Tesla series. Fermi-based GPUs featured improved parallel computing capabilities, making them ideal for deep learning and data science workloads. The Fermi-based Tesla C2050 and C2070 cards were among the first GPUs to be widely adopted in supercomputing clusters, setting the stage for NVIDIA’s future dominance in AI and deep learning.

- Fermi Architecture (2010): Introduced improved parallel computing capabilities, ideal for data science and AI workloads.

The Kepler and Maxwell Architectures: Power Efficiency and Performance

As GPU technology evolved, NVIDIA continued to push the envelope with newer, more efficient architectures. The Kepler architecture (released in 2012) brought significant improvements in power efficiency, allowing for better performance without increasing power consumption. This was a critical factor as GPUs began to be used in mobile devices and laptops.

The Maxwell architecture, introduced in 2014, continued this trend, focusing on improving both performance and power efficiency. Maxwell-powered GPUs, like the GeForce GTX 970, offered significant improvements in gaming performance while also providing superior computational power for professional tasks such as 3D rendering and video editing.

- Kepler Architecture (2012): Focused on power efficiency, offering better performance-per-watt for mobile and desktop GPUs.

- Maxwell Architecture (2014): Further power efficiency improvements, delivering great performance for both gaming and professional graphics tasks.

The Pascal and Volta Architectures: AI and Deep Learning Breakthroughs

In 2016, NVIDIA released the Pascal architecture, which marked a huge leap in GPU performance. Pascal-based GPUs were built on a 16nm process and featured CUDA cores that could handle more complex computational tasks. This architecture played a crucial role in the rise of AI and deep learning, as it provided the raw computational power needed to train large neural networks.

The Volta architecture, which debuted in 2017, further cemented NVIDIA’s leadership in AI and machine learning. The Tesla V100 GPU, powered by Volta, was designed specifically for AI workloads, featuring Tensor Cores that accelerated deep learning tasks. Volta-based GPUs quickly became the go-to solution for data centers, research institutions, and AI-driven companies.

- Pascal Architecture (2016): Significant performance boost, optimized for gaming and deep learning, and built on a 16nm process.

- Volta Architecture (2017): Introduced Tensor Cores for AI and deep learning, tailored for high-end AI workloads and data centers.

The Turing Architecture: Real-Time Ray Tracing and AI-Enhanced Graphics

The Turing architecture, launched in 2018, marked a major milestone in the evolution of gaming and graphics technology. Turing introduced real-time ray tracing, a technique that simulates the way light interacts with objects in the virtual world to produce incredibly realistic graphics. Ray tracing had long been a staple of CGI in movies but was too computationally expensive for real-time gaming.

Turing’s RTX series (including the RTX 2080 Ti) brought ray tracing to the mainstream, offering gamers and developers the ability to create lifelike environments and lighting effects. Additionally, Turing integrated AI-driven technologies like DLSS (Deep Learning Super Sampling), which uses machine learning to upscale lower-resolution images to higher resolutions, improving gaming performance while maintaining visual fidelity.

- Turing Architecture (2018): Introduced real-time ray tracing and DLSS (Deep Learning Super Sampling), transforming gaming visuals and performance.

The Ampere Architecture: A New Era of Gaming and AI Performance

In 2020, NVIDIA introduced the Ampere architecture, which powered the RTX 30 series of GPUs. Ampere brought substantial improvements in performance, efficiency, and ray tracing capabilities. The RTX 3080 and RTX 3090 set new benchmarks for gaming and content creation, offering unparalleled performance in both rasterized and ray-traced graphics.

Ampere was also a key player in AI advancements, with significant performance boosts for AI workloads, including deep learning training and inference tasks. The A100 Tensor Core GPU, based on Ampere, became a cornerstone of AI research and development, widely adopted in data centers and AI-accelerated supercomputing projects.

- Ampere Architecture (2020): Improved gaming and AI performance with enhanced ray tracing and computational power, powering GPUs like the RTX 30 series and the A100.

The Future: Hopper, Ada Lovelace, and Beyond

Looking to the future, NVIDIA has already begun to unveil its next-generation architectures. The Hopper architecture, expected to revolutionize AI and supercomputing, focuses on increasing the efficiency and speed of data processing for deep learning applications.

In the consumer graphics space, NVIDIA’s Ada Lovelace architecture is poised to further elevate gaming and creative applications, with expectations of better ray tracing, AI-powered technologies, and even greater power efficiency.

Conclusion of NVIDIA GPUs

From their early days as simple graphics accelerators to their current status as the driving force behind AI, deep learning, and high-performance computing, NVIDIA’s GPUs have undergone a dramatic transformation. The company’s relentless innovation and focus on both gaming and professional markets have cemented its position as a leader in the technology industry.

As we look forward to the next generation of GPUs, one thing is clear: NVIDIA’s GPUs will continue to shape the future of technology, enabling new breakthroughs in gaming, artificial intelligence, and beyond.

Inference Acceleration: Unlocking the Extreme Performance of AI Models

Introduction

In the current era of increasingly widespread artificial intelligence applications, inference acceleration plays a crucial role in enhancing model performance and optimizing user experience. Operating speed in real-world scenarios is vital to the practical effectiveness of applications, especially in scenarios requiring real-time responses, such as recommendation systems, voice assistants, intelligent customer service, and medical image analysis.

The significance of inference acceleration lies in improving system response speed, reducing computational costs, and saving hardware resources. In many real-time scenarios, the speed of model inference affects not only the user experience but also the system’s real-time feedback capabilities. For example, recommendation systems need to make quick recommendations based on users’ real-time behavior, intelligent customer service needs to immediately understand users’ inquiries and generate appropriate responses, and medical image analysis requires models to quickly and accurately analyze a large amount of medical image data. Therefore, through inference acceleration, models can quickly respond to various requests while ensuring accuracy, thereby providing users with a smoother interactive experience.

Challenges

Despite the significant value that inference acceleration brings, it also faces some challenges.

· High computational load: Large AI models, such as natural language processing models (e.g. GPT-3) and computer vision models (e.g. YOLO, ResNet), typically contain hundreds of millions to trillions of parameters, leading to an extremely large computational load. The computational load during the inference phase can significantly impact the model’s response time, especially in high-concurrency environments.

· Hardware limitations: Inference demands substantial hardware resources. Although the cloud offers powerful computational resources, many applications (e.g. smart homes, edge monitoring) require models to run on edge devices. The computational capabilities of these devices are often insufficient to run large models efficiently, which may result in high latency and delayed responses.

· Memory and bandwidth consumption: AI models consume a large amount of memory and bandwidth resources during inference. For example, large models typically require tens or even hundreds of GB of memory. When memory is insufficient, the system may frequently fall back on external storage, further increasing latency. Moreover, if the model needs to be loaded from a remote cloud, bandwidth limitations can also affect loading speeds.
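A quick back-of-the-envelope calculation shows where the "tens or even hundreds of GB" figure comes from. The sketch below estimates the memory needed for model weights alone, as parameter count times bytes per parameter. The helper name and the use of 1 GB = 10^9 bytes are assumptions made for illustration. Activations and other runtime buffers are not counted, so real usage is higher.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params, dtype):
    """Estimate memory for the weights only, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Example: a 175-billion-parameter model such as GPT-3.
gpt3_params = 175e9
fp32_gb = weight_memory_gb(gpt3_params, "fp32")
fp16_gb = weight_memory_gb(gpt3_params, "fp16")
int8_gb = weight_memory_gb(gpt3_params, "int8")
```

Even at 16-bit precision, the weights of a model this size come to about 350 GB, which no single edge device can hold. This is what makes lower precision and smaller models attractive.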

Solution

To address the challenges described above, here are several levers for inference acceleration.

- Model Compression

Model compression reduces the number of model parameters and the computational load. By optimizing the model structure, it improves inference efficiency. The main model compression techniques include quantization, pruning, and knowledge distillation.

· Quantization

Quantization can reduce model parameters from high precision to low precision, such as from 32-bit floating-point numbers to 8-bit integers. This approach can significantly reduce the model’s memory usage and computational load with minimal impact on model accuracy. Quantization tools from TensorFlow Lite and PyTorch make this process simpler.
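As a rough illustration of the float-to-int8 conversion described above, here is a minimal sketch of symmetric per-tensor quantization in plain Python. It is a simplified teaching example, not the scheme used by TensorFlow Lite or PyTorch; the function names are made up for this post.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero tensor, where any scale would do.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.003, 1.0]
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)
```

Each weight now fits in one byte instead of four, and the rounding error per weight is at most half of `scale`. Very small weights, like `0.003` here, collapse to zero, which is one source of the accuracy loss quantization can introduce.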

· Pruning

Pruning reduces computational load by removing unimportant weights or neurons in neural networks. Some weights in the model have a minor impact on the prediction results, and by deleting these weights, computational load can be reduced while maintaining accuracy. For example, BERT models can be reduced in size by more than 50% through pruning techniques.
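One simple way to decide which weights are "unimportant" is by magnitude: the weights closest to zero are removed first. The sketch below shows this magnitude-based pruning on a flat list of weights. It is only one of several pruning criteria and is chosen here as an assumption for illustration; the function name is hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude.

    `sparsity` is a fraction between 0 and 1, e.g. 0.5 removes half.
    """
    num_to_drop = int(len(weights) * sparsity)
    if num_to_drop == 0:
        return list(weights)
    # Rank positions by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    dropped = set(order[:num_to_drop])
    return [0.0 if i in dropped else w for i, w in enumerate(weights)]


weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02]
pruned = magnitude_prune(weights, sparsity=0.5)
```

The zeros only save time and memory if the storage format or hardware can skip them. In practice, pruning is usually followed by a short round of fine-tuning to recover accuracy.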

· Knowledge Distillation

The core of knowledge distillation is to train a small model to simulate the performance of a large model, maintaining similar accuracy but significantly reducing computational load. This method is often used to accelerate inference without significantly reducing model accuracy.
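A common way to make the small model imitate the large one is to compare their output distributions rather than only their final answers. The sketch below computes one such training signal: the KL divergence between temperature-softened teacher and student outputs. It is a simplified version of one common formulation; the scaling factors and the usual mix with a hard-label loss are omitted, and the function names are assumptions for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    return sum(t * math.log(t / s) for t, s in zip(teacher, student) if t > 0)


teacher_logits = [4.0, 1.0, 0.5]
matching_loss = distillation_loss([4.0, 1.0, 0.5], teacher_logits)
mismatch_loss = distillation_loss([0.5, 1.0, 4.0], teacher_logits)
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, so minimizing it pushes the small model toward the large model's behavior.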

- Hardware Acceleration

In addition to model compression, hardware acceleration can also effectively improve model inference speed. Common hardware acceleration methods include:

· GPU Acceleration

GPUs can process a large number of computational tasks in parallel, making them particularly suitable for computationally intensive models during the inference phase. Common deep learning frameworks (such as TensorFlow and PyTorch) support GPU acceleration, significantly increasing speed during inference.

· FPGA and TPU Acceleration

Specialized hardware (such as FPGAs or Google’s TPUs) plays an important role in accelerating AI inference. FPGAs have a flexible architecture that can adapt to the inference requirements of different models, while TPUs are specifically designed for deep learning and are particularly suitable for Google’s cloud services.

· Edge Inference Device Acceleration

For edge computing needs, many inference acceleration devices, such as the NVIDIA Jetson series, are specifically developed for edge AI, enabling models to run efficiently under limited hardware conditions.

- Software Optimization

In addition to hardware and model structure optimization, software optimization techniques also play a key role in inference acceleration:

· Batch Inference

Batch inference involves packaging multiple inference requests together to accelerate processing speed through parallel computing. This method is very effective in dealing with high-concurrency requests.
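The packaging step can be sketched in a few lines. In the example below, `toy_model` is a hypothetical stand-in for a real model that accepts a whole batch in one call; the point is only to show how five requests turn into three model calls instead of five.

```python
def make_batches(requests, batch_size):
    """Group requests into consecutive chunks of at most `batch_size`."""
    return [requests[i:i + batch_size] for i in range(0, len(requests), batch_size)]


def batched_inference(requests, model_fn, batch_size):
    """Run `model_fn` once per batch and return results in request order."""
    results = []
    num_calls = 0
    for batch in make_batches(requests, batch_size):
        results.extend(model_fn(batch))
        num_calls += 1
    return results, num_calls


def toy_model(batch):
    """Hypothetical model: returns one 'prediction' per input in the batch."""
    return [len(text) for text in batch]


requests = ["a", "bb", "ccc", "dddd", "eeeee"]
results, num_calls = batched_inference(requests, toy_model, batch_size=2)
```

On real hardware the gain comes from each batched call processing its inputs in parallel. Larger batches raise throughput but make individual requests wait longer, so the batch size is a trade-off between throughput and latency.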

· Memory Optimization

By optimizing memory usage, the inference process can be more efficient. For example, pipeline techniques and buffer management can reduce the repeated loading of data and memory consumption, thereby improving overall inference efficiency.

· Model Parallelism and Tensor Decomposition

Distributing the model across multiple GPUs and using tensor decomposition techniques to distribute computations across different computational units can increase inference speed.
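The idea of splitting one layer across devices can be shown with a matrix-vector product. In this sketch, the rows of a weight matrix are divided into shards, each shard computes its part of the output, and the parts are concatenated. The "devices" are simulated with plain Python lists, and all names are illustrative assumptions rather than any framework's API.

```python
def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def split_rows(matrix, num_shards):
    """Split the rows into contiguous shards, one per simulated device."""
    shard_size = -(-len(matrix) // num_shards)  # ceiling division
    return [matrix[i:i + shard_size] for i in range(0, len(matrix), shard_size)]


def parallel_matvec(matrix, vec, num_shards):
    """Compute each shard's outputs separately, then concatenate them."""
    shards = split_rows(matrix, num_shards)
    # On real hardware, each of these products would run on its own device.
    partial_outputs = [matvec(shard, vec) for shard in shards]
    return [y for part in partial_outputs for y in part]


weights = [[1, 2], [3, 4], [5, 6], [7, 8]]
inputs = [1, 1]
sharded_result = parallel_matvec(weights, inputs, num_shards=2)
```

The sharded result matches the single-device result exactly, while each device stores only part of the weights. The price is the communication needed to gather the partial outputs.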

Case

Inference acceleration has shown significant effects in many practical applications across various fields:

· Natural Language Processing (NLP)

In the field of natural language processing, the response speed of sentiment analysis and chatbot applications has been significantly improved through quantization and GPU acceleration. Especially in the customer service field, NLP-based chatbots can quickly respond to multi-round conversations with users.

· Recommendation Systems

Recommendation systems need to generate recommendation results in a very short time, and inference acceleration technology plays an important role. Through GPU acceleration and batch inference, personalized recommendation systems can provide personalized content to a large number of users in a shorter time, increasing user retention rates.

· Computer Vision

In scenarios such as autonomous driving and real-time monitoring, inference acceleration is particularly crucial. Edge inference and model compression technologies enable computer vision models to process camera inputs in real-time and react according to the processing results, ensuring safety.

· Biomedical

In medical image analysis, inference acceleration technology helps doctors analyze a large amount of image data in a shorter time, supporting rapid diagnosis. Through model pruning and quantization, medical AI models can efficiently analyze patient images, reducing misdiagnosis rates and saving medical resources.

Conclusion

Through model compression, hardware acceleration, and software optimization, inference acceleration has already demonstrated significant advantages in production environments, improving model response speed, reducing computational costs, and saving resources. Currently, WhaleFlux, a new optimization tool for computational power and performance, is on the verge of launching. With the same hardware, it can conduct a more granular analysis of AI models and match them with precise resources. This effectively reduces the inference latency of large models by over 50%, maximizing the operational performance of the models and providing businesses with more cost-effective and efficient computational services.

In the future, with continuous innovation in inference acceleration, real-time AI applications will become more widespread, providing efficient and intelligent solutions for various industries.