GPU Performance Comparison: Enterprise Tactics & Cost Optimization

Hook: Did you know 40% of AI teams choose underperforming GPUs because they compare specs, not actual workloads? One company wasted $217,000 on overprovisioned A100s before realizing RTX 4090s delivered better ROI for their specific LLM. Let’s fix that.

1. Why Your GPU Spec Sheet Lies (and What Actually Matters)

Comparing raw TFLOPS or clock speeds is like judging a car by its top speed—useless for daily driving. Real-world bottlenecks include:

- Thermal Throttling: A GPU running at 85°C performs 23% slower than at 65°C (NVIDIA whitepaper)

- VRAM Walls: Running a 13B Llama model in FP16 (about 26GB of weights) on a 24GB GPU forces constant swapping, adding 200ms latency

- Driver Overhead: PyTorch versions can create 15% performance gaps on identical hardware

Enterprise Pain Point: When a Fortune 500 AI team tested GPUs using synthetic benchmarks, their “top performer” collapsed under real inference loads—costing 3 weeks of rework.
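The VRAM wall mentioned above can be estimated before any hardware is bought. The sketch below is a rough back-of-the-envelope check, not a profiler: it counts only model weights (parameters × bytes per parameter) and adds an assumed 20% allowance for KV cache and activations. The function names and the overhead figure are illustrative assumptions, not measured values.

```python
def weight_memory_gb(params_billion: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    # 1e9 parameters * bytes per parameter / 1e9 bytes per GB
    return params_billion * bytes_per_param


def fits_in_vram(params_billion: float, bytes_per_param: float,
                 vram_gb: float, overhead_fraction: float = 0.2) -> bool:
    """Check whether weights plus an assumed runtime overhead fit in VRAM.

    overhead_fraction is an illustrative allowance for KV cache and
    activations; real overhead depends on batch size and context length.
    """
    needed = weight_memory_gb(params_billion, bytes_per_param) * (1 + overhead_fraction)
    return needed <= vram_gb


if __name__ == "__main__":
    for label, nbytes in [("FP16", 2), ("INT8", 1), ("4-bit", 0.5)]:
        gb = weight_memory_gb(13, nbytes)
        ok = fits_in_vram(13, nbytes, vram_gb=24)
        print(f"13B @ {label}: ~{gb:.1f} GB weights, fits in 24 GB: {ok}")
```

Under these assumptions a 13B model at FP16 does not fit in 24GB, while the same model at INT8 does, which is why precision belongs in any GPU comparison.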

2. Free GPU Tools: Quick Checks vs. Critical Gaps

| Tool | Best For | Missing for AI Workloads |
| --- | --- | --- |
| UserBenchmark | Gaming GPU comparisons | Zero LLM/inference metrics |
| GPU-Z + HWMonitor | Temp/power monitoring | No multi-GPU cluster support |
| TechPowerUp DB | Historical game FPS data | Useless for Stable Diffusion |

⚠️ The Gap: None track token throughput or inference cost per dollar—essential for business decisions.

3. Enterprise GPU Metrics: The Trinity of Value

Forget specs. Measure what impacts your bottom line:

Throughput Value:

- Tokens/$ (e.g., Llama 2-70B: A100 = 42 tokens/$, RTX 4090 = 68 tokens/$)

- Images/$ (Stable Diffusion XL: 3090 = 1.2 images/$, A6000 = 0.9 images/$)

Cluster Efficiency:

- Idle time >15%? You’re burning cash.

- VRAM utilization <70%? Buy fewer GPUs.

True Ownership Cost:

- Cloud egress fees + power ($0.21/kWh × 24/7) + cooling can exceed hardware costs by 3×.
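The throughput and ownership-cost metrics above reduce to simple arithmetic. The sketch below shows one way to compute tokens per dollar from a measured throughput and an hourly price, and the yearly electricity bill for a GPU running 24/7. The function names and the example inputs are hypothetical; only the $0.21/kWh rate comes from the text.

```python
def tokens_per_dollar(tokens_per_second: float, cost_per_hour: float) -> float:
    """Tokens generated per dollar spent, given sustained throughput and hourly cost."""
    return tokens_per_second * 3600 / cost_per_hour


def annual_power_cost(avg_watts: float, price_per_kwh: float = 0.21,
                      hours: float = 24 * 365) -> float:
    """Electricity cost in dollars for a device drawing avg_watts continuously."""
    return avg_watts / 1000 * hours * price_per_kwh


if __name__ == "__main__":
    # Hypothetical measurements, for illustration only.
    print(f"{tokens_per_dollar(100, 2.0):,.0f} tokens per dollar")
    print(f"${annual_power_cost(400):,.2f} per year in electricity")
```

Feed it the sustained throughput from your own workload rather than a peak figure, since the ranking between two GPUs can flip once price is included.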

4. Pro Benchmarking: How to Test GPUs Like an Expert

Step 1: Standardize Everything

- Use identical Docker containers (e.g., nvcr.io/nvidia/pytorch:23.10)

- Fix ambient temp to 23°C (±1° variance allowed)

Step 2: Test Real AI Workloads

WhaleFlux API automates consistent cross-GPU testing:

    benchmark_id = whaleflux.create_test(
        gpus=["A100-80GB", "RTX_4090", "MI250X"],
        models=["llama2-70b", "sd-xl"],
        framework="vLLM 0.3.2",
    )
    results = whaleflux.get_report(benchmark_id)

Step 3: Measure These Hidden Factors

- Sustained Performance: Run 1-hour stress tests (peak ≠ real)

- Neighbor Effect: How performance drops when 8 GPUs share a rack (up to 22% loss!)

5. WhaleFlux: The Missing Layer in GPU Comparisons

Raw benchmarks ignore cluster chaos. Reality includes:

- Resource Contention: Three models fighting for VRAM? 40% latency spikes.

- Cold Starts: 45 seconds lost initializing GPUs per job.

WhaleFlux fixes this by:

- 📊 Unified Dashboard: Compare actual throughput across NVIDIA/AMD/Cloud GPUs

- 💸 Cost-Per-Inference Tracking: Live $/token calculations including hidden overhead

- ⚡ Auto-Optimized Deployment: Routes workloads to best-fit GPUs using benchmark data

Case Study: Generative AI startup ScaleFast reduced Mixtral 8x7B inference costs by 37% after WhaleFlux identified underutilized A10Gs in their cluster.

6. Your GPU Comparison Checklist

Define workload type:

- Training? Prioritize memory bandwidth.

- Inference? Focus on batch latency.

Run WhaleFlux Test Mode:

    whaleflux.compare(gpus=["A100", "L40S"], metric="cost_per_token")

Analyze Cluster Metrics:

- GPU utilization variance >15% = imbalance

- Memory fragmentation >30% = wasted capacity
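The utilization threshold above can be checked from per-GPU samples. The sketch below assumes "variance" means the spread, in percentage points, between the most and least utilized GPU; the text does not define the term, so treat that reading and the function names as assumptions. Memory fragmentation is not covered here because it needs allocator-level data.

```python
def utilization_spread(utilization_pct):
    """Gap in percentage points between the busiest and the idlest GPU."""
    return max(utilization_pct) - min(utilization_pct)


def is_imbalanced(utilization_pct, threshold_pct=15.0):
    """Flag a cluster whose utilization spread exceeds the threshold."""
    return utilization_spread(utilization_pct) > threshold_pct


if __name__ == "__main__":
    # Hypothetical per-GPU utilization samples (percent).
    cluster = [90, 85, 40, 88]
    print(utilization_spread(cluster), is_imbalanced(cluster))
```

On NVIDIA hardware the input samples can be collected with `nvidia-smi --query-gpu=utilization.gpu --format=csv,noheader,nounits`, averaged over a window long enough to smooth out short bursts.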

Project 3-Year TCO:

WhaleFlux’s Simulator factors in:

- Power cost spikes

- Cloud price hikes

- Depreciation curves

7. Future Trends: What’s Changing GPU Comparisons

- Green AI: Performance-per-watt now beats raw speed (e.g., L40S vs. A100)

- Cloud/On-Prem Parity: Test identical workloads in both environments simultaneously

- Multi-Vendor Clusters: WhaleFlux’s scheduler mixes NVIDIA + AMD + Cloud GPUs seamlessly

Conclusion: Compare Business Outcomes, Not Specs

The fastest GPU isn’t the one with highest TFLOPS—it’s the one that delivers:

- ✅ Highest throughput per dollar

- ✅ Lowest operational headaches

- ✅ Proven stability in your cluster

Next Step: Benchmark Your Stack with WhaleFlux → Get a free GPU Efficiency Report in 48 hours.

“We cut GPU costs by 41% without upgrading hardware—just by optimizing deployments using WhaleFlux.”

— CTO, Generative AI Scale-Up

The Ultimate GPU Benchmark Guide: Free Tools for Gamers, Creators & AI Pros

Introduction: Why GPU Benchmarks Matter

Think of benchmarks as X-ray vision for your GPU. They reveal real performance beyond marketing claims. Years ago, benchmarks focused on gaming. Today, they’re vital for AI, 3D rendering, and machine learning. Choosing the right GPU without benchmarks? That’s like buying a car without a test drive.

Free GPU Benchmark Tools Compared

Stop paying for tools you don’t need. These free options cover 90% of use cases:

| Tool | Best For | Why It Shines |
| --- | --- | --- |
| MSI Afterburner | Real-time monitoring | Tracks FPS, temps & clock speeds live |
| Unigine Heaven | Stress testing | Pushes GPUs to their thermal limits |
| UserBenchmark | Quick comparisons | Compares your GPU to others in seconds |
| FurMark | Thermal performance | “Stress test mode” finds cooling flaws |
| PassMark | Cross-platform tests | Works on Windows, Linux, and macOS |

Online alternatives: GFXBench (mobile/desktop), BrowserStack (web-based testing).

GPU Benchmark Methodology 101

Compare GPUs like a pro with these key metrics:

- Gamers: Prioritize FPS (frames per second) at your resolution (1080p/4K)

- AI/ML Pros: Track TFLOPS (compute power) and VRAM bandwidth

- Content Creators: Balance render times and power efficiency

Pro Tip: Always test in identical environments. Synthetic benchmarks (like 3DMark) show theoretical power. Real-world tests (actual games/apps) reveal true performance.

AI/Deep Learning GPU Benchmarks Deep Dive

For AI workloads, generic tools won’t cut it. Use these specialized frameworks:

- MLPerf Inference: Industry standard for comparing AI acceleration

- TensorFlow Profiler: Optimizes TensorFlow model performance

- PyTorch Benchmarks: Tests PyTorch model speed and memory use

Critical factors:

- Precision: FP16/INT8 throughput (higher = better)

- VRAM: 24GB+ needed for large language models like Llama 3

When benchmarking GPUs for AI workloads like Stable Diffusion or LLMs, raw TFLOPS only tell half the story. Real-world performance hinges on:

- GPU Cluster Utilization – Idle resources during peak loads

- Memory Fragmentation – Wasted VRAM from inefficient allocation

- Multi-Node Scaling – Communication overhead in distributed training

For enterprise AI teams: These hidden costs can increase cloud spend by 40%+ (AWS case study, 2024). This is where intelligent orchestration layers like WhaleFlux become critical:

- + Automatically allocates GPU slices based on model requirements

- + Reduces VRAM waste by 62% via fragmentation compression

- + Cuts cloud costs by prioritizing spot instances with failover

Application-Specific Benchmark Shootout

| Task | Key Metric | Top GPU (2024) | Free Test Tool |
| --- | --- | --- | --- |
| Stable Diffusion | Images/minute | RTX 4090 | AUTOMATIC1111 WebUI |
| LLM Inference | Tokens/second | H100 | llama.cpp |
| 4K Gaming | Average FPS | RTX 4080 Super | 3DMark (Free Demo) |
| 8K Video Editing | Render time (min) | M2 Ultra | PugetBench |

| Task | Top GPU (Raw Perf) | Cluster Efficiency Solution |
| --- | --- | --- |
| Stable Diffusion | RTX 4090 (38 img/min) | WhaleFlux Dynamic Batching: Boosts throughput to 52 img/min on same hardware |
| LLM Inference | H100 (195 tokens/sec) | WhaleFlux Quantization Routing: Achieves 210 tokens/sec with INT8 precision |

How to Compare GPUs Like a Pro

Follow this 4-step framework:

- Define your use case: Gaming? AI training? Video editing?

- Choose relevant tools: Pick 2-3 benchmarks from the free tools and AI frameworks listed above

- Compare price-to-performance: Calculate FPS/$ or Tokens/$

- Check thermal throttling: Run FurMark for 20 minutes – watch for clock speed drops

Avoid these mistakes:

- Testing only synthetic benchmarks

- Ignoring power consumption

- Forgetting driver overhead

The Hidden Dimension: GPU Resource Orchestration

While comparing individual GPU specs is essential, enterprise AI deployments fail when ignoring cluster dynamics:

- The 50% Utilization Trap: Most GPU clusters run below half capacity

- Power Spikes: Unmanaged loads cause thermal throttling

Tools like WhaleFlux solve this by:

✅ Predictive Scaling: Pre-warm GPUs before inference peaks

✅ Cost Visibility: Real-time $/token tracking per model

✅ Zero-Downtime Updates: Maintain 99.95% SLA during upgrades

Emerging Trends to Watch

- Cloud benchmarking: Test high-end GPUs without buying them (Lambda Labs)

- Energy efficiency metrics: Performance-per-watt becoming critical

- Ray tracing benchmarks: New tools like Portal RTX test next-gen capabilities

Conclusion: Key Takeaways

- No single benchmark fits all – match tools to your tasks

- Free tools like UserBenchmark and llama.cpp cover most needs

- For AI work, prioritize VRAM and TFLOPS over gaming metrics

- Always test real-world performance, not just specs

Pro Tip: Bookmark MLPerf.org and TechPowerUp GPU Database for ongoing comparisons.

Ready to test your GPU?

→ Gamers: Run 3DMark Time Spy (free on Steam)

→ AI Developers: Try llama.cpp with a 7B parameter model

→ Creators: Download PugetBench for Premiere Pro

Remember that maximizing ROI requires both powerful GPUs and intelligent resource management. For teams deploying LLMs or diffusion models:

- Use free benchmarks to select hardware

- Leverage orchestration tools like WhaleFlux to unlock 30-50% hidden capacity

- Monitor $/inference as your true north metric

How to Reduce AI Inference Latency: Optimizing Speed for Real-World AI Applications

Introduction

AI inference latency—the delay between input submission and model response—can make or break real-world AI applications. Whether deploying chatbots, recommendation engines, or computer vision systems, slow inference speeds lead to poor user experiences, higher costs, and scalability bottlenecks.

This guide explores actionable techniques to reduce AI inference latency, from model optimization to infrastructure tuning. We’ll also highlight how WhaleFlux, an end-to-end AI deployment platform, automates latency optimization with features like smart resource matching and 60% faster inference.

1. Model Optimization: Lighten the Load

Adopt Efficient Architectures

Replace bulky models (e.g., BERT) with distilled versions (e.g., DistilBERT) or mobile-friendly designs (e.g., MobileNetV3).

Use quantization (e.g., FP32 → INT8) to shrink model size without significant accuracy loss.
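To make the FP32 → INT8 idea concrete, here is a minimal sketch of symmetric per-tensor quantization in plain Python. It is one simple scheme among several and not what any particular toolkit does internally; the function names are illustrative. Each float is divided by a shared scale and rounded to an integer in [-127, 127], so every weight takes one byte instead of four, at the cost of a rounding error of at most half the scale.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # The scale maps the largest magnitude onto 127; fall back to 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers and the shared scale."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(q, f"scale={scale:.4f}", f"max error={worst:.6f}")
```

Production toolchains usually quantize per channel and calibrate on real data, which is where most of the accuracy is preserved.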

Prune Redundant Layers

Tools like TensorFlow Model Optimization Toolkit trim unnecessary neurons, reducing compute overhead by 20–30%.

2. Hardware Acceleration: Maximize GPU/TPU Efficiency

Choose the Right Hardware

- NVIDIA A100/H100 GPUs: Optimized for parallel processing.

- Google TPUs: Ideal for matrix-heavy tasks (e.g., LLM inference).

- Edge Devices (Jetson, Coral AI): Cut cloud dependency for real-time apps.

Leverage Optimization Libraries

CUDA (NVIDIA), OpenVINO (Intel CPUs), and Core ML (Apple) accelerate inference by 2–5×.

3. Deployment Pipeline: Streamline Serving

Use High-Performance Frameworks

- FastAPI (Python) or gRPC minimize HTTP overhead.

- NVIDIA Triton enables batch processing and dynamic scaling.

Containerize with Docker/Kubernetes

WhaleFlux’s preset Docker templates automate GPU-accelerated deployment, reducing setup time by 90%.

4. Autoscaling & Caching: Handle Traffic Spikes

Dynamic Resource Allocation

WhaleFlux’s 0.001s autoscaling response adjusts GPU/CPU resources in real time.

Output Caching

Store frequent predictions (e.g., chatbot responses) to skip redundant computations.
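A minimal sketch of output caching with Python's standard `functools.lru_cache` follows. The `run_model` function is a hypothetical stand-in for a real inference call, and the normalization step (lowercasing and collapsing whitespace) is an assumption about what counts as "the same" request in your application.

```python
from functools import lru_cache

CALLS = {"count": 0}  # counts how often the expensive path actually runs


def run_model(prompt: str) -> str:
    """Hypothetical stand-in for an expensive inference call."""
    CALLS["count"] += 1
    return f"echo: {prompt}"


@lru_cache(maxsize=1024)
def cached_predict(normalized_prompt: str) -> str:
    """Return a stored answer when this exact normalized prompt was seen before."""
    return run_model(normalized_prompt)


def predict(prompt: str) -> str:
    # Normalize so trivially different inputs share one cache entry.
    return cached_predict(" ".join(prompt.lower().split()))


if __name__ == "__main__":
    predict("What are your hours?")
    predict("  what are   your HOURS? ")
    print("model calls:", CALLS["count"], cached_predict.cache_info())
```

Caching like this is only safe when the same input should always produce the same output, so it suits deterministic or FAQ-style responses better than sampled generations.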

5. Monitoring & Continuous Optimization

Track Key Metrics

Latency (ms), GPU utilization, and error rates (use Prometheus + Grafana).
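Before wiring up a full monitoring stack, latency can be summarized with the standard library alone. The sketch below times a call in milliseconds and reports percentiles, which describe user experience better than an average because a few slow requests dominate the tail. The function names are illustrative.

```python
import statistics
import time


def time_call_ms(fn, *args):
    """Run fn(*args) once and return the elapsed wall-clock time in milliseconds."""
    start = time.perf_counter()
    fn(*args)
    return (time.perf_counter() - start) * 1000.0


def latency_summary(latencies_ms):
    """Summarize a list of latency samples (needs at least two samples)."""
    # quantiles(n=100) returns 99 cut points; index 49 is p50, 94 is p95, 98 is p99.
    cuts = statistics.quantiles(latencies_ms, n=100)
    return {
        "p50": cuts[49],
        "p95": cuts[94],
        "p99": cuts[98],
        "mean": statistics.fmean(latencies_ms),
    }


if __name__ == "__main__":
    samples = [time_call_ms(sum, range(100_000)) for _ in range(200)]
    print(latency_summary(samples))
```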

A/B Test Optimizations

- Compare quantized vs. full models to balance speed/accuracy.

- WhaleFlux’s full-stack observability pinpoints bottlenecks from GPU to application layer.

Conclusion

Reducing AI inference latency requires a holistic approach—model pruning, hardware tuning, and intelligent deployment. For teams prioritizing speed and cost-efficiency, platforms like WhaleFlux automate optimization with:

- 60% lower latency via smart resource allocation.

- 99.9% GPU uptime and self-healing infrastructure.

- Seamless scaling for high-traffic workloads.

Ready to optimize your AI models? Explore WhaleFlux’s solutions for frictionless low-latency inference.

How to Test LLMs: Evaluation Methods, Metrics, and Best Practices

Introduction

Large Language Models (LLMs) are reshaping how we interact with technology, from drafting emails to answering life-changing medical queries. But can we always trust the answers LLMs give? A small oversight in an email can be fixed quickly, but an inaccurate answer to a medical query may have serious repercussions.

The catch is straightforward: power comes with responsibility. While LLMs are capable of impressive feats, they are also prone to errors, biases, and unanticipated behaviors. Testing is therefore crucial to ensure that LLMs produce accurate, fair, and useful outputs in real-world applications.

This blog explains why LLM evaluation is essential and takes a deep dive into the metrics and methods for testing LLMs. By the end, you will have a clearer understanding of why testing isn’t an afterthought: it’s the backbone of building better, more reliable AI.

Why is LLM Testing Required?

We start by outlining the benefits of thorough LLM testing, each paired with a concrete application scenario.

Ensure Model Accuracy

Despite being trained on massive amounts of data, LLMs can still give inaccurate responses, ranging from nonsensical outputs to factually incorrect information. Testing plays a crucial role in identifying these inaccuracies and ensuring that the model produces reliable, factually correct outputs.

Example: In critical applications like healthcare consulting, responses must be precise. A model error could lead to incorrect medical advice or even harm to patients.

Detecting and Mitigating Bias

LLMs inherit biases present in their training data, such as gender, racial, or cultural biases. This can lead to discrimination or reinforce harmful stereotypes. Rigorous testing helps identify these biases, enabling developers to mitigate them and ensure fairness in the model’s behavior.

Example: In recruitment tools, LLMs are used to help evaluate resumes and job applications. If a model exhibits gender or racial bias, it could inadvertently favor or reject candidates based on characteristics unrelated to their qualifications.

Improving Robustness and Generalization

LLMs are often evaluated on their performance in ideal conditions, but real-world inputs can be much more varied and unpredictable. Robustness testing ensures that a model performs well even under edge cases or adversarial conditions.

Example: Consider a customer support chatbot designed for multilingual use. If the model is trained mainly on English datasets and generalizes poorly to other languages or dialects, it may fail to respond appropriately in non-English conversations.

Optimizing User Experience

Testing LLMs from a user experience (UX) perspective is vital for understanding how the model interacts with users. A well-tested model will generate outputs that are coherent, relevant, and engaging, enhancing user satisfaction and promoting retention.

Example: Any application that generates responses in context, such as a conversational assistant.

Regulatory and Ethical Compliance

For industrial AI applications, compliance with ethical guidelines and regulations is crucial. Testing for compliance helps ensure that LLMs do not violate privacy regulations and that they adhere to ethical standards.

Example: Personalized content recommendation systems may use user data to provide tailored experiences. Many regions, such as the European Union, have implemented data protection regulations like GDPR, which govern how personal data is used.

LLM Evaluation Metrics and Methods

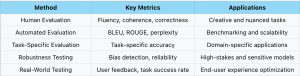

Now we explore common evaluation methods, the key metrics for each, and the scenarios in which they are most effective. The figure above shows the roadmap of this section.

Human Evaluation

Human evaluation means having users or experts assess LLM outputs. This approach is particularly useful for creative tasks where subjective judgment matters, and for tasks that require professional knowledge, where only domain experts can give a reliable evaluation. Human evaluators can rate the fluency, coherence, and correctness of the generated text, helping to assess whether the model meets human standards for quality.

Applications: Creative content generation, design, scientific domain, etc.

Automated Evaluation

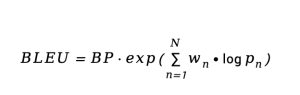

Automated evaluation uses computational metrics to assess the quality of LLM outputs. These metrics are often used for benchmarking models on standardized tasks and therefore appear mostly in research papers. Automated metrics follow precise mathematical definitions and can quickly process large volumes of data, making them ideal for testing models at scale. Many such metrics have been adopted for NLP tasks, such as the BLEU score in machine translation. We introduce several metrics that are widely used for evaluating NLP tasks.

- BLEU (Bilingual Evaluation Understudy)

BLEU compares the n-grams of the generated translation with a reference translation, assigning a score between 0 and 1. Higher BLEU scores indicate that the generated text is closer to the reference.

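$$\text{BLEU} = BP \cdot \exp\left(\sum_{n=1}^{N} w_n \log p_n\right)$$

where BP is the brevity penalty, $p_n$ is the precision of n-grams of order $n$, and $w_n$ is the weight given to each order (typically $1/N$).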
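As an illustration of how BLEU combines these pieces, here is a minimal single-reference, sentence-level sketch with uniform weights: clipped n-gram precisions are averaged in log space and multiplied by the brevity penalty. It is a teaching sketch under those assumptions, without the smoothing or multi-reference support found in established implementations, and the function names are illustrative.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count all n-grams of order n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, reference, n):
    """Share of candidate n-grams found in the reference, with counts clipped."""
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
    return clipped / total


def bleu(candidate, reference, max_n=4):
    """Brevity penalty times the geometric mean of n-gram precisions (uniform weights)."""
    precisions = [modified_precision(candidate, reference, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0  # without smoothing, one empty order zeroes the score
    log_avg = sum(math.log(p) for p in precisions) / max_n
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_avg)


if __name__ == "__main__":
    reference = "the cat sat on the mat".split()
    candidate = "the cat sat on a mat".split()
    print(f"BLEU-2: {bleu(candidate, reference, max_n=2):.4f}")
```

Clipping is what stops a candidate from scoring well by repeating a common word: "the the the the the the" gets a unigram precision of only 2/6 against the reference above.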

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation)

ROUGE is a set of metrics used for evaluating text summarization and other tasks where generating a relevant summary is the goal. It focuses on recall, measuring how many of the reference’s n-grams also appear in the generated text. A higher ROUGE score indicates better performance.

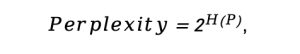
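The recall focus of ROUGE can be shown in a few lines. The sketch below computes ROUGE-N recall for a single reference: the share of reference n-grams that also occur in the generated text, with counts clipped. It covers only this one variant (no ROUGE-L, no stemming), and the function name is illustrative.

```python
from collections import Counter


def rouge_n_recall(candidate, reference, n=1):
    """Fraction of reference n-grams that also appear in the candidate."""
    ref = Counter(tuple(reference[i:i + n]) for i in range(len(reference) - n + 1))
    cand = Counter(tuple(candidate[i:i + n]) for i in range(len(candidate) - n + 1))
    total = sum(ref.values())
    if total == 0:
        return 0.0
    # Clip each match so a repeated candidate n-gram cannot be counted twice.
    overlap = sum(min(count, cand[gram]) for gram, count in ref.items())
    return overlap / total


if __name__ == "__main__":
    reference = "the cat sat on the mat".split()
    summary = "the cat lay on the mat".split()
    print(f"ROUGE-1 recall: {rouge_n_recall(summary, reference, 1):.3f}")
    print(f"ROUGE-2 recall: {rouge_n_recall(summary, reference, 2):.3f}")
```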

- Perplexity

Perplexity measures how well a probabilistic model predicts a sample. It is commonly used for evaluating language models, indicating how effectively the model predicts the next word/token in a sequence. A lower perplexity value indicates that the model is better at predicting the next token. It’s often used as a proxy for fluency and coherence.

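$$\text{PPL} = 2^{H(p)}$$

where $H(p)$ is the cross-entropy of the model’s predicted distribution, measured in bits per token (with natural logarithms, the base becomes $e$).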
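A worked example makes the relationship between cross-entropy and perplexity easier to see. The sketch below assumes you already have the probability the model assigned to each token that actually came next; it averages the negative log2 of those probabilities and raises 2 to that power. The function name is illustrative.

```python
import math


def perplexity(token_probs):
    """Perplexity from the probabilities assigned to the actual next tokens."""
    # Cross-entropy in bits per token: average negative log2 probability.
    cross_entropy = -sum(math.log2(p) for p in token_probs) / len(token_probs)
    return 2 ** cross_entropy


if __name__ == "__main__":
    confident = [0.9, 0.8, 0.95, 0.85]
    unsure = [0.25, 0.25, 0.25, 0.25]
    print(f"confident model: {perplexity(confident):.2f}")
    print(f"unsure model:    {perplexity(unsure):.2f}")
```

A model that gives every correct token a probability of 0.25 has a perplexity of 4: it is as uncertain as if it were choosing uniformly among four options at each step.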

Application: machine translation, summarization, question-answering systems, etc.

Task-Specific Evaluation

LLMs have shown potential on increasingly challenging tasks, which call for dedicated metrics to evaluate performance thoroughly. These metrics are directly tied to the model’s ability to solve complicated real-world problems. For example, to evaluate the scientific question-answering performance of LLMs, recent research proposed a metric called RACAR, leveraging the power of GPT-4. This metric consists of five dimensions: Relevance, Agnosticism, Completeness, Accuracy and Reasonableness. Indeed, using GPT-4 to design evaluation metrics is already happening!

Applications: education, scientific domains, medical diagnosis, legal document analysis.

Robustness Testing

Robustness testing ensures that LLMs can handle edge cases, adversarial inputs, and unexpected conditions. For sensitive applications, this type of testing is critical to avoid disastrous consequences. It also includes bias detection to ensure the model doesn’t make discriminatory decisions based on problematic inputs. Concrete assessments include adversarial attack tests, fairness tests, out-of-distribution tests, and perturbation tests.

Applications: autonomous vehicles, healthcare, and other high-risk applications.

Real-World Testing

Real-world testing involves collecting feedback from end users and observing how they interact with the model in practical scenarios. Metrics like user satisfaction, task success, and engagement help developers understand how well the model meets user needs. If the LLM targets human users, this method overlaps closely with human evaluation. Once the LLM system is deployed, testing should also cover system stability, inference latency, and resource consumption.

Best Practices and Case Studies

Several companies have successfully improved their LLM performance through extensive testing. One example is Claude, an LLM developed by Anthropic, whose newer generation improved as a result of robustness testing.

In the early stages, Claude 1 showed significant weaknesses in handling adversarial inputs, such as biased queries and subtle misleading prompts. It also struggled with complex, multi-step reasoning tasks. Anthropic conducted extensive robustness testing focusing on bias detection, safety, and accuracy under ambiguity. They tested Claude’s performance using adversarial inputs, ethical dilemma questions, and complex logical problems.

Anthropic fine-tuned Claude 2 using more robust, curated datasets focused on reducing bias and improving long-term reasoning. As a result, Claude 2 showed a substantial improvement in safety (it was less likely to produce biased or harmful responses) and in logical coherence on multi-step or complex reasoning tasks. It also demonstrated better resilience to adversarial inputs, providing more accurate, contextually appropriate answers even when the input was ambiguous or adversarial.

Popular Tools for Testing LLMs

Several tools have emerged to support LLM testing. One notable tool is WhaleFlux.

Other popular LLM testing tools include:

- Hugging Face’s transformers library: Provides easy access to a range of pre-trained models and benchmarking datasets.

- OpenAI Evals (OpenAI’s open-source evaluation framework): Allows developers to assess GPT models on various metrics like task completion, creativity, and coherence.

Conclusion

Testing LLMs is an essential step in ensuring that models are accurate, fair, robust, and optimized for user experience. From human evaluations to real-world user feedback, a comprehensive testing strategy is key to addressing the challenges posed by LLMs in practical applications.

We encourage developers to adopt these strategies in their LLM development and testing pipelines, and to continuously iterate on their models based on feedback and testing results. Share your experiences and feedback to contribute to the ongoing improvement of LLMs!

Mastering LLM Inference: A Comprehensive Guide to Inference Optimization

Introduction

Running a state-of-the-art LLM in production takes more than having the right model. It’s like owning a high-performance sports car but being stuck in traffic. The model has the power to generate insights, but without a proper inference mechanism, you’re left idling, wasting time and resources. As LLMs grow larger, the inference process becomes the bottleneck that can turn even the most advanced model into a sluggish, expensive system.

Inference optimization is the key to unlocking that potential. It’s not just about speeding things up; it’s about refining the engine — finding the sweet spot between performance and resource consumption to enable scalable, efficient AI applications. In this blog, we will show you how to optimize your LLM inference pipeline to keep your AI running at full throttle. From hardware acceleration to advanced algorithms and distributed computing, optimizing inference is what allows LLMs to get ready for high-demand, real-time tasks.

Understanding LLM Inference

Before diving into optimization techniques, it’s crucial to understand the two core steps of LLM inference: prefill and decoding.

- Prefill: Tokenization and Contextualization

In the prefill stage, the model receives an input, typically text, and breaks it down into tokens. These tokens are converted into numerical representations and processed by the network in a single parallel pass. The goal of prefill is to build the context (in practice, the cached attention keys and values) from which the model can begin its generative task.

- Decoding: The Generation Phase

Once the input is tokenized and contextualized, the model begins to generate output, one token at a time, based on the patterns it learned during training. The efficiency of this phase dictates the overall performance of the system, especially in latency-sensitive applications.

However, decoding isn’t as straightforward as it seems. Generating text, for example, can require vast amounts of computation. Longer sequences, higher complexity in prompt structure, and the model’s size all contribute to making this phase resource-demanding.

- Challenges

The challenges in LLM inference lie primarily in latency, computation cost, and memory consumption. As models grow in size, they require more computational power and memory to generate reliable responses, making optimization essential for practical deployment.

How to Optimize LLM Inference

Now for the main course. We cover common LLM inference optimization techniques, grouped by the part of the pipeline they target: hardware, algorithms, system, deployment, and external tools.

- Hardware Acceleration. LLM inference can benefit from using a combination of CPUs, GPUs, and specialized hardware like TPUs and FPGAs.

- Leveraging heterogeneous hardware for parallel inference.

GPUs are well-suited for parallel processing tasks and excel at handling the matrix operations common in LLMs. For inference, distributing workloads across multiple GPUs, while utilizing CPUs for lighter orchestration, can significantly reduce latency and improve throughput.

By combining GPUs and CPUs in a heterogeneous architecture, you can ensure each hardware component plays to its strengths—CPUs handling sequential operations and GPUs accelerating tensor calculations. This dual approach maximizes performance and minimizes cost, especially in cloud-based and large-scale deployments.

- Specialized Hardware: TPUs and FPGAs

TPUs (Tensor Processing Units) are purpose-built for deep learning tasks and optimized for matrix multiplication, which is essential to LLM training and inference. TPUs can outperform GPUs for some workloads, especially in large-scale inference scenarios. FPGAs (Field-Programmable Gate Arrays), on the other hand, offer customization, enabling users to create highly efficient hardware accelerators tailored to specific inference tasks, though they can be more complicated to implement.

Each of these specialized units—GPUs, TPUs, and FPGAs—can accelerate LLM inference, but the choice of hardware should align with the specific needs of your application, balancing cost, speed, and scalability.

- Algorithmic Optimization.

Efficient algorithms are at the heart of systematic optimization. The context here is diverse, ranging from the design of the self-attention mechanism to task-specific scenarios. Below, we list a few common techniques for faster LLM inference.

- Selective Context Compression

LLMs often handle long contexts to generate coherent text. Selective context compression involves identifying and pruning less relevant information from the input, allowing the model to focus on the most crucial parts of the text. This reduces the amount of data processed, cutting down both memory usage and inference time. This technique is especially useful for real-time applications where input lengths can vary dramatically, allowing the model to scale efficiently without sacrificing output quality.

- Speculative Decoding

LLMs are mostly autoregressive models, where tokens are generated one by one, with each token prediction depending on the previous ones. Speculative decoding works differently: a small, fast draft model proposes several tokens ahead, and the large model verifies them in a single parallel pass, keeping the tokens it agrees with. This cuts the number of sequential steps the large model has to take. The challenge lies in managing the proposed tokens: rejected drafts must be discarded so that the final output matches what the large model would have produced on its own.
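Speculative decoding is commonly implemented with a small draft model that proposes tokens and a large target model that verifies them. The sketch below shows the greedy variant of that idea with toy stand-ins: `target_next` and `draft_next` are hypothetical functions that return the next token for a context, not real models. It omits the extra token real systems gain when every draft is accepted, and it verifies drafts in a loop where a real system would use one batched forward pass.

```python
def target_next(context):
    """Toy 'large model': counts upward modulo 10."""
    return (context[-1] + 1) % 10


def draft_next(context):
    """Toy 'draft model': agrees with the target except right after token 4."""
    return 0 if context[-1] == 4 else (context[-1] + 1) % 10


def greedy_decode(prompt, next_token, num_new_tokens):
    """Baseline: one model call per generated token."""
    tokens = list(prompt)
    for _ in range(num_new_tokens):
        tokens.append(next_token(tokens))
    return tokens


def speculative_decode(prompt, target, draft, num_new_tokens, k=4):
    """Draft k tokens cheaply, then keep the prefix the target agrees with."""
    tokens = list(prompt)
    goal = len(prompt) + num_new_tokens
    verification_passes = 0
    while len(tokens) < goal:
        # 1. The draft model proposes up to k tokens, one after another.
        proposed = []
        for _ in range(min(k, goal - len(tokens))):
            proposed.append(draft(tokens + proposed))
        # 2. The target checks each drafted position (one batched pass in practice).
        verification_passes += 1
        base = list(tokens)
        for i, guess in enumerate(proposed):
            expected = target(base + proposed[:i])
            tokens.append(expected)  # always keep the target's own choice
            if expected != guess:
                break  # 3. First mismatch: discard the remaining drafts.
    return tokens, verification_passes


if __name__ == "__main__":
    out, passes = speculative_decode([0], target_next, draft_next, 12, k=4)
    print(out, "verification passes:", passes)
```

Because the target's token is kept at every position, the output is identical to plain greedy decoding with the target model; the gain is that the target runs fewer sequential passes when the draft guesses well.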

- Continuous Batching

In continuous batching, the server schedules work at the level of individual decoding steps rather than whole requests. Incoming requests wait in a queue only until a slot frees up: as soon as one sequence in the running batch finishes, a queued request takes its place, so the GPU is not left waiting for the longest sequence in the batch. This approach is most effective for applications with large request streams, such as large-scale search engines or recommendation systems. One significant challenge is adaptively choosing the batch size for different application scenarios.
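One common reading of continuous batching is iteration-level scheduling: a waiting request joins the running batch as soon as any slot frees up, instead of waiting for the whole batch to finish. The simulation below compares that policy with fixed batches by counting decoding iterations. It is a scheduling toy under that assumption, with no model involved; each request is represented only by the number of tokens it still has to generate.

```python
from collections import deque


def static_batching_steps(lengths, batch_size):
    """Fixed batches: each batch runs until its longest request finishes."""
    total = 0
    for i in range(0, len(lengths), batch_size):
        total += max(lengths[i:i + batch_size])
    return total


def continuous_batching_steps(lengths, batch_size):
    """Iteration-level scheduling: refill free slots before every decoding step."""
    queue = deque(lengths)
    running = []  # remaining decode steps for each in-flight request
    steps = 0
    while queue or running:
        # Admit waiting requests into any free slots.
        while queue and len(running) < batch_size:
            running.append(queue.popleft())
        # One decoding iteration: every in-flight request emits one token.
        steps += 1
        running = [r - 1 for r in running if r - 1 > 0]
    return steps


if __name__ == "__main__":
    # Hypothetical output lengths (tokens) for six requests.
    lengths = [2, 8, 2, 2, 8, 2]
    print("static:    ", static_batching_steps(lengths, batch_size=2))
    print("continuous:", continuous_batching_steps(lengths, batch_size=2))
```

The gap between the two counts grows with the variance in output lengths, which is why the technique matters most for mixed traffic.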

- Distributed Computing. A common technique in LLM training, distributed computing can also be applied during inference. By spreading the workload across multiple servers or GPUs, we gain more resources to handle inference with larger models. One good example is parallel decoding.

- Parallel Decoding

Parallel decoding optimizes inference by allowing multiple tokens to be processed simultaneously. This technique splits the task across multiple computational units and can therefore speed up the inference process. It is particularly useful for batch processing, where large amounts of data need to be processed in a short time frame. However, parallel decoding can strain memory resources, especially for large models. Balancing the batch size against memory usage is therefore crucial to preventing bottlenecks.

In addition to reducing latency and accommodating larger models for inference, distributed computing offers other benefits, such as load balancing and fault tolerance. It becomes easier to manage traffic spikes and prevent any single unit from becoming overloaded. This enhances the overall reliability and availability of the system, allowing for consistent inference performance under heavy load.

- Industrial Inference Frameworks. For organizations looking to deploy LLMs at scale, there are frameworks like DeepSpeed and TurboTransformers that offer ready-made solutions to streamline the inference process. Both frameworks are open-sourced.

- DeepSpeed

Developed by Microsoft, DeepSpeed is a powerful framework that provides tools for model parallelism and pipeline parallelism, enabling large models to be split across multiple devices for faster inference. Its Zero Redundancy Optimizer (ZeRO) reduces memory overhead by partitioning model state (parameters and, during training, gradients) across devices. Inference can leverage ZeRO’s parameter partitioning to fit larger models on devices with limited memory. DeepSpeed also incorporates techniques like activation checkpointing and quantization to further optimize resource usage.

- TurboTransformers

Built specifically for transformer-based models, TurboTransformers focuses on techniques like tensor fusion and dynamic quantization to optimize the execution of large models. One of its standout features is an efficient attention mechanism: by optimizing attention through techniques like block sparse attention or local attention, it reduces the time complexity of the attention layers. One apparent limitation of TurboTransformers is its lack of flexibility with other model types. In addition, it relies heavily on NVIDIA’s CUDA ecosystem.

The principle of serving and deployment is straightforward: provide the best customer experience at a reasonably small cost. However, it is non-trivial to implement in production environments, which require careful attention to performance and resource utilization.

The techniques mentioned above can all contribute to effective and efficient LLM serving. Deployment usually involves substantial engineering effort, such as user interaction design and Docker containers. In addition, we introduce another practice for inference optimization in the serving and deployment phase: mixture-of-experts.

- Mixture-of-experts (MoE) Models

MoE divides the LLM into multiple expert sub-networks, each specializing in particular kinds of input. A gating (router) network activates only a subset of these experts for each inference request, which enables faster responses with little or no loss in accuracy, as only the most relevant experts are engaged.
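The routing idea can be sketched in a few lines. In the toy example below, the experts are plain Python functions and the gate scores are passed in directly. In a real MoE layer the experts are neural sub-networks and the scores come from a learned router, so all names here are illustrative assumptions.

```python
import math
from typing import Callable, List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over the gate scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float, gate_scores: Sequence[float],
                experts: Sequence[Callable[[float], float]], top_k: int = 2) -> float:
    """Route x to the top_k highest-scoring experts and mix their outputs,
    weighted by renormalized gate probabilities. Other experts are never called."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return sum(probs[i] / norm * experts[i](x) for i in chosen)


if __name__ == "__main__":
    experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: -x, lambda x: x * x]
    # The (hypothetical) router strongly prefers experts 0 and 1 for this input.
    print(moe_forward(3.0, [2.0, 2.0, -5.0, -5.0], experts, top_k=2))
```

Only two of the four experts run for this input, which is where the compute savings come from.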

Case Study and Real-world Applications

LLM inference optimization has been driving impactful transformation across industries, and many organizations have already reaped the rewards of these advancements. In healthcare, for example, MedeAnalytics and PathAI have successfully integrated LLMs for diagnostic assistance and record summarization. The selective context compression technique has been applied to help prioritize crucial patient data, thus improving efficiency.

Another example is social media, where user-generated content is a primary driver of engagement on online forums, video-sharing websites, and similar platforms. Multi-modal LLMs have been actively studied for text and image analysis and generation. Many of the techniques mentioned above have been adopted in both the training and inference phases.

Challenges: Where LLM Inference Still Struggles

Even in Manhattan, with the most efficient city planning and traffic control, drivers still get stuck from time to time. The same is true of LLM inference: models keep growing, capturing more modalities and accommodating more downstream domains. The core difficulty is the efficiency-accuracy trade-off, which varies by application. Can we design an LLM system that achieves the optimal trade-off? If so, what compromise is reasonable? Beyond these high-level challenges, there are several concrete scenarios where better techniques would be helpful.

- Inference optimization for multi-modal LLMs.

- In-context generation with long interaction.

- Efficient load-balancing in dynamic environments.

Conclusion

Optimizing LLM inference is essential for making these advanced models practical and scalable in real-world applications. This blog explores key techniques that enhance LLM inference performance, covering various aspects of the LLM ecosystem, including hardware, algorithms, system architecture, deployment, and external tools. While current optimization methods are already sophisticated and versatile, the growing integration of LLMs across industries presents new challenges that will require continued innovation in inference efficiency.

Maximizing Efficiency in AI: The Role of LLM Serving Frameworks

Introduction

In the vast and ever-evolving landscape of artificial intelligence, LLM serving stands out as a pivotal component for deploying sophisticated machine learning models. At its core, LLM serving refers to the methodologies and technologies used to deliver large language models’ capabilities to end-users seamlessly. Large language models, or LLMs, serve as the backbone of numerous applications, providing the ability to parse, understand, and generate human-like text in real-time. Their significance extends beyond mere novelty, as they are reshaping how businesses operationalize AI to gain actionable insights and propel their customer experiences.

The evolution of LLM serving technology is a testimony to the AI industry’s commitment toward efficiency and scalability. Pioneering technologists in AI infrastructures recognized the need for robust, auto-scalable solutions that could not only withstand the growing demands but also streamline the complexity entailed in deploying and managing these cognitive powerhouses. Today, as we witness the blossoming of AI businesses across the globe, LLM serving mechanisms have become central to successful AI strategies, representing one of the most discussed topics within the industry.

Embracing an integrated framework for LLM serving paves the way for organizations to harvest the full spectrum of AI’s potential — making this uncharted territory an exciting frontier for developers, enterprises, and technology enthusiasts alike.

Understanding LLMs in AI

Large Language Models (LLMs) are revolutionizing the way we interact with artificial intelligence. These powerful tools can understand and generate human-like text, making them indispensable in today’s AI industry. But what exactly are LLMs, and why are they so significant?

LLMs are advanced machine learning models that process and predict language. They are trained on vast amounts of text data, learning the nuances of language structure and meaning. This training enables them to perform a variety of language-related tasks, such as translation, summarization, and even creative writing.

In the current AI landscape, LLMs play a pivotal role. They power chatbots, aid in customer service, enhance search engine results, and provide smarter text predictions. Their ability to understand context and generate coherent responses has made them vital for businesses seeking to automate and improve communication with users.

Popular examples of LLMs include OpenAI’s GPT-3 and Google’s BERT. GPT-3, known for its ability to produce human-like text, can write essays, create code, and answer questions with a high degree of accuracy. BERT, on the other hand, is designed to understand the context of words in search queries, improving the relevancy of search engine results.

By integrating LLMs, industries are witnessing a significant transformation in how machines understand and use human language. As these models continue to evolve, their potential applications seem limitless, promising a future where AI can communicate as naturally as humans do.

Key Components of LLM Serving

When deploying large language models (LLMs) like GPT-3 or BERT, understanding the server and engine components is crucial. The server acts as the backbone, processing requests and delivering responses. Its computing power is essential, as it directly influences the efficiency and speed with which the LLM operates. High-powered servers can rapidly perform complex language model inferences, translating to quicker response times and a smoother user experience. The ability to service multiple requests concurrently without delay is paramount, especially when using resource-intensive LLMs.

Meanwhile, the engine of the serving system is akin to the brain of the operation: it is where the algorithms interpret input data to produce human-like text. The engine’s performance hinges on the server’s ability to provide the necessary computing power, which comes from high-quality CPUs and GPUs and sufficient memory for processing.

For an LLM to deliver its full potential, the server and engine must work in unison, leveraging high throughput and low latency to ensure user satisfaction. Auto-scaling capabilities and AI-specific infrastructures further empower these components, providing dynamic resource allocation to match demand. This ensures that services remain responsive across varying workloads, ultimately delivering a consistently efficient user experience.

In essence, the interplay between a server’s computing power and the LLM engine is a dance of precision and power, with each component magnifying the other’s effectiveness. A robust server infrastructure elevates the LLM’s performance, turning AI’s promise into a reality across user interactions.

LLM Serving Frameworks: A Comparative Analysis

When it comes to deploying large language models (LLMs), selecting the right serving framework is crucial. It’s not just about keeping the lights on; it’s about blazing a trail for efficient, scalable LLM inference that can keep pace with your needs. Let’s examine frameworks such as TensorRT-LLM, vLLM, and Ray Serve to see how they stack up.

TensorRT-LLM: The Speed Demon

NVIDIA’s TensorRT-LLM is known for delivering low latency and high throughput, both essential for rapid LLM deployment. It is a high-performance inference optimizer and runtime that can scale a model across multiple NVIDIA GPUs. In CUDA-enabled environments, TensorRT-LLM shines by compiling and tuning models for peak performance on the target hardware, ensuring the available computing power is well utilized. When throughput takes precedence, TensorRT-LLM is a strong choice.

vLLM: The Memory Magician

vLLM stands out for its approach to memory optimization. It is designed for scenarios where GPU memory is the bottleneck yet high-speed LLM inference is non-negotiable. Offering a compromise between strong performance and modest hardware demands, vLLM is a valuable asset, particularly in resource-constrained environments where conserving memory is paramount. If you’re wrestling with memory constraints but can’t compromise on speed, vLLM warrants serious consideration.
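vLLM’s memory savings come largely from PagedAttention (described later in this article), which stores each sequence’s KV cache in fixed-size blocks rather than one large contiguous reservation. The toy allocator below sketches only the bookkeeping side of that idea. The class and method names are hypothetical and do not mirror vLLM’s internal API.

```python
from typing import Dict, List


class PagedKVCache:
    """Toy block allocator: each sequence owns a list of fixed-size blocks
    (its "block table") instead of one large contiguous reservation."""

    def __init__(self, num_blocks: int, block_size: int) -> None:
        self.block_size = block_size
        self.free_blocks: List[int] = list(range(num_blocks))
        self.block_tables: Dict[str, List[int]] = {}
        self.lengths: Dict[str, int] = {}

    def append_token(self, seq_id: str) -> None:
        """Reserve room for one more token; allocate a block only when the last one is full."""
        length = self.lengths.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length == len(table) * self.block_size:
            if not self.free_blocks:
                raise MemoryError("no free KV-cache blocks")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1

    def free(self, seq_id: str) -> None:
        """Return a finished sequence's blocks to the shared pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


if __name__ == "__main__":
    cache = PagedKVCache(num_blocks=4, block_size=16)
    for _ in range(17):          # 17 tokens need 2 blocks of 16
        cache.append_token("request-a")
    print(cache.block_tables, "free:", len(cache.free_blocks))
```

Because memory is claimed one block at a time, a short request never reserves space for the maximum sequence length, and freed blocks are immediately reusable by other requests.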

Ray Serve: The Flexible Powerhouse

With simplicity and flexibility at its core, Ray Serve offers a serving solution that is model-agnostic and works well in diverse computational settings. Its auto-scaling, driven by incoming traffic, adjusts resource allocation while maintaining low latency. This makes Ray Serve ideal for those who desire a straightforward yet robust framework capable of dynamically adapting to fluctuating demands.
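Traffic-based auto-scaling of the kind described above usually boils down to a small calculation: how many replicas are needed so that each one handles roughly a target number of in-flight requests. The sketch below shows that calculation in isolation. The function and parameter names are assumptions for illustration, not the framework’s actual configuration keys or scaling policy.

```python
import math


def desired_replicas(ongoing_requests: int, target_per_replica: int,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Pick a replica count so each replica handles about `target_per_replica`
    in-flight requests, clamped to [min_replicas, max_replicas]."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    needed = math.ceil(ongoing_requests / target_per_replica)
    return max(min_replicas, min(max_replicas, needed))


if __name__ == "__main__":
    for load in (0, 23, 1000):
        print(load, "requests ->", desired_replicas(load, target_per_replica=5), "replicas")
```

The lower bound keeps at least one replica warm during quiet periods, and the upper bound caps cost during traffic spikes.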

Benchmarking for Your Needs

Benchmarking these frameworks against your specific requirements is essential. Throughput, latency, and memory usage are the critical metrics to appraise. While TensorRT-LLM may boast superior throughput, vLLM could address your memory constraints with finesse. Ray Serve, with its auto-scaling abilities, helps keep LLM deployment well managed under varying loads.

Making an informed decision on the LLM serving framework affects the success of your application. By weighing your performance needs against your constraints, you can pinpoint a framework that satisfies immediate requirements and grows in tandem with your long-term goals. Whether you prioritize the sheer speed of TensorRT-LLM, the memory efficiency of vLLM, or the adaptability of Ray Serve, the right serving framework is key to meeting your LLM inference challenges.
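A minimal harness for the latency and throughput side of such a benchmark might look like the following. It assumes a hypothetical `generate(prompt)` callable that wraps whichever framework you are testing and returns the number of tokens produced. Memory usage is not covered here and would need to be read from the GPU separately.

```python
import math
import statistics
import time
from typing import Callable, Dict, List


def summarize(latencies_s: List[float], tokens_generated: int, wall_time_s: float) -> Dict[str, float]:
    """Reduce raw measurements to median latency, p95 latency, and throughput."""
    if not latencies_s or wall_time_s <= 0:
        raise ValueError("need at least one latency sample and a positive wall time")
    ordered = sorted(latencies_s)
    p95_index = math.ceil(0.95 * len(ordered)) - 1  # nearest-rank percentile
    return {
        "p50_latency_s": statistics.median(ordered),
        "p95_latency_s": ordered[p95_index],
        "tokens_per_s": tokens_generated / wall_time_s,
    }


def benchmark(generate: Callable[[str], int], prompts: List[str]) -> Dict[str, float]:
    """Call `generate` once per prompt, sequentially, and summarize the timings.
    `generate` is a hypothetical wrapper that returns the number of tokens produced."""
    latencies: List[float] = []
    tokens = 0
    start = time.perf_counter()
    for prompt in prompts:
        t0 = time.perf_counter()
        tokens += generate(prompt)
        latencies.append(time.perf_counter() - t0)
    return summarize(latencies, tokens, time.perf_counter() - start)
```

Running the same prompts through each candidate framework, on the same hardware, gives numbers that can be compared directly. A sequential loop like this measures single-request latency; measuring throughput under load would require issuing requests concurrently.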

Challenges and Solutions in LLM Serving

Scaling LLM deployment to meet the high demands of modern user bases presents notable challenges. One primary concern for many organizations is the resource-intensive nature of these models, which can result in significant costs and technical constraints. Latency is another critical issue, with the need to provide real-time responses often at odds with the computational complexity involved in LLM inference.

Innovative solutions, such as PagedAttention and continuous batching, have emerged to address these hurdles. PagedAttention reduces memory consumption during inference by managing the attention key-value (KV) cache in fixed-size blocks, much like pages in virtual memory, so memory is allocated only as a sequence grows. This allows sophisticated LLMs to be served on more modest hardware without compromising processing speed. Continuous batching schedules work at the level of individual decoding steps: finished requests leave the batch and waiting requests join it immediately, instead of waiting for the whole batch to complete. This keeps the GPU busy, raises throughput, and reduces the time requests spend queued.
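The benefit of continuous batching is easiest to see in a small simulation. The sketch below counts decoding steps for a list of requests, each needing a given number of output tokens, under two policies: static batching, where a batch runs until its longest request finishes, and continuous batching, where freed slots are refilled after every step. It is a simplified model (one token per request per step, no prefill cost), and the function names are illustrative.

```python
from collections import deque
from typing import List


def static_batching_steps(lengths: List[int], batch_size: int) -> int:
    """Each batch runs until its longest request finishes before the next batch starts."""
    return sum(max(lengths[i:i + batch_size]) for i in range(0, len(lengths), batch_size))


def continuous_batching_steps(lengths: List[int], batch_size: int) -> int:
    """After every decoding step, finished requests leave and queued ones take their slots."""
    queue = deque(lengths)
    running: List[int] = []  # tokens still to generate for each in-flight request
    steps = 0
    while queue or running:
        while queue and len(running) < batch_size:
            running.append(queue.popleft())
        # One decoding step: every running request emits one token.
        running = [r - 1 for r in running if r - 1 > 0]
        steps += 1
    return steps


if __name__ == "__main__":
    output_lengths = [8, 1, 1, 1]  # one long request and three short ones
    print("static:", static_batching_steps(output_lengths, batch_size=2))
    print("continuous:", continuous_batching_steps(output_lengths, batch_size=2))
```

With one long request and three short ones, the short requests no longer wait behind the long one, so the continuous policy finishes in fewer total steps and the short requests complete much earlier.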

The key to success in LLM serving lies in striking the perfect balance between resource allocation, cost management, and system responsiveness. By employing these innovative techniques, organizations can improve efficiency, reduce overhead, and maintain a competitive edge in the fast-paced world of AI.

The future of LLM serving hinges on advances in the infrastructure and strategies used to deliver LLM capabilities to end-users efficiently. As the serving layer evolves, AI businesses will likely see more robust, adaptable, and cost-effective solutions emerge. This will affect not only the accessibility and scalability of LLMs but also the breadth of applications and services AI companies can offer.

Enhancements in LLM serving tech are on track to streamline complex AI operations, making it easier for businesses to implement sophisticated natural language processing features. This will facilitate new heights of personalization and automation within the industry, fueling innovation and potentially altering the competitive landscape.

To sum up, the progression in LLM serving is crucial for shaping the application of Large Language Models within AI businesses, promising to drive growth and transformative change across the AI industry.

The Future-Proofing of AI: Strategic Management of Computing Power and Predictions in Industry Advancements

Introduction to Computing Power in the AI Field

In the field of artificial intelligence (AI), computing power is the crucial pillar supporting the development and application of AI technologies. This power is foundational—it enables the processing of large datasets, runs complex algorithms, and accelerates the pace of innovation. Thus, efficient management of computing resources is essential for the advancement and sustainability of AI projects and ventures.

The Challenges with Managing Computing Power

The effort to harness and manage computing power within the field of artificial intelligence is riddled with intricate challenges, each a potential roadblock to optimal operations. These challenges call for vigilant management and innovative solutions to ensure that the infrastructure supporting an AI-driven environment is both robust and adaptable.

Key challenges include:

Scaling Infrastructure: As the demand for AI workloads grows, the infrastructure must scale commensurately. This is a two-pronged challenge involving physical hardware expansion, and the seamless integration of this hardware into existing systems to avoid performance bottlenecks and compatibility issues.

Energy Efficiency: The demands of AI workloads are significant, often leading to elevated energy consumption which in turn increases operational costs and carbon footprints. Finding ways to reduce energy use without sacrificing performance requires the implementation of sophisticated power management strategies and possibly the overhaul of traditional data center designs.

Heat Dissipation: The high-performance computing necessary for AI generates substantial heat. Designing and maintaining cooling solutions that are both effective and energy-efficient is critical to protect the longevity of hardware components and ensure continued optimal performance.

Allocation and Scheduling: Effective utilization of computing resources necessitates that tasks are prioritized and scheduled to optimize the usage of every GPU in the cluster. This involves complex decision-making processes, often relying on sophisticated algorithms that can dynamically adjust to the changing demands of AI workloads.

Investing in Innovation: The fast-paced nature of AI technology means that new and potentially game-changing innovations are continually on the horizon. Deciphering which new technologies to invest in—and when—requires a deep understanding of the trajectory of AI and its resultant computational demands.

Security Concerns: The valuable data processed and stored for AI tasks makes it a prime target for cyber threats. Ensuring the integrity and confidentiality of this data requires a multi-layered security approach that is robust and ahead of potential vulnerabilities.

Maintaining Flexibility: The computing infrastructure must remain flexible to adapt to new AI methodologies and data processing techniques. This flexibility is key to leveraging advancements in AI while maintaining the relevance and effectiveness of existing computing resources.

Meeting these challenges head-on is essential for the sustainability and progression of AI technologies. The following segments of this article will delve into practical strategies, tools, and best practices for overcoming these obstacles and optimizing computing power for AI’s dynamic demands.
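As one small illustration of the allocation and scheduling challenge, the sketch below places jobs on GPUs greedily: higher-priority jobs are placed first, and each goes to the GPU with the least free memory that still fits it (best-fit), which tends to leave larger gaps open for larger jobs. The `Job` fields and the example figures are assumptions made for this example. Production schedulers also weigh factors such as preemption, fairness, and interconnect topology.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Job:
    name: str
    priority: int      # higher value is scheduled first
    memory_gb: float   # GPU memory the job needs


def schedule(jobs: List[Job], free_memory_gb: Dict[str, float]) -> Dict[str, Optional[str]]:
    """Greedy best-fit placement: highest-priority jobs first, each on the GPU
    with the least free memory that still fits. Unplaced jobs map to None."""
    free = dict(free_memory_gb)  # work on a copy, leave the caller's dict untouched
    placement: Dict[str, Optional[str]] = {}
    for job in sorted(jobs, key=lambda j: j.priority, reverse=True):
        candidates = [gpu for gpu, mem in free.items() if mem >= job.memory_gb]
        if not candidates:
            placement[job.name] = None
            continue
        gpu = min(candidates, key=lambda g: free[g])
        free[gpu] -= job.memory_gb
        placement[job.name] = gpu
    return placement


if __name__ == "__main__":
    jobs = [Job("train", 1, 60), Job("serve", 5, 20), Job("batch", 3, 30)]
    print(schedule(jobs, {"gpu0": 80, "gpu1": 24}))
```

In this example the low-priority training job is left unplaced, which is the signal a real system would use to queue it or trigger scale-up.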

GPU Cluster Management

Effectively managing GPU clusters is critical for enhancing computing power in AI. Well-managed GPU clusters can significantly improve processing and computational abilities, enabling more advanced AI functionalities. Best practices in GPU cluster management focus on maximizing GPU utilization and ensuring that the processing capabilities are fully exploited for the intensive workloads typical in AI applications.

Deep Observation of GPU Clusters: The Backbone of AI Computing

The intricate systems powering today’s AI require more than raw computing force; they require intelligent and meticulous oversight. This critical oversight is where deep observation of GPU clusters comes into play. In the realm of AI, where data moves constantly and demands can spike unpredictably, the real-time analysis provided by GPU monitoring tools is essential not just for maintaining operational continuity, but also for strategic planning and resource allocation.

In-depth observation allows for:

- Proactive Troubleshooting: Anticipating and addressing issues before they escalate into costly downtime or severe performance degradation.

- Resource Optimization: Identifying underutilized resources, ensuring maximum ROI on every bit of computing power available to your AI projects.

- Performance Benchmarking: Establishing performance benchmarks aids in long-term planning and is crucial for scaling operations efficiently and sustainably.

- Cost Management: By monitoring and optimizing GPU clusters, organizations can significantly reduce wastage and improve the cost-efficiency of their AI initiatives.

- Future Planning: Historical and real-time data provide insights that guide future investments in technology, ensuring your infrastructure is always one step ahead.

By embracing comprehensive GPU performance analysis, AI enterprises not only ensure their current operations are running at peak efficiency, but they also arm themselves with the knowledge to forecast future needs and trends, all but guaranteeing their place at the vanguard of AI’s advancement.
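A simple starting point for this kind of observation is to poll `nvidia-smi` and flag GPUs that sit mostly idle. The sketch below parses the CSV output of `nvidia-smi --query-gpu=index,utilization.gpu,memory.used,memory.total --format=csv,noheader,nounits`. The 30% threshold and the function names are assumptions for illustration, and the parser does not handle fields reported as `[N/A]`.

```python
import subprocess
from typing import List, Tuple

QUERY = ["nvidia-smi",
         "--query-gpu=index,utilization.gpu,memory.used,memory.total",
         "--format=csv,noheader,nounits"]


def find_underutilized(csv_text: str, min_util_pct: float = 30.0) -> List[Tuple[int, float, float]]:
    """Return (gpu_index, utilization %, memory used %) for GPUs below the threshold."""
    flagged = []
    for line in csv_text.strip().splitlines():
        index, util, mem_used, mem_total = (field.strip() for field in line.split(","))
        mem_pct = 100.0 * float(mem_used) / float(mem_total)
        if float(util) < min_util_pct:
            flagged.append((int(index), float(util), mem_pct))
    return flagged


def snapshot() -> str:
    """Run nvidia-smi once; requires an NVIDIA driver on the host."""
    return subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout


if __name__ == "__main__":
    sample = "0, 95, 70000, 81920\n1, 5, 1024, 81920\n"  # made-up readings
    print(find_underutilized(sample))
```

Collecting these snapshots over time, rather than once, is what turns them into the historical data that the planning and cost-management points above rely on.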

Recommendations for Software and Tools

The market offers a variety of software and tools designed to assist with managing and optimizing computing power dedicated to AI tasks. Tools for GPU cluster management, private cloud management, and GPU performance observation are crucial for any organization aiming to maintain a competitive edge in AI.

Below is a curated list of software that professionals in the AI industry can use to manage and optimize computing power:

NVIDIA AI Enterprise

An end-to-end platform optimized for managing computing power on NVIDIA GPUs. It includes comprehensive tools for model training, simulation, and advanced data analytics.

AWS Batch

Facilitates efficient batch computing in the cloud. It dynamically provisions the optimal quantity and type of compute resources based on the volume and specific requirements of the batch jobs submitted.

DDN Storage

Provides solutions specifically designed to address AI bottlenecks in computing. With a focus on accelerated computing, DDN Storage helps in scaling AI and large language model (LLM) performance.

The Future of Computing Power Management

As the field of AI continues to evolve, so too will the strategies for managing computing power. Advancements in technology will introduce new methods for optimizing resources, reducing energy consumption, and maximizing performance.

The AI industry can expect to see more autonomous and intelligent systems for managing computing power, driven by AI itself. These systems will likely be designed to predict and adapt to the computational needs of AI workloads, leading to even more efficient and cost-effective AI operations.

Our long-term vision must incorporate these upcoming innovations, ensuring that as the AI field grows, our management of its foundational resources evolves concurrently. By staying ahead of these trends, organizations can future-proof their AI infrastructure and remain competitive in a rapidly advancing technological landscape.

New Frontiers in AI: Scaling Up with the Latest AI Infrastructure Advances

Introduction

Artificial Intelligence (AI) infrastructure is the confluence of layered technologies that enable machine learning algorithms to be trained, run, and implemented. It is an amalgamation of cutting-edge computational hardware, expansive data storage capabilities, and nuanced networking that acts as the nervous system for AI applications. These combine to form an ecosystem capable of handling the intensive workloads synonymous with AI, facilitating the rapid processing and analysis of vast data sets in real-time.

Core Components of Modern AI Infrastructure

Compute power in AI infrastructure is increasingly reliant on GPUs for parallel processing capabilities and TPUs that offer specialized processing for neural network machine learning. Next-generation storage solutions like NVMe (Non-Volatile Memory Express) SSDs allow for faster data access speeds, critical for feeding data-hungry AI models. Networking technologies, including high-speed fiber connections and 5G, are integral for the low-latency transfer of large data sets and real-time analytics.

A harmonious orchestration between these components is crucial, as it allows for the seamless integration of AI models into various applications, from predictive analytics to autonomous vehicles, ensuring that latency does not hinder performance.

Trends and Innovations in AI Infrastructure

Hardware Innovations: GPUs and TPUs Leading the Charge

In the hardware domain, innovation is spearheaded by GPUs and TPUs, which are rapidly evolving to address the complex computation needs of AI. NVIDIA’s latest series of GPUs introduces significant improvements in parallel processing, making them ideal for training deep neural networks. TPUs, designed by Google, are tailored for the high-volume, low-latency processing required by large-scale AI applications. These TPUs are increasingly becoming part of the cloud AI infrastructure, granting businesses access to powerful AI compute resources on demand.

Software Frameworks and APIs: The Tools for Democratizing AI

On the software front, frameworks like TensorFlow and PyTorch offer open-source libraries for machine learning that drastically simplify the development of AI models. In combination with robust APIs, such as NVIDIA’s CUDA or Intel’s oneAPI, developers are empowered to customize their AI solutions and optimize performance across various hardware architectures. This democratization of AI development tools is drastically lowering the barrier to entry for AI innovation and enabling a broader range of scientists, engineers, and entrepreneurs to contribute to the AI revolution.

AI Deployment Trends: Cloud Services, Edge AI, and Decentralization

AI infrastructure is undergoing a significant transformation with the rise of cloud AI services, edge computing, and decentralized architectures. Cloud AI services, like AWS’s SageMaker, Azure AI, and Google AI Platform, simplify the process of deploying AI solutions by providing scalable compute resources and managed services. Edge AI brings intelligence processing closer to the source of data generation, enabling real-time decision-making and reducing reliance on centralized data centers. Decentralization further aids in improving the resilience and privacy of AI systems by distributing processing across multiple nodes.

Challenges and Considerations

Scaling AI infrastructure means maintaining a delicate balance between soaring computational demands and the constraints of current technology. This includes addressing bottlenecks in data throughput, ensuring cybersecurity in the face of sophisticated AI-oriented threats, and being aware of the carbon footprint associated with running large-scale AI operations. Innovations like quantum computing offer future prospects for AI scalability, while developments in homomorphic encryption present potential breakthroughs in data security. Sustainable AI is an emerging concept that focuses on optimizing algorithms to reduce electricity consumption and promote environmentally friendly AI operations.

Case Studies

An example of a company at the forefront of AI infrastructure is NVIDIA. Their AI platform houses powerful GPUs in combination with deep learning software, enabling businesses to scale up their AI applications efficiently. In contrast, IBM’s AI infrastructure focuses on building holistic, integrated AI solutions, with hardware like the Power Systems AC922 laying the groundwork for robust, enterprise-level AI workflows.

Startups, too, are making waves. EmergingAI’s LLM serving software stands to revolutionize AI operations by offering AI cluster management, in-depth observability, and real-time scheduling.

LLM Serving 101: Everything About LLM Deployment & Monitoring

A General Guide to Deploying an LLM

- Infrastructure Preparation:

- Choose a deployment environment: local servers, cloud services (like AWS, GCP, Azure), or hybrid.

- Ensure that you have the requisite computational resources: CPUs, GPUs, or TPUs, depending on the size of the LLM and expected traffic.

- Configure networking, storage, and security settings according to your needs and compliance requirements.

- Model Selection and Testing:

- Select the appropriate LLM (GPT-4, BERT, T5, etc.) for your use-case based on factors like performance, cost, and language support.

- Test the model on a smaller scale to ensure it meets your accuracy and performance expectations.

- Software Setup:

- Set up the software stack needed for serving the model, including machine learning frameworks (like TensorFlow or PyTorch), and application servers.

- Containerize the model and related services using Docker or similar technologies for consistency across environments.

- Scaling and Optimization:

- Implement load balancing to distribute the inference requests effectively.

- Apply optimization techniques like model quantization, pruning, or distillation to improve performance.

- API and Integration:

- Develop an API to interact with the LLM. The API should be robust, secure, and have rate limiting to prevent abuse.

- Integrate the LLM’s API with your application or platform, ensuring seamless data flow and error handling.

- Data and Privacy Considerations:

- Implement data management policies to handle the input and output securely.

- Address privacy laws and ensure data is handled in compliance with regulations such as GDPR or CCPA.

- Monitoring and Maintenance:

- Set up monitoring systems to track the performance, resource utilization, and health of the deployment.

- Plan for regular maintenance, updates to the model, and the software stack.

- Automation and CI/CD:

- Implement continuous integration and continuous deployment (CI/CD) pipelines for automated testing and deployment of changes.

- Automate scaling, using cloud services’ auto-scaling features or orchestration tools like Kubernetes.

- Failover and Redundancy:

- Design the system for high availability with redundant instances across zones or regions.

- Implement a failover strategy to handle outages without disrupting the service.

- Documentation and Training:

- Document your deployment architecture, API usage, and operational procedures.

- Train your team to troubleshoot and manage the LLM deployment.

- Launch and Feedback Loop:

- Soft launch the deployment to a restricted user base, if possible, to gather initial feedback.

- Use feedback to fine-tune performance and usability before a wider release.

- Compliance and Ethics Checks:

- Conduct an audit for compliance with ethical AI guidelines.

- Implement mechanisms to monitor biased outputs or misuse of the model.

Deploying an LLM is not a one-time event but an ongoing process. It’s essential to keep improving and adapting your approach based on new advancements in technology, changes in data privacy laws, and evolving business requirements.
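Of the steps above, API rate limiting is one of the easiest to sketch. A common approach is a token bucket: each client gets a bucket that refills at a fixed rate, and a request is admitted only if a token is available. The class below is a minimal single-process version with hypothetical names. A production API would typically keep one bucket per client and store the state somewhere shared.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow short bursts of up to `capacity` requests, refilled at `rate_per_s`."""

    def __init__(self, rate_per_s: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate_per_s = rate_per_s
        self.capacity = capacity
        self.clock = clock          # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        """Return True and consume a token if the request may proceed."""
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate_per_s)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(rate_per_s=2.0, capacity=5)
    print([bucket.allow() for _ in range(7)])  # a burst larger than the bucket
```

Requests that are refused would normally receive an HTTP 429 response so that clients know to back off and retry.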

Best Practices for Monitoring the Performance of an LLM After Deployment

After deploying a Large Language Model (LLM), monitoring its performance is crucial to ensure it operates optimally and continues to meet user needs and expectations. Here are some best practices for monitoring the performance of an LLM post-deployment:

- Establish Key Performance Indicators (KPIs):

- Define clear KPIs that align with your business objectives, such as response time, throughput, error rate, and user satisfaction.

- Application Performance Monitoring (APM):

- Utilize APM tools to monitor application health, including latency, error rates, and uptime to quickly identify issues that may impact the user experience.

- Infrastructure Monitoring:

- Track the utilization of computing resources like CPU, GPU, memory, and disk I/O to detect possible bottlenecks or the need for scaling.

- Monitor network performance to ensure data is flowing smoothly between the model and its clients.

- Model Inference Monitoring:

- Measure the inference time of the LLM, as delays could indicate a potential problem with the model or infrastructure.

- Log Analysis:

- Collect and analyze logs to gain insights into system behavior and user interactions with the LLM.

- Ensure logs are structured to facilitate easy querying and analysis.

- Anomaly Detection:

- Implement anomaly detection systems to flag any deviations from normal performance metrics. This could indicate an issue that requires attention.

- Quality Assurance:

- Continuously evaluate the accuracy and relevance of the LLM’s outputs. Set up automated testing or use human reviewers to assess quality.

- Track changes in performance after updates to the model or related software.

- User Feedback:

- Collect and analyze user feedback for qualitative insights into the LLM’s performance and user satisfaction.

- Integrate mechanisms for users to report issues with the model’s responses directly.

- Automate Incident Response:

- Develop automated alerting mechanisms to notify your team of critical incidents needing immediate attention.

- Create incident response protocols and ensure your team is trained to handle various scenarios.

- Usage Patterns:

- Monitor usage patterns to understand how users are interacting with the LLM. Look for trends like peak usage times, common queries, and feature utilization.

- Failover and Recovery:

- Regularly test failover procedures to ensure the system can quickly recover from outages.

- Monitor backup systems to make sure they are capturing data accurately and can be restored as expected.

- Security Monitoring:

- Implement security monitoring to detect and respond to threats such as unauthorized access or potential data breaches.

- Regular Audits:

- Conduct regular audits to ensure that the LLM is compliant with all relevant policies and regulations, including data protection and privacy.

- Continual Improvement:

- Use the insights gained from monitoring to continuously improve the system. This should include tuning the model and updating the infrastructure to address any identified issues.

- Collaboration and Sharing:

- Facilitate information sharing and collaboration between different team members (data scientists, engineers, product managers) to leverage different perspectives for better monitoring and quick resolution of issues.

By implementing these best practices, you can establish a robust monitoring framework that helps maintain the integrity, availability, and quality of the LLM service you provide.
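As an example of the anomaly detection practice, the sketch below flags an inference latency as anomalous when it lies more than a chosen number of standard deviations above the mean of recent samples. The window size, threshold, and class name are illustrative assumptions. Real deployments often use more robust statistics or a monitoring tool’s built-in detectors.

```python
import statistics
from collections import deque
from typing import Deque


class LatencyAnomalyDetector:
    """Flag a sample that sits more than `threshold` standard deviations
    above the mean of the most recent `window` samples."""

    def __init__(self, window: int = 100, threshold: float = 3.0, min_samples: int = 10) -> None:
        self.samples: Deque[float] = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, latency_ms: float) -> bool:
        """Record a latency and report whether it looks anomalous."""
        is_anomaly = False
        if len(self.samples) >= self.min_samples:
            mean = statistics.fmean(self.samples)
            stdev = statistics.pstdev(self.samples)
            if stdev > 0 and (latency_ms - mean) / stdev > self.threshold:
                is_anomaly = True
        self.samples.append(latency_ms)  # anomalies stay in the window in this simple version
        return is_anomaly


if __name__ == "__main__":
    detector = LatencyAnomalyDetector(window=50)
    for latency in [100, 102, 98, 101, 99] * 2 + [100, 500]:
        if detector.observe(latency):
            print("anomalous latency:", latency, "ms")
```

A flagged sample would typically feed the automated alerting described above rather than being acted on directly.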

Tools Used for Real-time Monitoring of LLMs

There are several tools available that can be used for real-time monitoring of Large Language Models (LLMs). Here are some examples categorized by their primary function:

All-in-one LLM Serving

WhaleFlux Serving: an open-source LLM server with deployment, monitoring, injection, and auto-scaling services. It is built to improve the end-to-end execution of LLM services, with a configuration recommendation module for automatic deployment on any GPU cluster and a performance detection module for auto-scaling.

Application Performance Monitoring (APM) Tools

- New Relic: Offers real-time insights into application performance and user experiences. It can track transactions, application dependencies, and health metrics.

- Datadog: A monitoring service for cloud-scale applications, providing visibility into servers, containers, services, and functions.

- Dynatrace: Uses AI to provide full-stack monitoring, including user experience and infrastructure monitoring, with root-cause analysis for detected anomalies.

- AppDynamics: Provides application performance management and IT Operations Analytics for businesses and applications.

Infrastructure Monitoring Tools

- Prometheus: An open-source monitoring solution that offers powerful querying capabilities and real-time alerting.

- Zabbix: Open-source, enterprise-level software designed for real-time monitoring of millions of metrics collected from various sources like servers, virtual machines, and network devices.

- Nagios: A powerful monitoring system that enables organizations to identify and resolve IT infrastructure problems before they affect critical business processes.

Cloud-Native Monitoring Tools

- Amazon CloudWatch: Monitors AWS cloud resources and the applications you run on AWS. It can track application and infrastructure performance.

- Google Cloud Operations Suite (formerly Stackdriver): Provides monitoring, logging, and diagnostics for applications on the Google Cloud Platform. It aggregates metrics, logs, and events from cloud and hybrid applications.

- Azure Monitor: Collects, analyzes, and acts on telemetry data from Azure and on-premises environments to maximize the performance and availability of applications.

Log Analysis Tools

- Elastic Stack (ELK Stack – Elasticsearch, Logstash, Kibana): An open-source log analysis platform that provides real-time insights into log data.

- Splunk: A tool for searching, monitoring, and analyzing machine-generated big data via a web-style interface.

- Graylog: Streamlines log data from various sources and provides real-time search and log management capabilities.

Error Tracking and Exception Monitoring

- Sentry: An open-source error tracking tool that helps developers monitor and fix crashes in real time.

- Rollbar: Provides real-time error alerting and debugging tools for developers.

Quality of Service Monitoring

- Wireshark: A network protocol analyzer that lets you capture and interactively browse the traffic running on a computer network.

- PRTG Network Monitor: Monitors networks, servers, and applications for availability, bandwidth, and performance.

How AI and Cloud Computing are Converging

Introduction

The digital age has been marked by remarkable independent advancements in both Artificial Intelligence (AI) and cloud computing. However, when these two titans of tech join forces, they give rise to a synergy potent enough to fuel an entirely new wave of technological innovation. This convergence is not just enhancing capabilities in data analytics and storage solutions; it is reimagining how we interact with and derive value from technology.

The Synergy of AI and Cloud Computing

The unification of AI and cloud computing is no mere melding; it is an orchestrated fusion of strengths where each technology amplifies the capabilities of the other. Cloud computing presents an almost boundless arena for AI operations, granting access to on-demand computing power and expansive data storage possibilities. This environment is essential for the growth of AI, which thrives on data and requires significant computational resources to evolve.

Enabling Scalability and Accessibility

The cloud provides a scalable infrastructure that can grow with the demands of AI algorithms. This elasticity means that as AI models become more complex or as datasets expand, the cloud can adapt with additional resources. Organizations from startups to large enterprises can leverage this capability, entering arenas that were once dominated by tech giants with significant on-premise resources. Accessibility is another key boon, with cloud services democratizing AI by offering high-level compute resources remotely to anyone with internet access.

Advancements in Machine Learning Platforms

Cloud providers have recognized the necessity of specialized platforms for machine learning and AI. For instance, services such as Google Cloud AI, Amazon SageMaker, and Microsoft Azure Machine Learning provide integrated environments for the training, deployment, and management of machine learning models. These services offer pre-built algorithms and the ability to create custom models, significantly reducing the time and knowledge barrier for businesses to incorporate AI solutions.

Data Management and Analytics at Scale

AI’s insatiable appetite for data pairs perfectly with the cloud’s storage capacity. Cloud platforms can effectively manage vast datasets, making it feasible to store and process the big data that AI systems analyze. Furthermore, AI enhances cloud capabilities by introducing analytics and machine learning directly into the data repositories, allowing for more advanced data processing and extraction of insights.

Intelligent Automation and Resource Optimization

AI introduces smart automation of cloud infrastructure management, optimizing resource usage without human intervention. Algorithms predict resource needs, automatically adjust capacity, and keep operations efficient. This not only decreases costs but also improves the performance of hosted applications and services.
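To make the idea of predictive capacity adjustment concrete, here is a minimal sketch that forecasts the next interval's load with a simple moving average and converts it into a replica count. The moving-average forecast stands in for whatever prediction model a real platform uses, and every name and parameter (`capacity_per_replica`, `headroom`, the replica limits) is a hypothetical chosen for illustration.

```python
import math


def forecast_load(history, window=3):
    """Forecast the next value as the mean of the last `window` observations."""
    if not history:
        raise ValueError("history must not be empty")
    recent = history[-window:]
    return sum(recent) / len(recent)


def replicas_needed(history, capacity_per_replica, headroom=0.2,
                    min_replicas=1, max_replicas=10):
    """Translate the forecast into a replica count, clamped to a safe range."""
    # Add headroom so a small forecasting error does not cause overload.
    target = forecast_load(history) * (1 + headroom)
    wanted = math.ceil(target / capacity_per_replica)
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # Hypothetical requests-per-second samples from recent intervals.
    recent_rps = [100, 120, 140]
    print(replicas_needed(recent_rps, capacity_per_replica=50))
```

The clamp to a minimum and maximum replica count is a deliberate design choice: it keeps a bad forecast from scaling the service to zero or running up unbounded cost.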

Case Studies: Successful Convergence in Action

The convergence of AI and cloud computing has led to successful implementations across various sectors:

Healthcare Delivery and Research

In healthcare, Project Baseline by Verily (an Alphabet company) is a stellar example. The initiative leverages cloud infrastructure for an AI-driven platform that collects and analyzes vast amounts of health-related data. This convergence enables predictive analytics for disease patterns and personalizes patient care protocols, translating into tangible improvements in patient outcomes.

Retail and Consumer Insights

In the retail space, Walmart harnesses cloud-based AI to refine logistical operations, manage inventory, and personalize shopping experiences for customers. Through their data analytics and machine learning tools, Walmart can predict shopping trends and optimize supply chains, reducing waste and improving customer satisfaction.

Financial Services and Risk Management

JPMorgan Chase has turned to the cloud to bolster its AI capabilities for real-time fraud detection. Its AI models, hosted on cloud platforms, scan transaction data to identify potential threats, significantly reducing the occurrence of false positives and enhancing the security of client assets.

These cases underscore the powerful impact of AI and cloud computing’s convergence on operational efficiency, user experience, and outcome-driven strategies. As organizations continue to realize the benefits of this collaboration, it’s becoming increasingly clear that the duo of AI and cloud computing stands at the heart of the next frontier in digital transformation.

Challenges and Potential Solutions

The merger of AI and cloud computing is paving the way for a smarter technology landscape. However, it’s not without its share of challenges that require strategic solutions:

Data Privacy and Governance

As data becomes more centralized in the cloud and AI models more nuanced in their data requirements, privacy concerns escalate. Solutions like federated learning allow AI model training on decentralized data, enhancing user privacy without compromising the dataset’s utility. Additionally, implementing stronger data governance and privacy laws aligned with technological advances can provide a structured approach to maintaining user trust.
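The federated learning idea mentioned above can be sketched in a few lines. This is a toy illustration under stated assumptions: a one-parameter model y = w * x, one gradient step per client per round, and sample-count-weighted averaging on the server. The client data and all function names are hypothetical; production systems add secure aggregation, client sampling, and much more.

```python
def local_step(w, samples, lr=0.1):
    """One gradient-descent step for the model y = w * x on a client's own data."""
    grad = sum(2 * (w * x - y) * x for x, y in samples) / len(samples)
    return w - lr * grad


def federated_average(client_weights, client_sizes):
    """Average client weights, weighting each client by its sample count."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total


def train(clients, rounds=50, w=0.0):
    """Run federated rounds; only weights, never raw samples, reach the server."""
    for _ in range(rounds):
        updates = [local_step(w, samples) for samples in clients]
        w = federated_average(updates, [len(s) for s in clients])
    return w


if __name__ == "__main__":
    # Hypothetical private datasets held by two clients, both following y = 2x.
    clients = [
        [(1.0, 2.0), (2.0, 4.0)],
        [(3.0, 6.0)],
    ]
    print(train(clients))
```

The server only ever sees each client's updated weight and sample count, which is the property that lets training proceed without centralizing the underlying data.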

Securing AI and Cloud Platforms

Securing the infrastructure underpinning AI and the cloud is critical. This means deploying advanced cybersecurity measures such as AI-driven threat detection systems, end-to-end encryption for data in transit and at rest, and multi-factor authentication. Regular security assessments and adherence to regulations such as GDPR, or HIPAA in healthcare, can fortify the AI and cloud ecosystem against cyber threats.

Tackling the Skills Shortage

The sophisticated nature of AI and the expansive scope of cloud computing create a high demand for specialized talent. Companies can forge partnerships with academic institutions to create targeted curricula that meet industry needs. Investing in continuous employee development through workshops and certifications can also help mitigate the skills gap and empower existing workforce members to adapt to new roles necessitated by AI and cloud technology.

Future Prospects

As the fusion between AI and cloud computing strengthens, the horizon of digital innovation expands:

IoT and AI: Smarter Devices Everywhere

The Internet of Things (IoT) stands to benefit immensely from AI and cloud computing, transitioning from simple connectivity to intelligent, autonomous operations. AI can be leveraged to process and analyze data collected by IoT devices to facilitate real-time decisions, while cloud platforms ensure global accessibility and scalability for IoT systems.

Amplifying Edge Computing

Edge computing is set to revolutionize how data is processed by bringing computation closer to the source of data creation. The convergence with AI allows for smarter edge devices that can process data on-site, reducing latency and reliance on centralized data centers. Cloud services come into play by providing management and orchestration layers for these distributed networks.

Building the Foundations of Smart Cities

Smart cities stand as testament to the potential of AI and cloud collaboration. They use AI’s analytical power and the cloud’s vast resource pool to optimize urban services, from traffic management to energy distribution. As smart cities evolve, they’ll likely become more responsive and adaptive, creating urban environments that are not only interconnected but also intelligent.

In this landscape of emerging opportunities, businesses must pivot to embrace a future where AI and cloud computing no longer function as standalone tools, but as integrated components of a comprehensive tech ecosystem, propelling innovation at the speed of thought.