What Is a Graphics Processing Unit (GPU)?

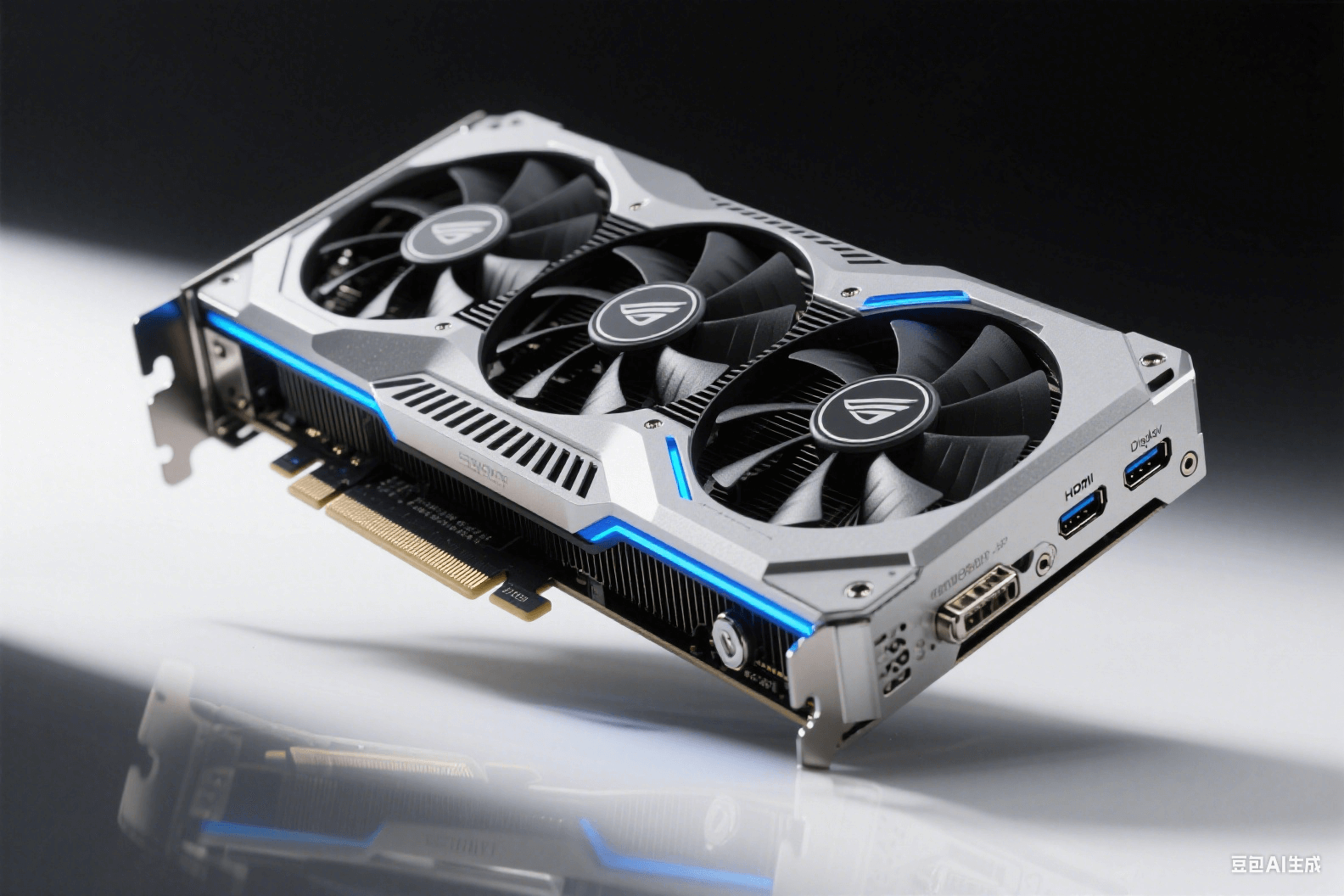

A Graphics Processing Unit (GPU) is a microprocessor specifically designed to rapidly handle image and graphics computing tasks. Unlike Central Processing Units (CPUs), which excel at complex sequential tasks, GPUs feature a parallel computing architecture with thousands of small, efficient cores. This enables them to process massive volumes of similar tasks simultaneously, making them highly efficient for graphics rendering and parallel computing workloads.

Modern GPUs have evolved beyond their early role of pure graphics processing to become general-purpose parallel computing processors. This transformation has made GPUs indispensable not only in traditional fields like video gaming, video editing, and 3D rendering but also in emerging areas such as artificial intelligence (AI), scientific computing, and data analysis.
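The "many similar tasks at once" idea can be illustrated with a small Python sketch. It is not GPU code: `saxpy_kernel` and `launch` are hypothetical names, and the loop only simulates what a GPU would run concurrently. The point it shows is that each element is computed independently, so the order of execution does not affect the result, which is what makes the workload suitable for thousands of cores.

```python
import random

def saxpy_kernel(i, a, x, y, out):
    """Work done by one hypothetical GPU thread: it handles element i only."""
    out[i] = a * x[i] + y[i]

def launch(n, kernel, *args, shuffle=False, seed=0):
    """Stand-in for a GPU launch: run the kernel once per index.

    Real hardware would run these calls concurrently; here they run one
    at a time, optionally in a scrambled order.
    """
    indices = list(range(n))
    if shuffle:
        random.Random(seed).shuffle(indices)
    for i in indices:
        kernel(i, *args)

x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
in_order = [0.0] * len(x)
scrambled = [0.0] * len(x)

launch(len(x), saxpy_kernel, 2.0, x, y, in_order)
launch(len(x), saxpy_kernel, 2.0, x, y, scrambled, shuffle=True)

# No element depends on another, so both runs produce the same output.
print(in_order == scrambled)
```

A sequential CPU loop and a scrambled "parallel" run agree here because no iteration reads another iteration's output. Workloads with that property are the ones that benefit most from a GPU.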

Core Functions and Application Scenarios of GPUs

What does a graphics processing unit do? The capabilities of modern GPUs can be categorized into three core areas:

- Graphics Rendering and Display

This is the original and most fundamental function of GPUs. By rapidly converting 3D models into 2D images, GPUs handle complex graphics tasks such as texture mapping, lighting calculations, and shadow generation. Realistic video game scenes, film special effects, and smooth user interfaces all rely on this graphics processing capability.

- Parallel Computing and Acceleration

Leveraging their large-scale parallel architecture, GPUs accelerate various non-graphical computing tasks. In scientific research, GPUs speed up molecular dynamics simulations and astrophysical calculations; in the financial sector, they enable rapid risk analysis and algorithmic trading.

- Artificial Intelligence and Deep Learning

This is the fastest-growing application area for GPUs. GPUs are particularly well-suited to the matrix operations at the heart of deep learning, significantly accelerating both the training and inference of neural networks. Large language models, image recognition systems, and recommendation engines all depend on GPUs for computing power.
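To make the link between deep learning and matrix operations concrete, here is a minimal pure-Python sketch of one dense neural-network layer written as a matrix multiplication. The helper names (`matmul`, `matmul_flops`) and the tiny matrices are illustrative assumptions. Real frameworks hand this work to optimized GPU libraries rather than nested Python loops.

```python
def matmul(a, b):
    """Multiply an (m x k) matrix by a (k x n) matrix given as nested lists."""
    inner = len(b)
    assert all(len(row) == inner for row in a), "inner dimensions must match"
    cols = len(b[0])
    # Every output element is an independent dot product, so a GPU can
    # compute all of them at the same time.
    return [
        [sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for row in a
    ]

def matmul_flops(m, k, n):
    """Floating-point operations for an (m x k) @ (k x n) product:
    one multiply and one add per term."""
    return 2 * m * k * n

# A batch of 2 inputs with 3 features each, passed through a layer
# that maps 3 features to 2 outputs.
batch = [[1.0, 2.0, 3.0],
         [4.0, 5.0, 6.0]]
weights = [[1.0, 0.0],
           [0.0, 1.0],
           [1.0, 1.0]]

outputs = matmul(batch, weights)
flops = matmul_flops(len(batch), len(weights), len(weights[0]))
print(outputs, flops)
```

The operation count grows with the product of all three dimensions, which is why large models quickly reach workloads measured in trillions of operations.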

GPU Market Overview and Key Type Analysis

The current GPU market is both diversified and specialized. At the broadest level, GPUs divide into two types, integrated and discrete, with discrete GPUs further segmented into consumer, professional, and data center products:

- Integrated GPUs: Embedded directly in CPUs or motherboards, they offer low power consumption and cost-effectiveness, making them suitable for daily office work and light graphics applications. They provide basic graphics capabilities for laptops and entry-level desktops.

- Discrete GPUs: Standalone hardware devices with dedicated memory and cooling systems, offering far superior performance compared to integrated GPUs. In the discrete GPU sector, NVIDIA products lead the market with their comprehensive technology ecosystem.

| Type | Performance Features | Key Application Scenarios | Advantages | Limitations |
| --- | --- | --- | --- | --- |
| Integrated GPU | Basic graphics processing | Daily office work, web browsing, video playback | Low power consumption, low cost, high integration | Limited performance; unsuitable for professional use |
| Consumer Discrete GPU | Medium to high performance | Gaming, content creation, light AI applications | High cost-effectiveness, rich software ecosystem | Limited support for professional features |
| Professional Discrete GPU | Professional-grade performance | Industrial design, medical imaging, professional rendering | Professional software certification, high stability | Higher price point |
| Data Center GPU | Extreme performance & reliability | AI training, scientific computing, cloud computing | High throughput, ECC memory, optimized cooling | High cost, high power consumption |

Key Criteria for Enterprise-Grade GPU Selection

When comparing GPUs, enterprises need to evaluate several technical indicators against their specific needs:

- Computing Performance: The primary metric for measuring GPU computing power is TFLOPS (trillions of floating-point operations per second). TFLOPS values across different precisions (FP16, FP32, FP64) are critical for different application types. AI training typically focuses on FP16 performance, while scientific computing may require stronger FP64 capabilities.

- Memory Capacity & Bandwidth: Memory capacity determines the size of datasets a GPU can handle—critical for large-model training. Memory bandwidth affects data access speed; high bandwidth helps fully unleash the GPU’s computing potential.

- Energy Efficiency: In data center environments, power costs and heat dissipation capabilities are key constraints. Higher energy efficiency (performance/power consumption) reduces total cost of ownership (TCO).

- Software Ecosystem & Compatibility: A robust software stack and framework support shorten development cycles. NVIDIA’s CUDA ecosystem holds a distinct advantage in the AI field, supporting mainstream deep learning frameworks.
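The first two criteria above can be turned into rough back-of-the-envelope checks. The sketch below is a simplification under stated assumptions: it counts model weights only (no activations, optimizer state, or caches), and it treats a step as limited by whichever is slower, arithmetic or memory traffic. The function names and the 7-billion-parameter example are illustrative, not taken from any vendor tool.

```python
# Bytes needed to store one value at each precision.
BYTES_PER_VALUE = {"fp16": 2, "fp32": 4, "fp64": 8}

def weight_bytes(num_params, precision):
    """Memory needed just to hold the model weights."""
    return num_params * BYTES_PER_VALUE[precision]

def min_step_seconds(flops, bytes_moved, peak_flops_per_s, bandwidth_bytes_per_s):
    """Lower bound on step time: the slower of compute and memory traffic.

    Returns (seconds, limiting_factor).
    """
    compute_s = flops / peak_flops_per_s
    memory_s = bytes_moved / bandwidth_bytes_per_s
    if compute_s >= memory_s:
        return compute_s, "compute"
    return memory_s, "memory"

# Illustrative inputs (assumptions): a 7B-parameter model in FP16 on a GPU
# with 312 TFLOPS of FP16 throughput and 2 TB/s of memory bandwidth.
params = 7_000_000_000
size = weight_bytes(params, "fp16")          # bytes of weights
flops_per_token = 2 * params                 # one multiply-add per weight
seconds, limit = min_step_seconds(flops_per_token, size, 312e12, 2e12)

print(f"weights: {size / 1e9:.0f} GB, bound: {limit}, >= {seconds * 1e3:.1f} ms")
```

With these inputs the step is limited by memory bandwidth rather than TFLOPS, which illustrates why capacity and bandwidth are worth weighing alongside raw compute figures.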

WhaleFlux Intelligent GPU Resource Management Solutions

After selecting suitable GPU hardware, enterprises face the next challenge: efficiently managing and optimizing these high-value computing resources. As an intelligent GPU resource management tool designed specifically for AI enterprises, WhaleFlux helps maximize the value of GPU clusters through innovative technologies.

WhaleFlux’s core advantage lies in its intelligent resource scheduling algorithm, which monitors the status of multi-GPU clusters in real time and automatically assigns computing tasks to the most suitable GPU nodes. This dynamic scheduling ensures:

- Load Balancing: Prevents overload on individual GPUs while others remain idle.

- Fault Tolerance: Automatically migrates tasks to healthy nodes if a GPU fails.

- Energy Optimization: Intelligently adjusts GPU power states based on task requirements.
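As a rough illustration of load balancing and fault tolerance in general, the sketch below implements a simple least-loaded scheduler with task migration. It is not WhaleFlux's algorithm, which the source does not describe. `GpuNode`, `assign`, and `fail_node` are hypothetical names, and load is approximated by task count.

```python
from dataclasses import dataclass, field

@dataclass
class GpuNode:
    name: str
    healthy: bool = True
    tasks: list = field(default_factory=list)

def assign(task, nodes):
    """Place a task on the healthy node that currently has the fewest tasks."""
    candidates = [n for n in nodes if n.healthy]
    if not candidates:
        raise RuntimeError("no healthy GPU nodes available")
    target = min(candidates, key=lambda n: len(n.tasks))
    target.tasks.append(task)
    return target

def fail_node(node, nodes):
    """Mark a node as failed and migrate its tasks to the remaining nodes."""
    node.healthy = False
    orphaned, node.tasks = node.tasks, []
    for task in orphaned:
        assign(task, nodes)

nodes = [GpuNode("gpu-0"), GpuNode("gpu-1"), GpuNode("gpu-2")]
for task in ["a", "b", "c", "d"]:
    assign(task, nodes)

fail_node(nodes[0], nodes)  # gpu-0 fails; its tasks move elsewhere
for node in nodes:
    print(node.name, node.healthy, node.tasks)
```

A production scheduler would also weigh memory usage, interconnect topology, and power state. Task count is used here only to keep the sketch short.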

Comprehensive GPU Resource Solution

WhaleFlux offers end-to-end services from hardware to software, covering the following core components:

| Service Layer | Service Content | Core Value | Target Customers |
| --- | --- | --- | --- |
| Hardware Resource Layer | NVIDIA H100/H200/A100/RTX 4090 | Top-tier computing performance, flexible configuration | All AI enterprises |
| Platform Service Layer | Intelligent scheduling, monitoring & alerts, resource isolation | Improved utilization, reduced O&M costs | Enterprises with limited technical teams |
| Business Support Layer | Model deployment, performance optimization, technical support | Accelerated AI application launch | Enterprises pursuing rapid business deployment |

Detailed Comparison of WhaleFlux’s Core GPU Products

WhaleFlux offers a range of NVIDIA GPU products, combined with an intelligent management platform, to meet the computing needs of different enterprises. Below is a detailed comparison of four core products:

| Specification | NVIDIA H200 | NVIDIA H100 | NVIDIA A100 | NVIDIA RTX 4090 |
| --- | --- | --- | --- | --- |
| Architecture | Hopper | Hopper | Ampere | Ada Lovelace |
| Memory Capacity | 141GB HBM3e | 80GB HBM3 | 40GB/80GB HBM2e | 24GB GDDR6X |
| Memory Bandwidth | 4.8TB/s | 3.35TB/s | 2TB/s | 1TB/s |
| FP16 Tensor Core Performance (dense) | 989 TFLOPS | 989 TFLOPS | 312 TFLOPS | 165 TFLOPS |
| Interconnect Tech | NVLink 4.0 | NVLink 4.0 | NVLink 3.0 | PCIe 4.0 |
| Key Application Scenarios | Training of large models with 100B+ parameters | Large-scale AI training & HPC | Mid-scale AI & HPC | AI inference, rendering, development |
| Energy Efficiency | Excellent | Very Good | Good | Good |
| Target Customer Type | Large AI labs, cloud service providers | AI enterprises, research institutions | Small-to-medium AI enterprises, research teams | Startups, developers |
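One way to read the table is as a lookup: given a memory requirement, find the smallest card that fits. The sketch below encodes only the memory capacities listed above (using the 80GB variant of the A100). The `smallest_fit` helper is a hypothetical name, and it ignores price, bandwidth, and multi-GPU setups.

```python
# Memory capacity in GB, taken from the comparison table above.
# Assumption: the A100 entry uses its larger 80GB variant.
GPU_MEMORY_GB = {
    "NVIDIA RTX 4090": 24,
    "NVIDIA A100": 80,
    "NVIDIA H100": 80,
    "NVIDIA H200": 141,
}

def smallest_fit(required_gb):
    """Return the GPU with the least memory that still holds required_gb,
    or None if no single card in the table is large enough."""
    fitting = [(mem, name) for name, mem in GPU_MEMORY_GB.items() if mem >= required_gb]
    if not fitting:
        return None
    return min(fitting)[1]

print(smallest_fit(14))    # a small model fits on the consumer card
print(smallest_fit(100))   # only the largest card has enough memory
print(smallest_fit(200))   # None: would need several GPUs
```

When the result is `None`, the workload has to be split across multiple GPUs, which is where interconnect technology such as NVLink becomes relevant.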

Enterprise GPU Procurement & Optimization Strategies

When formulating a GPU procurement strategy, enterprises should consider both hardware selection and resource management:

- Needs Analysis: Clarify core workload types (training vs. inference), model scale, performance requirements, and budget constraints. For R&D and testing environments, cost-effective configurations may be preferred; for production environments, reliability and performance should take priority.

- Scalability Planning: Account for future changes in computing power needs due to business growth. Multi-GPU systems and high-speed interconnect technologies (e.g., NVLink) provide flexibility for future expansion.

- TCO Optimization: Beyond hardware procurement costs, consider long-term operational expenses such as power consumption, cooling systems, and O&M labor. WhaleFlux’s intelligent management platform helps customers reduce overall operational costs by 20-30% through energy efficiency optimization and resource scheduling.
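The TCO reasoning above can be expressed as a small calculation. Every number in this sketch is a hypothetical placeholder (hardware price, power draw, cooling overhead, electricity rate, labor cost). The 25% figure is simply the midpoint of the 20-30% range quoted above, applied to operating costs only.

```python
HOURS_PER_YEAR = 8760

def tco(hardware_cost, power_kw, cooling_overhead, price_per_kwh,
        labor_per_year, years, opex_reduction=0.0):
    """Total cost of ownership: purchase price plus operating costs.

    cooling_overhead multiplies the GPU's power draw to account for
    cooling; opex_reduction is the fraction of operating cost saved.
    """
    energy_kwh = power_kw * cooling_overhead * HOURS_PER_YEAR * years
    opex = energy_kwh * price_per_kwh + labor_per_year * years
    return hardware_cost + opex * (1.0 - opex_reduction)

# Hypothetical inputs for one server running continuously over 3 years.
baseline = tco(30_000, 0.7, 1.5, 0.10, 2_000, 3)
optimized = tco(30_000, 0.7, 1.5, 0.10, 2_000, 3, opex_reduction=0.25)

print(f"baseline:  ${baseline:,.2f}")
print(f"optimized: ${optimized:,.2f}")
print(f"saved:     ${baseline - optimized:,.2f}")
```

Because the reduction applies to operating costs rather than hardware, its effect on total cost depends on how large the operating share is. That share grows with longer service life and higher electricity prices.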

Conclusion

GPUs have become a core component of modern computing infrastructure, especially in AI and data analysis. Understanding GPU fundamentals, functional characteristics, and selection criteria is crucial for enterprises building efficient computing platforms. However, selecting suitable GPU hardware is only the first step—effectively managing and optimizing these high-value computing resources is equally important.

WhaleFlux provides end-to-end solutions from hardware to software, combining NVIDIA’s full range of high-performance GPU products with an innovative intelligent GPU resource management platform. Whether you need the extreme performance of the H200 or the cost-effective A100, WhaleFlux offers professional product configuration and resource optimization services to provide strong computing support for your enterprise’s digital transformation.