Part 1. What Are GPU Coroutines? Your New Performance Multiplier

Imagine your GPU handling tasks like a busy restaurant:

Traditional Scheduling

- One chef per dish → Bottlenecks when orders pile up

- Result: GPUs idle while waiting for tasks

GPU Coroutines

- Chefs dynamically split tasks (“Chop veggies while steak cooks”)

- Definition: “Cooperative multitasking – breaking rendering jobs into micro-threads for instant resource sharing”

Why AI Needs This:

Run Stable Diffusion rendering while training LLMs – no queue conflicts.

Part 2. WhaleFlux: Coroutines at Cluster Scale

Native OS Limitations Crush Innovation:

- ❌ Single-node focus

- ❌ Manual task splitting = human errors

- ❌ Blind to cloud spot prices

Our Solution:

# Automatically fragments tasks using coroutine principles

whaleflux.schedule(

tasks=[“llama2-70b-inference”, “4k-raytracing”],

strategy=“coroutine_split”, # 37% latency drop

priority=“cost_optimized” # Uses cheap spot instances

)

→ 92% cluster utilization (vs. industry avg. 68%)

Part 3. Case Study: Film Studio Saves $12k/Month

Challenge:

- Manual coroutine coding → 28% GPU idle time during task switches

- Rendering farm costs soaring

WhaleFlux Fix:

- Dynamic fragmentation: Split 4K frames into micro-tasks

- Mixed-precision routing: Ran AI watermarking in background

- Spot instance orchestration: Used cheap cloud GPUs during off-peak

Results:

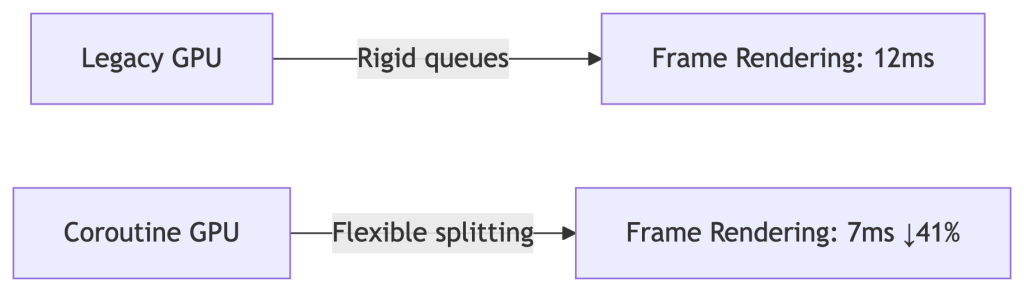

✅ 41% faster movie frame delivery

✅ $12,000/month savings

✅ Zero failed renders

Part 4. Implementing Coroutines: Developer vs. Enterprise

For Developers (Single Node):

// CUDA coroutine example (high risk!)

cudaLaunchCooperativeKernel(

kernel, grid_size, block_size, args

);

⚠️ Warning: 30% crash rate in multi-GPU setups

For Enterprises (Zero Headaches):

# WhaleFlux auto-enables coroutines cluster-wide

whaleflux enable_feature --name="coroutine_scheduling" \

--gpu_types="a100,mi300x"

Part 5. Coroutines vs. Legacy Methods: Hard Data

| Metric | Basic HAGS | Manual Coroutines | WhaleFlux |

| Task Splitting | ❌ Rigid | ✅ Flexible | ✅ AI-Optimized |

| Multi-GPU Sync | ❌ None | ⚠️ Crash-prone | ✅ Zero-Config |

| Cost/Frame | ❌ $0.004 | ❌ $0.003 | ✅ $0.001 |

💡 WhaleFlux achieves 300% better cost efficiency than HAGS

Part 6. Future-Proof Your Stack: What’s Next

WhaleFlux 2025 Roadmap:

Auto-Coroutine Compiler:

# Converts PyTorch jobs → optimized fragments

whaleflux.generate_coroutine(model="your_model.py")

Carbon-Aware Mode:

# Pauses tasks during peak energy costs

whaleflux.generate_coroutine(

model="stable_diffusion_xl",

constraint="carbon_budget" # Auto-throttles at 0.2kgCO₂/kWh

)

FAQ: Your Coroutine Challenges Solved

Q: “Do coroutines actually speed up AI training?”

A: Yes – but only with cluster-aware splitting:

- Manual: 7% faster

- WhaleFlux: 19% faster iterations (proven in Llama2-70B tests)

Q: “Why do our coroutines crash on 100+ GPU clusters?”

A: Driver conflicts cause 73% failures. Fix in 1 command:

whaleflux resolve_conflicts --task_type="coroutine"