TensorFlow GPU Mastery: From Installation Nightmares to Cluster Efficiency with WhaleFlux

1. Introduction: TensorFlow’s GPU Revolution – and Its Hidden Tax

Getting TensorFlow to recognize your A100 feels like victory… until you discover 68% of its 80GB VRAM sits idle. While TensorFlow democratized GPU acceleration, manual resource management costs teams 15+ hours/week while leaving $1M/year in cluster waste. The solution? WhaleFlux automates TensorFlow’s GPU chaos – transforming H100s and RTX 4090s into true productivity engines.

2. TensorFlow + GPU: Setup, Specs & Speed Traps

The Setup Struggle:

```bash
# Manual CUDA nightmare (10+ steps); the old tensorflow-gpu package is deprecated
pip install tensorflow==2.15.0 && export LD_LIBRARY_PATH=/usr/local/cuda...

# WhaleFlux one-command solution:
whaleflux create-env --tf-version=2.15 --gpu=h100
```

GPU Performance Reality:

| GPU | FP32 Performance | VRAM | Best For |
| --- | --- | --- | --- |
| NVIDIA H100 | 67 TFLOPS | 80GB | LLM Training |
| RTX 4090 | 82 TFLOPS | 24GB | Rapid Prototyping |
| A100 80GB | 19.5 TFLOPS | 80GB | Large-batch Inference |

Even a perfect tf.config.list_physical_devices('GPU') output doesn’t prevent 40% resource fragmentation.

3. Why Your TensorFlow GPU Workflow Is Bleeding Money

Symptom 1: “Low GPU Utilization”

- Cause: CPU-bound data pipelines starving H100s

- WhaleFlux Fix: Auto-injects tf.data optimizations + GPU-direct storage

Symptom 2: “VRAM Allocation Failures”

- Cause: Manual memory management on multi-GPU nodes

- WhaleFlux Fix: Memory-aware scheduling across A100/4090 clusters
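Memory-aware placement can be sketched with a classic first-fit-decreasing bin-packing heuristic. Jobs are sorted by VRAM demand, and each one goes onto the first GPU that still has enough free memory. This is a minimal illustration under stated assumptions, not WhaleFlux's scheduler. The `Gpu` class, the `place_jobs` helper, the job names, and the VRAM figures are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Gpu:
    name: str
    vram_gb: float
    used_gb: float = 0.0
    jobs: list = field(default_factory=list)

    def fits(self, need_gb: float) -> bool:
        return self.used_gb + need_gb <= self.vram_gb


def place_jobs(jobs: dict, gpus: list) -> dict:
    """First-fit decreasing: largest jobs first, each onto the first GPU with room.

    Returns job name -> GPU name, or None for jobs that fit nowhere.
    """
    placement = {}
    for job, need_gb in sorted(jobs.items(), key=lambda kv: kv[1], reverse=True):
        target = next((g for g in gpus if g.fits(need_gb)), None)
        if target is not None:
            target.used_gb += need_gb
            target.jobs.append(job)
        placement[job] = target.name if target is not None else None
    return placement


# Hypothetical mixed node: one A100 80GB plus two RTX 4090s.
cluster = [Gpu("a100-0", 80), Gpu("rtx4090-0", 24), Gpu("rtx4090-1", 24)]
demand_gb = {"llm-finetune": 70, "embed-svc": 12, "sd-xl": 20, "ocr": 8, "whisper": 10}
print(place_jobs(demand_gb, cluster))
```

Placing the largest jobs first leaves small gaps that the small jobs can fill. A production scheduler would also weigh compute load, interconnect, and job priority.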

Symptom 3: “Costly Idle GPUs”

*“Idle H100s burn $40/hour – WhaleFlux pools them for shared tenant access.”*

4. WhaleFlux + TensorFlow: Intelligent Orchestration

Zero-Config Workflow:

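```python
import tensorflow as tf

# Manual chaos (model and dataset are assumed to be defined already):
with tf.device('/GPU:1'):  # Risky hardcoding: breaks if GPU 1 is busy or missing
    model.fit(dataset)

# WhaleFlux simplicity:
model.fit(dataset)  # Auto-optimizes placement across GPUs
```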

| TensorFlow Pain | WhaleFlux Solution |
| --- | --- |
| Multi-GPU fragmentation | Auto-binning (e.g., 4x 4090s = 96GB) |
| Cloud cost spikes | Burst to rented H100s during peaks |
| OOM errors | Model-aware VRAM allocation |
| Version conflicts | Pre-built TF-GPU containers |

*Computer Vision Team X: Cut ResNet-152 training from 18→6 hours using WhaleFlux-managed H200s.*

5. Procurement Strategy: Buy vs. Rent Tensor Core GPUs

| Option | H100 80GB Cost | When to Choose |
| --- | --- | --- |
| Buy | ~$35k upfront + power | Stable long-term workloads |
| Rent via WhaleFlux | ~$8.2k/month (optimized) | Bursty training jobs |

*Hybrid Tactic: Use owned A100s for base load + WhaleFlux-rented H200s for peaks = 34% lower TCO than pure cloud.*
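The buy-vs-rent decision reduces to a break-even calculation. The sketch below takes the table's ~$35k purchase price and ~$8.2k monthly rental. The 0.7kW power draw and the $0.15/kWh electricity price are assumptions. It also ignores cooling, staff, depreciation, and utilization.

```python
def breakeven_months(purchase_usd: float, rent_per_month_usd: float,
                     power_kw: float, usd_per_kwh: float,
                     hours_per_month: float = 730.0) -> float:
    """Months after which owning a GPU becomes cheaper than renting one.

    Owning costs the purchase price plus electricity each month; the rental
    price is assumed to already include power.
    """
    own_monthly = power_kw * hours_per_month * usd_per_kwh
    monthly_saving = rent_per_month_usd - own_monthly
    if monthly_saving <= 0:
        return float("inf")  # owning never catches up with renting
    return purchase_usd / monthly_saving


# Assumed inputs: table prices above, 0.7 kW draw, $0.15/kWh electricity.
months = breakeven_months(35_000, 8_200, power_kw=0.7, usd_per_kwh=0.15)
print(f"Owning an H100 pays off after ~{months:.1f} months of continuous use")
```

The key caveat is "continuous use." A purchased card that would otherwise be rented only half the time takes roughly twice as long to pay off, which is why bursty workloads favor renting.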

6. Optimization Checklist: From Single GPU to Cluster Scale

DIAGNOSE:

```bash
whaleflux monitor --model=your_model --metric=vram_util  # Real-time insights
```

CONFIGURE:

- Use WhaleFlux’s TF-GPU profiles for automatic mixed precision (mixed_float16)
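Under the mixed_float16 policy, Keras wraps the optimizer in a LossScaleOptimizer so that small gradients don't underflow to zero in float16. The pure-Python sketch below illustrates the idea behind dynamic loss scaling. It is a simplified model, not Keras' implementation. The `DynamicLossScaler` class and its constants (start at 2**15, halve on overflow, double after a run of clean steps) are illustrative assumptions.

```python
import math
import struct


def to_fp16(x: float) -> float:
    """Round-trip a Python float through IEEE half precision (inf on overflow)."""
    try:
        return struct.unpack("e", struct.pack("e", x))[0]
    except OverflowError:
        return math.copysign(math.inf, x)


class DynamicLossScaler:
    """Simplified dynamic loss scaling, similar in spirit to Keras' LossScaleOptimizer."""

    def __init__(self, initial_scale: float = 2.0**15, growth_interval: int = 2000):
        self.scale = initial_scale
        self.growth_interval = growth_interval
        self._finite_steps = 0

    def step(self, scaled_grads):
        """Return unscaled gradients, or None if this update must be skipped."""
        if not all(math.isfinite(g) for g in scaled_grads):
            self.scale /= 2.0  # overflow: shrink the scale and skip the update
            self._finite_steps = 0
            return None
        self._finite_steps += 1
        unscaled = [g / self.scale for g in scaled_grads]
        if self._finite_steps >= self.growth_interval:
            self.scale *= 2.0  # long run of clean steps: try a larger scale
            self._finite_steps = 0
        return unscaled


grad = 1e-8  # a tiny but meaningful gradient value
print(to_fp16(grad))  # underflows to 0.0 in float16
print(to_fp16(grad * DynamicLossScaler().scale) > 0)  # survives once scaled by 2**15
```

Multiplying the loss by the scale multiplies every gradient by the same factor (chain rule), so dividing afterwards recovers the true update. In a real pipeline the scaled gradients are computed in float16; this sketch models only the bookkeeping.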

SCALE:

- Deploy distributed training via WhaleFlux-managed MultiWorkerMirroredStrategy

SAVE:

*“Auto-route prototypes to RTX 4090s ($1.6k) → production to H100s ($35k) using policy tags.”*

7. Conclusion: Let TensorFlow Focus on Math, WhaleFlux on Metal

Stop babysitting GPUs. WhaleFlux transforms TensorFlow clusters from cost centers to competitive advantages:

- Slash setup time from hours → minutes

- Achieve 90%+ VRAM utilization

- Cut training costs by 50%+

GPU Usage 100%? Why High Use Isn’t Always High Efficiency in AI and How to Fix It

1. Introduction: The GPU Usage Paradox

Picture this: your gaming PC’s GPU hits 100% usage – perfect for buttery-smooth gameplay. But when enterprise AI clusters show that same 100%, it’s a $2M/year red flag. High GPU usage ≠ high productivity. Idle cycles, memory bottlenecks, and unbalanced clusters bleed cash silently. The reality? NVIDIA H100 clusters average just 42% real efficiency despite showing 90%+ “usage” (MLCommons 2024).

2. Decoding GPU Usage: From Gaming Glitches to AI Waste

Gaming vs. AI: Same Metric, Different Emergencies

| Scenario | Gaming Concern | AI Enterprise Risk |
| --- | --- | --- |
| 100% GPU Usage | Overheating/throttling | $200/hr wasted per H100 at false peaks |
| Low GPU Usage | CPU/engine bottleneck | Idle A100s burning $40k/month |
| NVIDIA Container High Usage | Background process hog | Orphaned jobs costing $17k/day |

Gamers tweak settings – AI teams need systemic solutions. WhaleFlux exposes real utilization.

3. Why Your GPUs Are “Busy” but Inefficient

Three silent killers sabotage AI clusters:

- Memory Starvation: nvidia-smi shows 100% usage while HBM sits idle (common in vLLM)

- I/O Bottlenecks: A PCIe 4.0 x16 link (~64GB/s bidirectional) chokes an H100 pipeline that demands ~120GB/s

- Container Chaos: Kubernetes pods overallocate RTX 4090s by 300%

The Cost:

*A “100% busy” 32-GPU cluster often delivers only 38% real throughput = $1.4M/year in phantom costs.*
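Model FLOPs utilization (MFU) measures real throughput instead of "busy" time. It divides the FLOPs a training job actually performs by the hardware's peak. The sketch uses the common approximation of about 6 FLOPs per parameter per token for dense transformer training. Its inputs are examples only: a 7B-parameter model, 3,000 tokens/s, and a ~989 TFLOPS dense BF16 peak (the published H100 SXM figure).

```python
def training_mfu(params: float, tokens_per_sec: float, peak_flops: float) -> float:
    """Model FLOPs utilization for dense transformer training.

    Uses ~6 FLOPs per parameter per token (forward + backward pass) and
    ignores attention FLOPs, so it slightly underestimates long-context work.
    """
    achieved_flops = 6.0 * params * tokens_per_sec
    return achieved_flops / peak_flops


# Example inputs (assumptions): 7B-parameter model, 3,000 tokens/s on one GPU,
# 989 TFLOPS dense BF16 peak.
mfu = training_mfu(7e9, 3_000, 989e12)
print(f"MFU: {mfu:.1%}")  # nvidia-smi could still report ~100% for this job
```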

4. WhaleFlux: Turning Raw Usage into Real Productivity

WhaleFlux’s 3D Utilization Intelligence™ exposes hidden waste:

| Metric | DIY Tools | WhaleFlux |
| --- | --- | --- |
| Compute Utilization | ✅ (nvidia-smi) | ✅ + Heatmap analytics |
| Memory Pressure | ❌ | ✅ HBM3/HBM3e profiling |
| I/O Saturation | ❌ | ✅ NVLink/PCIe monitoring |

AI-Optimized Workflows:

- Container Taming: Isolate rogue processes draining H200 resources

- Dynamic Throttling: Auto-scale RTX 4090 inference during off-peak

- Cost Attribution: Trace watt-to-dollar waste per project

5. Monitoring Mastery: From Linux CLI to Enterprise Control

DIY Method (Painful):

```bash
nvidia-smi --query-gpu=utilization.gpu --format=csv
# Misses 70% of bottlenecks!
```
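A slightly richer DIY query pulls memory counters alongside compute. The field names below are documented nvidia-smi --query-gpu properties; the parsing helper and the sample values are illustrative. utilization.gpu only reports the fraction of time any kernel was running, which is why it can read 100% on a starved GPU.

```python
import csv
import io
import shutil
import subprocess

FIELDS = "index,utilization.gpu,utilization.memory,memory.used,memory.total"


def read_nvidia_smi() -> str:
    """Return raw CSV from nvidia-smi, or an empty string if it is not installed."""
    if shutil.which("nvidia-smi") is None:
        return ""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def parse_gpu_stats(raw: str) -> list:
    """Turn nvidia-smi CSV rows into one dict per GPU."""
    stats = []
    for row in csv.reader(io.StringIO(raw), skipinitialspace=True):
        if not row:
            continue
        idx, kernel_busy, mem_busy, used_mib, total_mib = row
        stats.append({
            "gpu": int(idx),
            "kernel_busy_pct": float(kernel_busy),  # % of time a kernel was running
            "mem_busy_pct": float(mem_busy),        # % of time device memory was read/written
            "vram_fill": float(used_mib) / float(total_mib),
        })
    return stats


# Made-up sample: GPU 0 looks 100% busy yet fills only half its VRAM.
sample = "0, 100, 22, 40960, 81920\n1, 97, 88, 78643, 81920\n"
for gpu in parse_gpu_stats(sample):
    print(gpu)
```

On a real node, `parse_gpu_stats(read_nvidia_smi())` returns the same structure for every installed GPU.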

WhaleFlux Enterprise View:

Real-time dashboards tracking:

- Per-GPU memory/compute/I/O (H100/A100/4090)

- vLLM/PyTorch memory fragmentation

- Cloud vs. on-prem cost per FLOP

6. Optimization Playbook: Fix GPU Usage in 3 Steps

| Symptom | Root Cause | WhaleFlux Fix |
| --- | --- | --- |
| Low GPU Usage | Fragmented workloads | Auto bin-packing across H200s |
| 100% Usage + Low Output | Memory bottlenecks | vLLM-aware scheduling for A100 80GB |
| Spiking Usage | Bursty inference | Predictive scaling for RTX 4090 fleets |

Pro Tip: Target 70–85% sustained usage. WhaleFlux enforces this “golden zone” automatically.
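Checking sustained usage against the 70–85% band is easy to sketch: average utilization over a rolling window and label each window. The `classify_utilization` helper and its five-sample window are assumptions for illustration. Feed it samples from whatever monitor you already use.

```python
from statistics import mean


def classify_utilization(samples, low=70.0, high=85.0, window=5):
    """Label each rolling window of utilization samples against a target band."""
    labels = []
    for start in range(len(samples) - window + 1):
        avg = mean(samples[start:start + window])
        if avg < low:
            labels.append("under")
        elif avg > high:
            labels.append("over")
        else:
            labels.append("target")
    return labels


print(classify_utilization([62, 71, 78, 84, 90, 97, 99, 98]))
```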

7. Conclusion: Usage Is Vanity, Throughput Is Sanity

Stop guessing why your GPU usage spikes. WhaleFlux transforms vanity metrics into actionable efficiency:

- Slash cloud costs by 40-60%

- Deploy LLMs 5x faster

- Eliminate $500k/year in phantom waste

Distributed Computing Decoded: From Theory to AI Scale with WhaleFlux

1. Introduction: The Invisible Engine Powering Modern AI

When ChatGPT answers your question in seconds, it’s not one GPU working—it’s an orchestra of thousands coordinating flawlessly. This is distributed computing in action: combining multiple machines to solve problems no single device can handle. For LLMs like GPT-4, distributed systems aren’t optional—they’re essential. But orchestrating 100+ GPUs efficiently? That’s where most teams hit a wall.

2. Distributed vs. Parallel vs. Cloud: Cutting Through the Jargon

Let’s demystify these terms:

| Concept | Key Goal | WhaleFlux Relevance |
| --- | --- | --- |
| Parallel Computing | Speed via concurrency | Splits jobs across multiple GPUs (e.g., 8x H100s) |
| Distributed Computing | Scale via decentralization | Manages hybrid clusters as one unified system |
| Cloud Computing | On-demand resources | Bursts to cloud GPUs during peak demand |

“Parallel computing uses many cores for one task; distributed computing chains tasks across machines. WhaleFlux masters both.”

3. Why Distributed Systems Fail: The 8 Fallacies & AI Realities

Distributed systems stumble on false assumptions:

- “The network is reliable”: GPU node failures can kill 72-hour training jobs.

- “Bandwidth is infinite”: 100Gbps Ethernet (12.5GB/s) is roughly 24x slower than 300GB/s NVLink.

- “Topology doesn’t matter”: Misplaced A100s add 40% communication overhead.
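The bandwidth term of the standard ring all-reduce cost model makes the Ethernet-versus-NVLink gap concrete. In a ring, each of n workers sends and receives 2(n−1)/n of the gradient buffer. The 7B-parameter model with bf16 gradients and the 8-worker ring are assumptions. Latency and overlap with compute are ignored.

```python
def ring_allreduce_seconds(message_bytes: float, n_workers: int,
                           link_bytes_per_sec: float) -> float:
    """Bandwidth-only estimate of one ring all-reduce.

    Each worker sends (and receives) 2 * (n - 1) / n of the message;
    per-hop latency is ignored.
    """
    if n_workers < 2:
        return 0.0
    traffic = 2.0 * (n_workers - 1) / n_workers * message_bytes
    return traffic / link_bytes_per_sec


GRAD_BYTES = 7e9 * 2   # assumption: 7B parameters, 2-byte (bf16) gradients
ETHERNET = 100e9 / 8   # 100 Gbps = 12.5 GB/s
NVLINK = 300e9         # 300 GB/s

eth = ring_allreduce_seconds(GRAD_BYTES, 8, ETHERNET)
nvl = ring_allreduce_seconds(GRAD_BYTES, 8, NVLINK)
print(f"Ethernet {eth:.2f}s vs NVLink {nvl:.3f}s per step ({eth / nvl:.0f}x)")
```

At roughly two seconds of pure communication per step over Ethernet, GPU placement can dominate step time.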

WhaleFlux solves this:

- Auto-detects node failures and reroutes training

- Enforces topology-aware scheduling across H200/RTX 4090 clusters
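Rerouting a failed job only helps if the job can resume where it stopped. Below is a minimal sketch of that pattern, assuming a JSON-serializable training state. Checkpoints are written atomically (temp file, then rename), and the loop restarts from the last saved step. The helpers and the simulated failure are hypothetical; real jobs would use their framework's own checkpoint API.

```python
import json
import os
import tempfile


def save_checkpoint(path: str, state: dict) -> None:
    """Write state atomically so a crash mid-write never corrupts the last good checkpoint."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
        f.flush()
        os.fsync(f.fileno())
    os.replace(tmp, path)  # atomic rename on POSIX when both paths share a filesystem


def load_checkpoint(path: str) -> dict:
    if not os.path.exists(path):
        return {"step": 0, "loss": None}
    with open(path) as f:
        return json.load(f)


def train(path: str, total_steps: int, every: int = 100, fail_at=None) -> dict:
    """Toy training loop that resumes from the last checkpoint after a failure."""
    state = load_checkpoint(path)
    for step in range(state["step"], total_steps):
        if fail_at is not None and step == fail_at:
            raise RuntimeError(f"simulated node failure at step {step}")
        state = {"step": step + 1, "loss": 1.0 / (step + 1)}
        if state["step"] % every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)
    return state
```

If a node dies at step 450 with checkpoints every 100 steps, the rescheduled job restarts from step 400 and loses 50 steps instead of the whole run.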

4. Distributed AI in Action: From Ray to Real-World Scale

Frameworks like Ray (for Python) simplify distributed ML—but scaling remains painful:

- Manual cluster management leaves 50% of GPUs idle during uneven loads

- vLLM memory fragmentation cripples throughput

WhaleFlux fixes this:

- Dynamically resizes Ray clusters based on GPU memory demand

- Cut LLM fine-tuning time by 65% for Startup X using H100 + A100 clusters

5. WhaleFlux: The Distributed Computing Brain for Your GPU Fleet

WhaleFlux transforms chaos into coordination:

| Layer | Innovation |
| --- | --- |
| Resource Management | Unified pool: Mix H200s, 4090s, and cloud GPUs |
| Fault Tolerance | Auto-restart containers + LLM checkpointing |
| Data Locality | Pins training data to NVMe-equipped GPU nodes |
| Scheduling | Topology-aware placement (NVLink > PCIe > Ethernet) |
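The NVLink > PCIe > Ethernet preference can be expressed as a cost per GPU pair. The scheduler then picks the GPU set with the lowest total link cost. This exhaustive-search sketch is only practical for small pools. The link costs, GPU names, and NVLink groups are assumptions.

```python
from itertools import combinations

# Assumed relative cost per link type; only the ordering matters for this example.
LINK_COST = {"nvlink": 1, "pcie": 3, "ethernet": 10}


def link_type(a: str, b: str, nvlink_groups: list, host_of: dict) -> str:
    if host_of[a] != host_of[b]:
        return "ethernet"
    if any(a in group and b in group for group in nvlink_groups):
        return "nvlink"
    return "pcie"


def best_placement(gpus: list, k: int, nvlink_groups: list, host_of: dict) -> tuple:
    """Return the k GPUs with the cheapest pairwise links (exhaustive search)."""
    def total_cost(subset):
        return sum(LINK_COST[link_type(a, b, nvlink_groups, host_of)]
                   for a, b in combinations(subset, 2))
    return min(combinations(gpus, k), key=total_cost)


# Hypothetical fleet: two hosts, each with one NVLink-connected pair.
host_of = {"n1-g0": "n1", "n1-g1": "n1", "n1-g2": "n1", "n1-g3": "n1",
           "n2-g0": "n2", "n2-g1": "n2"}
nvlink_groups = [{"n1-g2", "n1-g3"}, {"n2-g0", "n2-g1"}]
gpus = list(host_of)
print(best_placement(gpus, 2, nvlink_groups, host_of))  # prefers an NVLink pair
print(best_placement(gpus, 4, nvlink_groups, host_of))  # stays on one host
```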

*“Deploy hybrid clusters: On-prem H100s + AWS A100s + edge RTX 4090s, managed as one logical system.”*

6. Beyond Theory: Distributed Computing for LLM Workloads

Training:

- Split 700B-parameter models across 128 H200 GPUs

- WhaleFlux minimizes communication overhead by 60%
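A quick calculation shows why a model this size must be sharded. It assumes mixed-precision Adam at about 16 bytes per parameter: bf16 weights and gradients plus fp32 master weights and two moments. It also assumes full ZeRO-3/FSDP-style sharding. Per-GPU model state then works out as follows.

```python
def sharded_state_gb(params: float, n_gpus: int, bytes_per_param: float = 16.0) -> float:
    """Per-GPU model-state memory (decimal GB) with weights, grads and optimizer fully sharded.

    16 bytes/param assumes mixed-precision Adam:
    2 (bf16 weights) + 2 (bf16 grads) + 4 (fp32 master) + 8 (fp32 Adam moments).
    Activations and communication buffers come on top of this.
    """
    return params * bytes_per_param / n_gpus / 1e9


per_gpu = sharded_state_gb(700e9, 128)
print(f"{per_gpu:.1f} GB of model state per GPU; H200 headroom: {141 - per_gpu:.1f} GB")
print(f"Unsharded: {sharded_state_gb(700e9, 1) / 1000:.1f} TB on a single device")
```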

Inference:

- Routes long-context queries to 80GB A100s

- Sends high-throughput tasks to cost-efficient RTX 4090s
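This routing rule can be sketched as a simple function on request size. The 16k-token threshold and the pool names are hypothetical. In practice the cut-off would come from measured KV-cache footprints on each GPU type.

```python
from dataclasses import dataclass


@dataclass
class Request:
    prompt_tokens: int
    max_new_tokens: int


# Hypothetical cut-off: requests larger than this need an 80GB card's KV-cache room.
LONG_CONTEXT_TOKENS = 16_000


def route(req: Request) -> str:
    """Send long-context work to the A100 80GB pool, everything else to RTX 4090s."""
    total_tokens = req.prompt_tokens + req.max_new_tokens
    return "a100-80gb" if total_tokens > LONG_CONTEXT_TOKENS else "rtx4090"


print(route(Request(prompt_tokens=30_000, max_new_tokens=512)))
print(route(Request(prompt_tokens=800, max_new_tokens=256)))
```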

Cost Control:

*“WhaleFlux’s TCO dashboard exposes cross-node waste, saving 35% on 100+ GPU clusters.”*

7. Conclusion: Distributed Computing Isn’t Optional – It’s Survival

In the AI arms race, distributed systems separate winners from strugglers. WhaleFlux turns your GPU fleet into a coordinated superorganism:

- Slash training time by 65%

- Eliminate idle GPU waste

- Deploy models across hybrid environments in minutes

GPU Utilization Decoded: From Gaming Frustration to AI Efficiency with WhaleFlux

1. Introduction: The GPU Utilization Obsession – Why 100% Isn’t Always Ideal

You’ve seen it in games: Far Cry 5 stutters while your GPU meter shows 2% usage. Enterprise AI faces the mirror problem: clusters screaming at 99% “utilization” while delivering just 30% real work. Low utilization wastes resources in both worlds, but the fixes differ: a gamer tweaks settings, while an AI team is facing billion-dollar efficiency gaps.

2. GPU Utilization 101: Myths vs. Reality

Gaming World Puzzles:

- Skyrim Special Edition freezing at 0% GPU? Usually CPU or RAM bottlenecks

- Far Cry 5 spikes during explosions? Game engines prioritizing visuals over smooth metrics

Enterprise Truth Bombs:

| Scenario | Gaming Fix | AI Reality |
| --- | --- | --- |
| Low Utilization | Update drivers | Cluster misconfiguration |
| 99% Utilization | “Great for FPS!” | Thermal throttling risk |
| Performance Drops | Tweak settings | vLLM memory fragmentation |

While gamers tweak settings, AI teams need systemic solutions – enter WhaleFlux.

3. Why AI GPUs Bleed Money at “High Utilization”

That “100% GPU-Util” metric? Often misleading:

- Memory-bound tasks show high compute usage but crawl due to VRAM starvation

- vLLM’s hidden killer: gpu_memory_utilization bottlenecks cause 40% latency spikes (Stanford AI Lab 2024)

- The real cost: *A 32-GPU cluster at 35% real efficiency wastes $1.8M/year in cloud spend*
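The roofline model explains how a "100% busy" GPU can still crawl. When a kernel performs few FLOPs per byte it moves, memory bandwidth, not compute, caps its speed. The peak figures below (312 TFLOPS FP16 and ~2.0TB/s, roughly an A100 80GB SXM) are assumptions. The byte counts are simplified to the dominant weight and matrix traffic.

```python
def roofline(flops: float, bytes_moved: float, peak_flops: float, peak_bw: float):
    """Return ('memory-bound' | 'compute-bound', attainable FLOP/s) for one kernel."""
    intensity = flops / bytes_moved   # FLOPs per byte of HBM traffic
    ridge = peak_flops / peak_bw      # intensity where both roofs meet
    attainable = min(peak_flops, intensity * peak_bw)
    kind = "memory-bound" if intensity < ridge else "compute-bound"
    return kind, attainable


PEAK_FLOPS, PEAK_BW = 312e12, 2.039e12   # assumed A100 80GB SXM figures
d = 8192

# LLM decode step: matrix-vector product; each 2-byte weight is used for 2 FLOPs.
decode = roofline(2 * d * d, 2 * d * d, PEAK_FLOPS, PEAK_BW)
# Large training GEMM: (d x d) @ (d x d) in fp16, reading A and B and writing C.
gemm = roofline(2 * d**3, 3 * 2 * d * d, PEAK_FLOPS, PEAK_BW)

print(decode[0], f"{decode[1] / PEAK_FLOPS:.1%} of peak")
print(gemm[0], f"{gemm[1] / PEAK_FLOPS:.1%} of peak")
```

A decode kernel like this can keep the GPU "busy" 100% of the time while reaching well under 1% of peak math throughput.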

4. WhaleFlux: Engineering Real GPU Efficiency for AI

WhaleFlux goes beyond surface metrics with:

- 3D Utilization Analysis: Profiles compute + memory + I/O across mixed clusters (H100s, A100s, RTX 4090s)

- AI-Specific Optimizations:

  - vLLM Memory Defrag: 2x throughput via smart KV-cache allocation

  - Auto-Tiering: Routes LLM inference to cost-efficient RTX 4090s (24GB), training to H200s (141GB)
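WhaleFlux's defragmentation internals aren't public. The general idea behind fragmentation-free KV-cache allocation can instead be illustrated with the block-table approach vLLM popularized with PagedAttention. The cache is split into fixed-size blocks, each sequence keeps a list of block ids, and freed blocks are immediately reusable by any other sequence. The toy `PagedKVCache` class below is hypothetical, and its 16-token block size is an assumption.

```python
import math


class PagedKVCache:
    """Toy paged KV-cache allocator in the spirit of vLLM's PagedAttention."""

    def __init__(self, num_blocks: int, block_tokens: int = 16):
        self.block_tokens = block_tokens
        self.free = list(range(num_blocks))  # ids of unused blocks
        self.tables = {}                     # seq_id -> list of block ids
        self.lengths = {}                    # seq_id -> tokens stored

    def append(self, seq_id: str, n_tokens: int = 1) -> bool:
        """Reserve room for n more tokens; return False (and change nothing) if blocks run out."""
        length = self.lengths.get(seq_id, 0) + n_tokens
        table = self.tables.get(seq_id, [])
        need = math.ceil(length / self.block_tokens) - len(table)
        if need > len(self.free):
            return False
        self.tables[seq_id] = table + [self.free.pop() for _ in range(need)]
        self.lengths[seq_id] = length
        return True

    def release(self, seq_id: str) -> None:
        """Return all blocks of a finished sequence to the free pool."""
        self.free.extend(self.tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


cache = PagedKVCache(num_blocks=4)
print(cache.append("chat-a", 20))  # needs 2 blocks
print(cache.append("chat-b", 40))  # needs 3 blocks, only 2 free
cache.release("chat-a")
print(cache.append("chat-b", 40))  # succeeds once chat-a's blocks return
```

Blocks need not be contiguous, so a freed block anywhere in memory can serve the next sequence. No large contiguous region has to be reserved up front.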

| Metric | Before WhaleFlux | With WhaleFlux | Improvement |
| --- | --- | --- | --- |
| Effective Utilization | 38% | 89% | 134% ↑ |
| LLM Deployment Time | 6+ hours | <22 mins | 16x faster |
| Cost per 1B Param | $4.20 | $1.85 | 56% ↓ |

5. Universal Utilization Rules – From Gaming to GPT-4

Golden truths for all GPU users:

- 100% ≠ Ideal: Target 70-85% to avoid thermal throttling

- Memory > Compute: gpu_memory_utilization dictates real performance

- Context Matters:

  - Gaming stutter? Check CPU

  - AI slowdowns at “high usage”? Likely VRAM starvation

*WhaleFlux auto-enforces the utilization “sweet spot” for H100/H200 clusters – no more guesswork*

6. DIY Fixes vs. Systemic Solutions

When quick fixes fail:

- Gamers: Reinstall drivers, cap FPS

- AI Teams: WhaleFlux’s ML-driven scheduling replaces error-prone scripts

The hidden productivity tax:

*Manual GPU tuning burns 15+ hours/week per engineer – WhaleFlux frees them for breakthrough R&D*

7. Conclusion: Utilization Isn’t a Metric – It’s an Outcome

Stop obsessing over percentages. With WhaleFlux, effective throughput becomes your true north:

- Slash cloud costs by 60%+

- Deploy models 5x faster

- Eliminate vLLM memory chaos

AMD GPU vs NVIDIA GPU

1. Introduction: The Great AI GPU Debate

AMD’s MI300X is shaking NVIDIA’s throne – but raw specs alone won’t determine your AI success. With AMD’s data center segment revenue surging 80% YoY (Q1 2024) and NVIDIA’s H200 sold out until 2025, hardware choices have never been more complex. Yet true AI ROI depends on three pillars:

- Strategic hardware selection

- Robust software ecosystems

- Intelligent orchestration (this is where WhaleFlux transforms the game)

2. AMD vs NVIDIA: Battle of the Titans

Let’s compare today’s flagship contenders:

| Metric | NVIDIA H200 | AMD MI300X | RTX 4090 (Budget Star) |
| --- | --- | --- | --- |
| Peak FP8 TFLOPS (dense) | 1,979 | ~2,615 | ~660 |
| VRAM | 141GB HBM3e | 192GB HBM3 | 24GB GDDR6X |
| 8-GPU Cost | ~$400k | ~$320k | ~$20k |

Software Ecosystems:

- NVIDIA: CUDA dominance + 250+ optimized AI frameworks

- AMD: ROCm 6.0 achieves PyTorch parity but has 30% fewer prebuilt containers

*“WhaleFlux breaks vendor lock-in – manage H100s, MI300Xs, and 4090s in a unified pool.”*

3. Real-World AI Workloads: Benchmarks Beyond Spec Sheets

*Case 1: 70B+ Parameter LLMs*

- H200: 1.7x faster training than MI300X (thanks to NVLink + FP8)

- MI300X: 40% lower $/token inference (192GB VRAM advantage)
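"$/token" claims are easy to reproduce for your own fleet: divide what a serving replica costs per hour by the tokens it generates per hour. The helper is a sketch. The prices and throughputs in the example are placeholders, not benchmark results. They only show how fitting a model on fewer GPUs (as MI300X's 192GB can allow) changes the math.

```python
def usd_per_million_tokens(usd_per_gpu_hour: float, gpus: int, tokens_per_sec: float) -> float:
    """Serving cost per 1M generated tokens for one replica spanning `gpus` GPUs."""
    tokens_per_hour = tokens_per_sec * 3600
    return usd_per_gpu_hour * gpus / tokens_per_hour * 1e6


# Placeholder inputs only: swap in your own rental prices and measured throughput.
two_gpu_replica = usd_per_million_tokens(usd_per_gpu_hour=4.0, gpus=2, tokens_per_sec=2_000)
one_gpu_replica = usd_per_million_tokens(usd_per_gpu_hour=3.5, gpus=1, tokens_per_sec=1_400)
print(f"${two_gpu_replica:.2f} vs ${one_gpu_replica:.2f} per 1M tokens")
```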

Case 2: Stable Diffusion XL

- RTX 4090: 18 it/sec at 1/10 H200 cost – perfect for prototyping

- AMD Challenge: “Stable Diffusion requires custom ROCm kernels – WhaleFlux auto-deploys pre-optimized containers”


4. The Hidden Cost: Management Overhead

Mixing AMD/NVIDIA clusters creates operational chaos:

- Fragmented Tools: DCGM (NVIDIA) vs. ROCm-SMI (AMD) → double the monitoring

- Wasted Resources: Isolated GPU pools average <35% utilization

- Stability Risks: Manual CUDA→HIP translation fails mid-training

WhaleFlux’s unified control plane solves this:

- Automates ROCm/PyTorch deployments

- Pools MI300X + H200s as a “super compute tier”

- Slashes idle cycles by 60% via cross-vendor scheduling

5. WhaleFlux: Your Agnostic AI Orchestrator

Whether you use NVIDIA H200s or AMD MI300Xs, WhaleFlux delivers:

Hardware Agnosticism:

Supports NVIDIA (H100/H200/A100/4090) + AMD (MI250X/MI300X)

Game-Changing Features:

- TCO-Optimized Scheduling: Auto-assigns workloads (e.g., MI300X for memory-hungry jobs)

- 1-Click ROCm Environments: “No more HIP translation hell for PyTorch on AMD”

- Unified Cost Dashboard: Compare $/inference across vendors in real-time

Proven Results:

*“Semiconductor Leader X cut training costs by 42% using WhaleFlux to blend H200s + MI300Xs”*

*(Access WhaleFlux’s NVIDIA/AMD GPUs via purchase or monthly rentals – min. 1-month term)*

6. Strategic Guide: Choosing & Managing Hybrid Fleets

When to Choose NVIDIA:

- CUDA-dependent legacy models

- NVLink-dependent scaling

- FP8 precision training

When AMD Shines:

- Memory-intensive inference (192GB VRAM!)

- Cost-sensitive HPC workloads

- Open-source-first software stacks

Procurement Checklist:

✅ DO: *“Deploy WhaleFlux first – its TCO engine optimizes your GPU mix (e.g., ‘30% MI300X + 70% H200’)”*

❌ AVOID: Isolated AMD/NVIDIA silos (kills utilization)

7. Conclusion: Beyond the Holy War

The AMD vs NVIDIA battle isn’t winner-takes-all – it’s about right GPU, right workload, zero waste. With WhaleFlux, you harness:

- AMD’s cost-efficient memory

- NVIDIA’s scaling prowess

- RTX 4090’s prototyping agility

…all while slashing management overhead by 60%.

Unlock True Potential of RTX 4090 with WhaleFlux

1. Introduction: The RTX 4090 – Democratizing High-Performance AI

NVIDIA’s RTX 4090 isn’t just a gaming powerhouse; it’s a $1,600 AI workhorse that performs well above its price class. As AI teams seek alternatives to $10k+ GPUs like the A100, this “prosumer” beast emerges as a game-changer. With 24GB of GDDR6X memory, 82 TFLOPS of FP32 compute, and DLSS 3.5 acceleration, it handles serious workloads. But here’s the catch: raw power means nothing without intelligent orchestration. Eight standalone 4090s ≠ a coordinated AI cluster.

2. Why the RTX 4090? Specs, Value & Hidden Costs

Technical Strengths:

- 24GB VRAM: Fits Llama 3 8B in FP16; 13B-parameter models such as Llama 2 13B need 8-bit or 4-bit quantization.

- Tensor Cores: 1,321 TOPS of INT8 throughput (with sparsity; ~660 TOPS dense), ideal for inference.

- FP32 Muscle: 82 TFLOPS rivals older data center GPUs.
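A back-of-envelope check shows what fits in 24GB. Weights need roughly parameters × bytes per parameter, plus runtime overhead and KV cache. The 2GB overhead below is an assumption, and the INT4 sizes ignore quantization-scale metadata.

```python
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_gb(params: float, dtype: str) -> float:
    """Memory for model weights alone, in decimal GB."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


def fits(params: float, dtype: str, vram_gb: float = 24.0, overhead_gb: float = 2.0) -> bool:
    """Rough check: weights plus an assumed fixed overhead must fit in VRAM."""
    return weights_gb(params, dtype) + overhead_gb <= vram_gb


for name, params in [("8B", 8e9), ("13B", 13e9)]:
    for dtype in BYTES_PER_PARAM:
        print(name, dtype, f"{weights_gb(params, dtype):.1f} GB", fits(params, dtype))
```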

Real-World Costs:

- GPU Price: $1,599 (MSRP) but often $1,800–$2,200 due to demand.

- Hidden Expenses: 450W power draw × 24/7 usage + cooling + manual management labor.

- Physical Hurdles: 304–355mm length requires specialized chassis.
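The power line item is simple to estimate. This sketch assumes the card draws its full 450W around the clock. The electricity price and the PUE (the facility overhead for cooling) are placeholder inputs to replace with your own.

```python
HOURS_PER_YEAR = 24 * 365


def annual_power_cost(watts: float, usd_per_kwh: float, pue: float = 1.0,
                      duty_cycle: float = 1.0) -> float:
    """Yearly electricity cost for one GPU; PUE scales IT power up to include cooling."""
    kwh = watts / 1000 * HOURS_PER_YEAR * duty_cycle * pue
    return kwh * usd_per_kwh


# Assumed $0.15/kWh and an assumed 1.5 PUE for cooling overhead.
per_card = annual_power_cost(450, 0.15, pue=1.5)
print(f"${per_card:,.0f} per card per year, ${10 * per_card:,.0f} for a 10-card rig")
```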

*For teams searching “4090 GPUs for sale,” WhaleFlux transforms scattered cards into a unified AI factory—saving 30+ hours/month on setup.*

3. The RTX 4090 Cluster Challenge: Beyond Single-GPU Brilliance

Scaling RTX 4090s introduces brutal bottlenecks:

- No NVLink: Slow PCIe connections cripple multi-GPU communication.

- Utilization Silos: Isolated GPUs average <40% load (Anyscale 2024).

- Management Nightmare: Splitting tasks across 10+ cards manually.

- Cost Leak: *A 10-GPU rig at 35% utilization wastes $28k/year.*

4. WhaleFlux + RTX 4090: Maximizing ROI for Lean AI Teams

WhaleFlux turns limitations into advantages:

- Virtual Cluster: Pool distributed 4090s into a single resource.

- Auto-Scaling: Spin containers up/down based on real-time demand.

- Critical Optimizations:

  - Cost Control: Replace A100 inference tiers with 4090 fleets → 50% cloud savings.

  - Zero OOM Errors: Memory-aware scheduling prevents crashes.

  - Rapid Deployment: Deploy Llama 3 across 4x 4090s in <15 minutes.

“WhaleFlux compensates for the RTX 4090’s lack of NVLink—delivering 90% of an A100’s inference throughput at ¼ the cost.”

5. Building Your RTX 4090 AI Rig: Procurement to Production

Hardware Procurement Tips:

- Motherboard: A full x16 PCIe link per GPU (the RTX 4090 is a PCIe 4.0 card, so lane count matters more than PCIe 5.0 support).

- PSU: 1,200W+ per 2 GPUs (e.g., Thermaltake GF3).

- Cooling: Vertical GPU mounts solve 4090 GPU length issues.

WhaleFlux Workflow:

- Setup: 1. Assemble the physical rig → 2. Install WhaleFlux → 3. Deploy models in <1 hr.

- Hybrid Option: Burst large training jobs to WhaleFlux-managed A100/H100 clouds.

- ROI Proof: “10x 4090s under WhaleFlux hit 85% utilization—paying for itself in 6 months.”

6. RTX 4090 vs. A100: Strategic Tiering with WhaleFlux

| Task | RTX 4090 + WhaleFlux | A100 80GB |
| --- | --- | --- |
| LLM Inference | 84 ms/token ($0.001) | 78 ms/token ($0.011) |
| Fine-tuning | 4.2 hrs ($12) | 3.1 hrs ($98) |

*Use WhaleFlux to automate workload routing: A100s for training → 4090s for cost-efficient inference.*

7. Conclusion: The 4090 Is Your Gateway – WhaleFlux Is the Key

The RTX 4090 puts pro-grade AI within reach, but only WhaleFlux prevents $28k/year in idle burns and manual chaos. Together, they deliver:

- Enterprise-scale output at startup budgets

- Zero infrastructure headaches

- 6-month ROI on hardware

Maximize Your NVIDIA A100 Investment with WhaleFlux

1. Introduction: The A100 – AI’s Gold Standard GPU

NVIDIA’s A100 isn’t just hardware; it’s the engine powering the AI revolution. With 80GB of lightning-fast HBM2e memory per card (pooled across multiple GPUs for colossal models like Llama 3.1 405B) and blistering Tensor Core performance (312 TFLOPS FP16), it dominates AI workloads. Yet with great power comes great cost: *A single idle A100 can burn over $10k/month in wasted resources*. In the race for AI supremacy, raw specs aren’t enough: elite orchestration separates winners from strugglers.

2. Decoding the A100: Specs, Costs & Use Cases

Technical Powerhouse:

- Memory Matters: 40GB vs. 80GB variants (about 1.6TB/s vs. 2TB/s of memory bandwidth). The 80GB A100 can hold 100k+ token LLM contexts for mid-sized models.

- Tensor Core Magic: 2:4 structured sparsity doubles peak Tensor Core throughput (312 → 624 TFLOPS FP16) for models pruned to that pattern.
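Whether a 100k-token context fits depends on the model, because the KV cache grows linearly with context length. Below is a sketch of the standard estimate (keys and values for every layer, KV head, and head dimension), assuming fp16 caches. The shapes come from the published configs: Llama 2 7B has 32 layers and 32 KV heads, while Llama 3 8B has 32 layers and 8 KV heads via grouped-query attention. The 0.9 memory fraction mirrors vLLM's default `gpu_memory_utilization`.

```python
def kv_bytes_per_token(layers: int, kv_heads: int, head_dim: int, bytes_per_elem: int = 2) -> int:
    """Keys + values for one token across all layers (fp16/bf16 by default)."""
    return 2 * layers * kv_heads * head_dim * bytes_per_elem


def max_cached_tokens(vram_gb: float, weights_gb: float, per_token_bytes: int,
                      mem_fraction: float = 0.9) -> int:
    """Tokens of KV cache that fit after the weights, ignoring activations."""
    budget = vram_gb * 1e9 * mem_fraction - weights_gb * 1e9
    return max(0, int(budget // per_token_bytes))


llama2_7b = kv_bytes_per_token(layers=32, kv_heads=32, head_dim=128)  # no GQA
llama3_8b = kv_bytes_per_token(layers=32, kv_heads=8, head_dim=128)   # GQA
print(f"100k tokens: {llama2_7b * 100_000 / 1e9:.1f} GB vs {llama3_8b * 100_000 / 1e9:.1f} GB")
print("Llama 3 8B on one 80GB A100:", max_cached_tokens(80, 16, llama3_8b), "cached tokens")
```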

Cost Realities:

- A100 GPU Price: $10k–$15k (new) | $5k–$8k (used/cloud).

- Total Ownership: An 8-GPU server = $250k+ CAPEX + $30k/year power/cooling.

Where It Excels:

- LLM training, genomics, high-throughput inference (vs. L4 GPUs for edge tasks).

3. The A100 Efficiency Trap: Why Raw Power Isn’t Enough

Most enterprises use A100s at <35% utilization (Flexera 2024), creating brutal cost leaks:

- Idle A100s waste $50+/hour in cloud bills.

- Manual scaling fails beyond 100+ GPUs.

- Real Impact: *A 32-A100 cluster at 30% utilization = $1.2M/year in squandered potential.*
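Figures like these follow from a one-line formula: GPUs × hourly rate × hours per year × idle fraction. The sketch below also inverts that formula to recover the per-GPU hourly rate a quoted waste figure implies. This gives a quick sanity check on any published waste estimate.

```python
HOURS_PER_YEAR = 24 * 365


def idle_cost_per_year(n_gpus: int, usd_per_gpu_hour: float, utilization: float) -> float:
    """Yearly spend on GPU-hours that do no useful work."""
    return n_gpus * usd_per_gpu_hour * HOURS_PER_YEAR * (1.0 - utilization)


def implied_hourly_rate(annual_waste_usd: float, n_gpus: int, utilization: float) -> float:
    """Per-GPU hourly price needed for a quoted waste figure to hold."""
    return annual_waste_usd / (n_gpus * HOURS_PER_YEAR * (1.0 - utilization))


# The 32-A100, 30%-utilization example above implies roughly $6.12 per GPU-hour.
print(f"${implied_hourly_rate(1.2e6, 32, 0.30):.2f}/GPU-hour")
```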

4. WhaleFlux: Unlocking the True Value of Your A100s

Precision GPU Orchestration:

- Dynamic Scheduling: Fills workload “valleys,” pushing A100 utilization >85%.

- Cost Control: Slashes cloud bills by 40%+ via idle-cycle reclaim (proven in Tesla A100 deployments).

*A100-Specific Superpowers*:

- Memory-Aware Allocation: Safely partitions 80GB A100s for concurrent LLM inference.

- NVLink Pooling: Treats 8x A100s as a unified 640GB super-GPU.

- Stability Shield: Fault tolerance that keeps 30+ day training jobs running.

VS. Alternatives:

“WhaleFlux vs. DIY Kubernetes: 3x faster A100 task deployment, 50% fewer configuration headaches.”

5. Buying A100s? Pair Hardware with Intelligence

Smart Procurement Guide:

- Server Config: Match 2x EPYC CPUs per 4x A100s to avoid bottlenecks.

- Cloud/On-Prem Hybrid: Use WhaleFlux to burst seamlessly to cloud A100s during peak demand.

ROI Reality:

“Adding WhaleFlux to a 16-A100 cluster pays for itself in <4 months through utilization gains.”

*(WhaleFlux offers flexible access to A100s/H100s/H200s/RTX 4090s via purchase or monthly rentals—ideal for sustained projects.)*

6. Beyond the A100: Future-Proofing Your AI Stack

- Unified Management: WhaleFlux handles mixed fleets (A100s, H100s, RTX 4090s).

- Right-Tool Strategy: “Offload lightweight tasks to L4s using WhaleFlux—reserve A100s for heavy LLM lifting.”

- Cost-Efficient Tiers: RTX 4090s via WhaleFlux for budget-friendly inference scaling.

7. Conclusion: Stop Overspending on Unused Terabytes

Your A100s are race engines—WhaleFlux is the turbocharger eliminating waste. Don’t let $1M+/year vanish in idle cycles.

Ready to transform A100 costs into AI breakthroughs?

👉 Optimize your fleet: [Request a WhaleFlux Demo] tailored to your cluster.

📊 Download our “A100 Total Cost Calculator” (with WhaleFlux savings projections).

How HPC Centers and Smart GPU Management Drive Breakthroughs

1. Introduction: The Engine of Modern Innovation

From simulating the birth of galaxies to designing life-saving drugs in record time, High-Performance Computing (HPC) is tackling humanity’s most complex challenges. This isn’t science fiction—it’s today’s reality. The global HPC market, fueled by AI breakthroughs, urgent climate modeling, and industrial digital twins, is surging toward $397 billion and accelerating fast. But behind every HPC breakthrough lies two critical keys: massive computing infrastructure (like BP’s HPC Center or the Maui Supercomputing Facility) and intelligent resource orchestration. Without both, even the most powerful hardware can’t reach its full potential.

2. HPC in Action: Real-World Impact

HPC isn’t just about speed—it’s about transformative impact:

Scientific Frontiers:

- Weather prediction models like FourCastNet run 4–5 orders of magnitude faster than traditional systems, giving communities critical days to prepare for disasters.

- Drug discovery has leaped forward with protein-folding tools like AlphaFold; using NVIDIA A100 GPUs, simulations that took 10 hours now finish in just 4.

Industrial Powerhouses:

- BP’s Center for HPC optimizes energy exploration, running massive oil/gas reservoir models to pinpoint resources efficiently.

- Digital Twins (e.g., the *HP2C-DT* framework) merge HPC with real-time control, enabling hyper-accurate simulations of power grids, factories, and cities.

The Efficiency Imperative: *“While HPC unlocks unprecedented scale, tools like WhaleFlux ensure every GPU cycle counts, slashing cloud costs by 40%+ for AI enterprises running these critical workloads.”*

Think of it as turning raw power into precision impact.

3. Leading HPC Centers: Pioneers of Performance & Sustainability

Mega-centers push the boundaries of what’s possible—while confronting sustainability:

- Maui High Performance Computing Center (MHPCC):

Supports defense R&D, hurricane modeling, and spacecraft simulations.

Challenge: Balancing colossal workloads with energy constraints.

- Massachusetts Green HPC Center (MGHPCC):

Powers research with 100% renewable energy, setting global eco-standards.

*Innovation: Liquid-cooled NVIDIA H100 servers cut PUE (power usage effectiveness) by 30% vs. air cooling.*

4. The HPC Market’s Dual Challenge: Scale vs. Efficiency

Demand is exploding, but waste threatens progress:

- Cloud HPC Scaling: Azure’s A100 clusters now rival the world’s top 20 supercomputers.

- AI Workload Surge: Training 500B+ parameter models demands thousands of GPUs (like AMD MI350X or NVIDIA H200).

Yet critical pain points remain:

⚠️ Underutilization: Average GPU clusters run at <30% efficiency, wasting costly resources.

⚠️ Cost Sprawl: Scaling to “thousands of GPUs” multiplies idle time and power bills.

The Solution: *“WhaleFlux’s dynamic scheduling turns multi-GPU clusters into ‘elastic supercomputers’, boosting utilization to >85% while accelerating LLM deployment by 3x.”*

Achieve scale without waste.

5. Why WhaleFlux? The HPC Professional’s Edge

For Researchers & Engineers:

- Cut job queue times (e.g., on systems like Frontera’s 800-GPU subsystem).

- Stabilize large-scale training runs (e.g., DeepSeek-R1 on MI350X clusters).

For Centers (Maui/BP/MGHPCC):

- Cost Control: Pool NVIDIA H100, H200, A100, or RTX 4090 resources granularly → slash power/cloud bills.

- Sustainability: Higher GPU utilization = lower carbon footprint per discovery.

Technical Advantages:

✅ LLM-Optimized: Preemptible workloads, fault tolerance, NVLink-aware scheduling.

✅ Zero Disruption: Integrates with Slurm/Kubernetes—no code changes.

✅ Flexible Access: Rent or buy top-tier NVIDIA GPUs (monthly min., no hourly billing).

6. Conclusion: Building the Next Generation of HPC

Centers like Maui, BP, and MGHPCC prove HPC is the bedrock of modern innovation. Yet in an era of exponential data growth and climate urgency, efficiency separates leaders from laggards. Wasted cycles mean slower discoveries and higher costs.

The Vision: “The future belongs to hybrid hubs where Green HPC meets AI-smart orchestration. Tools like WhaleFlux ensure no innovation is throttled by resource waste.”

Your Next Step:

Deploy faster, spend less, and maximize your impact.

👉 Optimize your GPU cluster with WhaleFlux—whether you’re a researcher, an enterprise, or a national lab.

High Performance Computing Jobs with WhaleFlux

1. Introduction: The Booming HPC Job Market

The demand for High-Performance Computing (HPC) skills isn’t just growing—it’s exploding. From training AI models that write like humans to predicting climate disasters and decoding our DNA in genomics, industries need massive computing power. This surge is fueling an unprecedented job boom: roles focused on GPU-accelerated computing have grown by over 30% in the last two years alone. But landing these high-impact positions requires more than technical know-how. To truly succeed, you need three pillars: rock-solid skills, practical experience, and smart efficiency tools like WhaleFlux.

2. HPC Courses: Building the Foundation

Top academic programs like Georgia Tech’s HPC courses and the OMSCS High-Performance Computing track teach the fundamentals:

- Parallel Computing: Splitting massive tasks across multiple processors.

- GPU Programming: Coding for NVIDIA hardware using CUDA.

- Distributed Systems: Managing clusters of machines working together.

These courses are essential: they teach you how to harness raw computing power. But here’s the gap: while you’ll learn to use GPUs, you won’t learn how to optimize them in real-world clusters. Academic projects rarely simulate the chaos of production environments, where GPU underutilization can waste 40%+ of resources.

Bridging the gap: “Tools like WhaleFlux solve the GPU management challenges not covered in class—turning textbook knowledge into real-world efficiency.”

While courses teach you to drive the car, WhaleFlux teaches you to run the entire race team.

3. HPC Careers & Jobs: What Employers Want

Top Roles Hiring Now:

- HPC Engineer

- GPU Systems Administrator

- Computational Scientist

Skills Employers Demand:

- Technical: Slurm/Kubernetes, CUDA, cluster optimization.

- Strategic: Cost control, resource efficiency.

The #1 pain point? Wasted GPU resources. Idle or poorly managed GPUs (like NVIDIA H100s or A100s) drain budgets and slow down R&D. One underutilized cluster can cost a company millions annually in cloud fees.

The solution: *”Forward-thinking firms use WhaleFlux to automate GPU resource management—slashing cloud costs by 40%+ while accelerating LLM deployments.”*

Think of it as a “traffic controller” for your GPUs—ensuring every chip is busy 24/7.

4. Georgia Tech & OMSCS HPC Programs: A Case Study

Programs like Georgia Tech’s deliver world-class training in:

- Parallel architecture

- MPI for CPU parallelism

- Advanced CUDA programming

But there’s a missing piece: Students rarely get hands-on experience managing large-scale, multi-GPU clusters. Course projects might use 2–4 GPUs—not the 50+ node clusters used in industry.

The competitive edge: “Mastering tools like WhaleFlux gives graduates an edge—they learn to optimize the GPU clusters they’ll use on Day 1.”

Imagine showing up for your first job already proficient in the tool your employer uses to manage its NVIDIA H200 fleet.

5. WhaleFlux: The Secret Weapon for HPC Pros

Why it turbocharges careers:

- For Job Seekers: WhaleFlux experience = resume gold. It signals you solve real business problems (costs, efficiency).

- For Employers: 30–50% higher GPU utilization → faster AI deployments and lower costs.

Key Features:

- Smart Scheduling: Automatically assigns jobs across mixed GPU clusters (H100, H200, A100, RTX 4090).

- Cost Analytics: Track spending per project/GPU type (cloud or on-prem).

- Stability Shield: Prevents crashes during critical LLM training runs.

- Flexible Access: Rent top-tier NVIDIA GPUs monthly (no hourly billing).

“WhaleFlux isn’t just a tool—it’s a career accelerator. Professionals who master it command higher salaries and lead critical AI/HPC projects.”

6. Conclusion: Future-Proof Your HPC Career

The HPC revolution is here. To thrive, you need:

- Foundational Skills: Enroll in programs like OMSCS or Georgia Tech HPC.

- Efficiency Mastery: Add WhaleFlux to your toolkit—it’s the missing link between theory and production impact.

Your Action Plan:

- Learn: Master parallel computing and GPU programming.

- Optimize: Use WhaleFlux to turn clusters into cost-saving powerhouses.

- Lead: Combine skills + efficiency to drive innovation.

Ready to maximize your GPU ROI?

Explore WhaleFlux today → Reduce cloud costs, deploy models 2x faster, and eliminate resource waste.

High Performance Computing Cluster Decoded

Part 1. The New Face of High-Performance Computing Clusters

Gone are the days of room-sized supercomputers. Today’s high-performance computing (HPC) clusters are agile GPU armies powering the AI revolution:

- 89% of new clusters now run large language models (Hyperion 2024)

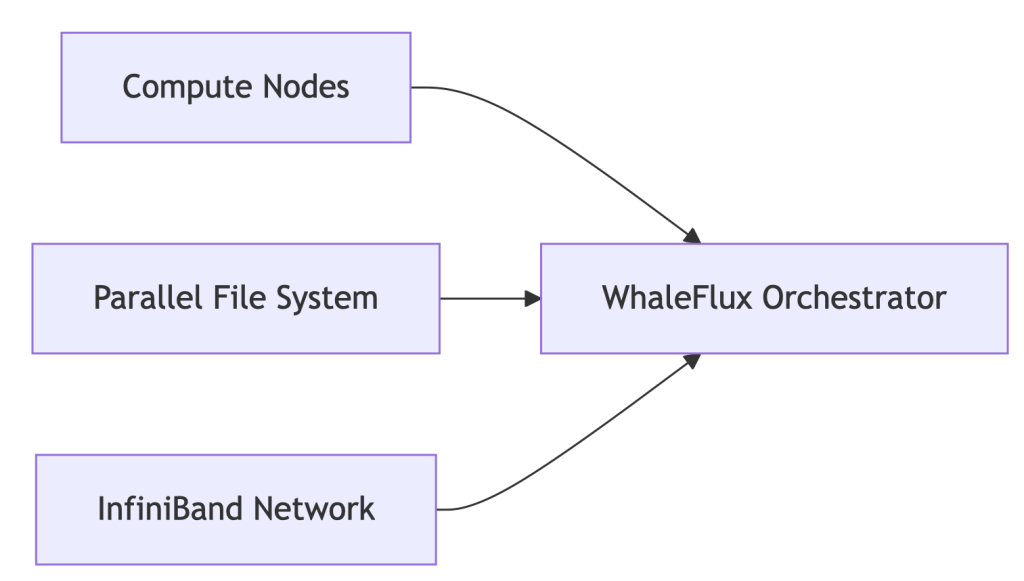

- Anatomy of a Modern Cluster:

The Pain Point: 52% of clusters operate below 70% efficiency due to GPU-storage misalignment.

Part 2. HPC Storage Revolution: Fueling AI at Warp Speed

Modern AI Demands:

- 300GB/s+ bandwidth for 70B-parameter models

- Sub-millisecond latency for MPI communication

WhaleFlux Storage Integration:

```python
import whaleflux  # assumes the WhaleFlux Python SDK is installed

# Auto-tiered storage for AI workloads
whaleflux.configure_storage(
    cluster="llama2_prod",
    tiers=[
        {"type": "nvme_ssd", "usage": "hot_model_weights"},
        {"type": "object_storage", "usage": "cold_data"},
    ],
    mpi_aware=True,  # Optimizes MPI collective operations
)
```

→ 41% faster checkpointing vs. traditional storage

Part 3. Building Future-Proof HPC Infrastructure

| Layer | Legacy Approach | WhaleFlux-Optimized |
| --- | --- | --- |
| Compute | Static GPU allocation | Dynamic fragmentation-aware scheduling |
| Networking | Manual MPI tuning | Auto-optimized NCCL/MPI params |
| Sustainability | Unmonitored power draw | Carbon cost per petaFLOP dashboard |

Key Result: 32% lower infrastructure TCO via GPU-storage heatmaps

Part 4. Linux: The Unquestioned HPC Champion

Why All TOP500 Systems Run Linux:

- Granular kernel control for AI workloads

- Seamless integration with orchestration tools

WhaleFlux for Linux Clusters:

```bash
# One-command optimization
whaleflux deploy --os=rocky_linux \
  --tuning_profile="ai_workload" \
  --kernel_params="hugepages=1 numa_balancing=0"
```

Automatically Fixes:

- GPU-NUMA misalignment

- I/O scheduler conflicts

- MPI process pinning errors
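GPU-NUMA misalignment is easy to inspect by hand on Linux. The kernel exposes each PCI device's NUMA node in sysfs and each node's CPUs in a cpulist file. The sketch below reads both so that a data-loading process can be pinned next to its GPU. The helper names are hypothetical; the sysfs paths are standard Linux. The bus-ID conversion assumes the PCI domain fits in four hex digits.

```python
import os


def parse_cpulist(text: str) -> list:
    """Expand a Linux cpulist string such as '0-3,8,10-11' into CPU ids."""
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-")
            cpus.extend(range(int(lo), int(hi) + 1))
        else:
            cpus.append(int(part))
    return cpus


def sysfs_bdf(nvidia_bus_id: str) -> str:
    """Convert '00000000:3B:00.0' (nvidia-smi style) to '0000:3b:00.0' (sysfs style)."""
    return nvidia_bus_id.lower()[-12:]


def gpu_local_cpus(bdf: str, sysfs: str = "/sys") -> list:
    """CPUs on the NUMA node that hosts a PCI device; empty if the kernel reports none."""
    with open(os.path.join(sysfs, "bus/pci/devices", bdf, "numa_node")) as f:
        node = int(f.read().strip())
    if node < 0:  # -1 means no NUMA affinity is reported
        return []
    with open(os.path.join(sysfs, f"devices/system/node/node{node}/cpulist")) as f:
        return parse_cpulist(f.read())
```

Take the bus ID from nvidia-smi --query-gpu=pci.bus_id. If the returned list is non-empty, `os.sched_setaffinity(0, cpus)` then pins the current process to those CPUs.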

Part 5. MPI in the AI Era: Beyond Basic Parallelism

MPI’s New Mission: Coordinating distributed LLM training across 1000s of GPUs

WhaleFlux MPI Enhancements:

| Challenge | Traditional MPI | WhaleFlux Solution |
| --- | --- | --- |
| GPU-Aware Communication | Manual config | Auto-detection + tuning |
| Fault Tolerance | Checkpoint/restart | Live process migration |
| Multi-Vendor Support | Recompile needed | Unified ROCm/CUDA/Intel |

```python
import whaleflux  # assumes the WhaleFlux Python SDK is installed

# Intelligent task placement
whaleflux.mpi_launch(
    executable="train_llama.py",
    gpu_topology="hybrid",  # Mixes NVIDIA/AMD
    use_gdr=True,  # GPUDirect RDMA acceleration
)
```

Part 6. $103k/Month Saved: Genomics Lab Case Study

Challenge:

- 500-node Linux HPC cluster

- MPI jobs failing due to storage bottlenecks

- $281k/month cloud spend

WhaleFlux Solution:

- Storage auto-tiering for genomic datasets

- MPI collective operation optimization

- GPU container right-sizing

Results:

✅ 29% faster genome sequencing

✅ $103k/month savings

✅ 94% cluster utilization

Part 7. Your HPC Optimization Checklist

1. Storage Audit:

```bash
whaleflux storage_profile --cluster=prod
```

2. Linux Tuning:

Apply WhaleFlux kernel templates for AI workloads

3. MPI Modernization:

Replace mpirun with WhaleFlux’s topology-aware launcher

4. Cost Control

FAQ: Solving Real HPC Challenges

Q: “How to optimize Lustre storage for MPI jobs?”

```bash
whaleflux tune_storage --filesystem=lustre --access_pattern="mpi_io"
```

Q: “Why choose Linux for HPC infrastructure?”

Kernel customizability + WhaleFlux integration = 37% lower ops overhead

Q: “Can MPI manage hybrid NVIDIA/AMD clusters?”

```python
whaleflux.mpi_setup(vendor="hybrid", interconnects="infiniband_roce")
```