Introduction

- Hook: Begin with a relatable scenario – your gaming rig’s fans are roaring, or your AI model training is slowing down unexpectedly. You check your GPU temperature, but is that number good or bad?

- Address the Core Question: Directly answer the most searched query: “What is a normal GPU temp?”

- Thesis Statement: This guide will explain normal and safe GPU temperature ranges for different activities (idle, gaming, AI compute), discuss why temperature management is crucial for performance and hardware longevity, and explore the unique thermal challenges faced by AI enterprises running multi-GPU clusters—and how to solve them.

Part 1. Defining “Normal”: GPU Temperature Ranges Explained

Context is Key:

Explain that “normal” depends on workload (idle vs. gaming vs. AI training).

The General Benchmarks:

- Normal GPU Temp While Idle: Typically 30°C to 45°C (86°F to 113°F).

- Normal GPU Temp While Gaming: Typically 65°C to 85°C (149°F to 185°F). Explain that high-end cards under full load are designed to run in this range.

- Normal GPU Temp for AI Workloads: Typically similar to the gaming range, but sustained for much longer periods (days or weeks), which makes stability and cooling even more critical.
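To make these benchmarks concrete, here is a minimal Python sketch that checks a single reading against the ranges above and converts it to Fahrenheit. The helper names are hypothetical, and treating AI workloads as the same band as gaming is an assumption taken from the "similar to gaming" guidance, not a vendor specification.

```python
# Benchmark ranges from this guide, in °C: (low, high).
# Names here are illustrative only, not a standard API.
TYPICAL_RANGES_C = {
    "idle": (30, 45),
    "gaming": (65, 85),
    "ai": (65, 85),  # assumption: same band as gaming, just sustained far longer
}

CONCERN_THRESHOLD_C = 90  # readings at or above this under load warrant attention


def c_to_f(celsius):
    """Convert Celsius to Fahrenheit."""
    return celsius * 9 / 5 + 32


def classify_temp(celsius, workload):
    """Return 'below typical', 'typical', 'above typical', or 'concern'."""
    if celsius >= CONCERN_THRESHOLD_C:
        return "concern"
    low, high = TYPICAL_RANGES_C[workload]
    if celsius < low:
        return "below typical"
    if celsius <= high:
        return "typical"
    return "above typical"


if __name__ == "__main__":
    for temp, load in [(38, "idle"), (78, "gaming"), (88, "ai"), (93, "gaming")]:
        print(f"{temp}°C ({c_to_f(temp):.0f}°F) while {load}: {classify_temp(temp, load)}")
```

A single reading only tells part of the story; "above typical" at 88°C is worth watching, but it is the sustained pattern that matters most.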

When to Worry:

Temperatures consistently above 90°C-95°C (194°F-203°F) under load are cause for concern and may indicate, or lead to, thermal throttling.
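"Consistently" is the key word: a brief spike is normal, a long run of hot readings is not. A minimal sketch of that distinction, assuming you already collect readings at a fixed interval (for example, once a minute); the function name, the 90°C threshold, and the five-sample window are illustrative choices, not standard values.

```python
def sustained_over(samples_c, threshold_c=90.0, window=5):
    """Return True if `window` consecutive samples are all above threshold_c.

    Isolated spikes reset the count, so only a continuous run of hot
    readings (e.g. 5 samples taken one minute apart) triggers an alert.
    """
    run = 0
    for temp in samples_c:
        run = run + 1 if temp > threshold_c else 0
        if run >= window:
            return True
    return False


if __name__ == "__main__":
    spiky = [85, 92, 86, 91, 84, 93, 87]  # short spikes, never sustained
    hot = [88, 91, 92, 93, 92, 94, 91]    # five readings in a row above 90°C
    print(sustained_over(spiky), sustained_over(hot))
```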

Part 2. Why GPU Temperature Matters: Performance and Longevity

- Thermal Throttling: The most immediate effect. When a GPU gets too hot, it automatically reduces its clock speed to cool down, directly hurting performance by lowering frame rates or slowing down training jobs.

- Hardware Longevity: Consistently high temperatures can degrade silicon and other components over many years, potentially shortening the card’s lifespan.

- System Stability: Extreme heat can cause sudden crashes, kernel panics, or system reboots, potentially corrupting long-running AI training sessions.
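To put a rough number on the cost of throttling, the sketch below estimates how much longer a job takes if the GPU runs at a reduced clock for its entire duration. It assumes throughput scales linearly with core clock, which is a pessimistic simplification: memory-bound workloads usually slow down less. The clock figures in the example are hypothetical.

```python
def throttled_runtime_hours(baseline_hours, normal_clock_mhz, throttled_clock_mhz):
    """Estimate job duration when the GPU runs at a lower clock the whole time.

    Assumes work per second is proportional to core clock (a rough model
    for compute-bound jobs; memory-bound jobs are usually less affected).
    """
    if normal_clock_mhz <= 0 or throttled_clock_mhz <= 0:
        raise ValueError("clock speeds must be positive")
    return baseline_hours * normal_clock_mhz / throttled_clock_mhz


if __name__ == "__main__":
    # Hypothetical: a 10-hour job whose GPU drops from 1980 MHz to 1650 MHz.
    print(throttled_runtime_hours(10, 1980, 1650))
```

Under this model, a clock drop of about 17% stretches a 10-hour job to 12 hours.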

Part 3. Factors That Influence Your GPU Temperature

- Cooling Solution: Air coolers (dual- or triple-fan) vs. liquid cooling. Blower-style coolers exhaust hot air out of the back of the case, while open-air designs release it inside the case.

- Case Airflow: Perhaps the most critical factor. A well-ventilated case with good fan intake/exhaust is vital.

- Ambient Room Temperature: You can’t cool a GPU below the room’s temperature. A hot server room means hotter GPUs.

- Workload Intensity: Ray tracing, 4K gaming, and training large neural networks push the GPU to 100% utilization, generating maximum heat.

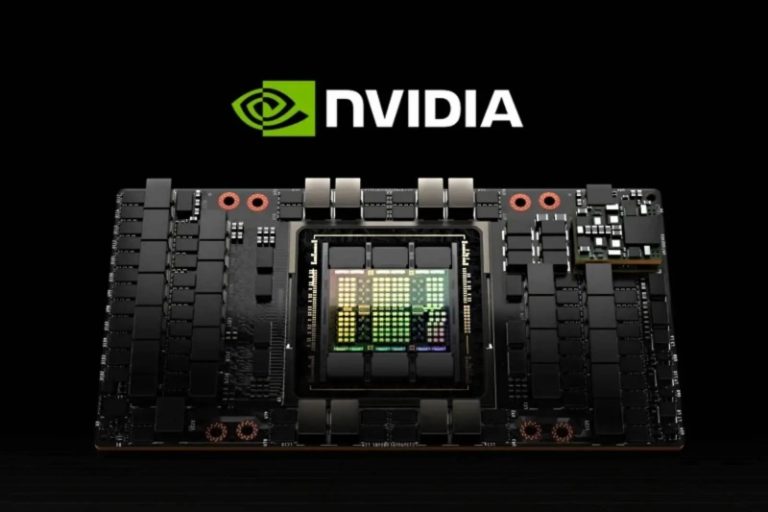

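- GPU Manufacturer and Model: High-performance data center GPUs like the NVIDIA H100 or NVIDIA H200 are designed to run reliably under immense loads sustained around the clock, and they are typically cooled by server chassis airflow or liquid cooling rather than their own fans, so their thermal behavior differs from that of a consumer NVIDIA RTX 4090.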
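A common first-order way to see how these factors combine is to treat the cooler, case, and airflow as a single thermal resistance $R_{\theta}$ (in °C per watt) between the chip and the room air, with $P$ the power the GPU dissipates. This is a simplification (real coolers ramp their fans, so $R_{\theta}$ is not constant), and the values below are hypothetical, chosen only to illustrate the arithmetic.

```latex
\begin{align*}
T_{\mathrm{GPU}} &\approx T_{\mathrm{ambient}} + R_{\theta}\,P \\
R_{\theta}\,P &= 0.15\ {}^{\circ}\mathrm{C/W} \times 350\ \mathrm{W} = 52.5\ {}^{\circ}\mathrm{C} \\
T_{\mathrm{ambient}} = 25\ {}^{\circ}\mathrm{C} &\;\Rightarrow\; T_{\mathrm{GPU}} \approx 77.5\ {}^{\circ}\mathrm{C} \\
T_{\mathrm{ambient}} = 35\ {}^{\circ}\mathrm{C} &\;\Rightarrow\; T_{\mathrm{GPU}} \approx 87.5\ {}^{\circ}\mathrm{C}
\end{align*}
```

Better cooling and airflow lower $R_{\theta}$, heavier workloads raise $P$, and because $R_{\theta}P$ is never negative, the GPU cannot end up cooler than the room. At the same power and cooling, a server room that is 10°C warmer yields a GPU that runs roughly 10°C warmer.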

Part 4. How to Monitor Your GPU Temperature

- Built-in Tools: NVIDIA’s performance overlay (Alt+R), Windows Task Manager (Performance tab), and the nvidia-smi command-line tool that ships with NVIDIA’s driver.

- Third-Party Software: Tools like HWInfo, GPU-Z, and MSI Afterburner provide detailed, real-time monitoring and logging.

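- For AI Clusters: Monitoring becomes a complex task that requires centralized, enterprise-level tooling (for example, NVIDIA Data Center GPU Manager, or DCGM) to track dozens or hundreds of GPUs across many nodes simultaneously.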
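On a single Linux or Windows machine, nvidia-smi can report temperatures in a script-friendly CSV format. The sketch below queries real nvidia-smi fields (`index`, `temperature.gpu`, `utilization.gpu`, `name`) and flags hot GPUs; the helper names and the 90°C alert rule are hypothetical. At cluster scale you would collect the same data from every node, typically through a tool like DCGM, rather than running a script on each machine by hand.

```python
import subprocess

# Real nvidia-smi query fields. "name" is last so that a comma inside a
# GPU name cannot shift the other columns.
QUERY_FIELDS = "index,temperature.gpu,utilization.gpu,name"


def _to_int(value):
    """nvidia-smi prints placeholders such as "[N/A]" for unsupported fields."""
    value = value.strip()
    return int(value) if value.isdigit() else None


def parse_nvidia_smi(csv_text):
    """Parse: nvidia-smi --query-gpu=QUERY_FIELDS --format=csv,noheader,nounits"""
    gpus = []
    for line in csv_text.strip().splitlines():
        index, temp, util, name = line.split(",", 3)
        gpus.append({
            "index": int(index),
            "temp_c": _to_int(temp),
            "util_pct": _to_int(util),
            "name": name.strip(),
        })
    return gpus


def read_local_gpus():
    """Query every GPU on this machine; requires nvidia-smi on the PATH."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_nvidia_smi(result.stdout)


def hot_gpus(gpus, threshold_c=90):
    """Return GPUs at or above the threshold (a hypothetical alert rule)."""
    return [g for g in gpus if g["temp_c"] is not None and g["temp_c"] >= threshold_c]


if __name__ == "__main__":
    # Hypothetical sample output for a two-GPU node, so this runs without a GPU.
    sample = "0, 47, 98, NVIDIA A100-SXM4-80GB\n1, 91, 100, NVIDIA A100-SXM4-80GB\n"
    for gpu in hot_gpus(parse_nvidia_smi(sample)):
        print(f"GPU {gpu['index']} ({gpu['name']}) is at {gpu['temp_c']}°C")
```

On a machine with an NVIDIA driver installed, `hot_gpus(read_local_gpus())` performs the same check against live readings.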

Part 5. The AI Enterprise’s Thermal Challenge: Managing Multi-GPU Clusters

- The Scale Problem: An AI company isn’t managing one GPU; it’s managing a cluster of high-wattage GPUs like the A100 or H100 packed tightly into server racks. The heat output is enormous.

- The Cost of Cooling: The electricity and infrastructure required for cooling become a significant operational expense.

- The Performance Risk: In synchronous distributed training, every node waits for the slowest one at each step, so thermal throttling in even one node can bottleneck the entire job and waste the potential of the whole expensive cluster.

- Lead-in to Solution: Managing this thermal load isn’t just about better fans; it’s about intelligent workload and resource management to prevent hotspots and maximize efficiency.
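The "one slow node" effect is easy to quantify. In this minimal sketch, each training step takes as long as the slowest node; it ignores communication time and any overlap between compute and communication, and the step times in the example are hypothetical.

```python
def sync_step_time(node_step_times_s):
    """In synchronous data-parallel training, every node waits for the slowest one."""
    return max(node_step_times_s)


def throughput_loss(node_step_times_s, healthy_step_time_s):
    """Fraction of cluster throughput lost versus every node running at full speed."""
    return 1 - healthy_step_time_s / sync_step_time(node_step_times_s)


if __name__ == "__main__":
    # Hypothetical 8-node job: seven healthy nodes at 1.0 s/step, one throttled at 1.25 s/step.
    times = [1.0] * 7 + [1.25]
    print(sync_step_time(times))
    print(round(throughput_loss(times, 1.0), 2))
```

Here a single node running 25% slower costs the whole cluster about 20% of its throughput, even though the other seven GPUs are perfectly healthy.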

Part 6. Beyond Cooling: Optimizing Workloads with WhaleFlux

The Smarter Approach:

“While physical cooling is essential, a more impactful solution for AI enterprises is to optimize the workloads themselves to generate heat more efficiently and predictably. This is where WhaleFlux provides immense value.”

What is WhaleFlux:

Reiterate: “WhaleFlux is an intelligent GPU resource management platform designed for AI companies running multi-GPU clusters.”

How WhaleFlux Helps Manage Thermal Load:

- Intelligent Scheduling: Distributes computational jobs across the cluster to avoid overloading specific nodes and creating localized hotspots, promoting even heat distribution and better stability.

- Maximized Efficiency: By ensuring GPUs are utilized efficiently rather than sitting idle (which still generates heat), WhaleFlux helps get more compute done per watt of energy consumed, including the energy spent on cooling.

- Hardware Flexibility: “Whether you purchase your own NVIDIA A100s or choose to rent H100 nodes from WhaleFlux for specific projects, our platform provides the management layer to keep them running cool, stable, and at peak performance.” (Note: clarify that rentals require a minimum commitment of one month.)
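WhaleFlux's actual scheduling logic is not described in this guide, so the following is only a generic illustration of the idea behind avoiding hotspots: send each new job to the currently coolest node, and refuse placements that would push a node past a temperature ceiling. The per-job temperature rise is a crude, hypothetical model, and the function and parameter names are invented for illustration.

```python
import heapq


def place_jobs(node_temps_c, jobs, heat_per_job_c=5.0, max_temp_c=85.0):
    """Greedily assign each job to the currently coolest node.

    node_temps_c: dict mapping node name -> current temperature in °C.
    heat_per_job_c: hypothetical temperature rise each job adds to its node.
    Returns a dict mapping job -> node. Raises RuntimeError if even the
    coolest node would exceed max_temp_c.
    """
    heap = [(temp, node) for node, temp in node_temps_c.items()]
    heapq.heapify(heap)  # min-heap: coolest node is always at the top
    placement = {}
    for job in jobs:
        temp, node = heapq.heappop(heap)
        if temp + heat_per_job_c > max_temp_c:
            raise RuntimeError(f"no node can take {job!r} without exceeding {max_temp_c}°C")
        placement[job] = node
        heapq.heappush(heap, (temp + heat_per_job_c, node))
    return placement


if __name__ == "__main__":
    nodes = {"node-a": 70, "node-b": 60, "node-c": 65}
    print(place_jobs(nodes, ["j1", "j2", "j3"]))
```

A production scheduler would also weigh GPU memory, utilization, interconnect topology, and job priority; the point here is only that placement decisions can take temperature into account instead of piling work onto whichever node happens to be first.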

The Outcome:

Reduced risk of thermal throttling, lower cooling costs, improved hardware longevity, and more stable, predictable performance for critical AI training jobs.

Conclusion

Summarize:

A “normal” GPU temperature is context-dependent, but managing it is critical for both gamers and AI professionals.

Reiterate the Scale:

For AI businesses, thermal management is a primary operational challenge that goes far beyond individual cooling solutions.

Final Pitch:

Intelligent resource management through a platform like WhaleFlux is not just about software logistics; it’s a critical tool for physical hardware health, cost reduction, and ensuring the performance of your expensive GPU investments.

Call to Action (CTA):

“Is your AI infrastructure running too hot? Let WhaleFlux help you optimize your cluster for peak performance and efficiency. Learn more about our GPU solutions and intelligent management platform today.”