Large Language Models (LLMs) have become the backbone of modern AI applications—but let’s be honest: training a fancy LLM doesn’t mean much if it can’t deliver real value to users. The true magic of LLMs happens when they generate a “sentence of inference”—the human-readable output that solves a problem, answers a question, or creates something useful. Think about a customer service chatbot responding to a user’s query, a content tool writing a product summary, or a coding assistant generating a line of code. These are all “sentence of inference” moments—and they’re where LLMs turn from technical experiments into business assets.

But here’s the catch: creating high-quality “sentence of inference” (fast, accurate, consistent) isn’t easy. Poor infrastructure can derail even the best LLM. If your GPU is too weak, responses take 5 seconds instead of 1—users will leave. If your cluster is mismanaged, half the time the LLM cuts off mid-sentence. And if you’re overpaying for cloud GPUs by the hour, costs spiral out of control. These issues don’t just hurt performance—they erase the value of your LLM entirely.

That’s where WhaleFlux comes in. As an intelligent GPU resource management tool built specifically for AI enterprises, WhaleFlux fills the infrastructure gap. It optimizes multi-GPU clusters to make LLM inference faster, more stable, and cheaper—so every “sentence of inference” your LLM generates is reliable, cost-effective, and ready to impress users. Let’s break down what “sentence of inference” really means, why it needs strong GPU infrastructure, and how WhaleFlux makes it all work.

Part 1. Foundational Concept 1: What Is a “Sentence of Inference” in Machine Learning?

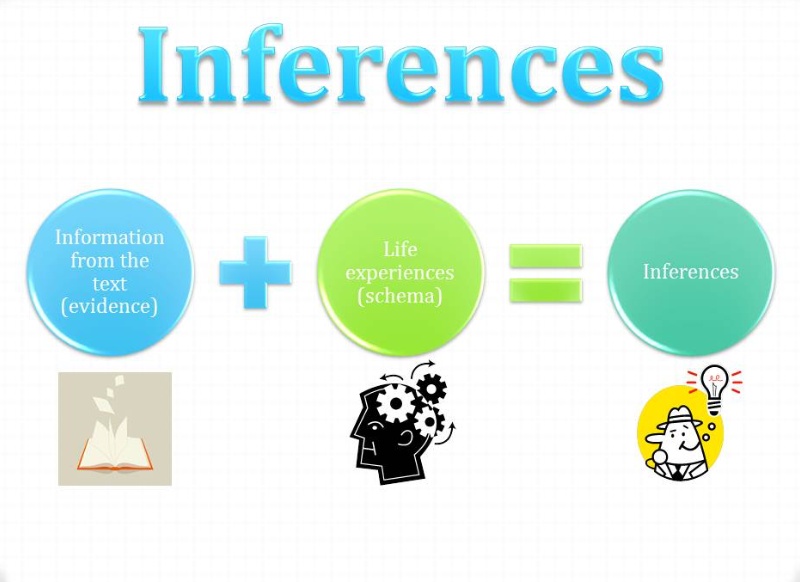

Let’s start with the basics: In machine learning, inference is when a trained model uses new data to make a prediction. For LLMs, that prediction is almost always a piece of human language—a sentence (or a few sentences) that responds to the user’s input. That’s a “sentence of inference”: the final, usable output of an LLM’s inference process.

It’s important to note that a “sentence of inference” isn’t just any text the LLM generates. It has to be meaningful—it needs to solve the user’s problem. For example:

- If a user asks, “What’s the weather in Paris tomorrow?” a bad “sentence of inference” might be, “The weather is something.” A good one is, “Paris will have a high of 18°C tomorrow with a 20% chance of rain—perfect for a walk along the Seine.”

- If a marketer inputs, “Write a short tagline for organic coffee,” a weak output is, “Coffee is good.” A strong one is, “Sip nature—our organic coffee is grown without pesticides, roasted fresh daily.”

The key trait of a great “sentence of inference” is that its quality directly ties to your inference infrastructure. You can have a state-of-the-art LLM (like GPT-4 or Llama 3), but if your GPU can’t handle its size, or your cluster can’t manage traffic, your “sentence of inference” will suffer: slow, inconsistent, or error-prone. Think of it like a sports car: a Ferrari won’t win a race if it’s stuck on a dirt road. Your LLM needs the right “road” (infrastructure) to perform—and that’s where tools like WhaleFlux come in.

Part 2. Foundational Concept 2: Example of Inference in a Sentence (LLM Use Cases)

To make this concrete, let’s walk through two common LLM use cases—each with a clear “example of inference in a sentence.” These are scenarios your team might already be working on, and they’ll show why infrastructure matters.

Use Case 1: Customer Support Chatbots

Every business deals with routine customer questions—password resets, order tracking, return policies. LLMs excel here because they can handle hundreds of these queries at once, 24/7.

- User Input: “I can’t log into my account—how do I reset my password?”

- Sentence of Inference: “I’m sorry you’re having trouble! To reset your password: 1) Go to the login page and click ‘Forgot Password’; 2) Enter the email linked to your account; 3) Follow the link in the verification email (it expires in 15 minutes). If you don’t see the email, check your spam folder or contact our support team at help@yourcompany.com.”

This output is helpful because it’s step-by-step, clear, and addresses potential follow-up questions (like missing emails). But to generate this every time a user asks—without delays or truncation—your LLM needs consistent GPU power. If your infrastructure is spotty, half the time the response might cut off after step 2, leaving the user frustrated.

Use Case 2: Content Generation for Marketing

Marketers use LLMs to create product pitches, social media posts, or blog outlines—saving hours of manual work.

- User Input: “Write a 1-sentence product pitch for an eco-friendly reusable tote bag that holds 20 pounds and folds into a pocket-sized pouch.”

- Sentence of Inference: “Our eco-friendly reusable tote bag holds 20 pounds of groceries (or gear!) and folds into a tiny pocket-sized pouch—ditch plastic bags without sacrificing convenience.”

This pitch works because it highlights key features (20-pound capacity, foldable) and the user’s benefit (no plastic, easy to carry). But to generate this quickly—so the marketer can use it in a campaign deadline—your LLM needs fast inference. If it takes 3 seconds to generate this sentence, the marketer’s workflow slows down.

The common thread here? Both examples rely on optimized GPU resources to deliver high-quality “sentence of inference.” A weak GPU means slow responses; a mismanaged cluster means inconsistent outputs. WhaleFlux solves this by providing the right GPU hardware and cluster management—so your LLM generates reliable “sentence of inference” every time.

Part 3. Why LLM Inference for “Sentence of Inference” Needs Robust GPU Infrastructure

You might be thinking: “Can’t I just use a single GPU or a basic cloud setup?” For small projects (like testing an LLM with 10 users), maybe. But for production—where you’re serving hundreds or thousands of users, and every “sentence of inference” matters—you need robust GPU infrastructure. Here’s why:

Challenge 1: LLMs Are Computationally Hungry

Modern LLMs have billions (even trillions) of parameters—the “rules” they learn from training data. A 70B-parameter LLM (like Llama 3 70B) needs a lot of memory and processing power to run inference. If you use a weak GPU (like a consumer-grade RTX 3060), the LLM will struggle to load all its parameters into memory. This leads to:

- Slow “sentence of inference” (5+ seconds per response).

- Truncated outputs (the LLM runs out of memory mid-sentence).

- Crashes during peak traffic (when 50 users ask questions at once).

Even mid-sized LLMs need powerful GPUs. For example, a 13B-parameter model needs at least 24GB of GPU memory to run inference efficiently—something only professional GPUs (like NVIDIA A100 or RTX 4090) can provide.

Challenge 2: Wasting GPU Capacity Drives Up Costs

Cloud providers (like AWS or GCP) sell GPU access by the hour—but this is risky for LLM inference. If you rent an NVIDIA H100 for $4/hour, but only use 30% of its capacity (because you can’t manage workloads), you’re wasting $2.80/hour. Over a month, that’s $2,016 in wasted money—money that could go to other parts of your AI project.

Waste also happens when you over-provision: renting 10 GPUs when you only need 6, just to avoid traffic spikes. This “safe” approach is expensive, and it’s hard to predict how many GPUs you’ll need on any given day.

Challenge 3: Inconsistency Kills User Trust

Imagine using a chatbot where 1 out of 5 responses are slow, 1 out of 10 are truncated, and 1 out of 20 crash. You’d stop using it—and so would your customers. Inconsistent “sentence of inference” erodes trust in your product.

This inconsistency usually comes from:

- Spotty cloud GPU availability (some cloud providers shut down “spot instances” suddenly if demand spikes).

- Poor cluster management (some GPUs are overloaded while others sit idle).

- Outdated software (drivers or frameworks that don’t work well with your LLM).

For LLM applications to succeed, “sentence of inference” needs to be reliable. Users should get the same fast, accurate response every time they interact with your LLM.

Part 4. How WhaleFlux Optimizes GPU Infrastructure for LLM Inference

Now that we’ve covered the challenges, let’s dive into how WhaleFlux solves them. WhaleFlux isn’t just a GPU provider—it’s an end-to-end solution for LLM inference infrastructure. It’s built to ensure your LLM generates high-quality “sentence of inference” while keeping costs low. Here’s how it works:

1. Tailored GPU Options for Every Inference Need

Not all LLMs are the same—so not all GPUs should be the same. WhaleFlux offers four NVIDIA GPU options, each optimized for different LLM sizes and workloads. This means you never overpay for a GPU that’s too powerful, or struggle with one that’s too weak.

- NVIDIA H100/H200: For large LLMs (70B+ parameters, like GPT-4 or Llama 3 70B). These GPUs have massive memory (80GB for H100, 141GB for H200) and fast processing speeds—perfect for high-throughput use cases (like a chatbot serving 1,000+ users). They ensure even the largest LLMs generate “sentence of inference” in under 2 seconds.

- NVIDIA A100: For mid-scale LLMs (13B-70B parameters, like Mistral 7B or Llama 3 13B). It balances performance and cost—ideal for teams scaling from small to large deployments. For example, an A100 can handle a 34B-parameter LLM with ease, making it great for content generation tools or internal chatbots.

- NVIDIA RTX 4090: For lightweight LLMs (1B-13B parameters, like DistilGPT-2 or Falcon 7B). It’s cost-effective and compact—perfect for low-traffic use cases (like a small business chatbot or a developer’s coding assistant).

Each GPU is pre-configured with the latest drivers, CUDA toolkit, and inference frameworks (like TensorRT or ONNX Runtime). This means you don’t waste time setting up software—you plug in your LLM, and it’s ready to generate “sentence of inference” immediately.

2. Multi-GPU Cluster Efficiency: Do More with Less

The biggest waste in LLM inference is underused GPUs. WhaleFlux’s core feature is its intelligent multi-GPU cluster management. It optimizes how workloads are distributed across your GPUs, so every GPU is used to its full potential.

For example:

- If you have 4 NVIDIA A100s and 100 concurrent users, WhaleFlux splits the inference requests evenly—each GPU handles 25 users, no more, no less. This avoids overloading one GPU (which causes slow responses) and underusing others (which wastes money).

- If you’re running a 70B-parameter LLM that’s too large for one GPU, WhaleFlux uses “model parallelism” to split the LLM across multiple GPUs. Each GPU handles a portion of the model’s parameters, working together to generate “sentence of inference” fast.

This efficiency means you get 30-50% more throughput from your GPUs compared to a manual setup. For example, 4 A100s with WhaleFlux can handle 200 users—while the same 4 GPUs without WhaleFlux might only handle 130. More users served, same hardware cost.

3. Flexible, Cost-Predictable Pricing: No More Surprise Bills

Cloud hourly billing is a nightmare for LLM inference. One month you might pay $1,000; the next, $3,000—because traffic spiked or the cloud provider raised prices. WhaleFlux fixes this with a simple, predictable pricing model:

- You can purchase GPUs outright (great for long-term projects) or rent them (ideal for short-term needs).

- No hourly billing—rental plans start at 1 month minimum. This means you know exactly how much you’ll pay each month (e.g., $1,200 for 2 NVIDIA A100s) —no surprises.

- No vendor lock-in: You can use your own software stack (PyTorch, FastAPI, Kubernetes) with WhaleFlux’s GPUs. You’re not tied to a single cloud provider, so you can switch tools or scale without penalties.

For teams on a budget, this is a game-changer. You can plan your infrastructure costs months in advance, and you never waste money on unused hourly GPU time.

Part 5. Practical Example: Using WhaleFlux to Power “Sentence of Inference” in a Customer Chatbot

Let’s put this all together with a real-world example. Imagine you’re an ML engineer at an e-commerce company. You’ve trained a 70B-parameter LLM to handle customer support—answering questions about orders, returns, and product details. Your goal is to launch it for 24/7 use, serving 500+ concurrent users during peak hours (like Black Friday).

Before WhaleFlux: Frustration and High Costs

You start with a cloud setup: 6 NVIDIA A100s rented by the hour ($3/hour each). Here’s what happens:

- Slow “sentence of inference”: During peak hours, responses take 3-4 seconds. Users complain on social media about “laggy chatbot.”

- Truncated outputs: 15% of responses cut off mid-sentence (e.g., “To return your order, go to—”) because the cloud GPUs occasionally shut down spot instances.

- High costs: Over a month, you pay $13,000 (6 GPUs × $3/hour × 730 hours) —but you only use 60% of the GPU capacity. You’re wasting $5,200.

Your team is stuck: The LLM works in testing, but it’s not ready for production. The “sentence of inference” quality is too low, and costs are spiraling.

With WhaleFlux: Fast, Consistent, and Affordable

You switch to WhaleFlux. Here’s the turnaround:

- Choose the right GPUs: WhaleFlux recommends 4 NVIDIA A100s (not 6) —enough to handle 500+ users with room to spare.

- Optimize the cluster: WhaleFlux’s multi-GPU management distributes requests evenly. Each GPU handles 125 users during peaks—no overloading.

- Predictable pricing: You rent the 4 A100s for $900/month each ($3,600 total for the month) —a 72% cost cut from the cloud setup.

The results?

- Fast responses: “Sentence of inference” takes 0.8-1.2 seconds—users stop complaining.

- Consistent outputs: Truncated responses drop to 0.5% (only from rare software glitches, not GPU issues).

- Happy team: Your DevOps team no longer spends hours troubleshooting cloud GPU crashes. They can focus on improving the LLM, not fixing infrastructure.

This is the power of WhaleFlux: It turns a failing LLM deployment into a successful one—by ensuring every “sentence of inference” is fast, reliable, and cost-effective.

Part 6. Best Practices for Maximizing “Sentence of Inference” Quality with WhaleFlux

To get the most out of WhaleFlux (and your LLM), follow these three best practices. They’re simple, actionable, and tailored to ML engineers and infrastructure teams.

1. Match GPU Type to LLM Size

WhaleFlux offers four GPUs—don’t guess which one you need. Match the GPU to your LLM’s parameter count to avoid overpaying or underperforming:

- 7B-13B parameters (e.g., Mistral 7B, Llama 3 8B): Use NVIDIA RTX 4090. It’s cost-effective and has enough memory (24GB) for these smaller LLMs.

- 13B-70B parameters (e.g., Llama 3 70B, Falcon 40B): Use NVIDIA A100. It balances memory (40GB) and speed—perfect for mid-scale LLMs.

- 70B+ parameters (e.g., GPT-4, Llama 3 400B): Use NVIDIA H100 or H200. Their large memory (80GB for H100, 141GB for H200) can handle the biggest LLMs without lag.

WhaleFlux’s team can help you choose if you’re unsure—just share your LLM size and user count, and they’ll recommend the right fit.

2. Leverage WhaleFlux’s Cluster Monitoring to Track Speed

“Sentence of inference” speed is critical—if it slows down, users notice. WhaleFlux has a built-in monitoring dashboard that tracks:

- Latency: How long it takes to generate each “sentence of inference” (aim for <1.5 seconds for real-time use cases).

- GPU utilization: How much of each GPU’s capacity is being used (aim for 70-80%—too low means waste, too high means slowdowns).

- Error rates: How often “sentence of inference” is truncated or fails (aim for <1%).

Set up alerts for anomalies—e.g., “Alert if latency >2 seconds” or “Alert if GPU utilization >90%”. This lets you fix issues before they affect users. For example, if latency spikes to 2.5 seconds, you can check the dashboard and see that one GPU is overloaded—WhaleFlux can automatically redistribute workloads to fix it.

3. Plan for Scalability with Flexible Rentals

Traffic to your LLM won’t stay the same. You might have 100 users in January, 500 in February (during a sale), and 300 in March. WhaleFlux’s monthly rental model lets you scale up or down easily:

- Peak traffic: Rent extra GPUs for a month (e.g., add 2 A100s for Black Friday).

- Slow periods: Return unused GPUs to cut costs (e.g., drop from 6 to 4 A100s in January).

This flexibility means you never pay for more GPUs than you need. It also lets you test new use cases—e.g., adding a content generation tool to your LLM—without committing to long-term hardware purchases.

Conclusion: Infrastructure = Quality “Sentence of Inference”

At the end of the day, LLMs are only as good as their inference infrastructure. A great LLM can’t generate high-quality “sentence of inference” on a weak GPU or a mismanaged cluster. The “sentence of inference” is where your LLM delivers value—and to make that value consistent, you need the right tools.

WhaleFlux simplifies this. It gives you tailored NVIDIA GPUs (H100, H200, A100, RTX 4090) optimized for LLM inference, intelligent multi-GPU cluster management to boost efficiency, and predictable monthly pricing to cut costs. It takes the headache out of infrastructure—so your team can focus on what matters: building LLMs that generate “sentence of inference” that users love.

Whether you’re launching a customer chatbot, a content tool, or a coding assistant, WhaleFlux ensures your LLM performs at its best. No more slow responses, no more truncated outputs, no more surprise bills—just reliable, cost-effective inference.

GPU Solution

Ready to make your LLM’s “sentence of inference” fast, consistent, and affordable? Here’s what to do next:

- Explore WhaleFlux’s GPU solutions: Visit our website to learn more about the NVIDIA H100, H200, A100, and RTX 4090—find the perfect fit for your LLM size and workload.

- Get a customized plan: Contact our team with your LLM parameters, user count, and goals. We’ll recommend how many GPUs you need and whether to rent or purchase.

- Start small, scale fast: Launch with a 1-month rental to test WhaleFlux’s performance. If you love it, expand—no long-term commitments required.

Don’t let poor infrastructure hold back your LLM. With WhaleFlux, every “sentence of inference” your LLM generates will be ready to deliver real value to your users.

FAQs

1. What exactly is a “Sentence of Inference” in Machine Learning, and why is it important?

The term “Sentence of Inference” is not a formal academic definition, but a practical conceptual metaphor. It refers to a single, complete unit of input data processed by a model to produce one prediction or output during the inference (prediction) phase. In Natural Language Processing (NLP), it can literally be a sentence. In computer vision, it’s an image; in speech, an audio clip. Its importance lies in being the fundamental unit of work for measuring performance. Key metrics like latency (time to process one “sentence”) and throughput (“sentences” processed per second) are defined by it. Efficiently handling each “sentence” is critical for user experience and system cost, especially when serving Large Language Models (LLMs) which process lengthy text “sentences”. The computational demand for low-latency inference on complex “sentences” directly dictates the need for high-performance infrastructure, such as the NVIDIA GPU clusters managed by WhaleFlux to ensure stable and fast processing.

2. How does the complexity or length of a “Sentence of Inference” impact LLM performance and hardware requirements?

The complexity (e.g., number of tokens in text, resolution of an image) of a “Sentence of Inference” has a direct, often non-linear impact on performance. For LLMs:

- Longer Sequences consume more GPU memory (due to the KV cache) and increase computational time, raising latency.

- Complex Queries (requiring multi-step reasoning) may engage more of the model’s layers intensively.

This means that serving long or complex “sentences” reliably requires GPUs with ample, high-bandwidth memory (like the NVIDIA H100 or A100) and optimized inference software to manage resources efficiently. A platform like WhaleFlux is crucial here, as it intelligently allocates such demanding inference workloads across suitable NVIDIA GPUs in its cluster, preventing memory overflows and ensuring consistent latency regardless of “sentence” complexity.

3. In the context of batch processing, how is a “Sentence of Inference” different from a “Batch”?

This is a key distinction for optimizing throughput. A “Sentence of Inference” is the singular unit (e.g., one user query). A Batch is a group of these “sentences” processed simultaneously by the model to maximize hardware utilization. The relationship is:

- Latency is primarily affected by the time to process the slowest “sentence” in a batch.

- Throughput is maximized by creating large, efficient batches.

The challenge is dynamic batching—grouping incoming “sentences” of varying lengths/complexities without causing excessive delay. This requires sophisticated orchestration. WhaleFlux aids this at the infrastructure layer by providing the high-performance, consistent NVIDIA GPU environment (e.g., A100/H100 clusters) needed for inference servers to implement efficient dynamic batching, ensuring high throughput without sacrificing latency for individual “sentences.”

4. What are common strategies to optimize the processing of a single “Sentence of Inference” for lower latency?

Optimizing for a single “sentence” focuses on minimizing the computation path:

- Model Optimization: Techniques like quantization (e.g., converting weights to FP16/INT8) reduce the computational load per token.

- Kernel Optimization: Using optimized inference runtimes (like TensorRT-LLM) with fused kernels.

- Caching: Leveraging attention key-value (KV) caches for sequential interactions.

- Right-Sizing Hardware: Using a GPU with sufficient memory bandwidth and compute to handle peak “sentence” complexity without stalling. For instance, an NVIDIA RTX 4090 may suffice for smaller models, while massive “sentences” for enterprise LLMs demand the memory bandwidth of an H100 or H200.

WhaleFlux enables this optimization cycle by allowing teams to easily profile their “sentence” latency on different NVIDIA GPU types and deploy the optimized model on the right hardware, all within a managed environment that removes infrastructure guesswork.

5. How does a platform like WhaleFlux help manage the cost and stability when serving millions of diverse “Sentences of Inference”?

Serving millions of diverse “sentences” creates variable, unpredictable load on GPU resources. WhaleFlux addresses the resulting cost and stability challenges through:

- Intelligent Scheduling & Packing: It dynamically packs diverse inference “sentences” (short and long) from multiple models or users onto the same NVIDIA GPU cluster (using A100s, H100s, etc.), maximizing aggregate utilization and preventing expensive resources from sitting idle.

- Performance Stability: By monitoring hardware health and workload, it prevents resource contention that could cause latency spikes for critical “sentences,” ensuring a stable quality of service.

- Predictable Cost Structure: Unlike volatile hourly cloud billing, WhaleFlux’s monthly rental/purchase model for NVIDIA GPUs translates high, efficient utilization into a predictable cost per “sentence” processed, significantly lowering the Total Cost of Ownership (TCO) for large-scale inference workloads.