In the fields of artificial intelligence, high-performance computing, and graphics processing, the GPU (Graphics Processing Unit) has become an indispensable core hardware component. Evolving from initial graphics rendering to today’s general-purpose parallel computing, GPUs excel in tasks like deep learning, scientific simulation, and real-time rendering thanks to their massive parallel processing power. This article provides an in-depth analysis of the NVIDIA RTX 4090’s performance characteristics and ideal use cases, discusses key factors enterprises should consider when selecting GPUs, and introduces how intelligent tools can optimize GPU resource management.

What is a GPU and Why is it So Important?

A GPU (Graphics Processing Unit) is a specialized microprocessor designed for handling graphics and parallel computations. Compared to a CPU (Central Processing Unit), a GPU contains thousands of smaller cores capable of executing a vast number of simple tasks simultaneously, making it ideal for highly parallel computational workloads. Initially used primarily for gaming and graphics rendering, the role of GPUs has expanded significantly with the development of General-Purpose computing on GPUs (GPGPU), playing an increasingly critical role in AI training, big data analytics, and scientific computing.

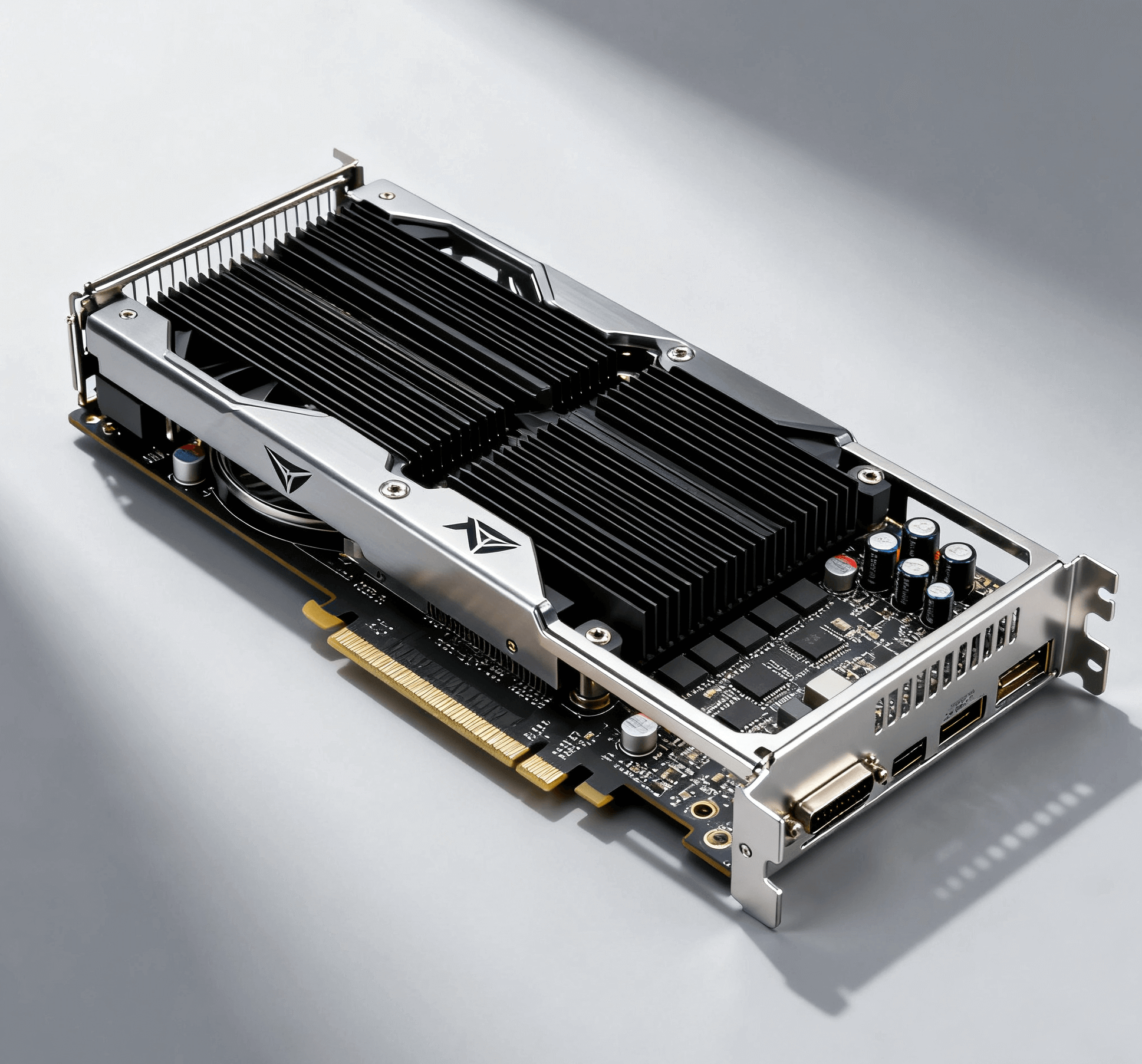

NVIDIA RTX 4090: A Hardware Deep Dive of a Performance Powerhouse

The NVIDIA GeForce RTX 4090, the flagship product based on the Ada Lovelace architecture, was released in September 2022 and continues to dominantly lead the high-end consumer market. Its hardware specifications are impressive, as detailed in the table below:

| Parameter Category | Specification Details | Significance / What It Means |

|---|---|---|

| Architecture | Ada Lovelace | New generation architecture, improving energy efficiency and computational density. |

| CUDA Cores | 16,384 | Provides powerful parallel compute capability, suitable for AI training and scientific simulation. |

| Tensor Cores | 512 (4th Gen) | Optimized for AI inference & training; supports FP8 precision, enhancing deep learning efficiency. |

| RT Cores | 128 (3rd Gen) | Enhances ray tracing performance for real-time rendering and virtual reality. |

| VRAM | 24GB GDDR6X | Supports large-scale data processing and complex model training. |

| Memory Bandwidth | 1008 GB/s | High bandwidth ensures efficient data throughput, reducing training bottlenecks. |

| FP32 Performance (TFLOPS) | ~83 TFLOPS | Powerful single-precision floating-point performance, suitable for scientific computing. |

| FP16 Performance (TFLOPS) | ~330 TFLOPS (with Tensor Core acceleration) | Excellent half-precision performance, accelerates AI model training. |

| Process Node | TSMC 4nm | Improved energy efficiency; Typical Board Power: 450W. |

| NVLink Support | No (Multi-GPU communication relies solely on PCIe bus, offering lower bandwidth and efficiency compared to NVLink) | Multi-GPU collaboration is limited; best suited for single-card high-performance scenarios. |

In AI tasks, the RTX 4090 performs exceptionally well. For instance, its high memory bandwidth and Tensor Cores can effectively accelerate token generation speed in large language model inference, like with Llama-3. Similarly, for scientific research, such as brain-computer interface decoding or geological hazard identification, a single RTX 4090 configuration is often sufficient for medium-scale data training and inference.

Ideal Use Cases: Why Would an Enterprise Need the RTX 4090?

The RTX 4090 is not just a gaming graphics card; it’s a powerful tool for enterprise applications. Its primary use cases include:

- AI & Machine Learning:

For small to medium-sized AI teams, the RTX 4090’s 24GB of VRAM is adequate for training and fine-tuning models under ~10B parameters (e.g., BERT or smaller LLaMA variants). For inference tasks, its 4th Gen Tensor Core support for FP8 precision, within compatible software frameworks, can help increase computational throughput and reduce memory footprint during inference. Compared to dedicated data center GPUs like the A100, the RTX 4090 offers compelling single-card performance and cost-effectiveness for SMEs and research teams requiring high performance per card with budget constraints. - Content Creation & Rendering:

In 3D modeling, video editing, and real-time rendering, the RTX 4090’s CUDA cores and RT cores accelerate workflows in tools like Blender and Unreal Engine, supporting 8K resolution output. - Scientific Research & Simulation:

In fields like bioinformatics and fluid dynamics, the RTX 4090’s parallel compute capability is valuable for simulations and data analysis, such as genetic sequence processing or climate modeling. It is important to note that the RTX 4090’s double-precision floating-point (FP64) performance is limited, making it less suitable for traditional HPC tasks with stringent FP64 requirements. - Edge Computing & Prototyping:

For AI applications requiring localized deployment (e.g., autonomous vehicle testing or medical image analysis), the RTX 4090 provides desktop-level high-performance compute, avoiding reliance on cloud resources.

However, the RTX 4090 is not a universal solution. For ultra-large-scale model training (like trillion-parameter LLMs), its VRAM capacity and PCIe-based multi-GPU communication can become bottlenecks, necessitating multi-card clusters or professional data center GPUs like the H100.

Key Considerations for Enterprises Choosing a GPU

When selecting GPUs, enterprises need to comprehensively evaluate the following factors:

- Performance vs. Cost Balance: The RTX 4090 offers excellent single-card performance, but performance-per-dollar might be different compared to multi-card mid-range configurations. Enterprises should choose hardware based on workload type (training vs. inference) and budget.

- VRAM Capacity & Bandwidth: VRAM size (e.g., 24GB) determines the maximum model size that can be handled, while bandwidth (e.g., 1008 GB/s) impacts data throughput efficiency. High bandwidth is crucial for training with large batch sizes.

- Software Ecosystem & Compatibility: NVIDIA’s CUDA and TensorRT ecosystems provide a rich toolchain for enterprises, but attention must be paid to framework support (like PyTorch, TensorFlow) and driver updates.

- Power Consumption & Thermal Management: The RTX 4090’s 450W TDP requires efficient cooling solutions, which can increase operational costs in data center deployments.

- Scalability & Multi-GPU Cooperation: For tasks requiring multiple GPUs (e.g., distributed training), NVLink compatibility and cluster management tools need consideration. The lack of NVLink support on the RTX 4090 is a key limitation to evaluate for multi-card applications.

- Supply Chain & Long-Term Support: Global GPU supply chain fluctuations can impact procurement. Enterprises should prioritize stable suppliers offering solutions with long-term maintenance.

Optimizing GPU Resource Management: WhaleFlux’s Intelligent Solution

For AI companies, purchasing hardware outright isn’t the only option. Flexible resource management tools can significantly improve utilization efficiency and reduce costs. Beyond direct hardware procurement, leveraging resource management technologies like GPU virtualization is key for enterprises to enhance resource utilization. WhaleFlux is an intelligent GPU resource management platform designed specifically for AI businesses. It helps reduce cloud computing costs and improves the deployment speed and stability of large language models by optimizing the utilization efficiency of multi-GPU clusters.

WhaleFlux supports various NVIDIA GPUs, including the H100, H200, A100, and RTX 4090. Users can purchase or rent resources based on need (minimum rental period one month). Unlike hourly-billed cloud services, WhaleFlux’s long-term rental model is better suited for medium-sized enterprises and research institutions, providing more stable resource allocation and cost control. For example:

- For intermittent training tasks, enterprises can rent an RTX 4090 cluster for model fine-tuning, avoiding idle resource waste.

- For inference services, WhaleFlux’s dynamic resource allocation can automatically scale instance sizes, ensuring stability under high concurrency.

Through centralized management tools, enterprises can monitor GPU utilization, temperature, and workloads, enabling intelligent scheduling and energy consumption optimization. This not only reduces hardware investment risk but also accelerates the deployment cycle for AI projects.

Conclusion

The NVIDIA RTX 4090, with its exceptional parallel computing capability and broad applicability, represents an ideal choice for enterprise AI and graphics processing. However, hardware is just the foundation; efficient resource management is key to unlocking its full potential. Whether through direct procurement or rental via platforms like WhaleFlux, enterprises should be guided by actual needs, balancing performance, cost, and scalability to maintain a competitive edge.

Looking ahead, as new technologies like the Blackwell architecture become widespread, the performance boundaries of GPUs will expand further. But regardless of changes, the core principle for enterprises remains the same: using the right tools for the right job.