Introduction: The New Workhorse for AI Inference

The artificial intelligence landscape is undergoing a significant shift as organizations move from experimental models to production-scale deployment. While much attention focuses on the high-end GPUs powering cutting-edge research, a growing need has emerged for specialized inference engines that balance performance, efficiency, and cost-effectiveness. Enter NVIDIA’s L4 and L40 GPUs – purpose-built solutions designed specifically for modern AI workloads beyond traditional gaming or rendering applications.

These GPUs represent a new category of accelerators optimized for the practical realities of production AI environments where efficiency, scalability, and total cost of ownership matter just as much as raw performance. They fill a crucial gap between consumer-grade cards and ultra-expensive data center behemoths, offering enterprise-grade features at accessible price points.

Whether you’re evaluating L4 vs T4 configurations or planning L40 cluster deployments, understanding these GPUs’ capabilities is essential for making informed infrastructure decisions. For teams seeking optimized access to these processors alongside higher-end options like H100, platforms like WhaleFlux provide integrated solutions that simplify deployment and maximize utilization across diverse workload requirements.

Part 1. NVIDIA L4 GPU Deep Dive: Specs and Capabilities

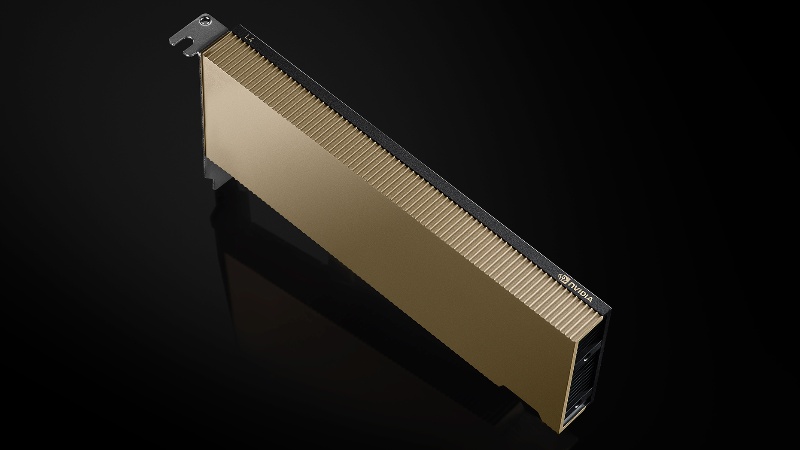

The NVIDIA L4 GPU represents a significant leap forward in efficiency-oriented acceleration. Built on the Ada Lovelace architecture, this compact, power-efficient processor delivers impressive capabilities in a single-slot, low-profile form factor that draws just 72W, making it suitable for dense server configurations and edge deployment scenarios.

At the heart of the L4’s capability is its 24GB of GDDR6 memory with 300 GB/s of bandwidth, which provides ample capacity for most inference workloads and moderate-sized models. This memory configuration lets the card handle multiple inference streams simultaneously while keeping response latency low. The card features 7,424 CUDA cores and 58 third-generation RT cores, delivering up to 30.3 TFLOPS of FP32 performance for traditional computing tasks.

For AI workloads, the L4 includes 232 fourth-generation Tensor Cores that provide up to 242 TFLOPS of FP16/BF16 tensor throughput and up to 485 TFLOPS at FP8 (NVIDIA quotes these peak figures with structured sparsity). Support for FP8, FP16, and BF16 precision formats makes it particularly effective for transformer-based models and other modern AI architectures that benefit from mixed-precision computation.

The L4 is designed primarily for cloud-native AI inference, video processing, and enterprise AI applications. Its single-slot, low-power design enables high-density deployments in standard servers, while its comprehensive media engine supports up to 8K video encode and decode, making it well suited to video analytics and content processing workloads that combine AI with media manipulation.

Part 2. NVIDIA L40S GPU: The Enhanced Successor

The NVIDIA L40S GPU, an enhanced version of the L40, takes the same Ada Lovelace architecture used in the L4 and scales it up to bridge the gap between efficient inference and more demanding computational tasks. It delivers substantially higher performance than the L4 across every major metric, at the cost of a much larger 350W power envelope, making it suitable for a broader range of AI workloads.

The most visible enhancement is in memory capacity and bandwidth. The L40S features 48GB of GDDR6 memory with 864 GB/s of bandwidth, nearly three times the L4’s 300 GB/s. This expanded capacity enables handling of larger models and more complex multi-modal applications that require substantial memory resources. Compute throughput rises sharply as well, to 91.6 TFLOPS of FP32 performance and up to roughly 1.47 petaflops of FP8 tensor performance (NVIDIA’s figure with structured sparsity).

Beyond raw compute, the L40S strengthens ray tracing with 142 third-generation RT cores delivering 212 TFLOPS of RT Core performance. This makes it particularly suitable for graphics-heavy AI applications such as neural rendering, simulation, and virtual environment training where traditional computing and AI intersect.

The L40S is positioned as a universal data center GPU capable of handling AI training and inference, graphics workloads, and high-performance computing tasks. Its balanced performance profile makes it ideal for organizations seeking a single GPU architecture that can serve multiple use cases without requiring specialized hardware for each workload type.

Part 3. L4 vs T4 GPU: A Practical Comparison

The transition from previous-generation GPUs to current offerings requires careful evaluation of performance, efficiency, and cost considerations. The L4 vs T4 GPU comparison illustrates how much has changed across two architecture generations, from Turing to Ada Lovelace.

The NVIDIA T4, based on the Turing architecture, has been a workhorse for inference workloads since its introduction. With 16GB of memory and 320 Tensor Cores, it delivers 8.1 TFLOPS of FP32 performance and 65 TFLOPS of FP16 tensor performance. While the T4 was capable for its time, the L4 vs T4 comparison reveals dramatic improvements in the newer architecture.

Memory capacity increases from 16GB to 24GB, while memory bandwidth actually dips slightly, from 320 GB/s to 300 GB/s (both cards use GDDR6). The bigger change is in tensor performance, which rises nearly 4x on paper, from 65 TFLOPS to 242 TFLOPS, although NVIDIA’s L4 figure assumes structured sparsity while the T4 figure does not. Power draw rises only slightly, from 70W to 72W, despite these gains.

Measured as peak tensor throughput per watt of board power, the L4 delivers approximately 3.36 TFLOPS per watt (242/72) compared to roughly 0.93 TFLOPS per watt (65/70) for the T4, about a 3.6x improvement on paper. This efficiency can translate into reduced operating costs and improved sustainability metrics for large-scale deployments.
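The efficiency arithmetic is easy to reproduce. This is a minimal sketch that uses only the headline spec-sheet numbers quoted in this section (peak tensor TFLOPS and board power); it measures paper efficiency, not sustained throughput on a real workload, and it inherits the sparse-versus-dense caveat of the underlying figures.

```python
# Headline spec-sheet figures quoted in this article (peak, not measured).
# Caveat: NVIDIA's L4 tensor figure assumes structured sparsity; the T4's is dense.
SPECS = {
    "T4": {"tensor_tflops": 65.0, "board_watts": 70.0},
    "L4": {"tensor_tflops": 242.0, "board_watts": 72.0},
}


def tflops_per_watt(tensor_tflops: float, board_watts: float) -> float:
    """Peak tensor throughput divided by board power."""
    return tensor_tflops / board_watts


def efficiency_table(specs: dict[str, dict[str, float]]) -> dict[str, float]:
    """Map each GPU name to its paper efficiency in TFLOPS per watt."""
    return {name: tflops_per_watt(**spec) for name, spec in specs.items()}


if __name__ == "__main__":
    eff = efficiency_table(SPECS)
    for name, value in eff.items():
        print(f"{name}: {value:.2f} TFLOPS/W")
    print(f"L4 vs T4: {eff['L4'] / eff['T4']:.1f}x")
```

Swapping in dense FP16 figures, or throughput you measured yourself, gives a fairer like-for-like ratio than the headline numbers.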

For various AI workloads, the L4 demonstrates superior cost-effectiveness, particularly for transformer inference, computer vision tasks, and recommendation systems. The T4 remains viable for less demanding applications but struggles with newer, larger models that benefit from the L4’s enhanced tensor capabilities and memory capacity.

Part 4. Real-World Applications for L-Series GPUs

The practical value of L-series GPUs becomes apparent when examining their real-world applications across various AI domains:

AI Inference represents the primary use case, with L4 and L40 GPUs excelling at handling multiple LLM inference streams simultaneously. Their efficient architecture lets a single card serve many concurrent requests at low latency, though the practical ceiling depends on model size, precision, batch size, and context length. This makes them a strong fit for production environments where response time directly impacts user experience. The memory capacity also allows several smaller models to stay memory-resident at once, enabling rapid switching between different AI services without reloading weights.
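How much concurrency a card supports can be estimated from the model weights plus the key/value (KV) cache, which grows with every token in flight. The sketch below assumes a hypothetical 7B-parameter decoder-only model in FP16 with 32 layers, 32 KV heads, and a head dimension of 128, plus a fixed 2 GiB reserve for the runtime; treating the advertised 24GB and 48GB as GiB is a simplification, and real serving stacks add overheads this ignores.

```python
from dataclasses import dataclass

GIB = 1024 ** 3


@dataclass
class ModelConfig:
    """Hypothetical decoder-only transformer shape (7B-class, FP16)."""
    params_billion: float
    num_layers: int
    num_kv_heads: int
    head_dim: int
    bytes_per_value: int = 2  # FP16/BF16


def weight_bytes(cfg: ModelConfig) -> float:
    """Memory for the model weights alone."""
    return cfg.params_billion * 1e9 * cfg.bytes_per_value


def kv_bytes_per_token(cfg: ModelConfig) -> int:
    """KV cache per token: one key and one value vector per layer and KV head."""
    return 2 * cfg.num_layers * cfg.num_kv_heads * cfg.head_dim * cfg.bytes_per_value


def max_cached_tokens(cfg: ModelConfig, gpu_mem_gib: float, reserve_gib: float = 2.0) -> int:
    """Tokens of KV cache that fit after the weights and a fixed runtime reserve."""
    free = gpu_mem_gib * GIB - reserve_gib * GIB - weight_bytes(cfg)
    return max(0, int(free // kv_bytes_per_token(cfg)))


if __name__ == "__main__":
    cfg = ModelConfig(params_billion=7.0, num_layers=32, num_kv_heads=32, head_dim=128)
    for name, mem in [("L4", 24.0), ("L40S", 48.0)]:
        tokens = max_cached_tokens(cfg, mem)
        print(f"{name}: ~{tokens} cached tokens, ~{tokens // 4096} sequences at 4k context")
```

Dividing the token budget by the context length gives a rough ceiling on concurrent full-length sequences. Under these assumptions the 24GB card holds only about four 4,096-token sequences, which is why weight quantization or grouped-query attention (fewer KV heads) matters so much on the L4.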

Edge Deployment benefits significantly from the L4’s power-efficient operation. Its 72W thermal design power enables deployment in environments with limited cooling and power infrastructure, while still delivering substantial computational capabilities. This makes it suitable for retail analytics, industrial IoT, and smart city applications where AI processing needs to occur close to the data source.

Multi-Modal AI applications leverage the L-series GPUs’ balanced performance profile to handle vision-language models that process both image and text data. The substantial memory capacity proves particularly valuable for these models, which often require storing large vision encoders alongside language model weights.

Video Analytics represents another strength, combining the GPUs’ AI capabilities with advanced media processing engines. The ability to simultaneously decode multiple video streams while running AI analysis enables real-time processing of surveillance footage, content moderation, and broadcast automation without requiring separate hardware for video processing and AI inference.

Part 5. Implementation Challenges with L4/L40 GPUs

Despite their impressive capabilities, implementing L-series GPUs effectively presents several challenges that organizations must address:

Configuration Complexity involves optimizing the hardware and software stack for specific AI workloads. Unlike consumer GPUs that may work adequately with default settings, maximizing L4/L40 performance requires careful tuning of power limits, memory allocation, and cooling solutions. Different AI frameworks and models may require specific configuration optimizations to achieve peak performance, necessitating extensive testing and validation.

Cluster Management becomes increasingly complex when scaling across multiple nodes. Ensuring efficient workload distribution, maintaining consistent performance across all GPUs, and handling failover scenarios require sophisticated orchestration systems. Without proper management tools, organizations risk underutilizing their investment or experiencing unpredictable performance variations.

Cost Optimization requires balancing performance requirements with budgetary constraints. While L-series GPUs offer favorable price-performance ratios compared to higher-end options, maximizing return on investment still requires careful capacity planning and workload right-sizing. Overprovisioning leads to wasted resources, while underprovisioning can impact service quality and slow down development cycles.
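Right-sizing ultimately comes down to simple capacity arithmetic. This sketch assumes you have already measured sustained requests per second for one GPU running your model; the peak load, average load, per-GPU throughput, and 30% headroom in the example are hypothetical placeholders, not L4 or L40 benchmarks.

```python
import math


def gpus_needed(peak_rps: float, rps_per_gpu: float, headroom: float = 0.3) -> int:
    """Smallest GPU count that covers peak load plus a safety margin.

    peak_rps:    expected peak requests per second
    rps_per_gpu: sustained throughput you measured on one GPU for this model
    headroom:    extra fraction of capacity reserved for bursts and failover
    """
    if peak_rps < 0 or rps_per_gpu <= 0 or headroom < 0:
        raise ValueError("inputs must be non-negative and rps_per_gpu positive")
    return math.ceil(peak_rps * (1 + headroom) / rps_per_gpu)


def average_utilization(avg_rps: float, gpus: int, rps_per_gpu: float) -> float:
    """Fraction of provisioned capacity used at average (not peak) load."""
    return avg_rps / (gpus * rps_per_gpu)


if __name__ == "__main__":
    # Hypothetical workload: 450 req/s at peak, 200 req/s on average,
    # 40 req/s sustained per GPU in your own benchmark.
    n = gpus_needed(peak_rps=450, rps_per_gpu=40)
    print(f"GPUs needed: {n}")
    print(f"Average utilization: {average_utilization(200, n, 40):.0%}")
```

A low average utilization at the sized count is the signal to revisit headroom, batch more aggressively, or move bursty traffic to shared capacity.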

Integration Overhead involves ensuring compatibility with existing infrastructure and workflows. Many organizations have established systems for model development, deployment, and monitoring that may require modification to support new GPU architectures. The transition from previous-generation hardware often reveals unexpected compatibility issues with drivers, frameworks, or management tools.

Part 6. How WhaleFlux Simplifies L-Series GPU Deployment

While L4 and L40 GPUs offer excellent price-performance characteristics, maximizing their value requires expert deployment and management – this is where WhaleFlux delivers comprehensive solutions that address implementation challenges.

Optimized Configuration begins with pre-configured L4/L40 clusters tuned specifically for AI workloads. WhaleFlux systems undergo extensive testing and validation to ensure optimal performance across various model types and frameworks. This pre-configuration eliminates the guesswork from hardware setup and ensures customers receive systems that deliver maximum performance from day one.

Intelligent Orchestration enables automatic workload distribution across mixed GPU fleets that may include L4, L40, H100, and other processors. WhaleFlux’s management platform analyzes model requirements and current system load to place workloads on the most appropriate hardware, ensuring efficient resource utilization while meeting performance requirements. This intelligent placement is particularly valuable for organizations running diverse AI workloads with varying computational demands.
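The source does not describe how WhaleFlux actually decides placement. Purely to illustrate the idea, here is a generic best-fit heuristic: send each job to the cheapest GPU class with enough free memory, breaking ties by least leftover memory. The node names, free-memory figures, and cost ranking are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GpuNode:
    name: str
    gpu_model: str      # e.g. "L4", "L40S", "H100"
    free_mem_gb: float  # memory currently unallocated on this GPU
    cost_rank: int      # hypothetical ordering: lower means cheaper to run


def place(required_mem_gb: float, nodes: list[GpuNode]) -> Optional[GpuNode]:
    """Best-fit placement: cheapest GPU class that fits, then least leftover memory.

    Reserves the memory on the chosen node and returns it, or None if nothing fits.
    """
    candidates = [n for n in nodes if n.free_mem_gb >= required_mem_gb]
    if not candidates:
        return None
    best = min(candidates, key=lambda n: (n.cost_rank, n.free_mem_gb - required_mem_gb))
    best.free_mem_gb -= required_mem_gb
    return best


if __name__ == "__main__":
    fleet = [
        GpuNode("l4-0", "L4", 22.0, cost_rank=0),
        GpuNode("l4-1", "L4", 22.0, cost_rank=0),
        GpuNode("l40s-0", "L40S", 46.0, cost_rank=1),
        GpuNode("h100-0", "H100", 78.0, cost_rank=2),
    ]
    for job_mem in [14.0, 30.0, 70.0, 14.0, 100.0]:
        node = place(job_mem, fleet)
        print(job_mem, "->", node.name if node else "no capacity")
```

Ranking by cost first keeps small inference jobs on L4s and reserves the larger cards for jobs that genuinely need their memory; a production scheduler would also weigh compute load, latency targets, and interconnect.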

Cost-Effective Access through WhaleFlux’s monthly rental options provides flexibility without hourly billing complexity. The minimum one-month commitment ensures stability for production workloads while avoiding the cost unpredictability of hourly cloud pricing. This model is particularly advantageous for organizations with steady inference workloads that benefit from dedicated hardware but don’t warrant outright purchase.
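Whether a monthly commitment beats hourly billing depends on how many hours per month the GPU is actually busy. This sketch computes the break-even point; both prices are hypothetical placeholders, not WhaleFlux or cloud list prices, and a month is approximated as 730 hours.

```python
HOURS_PER_MONTH = 730  # average month: 24 * 365 / 12


def breakeven_hours(monthly_price: float, hourly_price: float) -> float:
    """Busy hours per month at which hourly billing costs the same as a monthly rental."""
    return monthly_price / hourly_price


def cheaper_option(monthly_price: float, hourly_price: float, hours_used: float) -> str:
    """Compare one month of fixed rental against pay-as-you-go for the same usage."""
    return "monthly" if monthly_price < hourly_price * hours_used else "hourly"


if __name__ == "__main__":
    # Hypothetical prices for one GPU; substitute real quotes.
    monthly, hourly = 500.0, 1.00
    hours = breakeven_hours(monthly, hourly)
    print(f"Break-even: {hours:.0f} h/month ({hours / HOURS_PER_MONTH:.0%} of the month)")
    for used in (200, 730):
        print(used, "hours ->", cheaper_option(monthly, hourly, used))
```

A steady inference service running around the clock sits near 730 hours, so the monthly option wins whenever its price is below 730 times the hourly rate.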

Performance Monitoring delivers real-time optimization for memory usage and power efficiency. WhaleFlux’s dashboard provides visibility into GPU utilization, memory allocation, and power consumption, enabling proactive optimization and capacity planning. The system can identify underutilized resources and recommend configuration adjustments to improve efficiency and reduce costs.
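A basic version of this underutilization check can be built on standard tooling. This sketch parses output in the format produced by `nvidia-smi --query-gpu=index,utilization.gpu,memory.used,power.draw --format=csv,noheader,nounits` sampled over time and flags GPUs whose average utilization falls below a threshold. The sample data and the 30% threshold are hypothetical, and this is not WhaleFlux’s dashboard logic.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical samples in the format of:
#   nvidia-smi --query-gpu=index,utilization.gpu,memory.used,power.draw \
#              --format=csv,noheader,nounits
SAMPLE = """
0, 85, 20480, 68.10
1, 12, 3072, 31.50
0, 90, 20480, 70.20
1, 8, 3072, 29.80
"""


def parse_samples(csv_text: str) -> dict[str, list[float]]:
    """Group utilization.gpu readings (percent) by GPU index."""
    util: dict[str, list[float]] = defaultdict(list)
    for line in csv_text.strip().splitlines():
        index, gpu_util, _mem_mib, _power_w = (f.strip() for f in line.split(","))
        util[index].append(float(gpu_util))
    return dict(util)


def flag_underutilized(util: dict[str, list[float]], threshold_pct: float = 30.0) -> list[str]:
    """Return GPU indices whose mean utilization is below threshold_pct."""
    return sorted(idx for idx, vals in util.items() if vals and mean(vals) < threshold_pct)


if __name__ == "__main__":
    print("Underutilized GPUs:", flag_underutilized(parse_samples(SAMPLE)))
```

Memory and power columns are parsed but ignored here; the same grouping pattern extends to them when deciding whether a GPU can be consolidated or power-capped.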

Part 7. Choosing the Right GPU for Your AI Workloads

Selecting the appropriate GPU architecture requires careful consideration of workload characteristics, performance requirements, and budgetary constraints:

Choose L4/L40 for medium-scale inference applications, budget-conscious projects, and edge deployments. These GPUs deliver excellent performance for most production inference workloads while maintaining favorable power efficiency and total cost of ownership. They’re particularly suitable for organizations running multiple moderate-sized models or handling high-volume inference requests where cost per inference matters significantly.

Upgrade to H100/A100 for large-scale training, massive LLMs, and research workloads requiring the highest computational performance. These flagship GPUs provide the memory bandwidth and computational throughput needed for training billion-parameter models and performing complex research experiments. Their higher cost is justified for workloads where time-to-result directly impacts business outcomes or competitive advantage.

Hybrid Approaches using WhaleFlux to mix L4 for inference with H100 for training provide an optimal balance of performance and efficiency. This configuration allows organizations to leverage each architecture’s strengths – using high-end GPUs for computationally intensive training while deploying cost-effective L4 processors for production inference. WhaleFlux’s management platform simplifies the operation of these heterogeneous environments by automatically routing workloads to appropriate hardware based on their characteristics.

Conclusion: Smart GPU Selection for AI Success

The NVIDIA L4 and L40 GPUs represent a significant advancement in accessible AI acceleration, offering a compelling combination of performance, efficiency, and value. These processors fill a crucial gap in the AI infrastructure landscape, providing enterprise-grade capabilities at accessible price points for production inference workloads.

However, realizing their full potential requires more than just purchasing hardware: proper deployment, configuration, and management are essential for maximizing performance and return on investment. For organizations lacking specialized expertise, the effort of optimizing these systems for specific workloads can erode much of that value.

This is where purpose-built solutions like WhaleFlux deliver exceptional value by simplifying deployment and ensuring optimal performance. Through pre-configured systems, intelligent orchestration, and comprehensive management tools, WhaleFlux transforms capable hardware into efficient AI infrastructure that just works. By handling the complexity behind the scenes, WhaleFlux enables organizations to focus on developing AI solutions rather than managing infrastructure.

As AI continues to evolve from experimental technology to production-critical infrastructure, making smart GPU selections and deployment decisions becomes increasingly important. The organizations that succeed will be those that leverage the right combination of hardware and management solutions to balance performance, cost, and operational complexity.

WhaleFlux: Your Wise Choice

Ready to deploy L4 or L40 GPUs for your AI projects? Explore WhaleFlux’s optimized GPU solutions with expert configuration and management. Our pre-configured systems ensure maximum performance from these efficient processors while our management platform simplifies operation and optimization.

Contact our team today for a customized recommendation on L-series GPUs for your specific workload requirements. We’ll help you design an optimal AI infrastructure that balances performance, cost, and complexity – whether you need dedicated L4/L40 systems or a hybrid approach combining them with higher-end processors.