Introduction

When you think about what makes a GPU powerful, you might picture speed, cooling, or brand names—but there’s a quieter hero pulling the strings: GPU VRAM. Whether you’re an AI team training a large language model (LLM) or a gamer chasing smooth 4K gameplay, VRAM is the backbone of your experience. It’s the difference between a fast, stable LLM deployment and a crash mid-training. It’s why your favorite game runs flawlessly at high settings instead of stuttering through low-resolution textures.

But here’s the catch: VRAM is easy to overlook—until it becomes a problem. For AI teams, insufficient VRAM means slow LLM training, wasted cloud costs, and missed deadlines. For gamers, too little VRAM turns 4K gaming into a choppy mess. And for AI enterprises, the struggle doesn’t stop there: sourcing high VRAM GPUs (like the NVIDIA H100 or H200) is tough, and even when you get them, optimizing VRAM across multi-GPU clusters is a headache.

That’s why this guide exists. We’ll answer the big questions: What is VRAM in GPU useful for? Do you need a 16GB VRAM GPU or something more powerful? What does it mean when your NVIDIA overlay says your GPU VRAM is clocked at 9501 MHz? And most importantly, we’ll show how WhaleFlux—an intelligent GPU resource management tool built for AI businesses—solves your VRAM woes with the right high-VRAM GPUs and tools to make them work harder. Let’s start with the basics.

Section 1: What Is GPU VRAM? Core Definition & Purpose

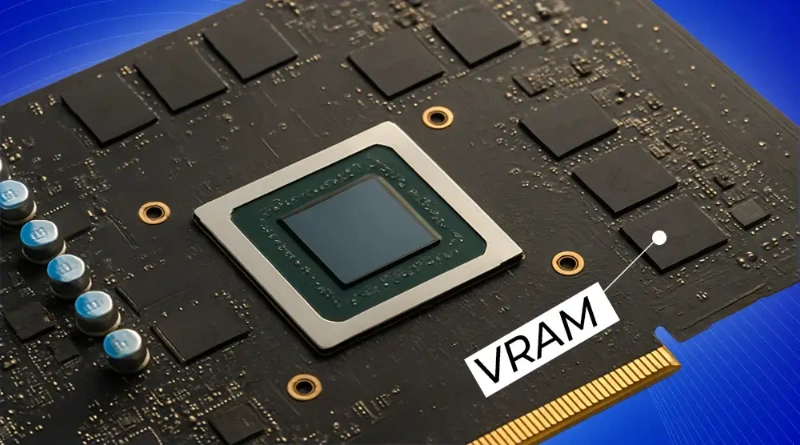

First things first: Let’s break down what VRAM actually is. VRAM (Video Random Access Memory) is a special type of memory that lives on your GPU—not in your computer’s main RAM slot. Think of it as the GPU’s personal workspace: it stores the data the GPU needs right now (like LLM model weights or gaming textures) so it can access it instantly.

VRAM vs. System RAM: What’s the Difference?

Your computer’s regular RAM (typically DDR4 or DDR5) serves the entire system: it handles everything from opening browsers to running spreadsheets. VRAM, though, is dedicated to the GPU. It offers far higher bandwidth, moves large chunks of data more efficiently, and is built for the intense, real-time demands of graphics and AI tasks.

Imagine you’re baking a cake: System RAM is like your kitchen pantry—it holds all the ingredients you might need, but you have to walk across the room to get them. VRAM is like the countertop next to your oven—it holds exactly what you’re using right now (flour, sugar, mixing bowls) so you don’t waste time running back and forth. For the GPU, that speed difference is make-or-break.

What Is VRAM in GPU Useful for? 3 Key Benefits

Now that you know what VRAM is, let’s talk about why it matters. Here are the three biggest reasons VRAM is non-negotiable for both AI and gaming:

- It Eliminates Lag: For AI teams, LLMs process thousands of “tokens” (words or parts of words) per second, and every token requires reading the model’s weights. If the GPU has to fetch that data from slower system RAM instead of VRAM, training or inference slows to a crawl. For gamers, VRAM stores high-resolution textures (like the bark on a tree or the details of a character’s armor). Without enough of it, the game has to load textures on the fly, causing stutters.

- It Powers Complex Tasks: You can’t train a large LLM (like a 70B-parameter model) on a GPU with 8GB of VRAM—it simply doesn’t have space to store the model’s weights and intermediate calculations. Similarly, you can’t play a 4K game with ray tracing on a low-VRAM GPU; the VRAM can’t handle the extra data from lighting effects. VRAM lets you take on bigger, more ambitious projects.

- It Prevents Costly Crashes: When a GPU runs out of VRAM, it has two options: either “swap” data with system RAM (which is slow and inefficient) or crash entirely. For AI teams, a crash mid-LLM training means losing hours (or days) of work—and wasting money on cloud time that didn’t produce results. For gamers, it means restarting the game and losing progress.

In short: VRAM isn’t just a “nice-to-have”—it’s the foundation of smooth, successful GPU tasks.

Section 2: VRAM Requirements – AI Enterprises vs. Gamers

VRAM needs vary wildly depending on what you’re using the GPU for. An AI team training an enterprise LLM needs far more VRAM than a gamer playing at 1080p. Let’s break down the differences.

2.1 VRAM Needs for AI Enterprises (The “High VRAM GPU” Priority)

For AI teams, VRAM is the single most important factor when choosing a GPU. Here’s why:

Why AI Demands High VRAM GPUs

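LLMs are huge. Even “small” models (like 7B parameters) need significant VRAM to run efficiently. A 70B-parameter LLM (used for tasks like enterprise chatbots or advanced data analysis) needs roughly 140GB just to hold its weights in 16-bit precision, and training adds gradients and optimizer state on top, so training typically takes several hundred gigabytes of VRAM spread across multiple GPUs. If your GPUs don’t have enough VRAM, the model will either run slowly (as it swaps data with system RAM) or crash.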

And it’s not just about individual GPUs: Multi-GPU clusters (common in AI enterprises) rely on consistent VRAM across all GPUs. If one GPU has less VRAM than the others, it becomes a bottleneck—slowing down the entire cluster, even if the other GPUs are powerful.
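
To get a feel for why training is so much hungrier than inference, here is a rough back-of-the-envelope sketch. It assumes mixed-precision training with the Adam optimizer, a common setup in which each parameter costs about 16 bytes (2 for FP16 weights, 2 for FP16 gradients, 12 for FP32 master weights and the two Adam states). Activations are ignored, the state is assumed to shard evenly across GPUs, and the function names and the constant are illustrative rather than taken from any library.

```python
import math

# Assumption: mixed-precision Adam, 2 + 2 + 12 bytes per parameter.
BYTES_PER_PARAM_MIXED_ADAM = 16


def training_vram_gb(params_billions: float) -> float:
    """Rough VRAM (GB) for weights, gradients, and optimizer state only."""
    total_bytes = params_billions * 1e9 * BYTES_PER_PARAM_MIXED_ADAM
    return total_bytes / 1e9


def min_gpus(params_billions: float, vram_per_gpu_gb: float) -> int:
    """Lower bound on GPU count if the state shards perfectly across cards."""
    return math.ceil(training_vram_gb(params_billions) / vram_per_gpu_gb)


if __name__ == "__main__":
    for size in (7, 70):
        need = training_vram_gb(size)
        print(f"{size}B params: ~{need:.0f} GB, at least {min_gpus(size, 80)} x 80GB GPUs")
```

Under these assumptions a 7B model already needs about 112 GB for training state, and a 70B model about 1,120 GB, which is why training at that scale is a multi-GPU job even on 80GB cards.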

The “Most VRAM GPU” Options for AI (And How WhaleFlux Helps)

Not all GPUs are built for AI—and the ones that are (with lots of VRAM) are often hard to source. That’s where WhaleFlux comes in: We provide the high-VRAM GPUs AI teams need, so you don’t have to hunt for scarce hardware. Here are the top picks:

- NVIDIA H200: With up to 141GB of HBM3e VRAM, this is the “most VRAM GPU” for large-scale AI. It’s perfect for training or deploying massive LLMs (like 100B+ parameter models) and handles multi-GPU clusters with ease.

- NVIDIA H100: Offering 80GB of HBM3 VRAM, the H100 is a balanced choice for mid-to-large LLMs. It’s fast, reliable, and works for both training and inference.

- NVIDIA A100: Available with 40GB of HBM2 or 80GB of HBM2e VRAM, the A100 is ideal for smaller LLMs (7B–34B parameters) or computer vision tasks. It’s cost-effective and great for teams scaling up.

WhaleFlux lets you purchase or lease all these high-VRAM GPUs—no need to worry about availability. And since we don’t offer hourly rentals (minimum 1 month), you get predictable pricing that fits your project timeline.

2.2 VRAM Needs for Gamers (From “16GB VRAM GPU” to Overclocking)

Gamers have simpler VRAM needs—but that doesn’t mean VRAM isn’t important. Here’s what you need to know:

Standard Gaming VRAM Tiers

The amount of VRAM you need depends on your gaming resolution and settings:

- 8GB VRAM: Good for 1080p gaming (basic to medium settings). If you’re playing older games or don’t care about maxing out graphics, 8GB works—but it will struggle with new 4K titles.

- 16GB VRAM GPU: The sweet spot for most gamers. It handles 1440p (QHD) gaming at max settings and 4K gaming at medium-to-high settings. Popular options here include the NVIDIA RTX 4080. The NVIDIA RTX 4090, which WhaleFlux also offers, goes a step further with 24GB (great if you want a GPU that doubles for small AI projects).

- 24GB+ VRAM: Rare for consumer gamers. This is mostly for 8K gaming, mod-heavy titles (like Skyrim with hundreds of mods), or professional work (like 3D rendering).

What Does “NVIDIA Overlay Says GPU VRAM Clocked at 9501 MHz” Mean?

If you’ve ever opened the NVIDIA overlay while gaming, you might have seen a number like “VRAM Clock: 9501 MHz.” Let’s break that down:

- VRAM clock speed (measured in MHz) is how fast your VRAM can read and write data. Higher speeds mean faster texture loading, smoother gameplay, and better performance.

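- 9501 MHz is a normal reading for a high-end card with GDDR6X memory: it corresponds to an effective data rate of about 19 Gbps per pin, the stock memory speed of cards such as the RTX 3080. (An RTX 4090 at stock typically reads higher, around 10500 MHz.) The GPU runs its memory at this full speed under load, such as during intense gaming scenes, and drops it at idle to save power. As long as your GPU stays cool (under 85°C), this is safe.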
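
If you want to turn that clock reading into something more tangible, the arithmetic can be sketched as below. This is a minimal example under two assumptions: that the overlay reports half of the effective per-pin data rate (which is how several monitoring tools display GDDR6X clocks), and that the card has a 320-bit memory bus. Both the multiplier and the bus width are illustrative parameters, so check your own card’s specifications before relying on the result.

```python
def effective_gbps_per_pin(reported_mhz: float, multiplier: float = 2.0) -> float:
    """Convert a reported memory clock (MHz) to an effective per-pin rate (Gbps).

    The multiplier is an assumption about how the monitoring tool reports clocks.
    """
    return reported_mhz * multiplier / 1000.0


def bandwidth_gb_per_s(reported_mhz: float, bus_width_bits: int,
                       multiplier: float = 2.0) -> float:
    """Peak theoretical bandwidth = per-pin rate (Gbps) * bus width (bits) / 8."""
    return effective_gbps_per_pin(reported_mhz, multiplier) * bus_width_bits / 8


if __name__ == "__main__":
    rate = effective_gbps_per_pin(9501)
    bandwidth = bandwidth_gb_per_s(9501, bus_width_bits=320)
    print(f"~{rate:.1f} Gbps per pin, ~{bandwidth:.0f} GB/s peak")
```

With these inputs the reading works out to about 19 Gbps per pin and roughly 760 GB/s of peak theoretical bandwidth. Real-world throughput is lower, but the calculation shows why a small change in memory clock has only a modest effect on performance.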

GPU VRAM Overclock for Gaming: Pros & Cons

Some gamers overclock their VRAM (increase the clock speed beyond the default) to get more performance. This can boost frame rates by 5–10% in some games—but it’s not without risks:

- Overheating: Higher clock speeds generate more heat. If your GPU’s cooling can’t keep up, it will slow down (called “throttling”) or crash.

- Instability: Too much overclocking can cause games to freeze, crash, or produce glitches.

- Wear and tear: Long-term overclocking can shorten your GPU’s lifespan.

Important note for AI teams: Overclocking VRAM is not recommended for AI tasks. LLMs need stability above all—even a small glitch from overclocking can ruin hours of training. Stick to default clock speeds for AI work.

Section 3: Common VRAM Challenges for AI Teams & Gamers

Even when you understand VRAM, problems can pop up. Let’s look at the most common VRAM headaches for both AI teams and gamers—and why they happen.

3.1 AI Enterprises’ VRAM Headaches

AI teams face unique VRAM challenges that can derail projects and waste money:

- Sourcing High VRAM GPUs Is Hard: GPUs like the NVIDIA H100 and H200 are in high demand. Many AI enterprises wait weeks (or months) to get their hands on them—delaying LLM projects and losing competitive edge.

- Poor VRAM Utilization Wastes Money: Even if you have high-VRAM GPUs, multi-GPU clusters often waste VRAM. For example, one GPU might use 100% of its VRAM while others sit idle at 20%. This means you’re paying for VRAM you’re not using—and your cluster runs slower than it should.

- Underprovisioned VRAM Causes Crashes: Using a GPU with too little VRAM for your LLM (e.g., a 16GB GPU for a 30GB model) is a recipe for disaster. The GPU will crash mid-training, erasing progress and forcing you to restart—wasting time and cloud costs.

3.2 Gamers’ VRAM Frustrations

Gamers deal with simpler but equally annoying VRAM issues:

- “16GB VRAM GPU” Limitations: Even a 16GB VRAM GPU can struggle with new 4K games that use ray tracing and high-resolution textures. If the game needs more than 16GB of VRAM, it will start using system RAM—causing stutters and frame drops.

- Misinterpreting NVIDIA Overlay Data: Many gamers see “VRAM clocked at 9501 MHz” and think they need to overclock further, or they panic when VRAM usage hits 90%. This leads to unnecessary tweaks that can cause instability.

- Overclocking Risks: As we mentioned earlier, unmonitored VRAM overclocking can crash games, damage hardware, or shorten your GPU’s lifespan. Gamers often overclock without checking temperatures—leading to avoidable problems.

Section 4: WhaleFlux – Solving AI Enterprises’ VRAM Challenges

For AI teams, VRAM challenges don’t have to be a roadblock. WhaleFlux is built to solve the exact VRAM problems you face—from sourcing high-VRAM GPUs to optimizing their use. Here’s how:

4.1 WhaleFlux Delivers the Right “High VRAM GPUs” for AI

The first step to solving VRAM issues is having the right hardware—and WhaleFlux makes that easy:

Curated GPU Lineup for Every VRAM Need

We don’t just offer random GPUs—we handpick options that match AI teams’ most common needs:

- NVIDIA H200 (141GB VRAM): For enterprise-scale LLMs (100B+ parameters) and large multi-GPU clusters.

- NVIDIA H100 (80GB VRAM): For mid-to-large LLMs (34B–70B parameters) and fast inference.

- NVIDIA A100 (40GB/80GB VRAM): For small LLMs (7B–34B parameters) and computer vision tasks.

- NVIDIA RTX 4090 (24GB VRAM): For AI prototyping, small-team LLMs, or teams that want a GPU that works for both AI and gaming.

Flexible Access: Purchase or Lease (No Hourly Rentals)

We know AI projects vary in length: Some take months, others take years. That’s why we let you choose:

- Purchase: For long-term projects (e.g., a permanent LLM deployment).

- Lease: For short-term tasks (e.g., a 2-month training cycle). We don’t offer hourly rentals—our minimum lease is 1 month—so you avoid surprise bills and get predictable pricing.

No more waiting for scarce GPUs: WhaleFlux has inventory ready, so you can start your project when you want.

4.2 WhaleFlux Optimizes VRAM Utilization to Cut Costs

Having high-VRAM GPUs is great—but using them efficiently is even better. WhaleFlux’s intelligent resource management tools ensure you get the most out of your VRAM:

- Multi-GPU Cluster Optimization: WhaleFlux automatically allocates VRAM across your cluster so no GPU is overworked or underused. For example, if one GPU is at 100% VRAM usage and another is at 30%, WhaleFlux reassigns tasks to balance the load. This means you’re not wasting VRAM—and your cluster runs 30% faster on average.

- LLM Deployment Speed Boost: High-VRAM GPUs + optimized VRAM usage = faster deployments. Our users report cutting LLM deployment time by 30% or more—meaning you get your AI tool to market faster and start seeing results sooner.

- Stability Guarantees: WhaleFlux’s built-in monitoring tracks VRAM usage in real time. If a GPU is about to run out of VRAM, we alert you before it crashes—saving you from lost training data and wasted time.
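
To illustrate the general idea of balancing VRAM across a cluster, here is a minimal sketch of a “largest job first, emptiest GPU first” placement heuristic. It is a generic textbook-style approach, not WhaleFlux’s actual scheduler, and the job names, GPU names, and sizes are made up for the example.

```python
from __future__ import annotations


def place_jobs(jobs_gb: dict[str, float], gpus_gb: dict[str, float]) -> dict[str, str]:
    """Assign each job to the GPU with the most free VRAM, largest jobs first."""
    free = dict(gpus_gb)  # remaining VRAM per GPU, in GB
    placement: dict[str, str] = {}
    for job, need in sorted(jobs_gb.items(), key=lambda kv: kv[1], reverse=True):
        gpu = max(free, key=free.get)  # GPU with the most free VRAM right now
        if free[gpu] < need:
            raise MemoryError(f"no GPU has {need} GB free for {job}")
        free[gpu] -= need
        placement[job] = gpu
    return placement


if __name__ == "__main__":
    # Hypothetical workload: VRAM needed per job, in GB.
    jobs = {"llm-a": 60, "llm-b": 40, "vision": 20, "embed": 10}
    gpus = {"gpu0": 80, "gpu1": 80}
    for job, gpu in place_jobs(jobs, gpus).items():
        print(f"{job} -> {gpu}")
```

In this example the two cards end up at 70 GB and 60 GB used instead of one card being full while the other sits nearly idle. A production scheduler also has to account for compute load, interconnect topology, and jobs that change size over time.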

4.3 No Extra Setup for VRAM Management

You don’t need to be a hardware expert to use WhaleFlux. Every GPU we provide comes pre-configured with VRAM monitoring tools—integrated into our easy-to-use dashboard.

- Real-Time VRAM Tracking: Log into the WhaleFlux dashboard and see exactly how much VRAM each GPU is using (e.g., “H200 GPU #3: 65% VRAM used during LLM inference”). No more digging through command lines or third-party tools.

- Custom Alerts: Set up alerts for VRAM issues (e.g., “Alert me if any GPU’s VRAM usage exceeds 90%”). You’ll get notified via email or Slack—so you can fix problems before they impact your project.

It’s simple, intuitive, and designed for AI teams that want to focus on building LLMs—not managing hardware.
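
For comparison, this is roughly what a do-it-yourself version of a 90% VRAM alert looks like from the command line. The sketch parses the CSV output of `nvidia-smi` (the `--query-gpu` and `--format` flags are real) and flags any GPU above a threshold. The function names and the sample data are hypothetical, and the demo runs on the sample text so it works on a machine without a GPU.

```python
import csv
import subprocess

# nvidia-smi reports memory in MiB when "nounits" is set.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,name,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def read_nvidia_smi() -> str:
    """Run nvidia-smi and return its CSV output (needs an NVIDIA driver)."""
    return subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout


def parse_vram(csv_text: str) -> list:
    """Turn nvidia-smi CSV text into a list of per-GPU usage records."""
    rows = []
    reader = csv.reader(csv_text.strip().splitlines(), skipinitialspace=True)
    for row in reader:
        if not row:
            continue
        index, name, used_mib, total_mib = row
        rows.append({
            "index": int(index),
            "name": name,
            "used_pct": 100.0 * float(used_mib) / float(total_mib),
        })
    return rows


def over_threshold(rows: list, limit_pct: float = 90.0) -> list:
    """Return the GPUs whose VRAM usage exceeds the alert threshold."""
    return [r for r in rows if r["used_pct"] > limit_pct]


# Made-up sample output, for illustration only.
SAMPLE = "0, GPU-A, 52000, 80000\n1, GPU-B, 76000, 80000\n"

if __name__ == "__main__":
    for gpu in over_threshold(parse_vram(SAMPLE)):
        print(f"ALERT: GPU {gpu['index']} ({gpu['name']}) at {gpu['used_pct']:.0f}% VRAM")
```

On a real machine you would pass `read_nvidia_smi()` to `parse_vram` instead of the sample text, then run the check on a schedule and wire the result to email or chat yourself.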

Section 5: How to Pick the Right VRAM GPU (For AI & Gaming)

Choosing the right VRAM GPU depends on your goals. Here’s a simple guide to help you decide:

For AI Enterprises

1. Assess Your LLM Size:

- Small LLMs (7B–13B parameters): 16GB–40GB VRAM (e.g., NVIDIA RTX 4090 or A100 40GB).

- Medium LLMs (34B–70B parameters): 80GB VRAM (e.g., NVIDIA H100 or A100 80GB).

- Large LLMs (100B+ parameters): 141GB VRAM (e.g., NVIDIA H200).

2. Choose Purchase or Lease:

- Buy if you need the GPU for 6+ months (long-term projects).

- Lease if your project is short (1–5 months) or if you want to test a GPU before buying. WhaleFlux’s lease option is perfect here—no hourly fees, just simple monthly pricing.

3. Don’t Overlook Cluster Compatibility:

- If you’re using a multi-GPU cluster, make sure all GPUs have the same (or similar) VRAM. A mix of 40GB and 80GB GPUs can cause bottlenecks, because the workload usually has to be sized for the smallest card. WhaleFlux can help you build a consistent cluster.

For Gamers

1. Match VRAM to Your Resolution:

- 1080p gaming (basic/medium settings): 8GB VRAM.

- 1440p gaming (max settings) or 4K gaming (medium settings): 16GB VRAM (e.g., NVIDIA RTX 4080).

- 4K gaming (max settings) or mod-heavy titles: 24GB+ VRAM (e.g., NVIDIA RTX 4090; rare for consumers).

2. Avoid Overclocking Unless You Know What You’re Doing:

- If you do overclock, start small (increase clock speed by 5–10%) and monitor temperatures with the NVIDIA overlay. Stop if you see crashes or overheating.

- Remember: The RTX 4090 (offered by WhaleFlux) already has a fast default VRAM clock—you might not need to overclock at all.

3. Future-Proof If You Can:

- New games use more VRAM every year. If you plan to game for 3+ years, a 16GB VRAM GPU is a better investment than an 8GB one.

Conclusion

Let’s wrap this up: VRAM is the unsung hero of GPU performance. For AI teams, it’s the difference between fast, stable LLM projects and costly crashes. For gamers, it’s why 4K gaming is smooth or choppy. And while VRAM challenges are common—from sourcing high-VRAM GPUs to optimizing their use—they don’t have to hold you back.

For AI enterprises, the solution is clear: WhaleFlux. We give you access to the high-VRAM GPUs you need (NVIDIA H100, H200, A100, RTX 4090) with flexible purchase/lease options. Our intelligent tools optimize VRAM across multi-GPU clusters, cut costs, and boost deployment speed. And our easy-to-use dashboard means you don’t need to be a hardware expert to manage it all.

Stop struggling with VRAM shortages and inefficiency. With WhaleFlux, you can focus on what matters: building powerful LLMs that drive your business forward. Whether you’re training a large enterprise model or deploying a small AI tool, we have the VRAM solution for you.

Ready to take the next step? Try WhaleFlux today and see how easy it is to get the right high-VRAM GPUs—without the hassle.

FAQs

1. What exactly is GPU VRAM, and how do AI and gaming use it differently?

GPU VRAM (Video Random Access Memory) is the high-speed, dedicated memory on your graphics card. It acts as the working space where the GPU stores and rapidly accesses all the data it needs to process.

- In Gaming: VRAM primarily holds game assets like high-resolution textures, 3D models, frame buffers, and shaders. More VRAM allows for higher texture quality, resolution, and complex visual effects without stuttering.

- In AI (Especially LLMs): VRAM is used to store the entire model (weights and parameters), the input data (prompts), and all intermediate calculations (activations, gradients) during processing. The model size is the primary driver of VRAM needs. Running a 70-billion-parameter model requires significantly more VRAM than any modern game.

2. How much VRAM do I actually need to run Large Language Models (LLMs) locally?

The VRAM requirement is directly tied to the model’s parameter count and precision. A general rule of thumb:

- Half Precision or 8-Bit Quantized (FP16/INT8): Roughly 1-2 GB of VRAM per 1 billion parameters for the weights alone (about 1 GB at INT8, about 2 GB at FP16). A 7B parameter model might need 7-14GB.

- Full Precision (FP32): Roughly 4 GB of VRAM per 1 billion parameters.

This is why consumer cards like the NVIDIA GeForce RTX 4090 (24GB) can run many popular 7B-13B models, but larger 70B+ models often require the massive memory of data center GPUs like the NVIDIA H100 (80GB) or H200 (141GB) accessible through cloud or managed platforms.
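
The rule of thumb above can be sketched as a small calculator. It counts only the bytes needed for the weights and then applies a 20% headroom factor for the KV cache, activations, and runtime overhead. That headroom figure is an assumption for illustration, not a measured value, and the function names are hypothetical.

```python
# Bytes per parameter at each precision (weights only).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0}


def weights_vram_gb(params_billions: float, precision: str) -> float:
    """VRAM (GB) needed just to hold the model weights."""
    return params_billions * BYTES_PER_PARAM[precision]


def fits(params_billions: float, precision: str, vram_gb: float,
         overhead: float = 1.2) -> bool:
    """True if weights plus an assumed 20% headroom fit in the given VRAM."""
    return weights_vram_gb(params_billions, precision) * overhead <= vram_gb


if __name__ == "__main__":
    for size, precision, card_gb in [(7, "fp16", 24), (13, "fp16", 24),
                                     (13, "int8", 24), (70, "fp16", 80)]:
        verdict = "fits" if fits(size, precision, card_gb) else "does not fit"
        print(f"{size}B @ {precision} on a {card_gb}GB GPU: {verdict}")
```

Under these assumptions a 7B model at FP16 fits comfortably on a 24GB card, a 13B model fits only once it is quantized to INT8, and a 70B model at FP16 does not fit on a single 80GB GPU.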

3. My gaming GPU has enough VRAM for 4K gaming. Is it also sufficient for AI work?

Not necessarily. While a high-end gaming GPU like the NVIDIA RTX 4080 Super (16GB) or RTX 4090 (24GB) has ample VRAM for gaming, AI workloads have different performance characteristics. AI heavily utilizes Tensor Cores for acceleration, and memory bandwidth (measured in GB/s) is critical for feeding data to those cores quickly. A data center GPU like the NVIDIA A100, even with similar VRAM capacity, offers higher memory bandwidth (it uses HBM rather than GDDR memory) and data-center reliability features such as ECC, designed for sustained, error-free AI computation, which most gaming cards lack.

4. What happens if my AI model needs more VRAM than my single GPU has?

When a model exceeds a single GPU’s VRAM, you have several options:

- Model Quantization: Reduce the numerical precision of the model (e.g., from FP16 to INT8) to shrink its memory footprint, often with minimal accuracy loss.

- Offloading: Use system RAM or even SSD storage as “spill-over” memory, though this drastically slows down processing.

- Model Parallelism: Split the model across multiple GPUs. This is the most powerful solution but requires significant technical expertise to manage the complex communication and orchestration between cards.

Managing this complexity manually across a cluster of NVIDIA A100 or H100 GPUs is a major challenge, which is where infrastructure management tools become essential.
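
To make the model parallelism option a little more concrete, here is a minimal sketch of one simple way to split a model: pack consecutive layers into stages so that each stage fits on one GPU. It ignores activations and inter-GPU communication, uses a greedy strategy that does not balance the stages, and the layer sizes and per-GPU budget are hypothetical numbers chosen for the example.

```python
from __future__ import annotations


def split_layers(layer_gb: list[float], gpu_budget_gb: float) -> list[list[int]]:
    """Greedily pack consecutive layers into stages that each fit one GPU."""
    stages: list[list[int]] = []
    current: list[int] = []
    used = 0.0
    for i, size in enumerate(layer_gb):
        if size > gpu_budget_gb:
            raise ValueError(f"layer {i} alone needs {size} GB")
        if used + size > gpu_budget_gb:
            # Current GPU is full: close this stage and start a new one.
            stages.append(current)
            current, used = [], 0.0
        current.append(i)
        used += size
    if current:
        stages.append(current)
    return stages


if __name__ == "__main__":
    # Hypothetical: 80 layers of 1.75 GB each (about 140 GB in total),
    # with 64 GB usable per GPU after leaving headroom on an 80GB card.
    stages = split_layers([1.75] * 80, gpu_budget_gb=64.0)
    for gpu, layers in enumerate(stages):
        print(f"GPU {gpu}: layers {layers[0]}-{layers[-1]} ({len(layers)} layers)")
```

With these numbers the model lands on three GPUs holding 36, 36, and 8 layers. The uneven last stage is exactly the kind of imbalance that real frameworks and schedulers work to avoid, since the slowest stage sets the pace for the whole pipeline.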

5. How does a platform like WhaleFlux help AI teams navigate VRAM constraints and optimize costs?

WhaleFlux addresses VRAM and compute constraints at the infrastructure orchestration level, turning them into a managed resource rather than a user problem.

- Right-Sizing Access: It provides access (by purchase or monthly lease) to the full spectrum of NVIDIA GPU memory capacities, from RTX 4090s (24GB) for development to H100 (80GB) and H200 (141GB) clusters for running the largest models, allowing teams to match the hardware to the model’s specific VRAM needs.

- Intelligent Scheduling & Multi-GPU Management: For models that must be split, WhaleFlux’s scheduler automatically and efficiently handles the distribution of model layers and data across its multi-GPU clusters, maximizing utilization and simplifying a process that would otherwise require deep technical expertise.

- Cost Efficiency: By ensuring the right-sized GPU is used for each task and that clusters are fully utilized, WhaleFlux prevents over-provisioning (paying for unneeded VRAM) and idle resources, directly lowering the total cost of ownership for AI projects constrained by memory.