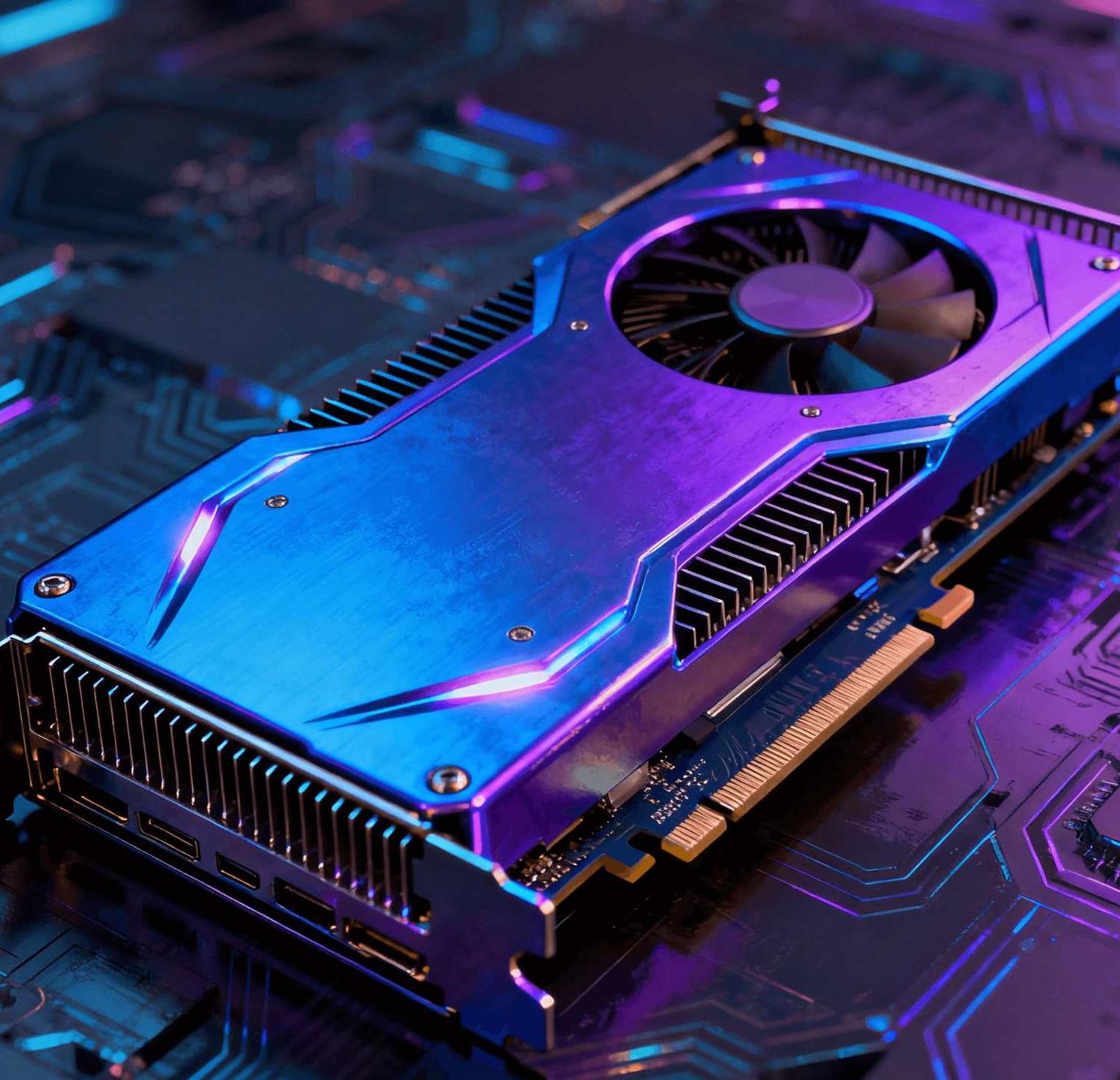

What is a GPU?

A GPU (Graphics Processing Unit) is a processor designed for graphics rendering and parallel computing. Unlike CPUs, which have a small number of cores optimized for sequential work, GPUs have a highly parallel architecture that lets them run thousands of computational tasks simultaneously. They excel at processing images, videos, animations, and large-scale data computations. Modern GPUs are used not only for graphics rendering but also widely in scientific computing, artificial intelligence, deep learning, and high-performance computing.

GPUs fall into two main types: integrated and discrete. Integrated GPUs are built into the CPU (or, on older systems, the motherboard chipset) and share system memory, so they consume less power but offer limited performance. Discrete GPUs come with dedicated memory (VRAM) and their own cooling, and deliver significantly more computing power. GPUs are now a core component of modern computing systems.

Why is GPU Health Check Necessary?

1. Ensure System Stability

GPUs generate substantial heat under high loads. Excessively high temperatures can lead to hardware damage or system crashes. Regular health checks monitor critical parameters such as GPU temperature and power consumption, ensuring the device operates within safe limits. For data centers and enterprise environments, GPU failures may cause service outages, resulting in significant financial losses.

2. Prevent Performance Degradation

Over prolonged operation, GPUs may experience performance degradation, such as VRAM errors or reduced clock speeds. Health checks enable timely detection of these issues and facilitate corrective actions, preventing computational tasks from failing due to hardware problems. This is particularly critical in AI training and scientific computing scenarios, where the stability of GPU performance directly impacts task efficiency.

3. Extend Device Lifespan

Regular GPU health monitoring helps prolong device lifespan. By detecting early signs of failure (e.g., ECC errors, abnormal temperatures), maintenance can be performed before issues escalate, reducing hardware replacement costs. Cloud service providers typically implement minute-level health monitoring for GPU devices to ensure resource reliability and availability.

4. Optimize Resource Allocation

In multi-GPU environments, health checks help identify underperforming devices, enabling optimized workload distribution. System administrators can use GPU health status to decide whether to include a device in computing partitions or flag it for maintenance.
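As a rough illustration of that decision, the sketch below splits devices into a schedulable set and a maintenance set. The `GpuStatus` fields and the pass/fail rule are hypothetical and not taken from any particular scheduler.

```python
from dataclasses import dataclass


@dataclass
class GpuStatus:
    # Hypothetical per-device summary produced by an earlier health check.
    index: int
    healthy: bool
    reason: str = ""


def partition_devices(statuses):
    """Split devices into those to schedule on and those to flag for maintenance."""
    schedulable = [s.index for s in statuses if s.healthy]
    maintenance = {s.index: s.reason for s in statuses if not s.healthy}
    return schedulable, maintenance


statuses = [
    GpuStatus(0, True),
    GpuStatus(1, False, "uncorrectable ECC errors"),
    GpuStatus(2, True),
]
schedulable, maintenance = partition_devices(statuses)
print(schedulable)   # [0, 2]
print(maintenance)   # {1: 'uncorrectable ECC errors'}
```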

Key Metrics for Measuring GPU Health

1. Temperature Monitoring

GPU core temperature and VRAM temperature are fundamental health indicators. As a general guide, the GPU core temperature should remain below 105°C and VRAM temperature below 85°C, although exact limits vary by model and should be taken from the vendor's specifications. When temperatures get too high, the GPU applies thermal throttling, lowering its clock speeds to cool down at the cost of performance.
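A minimal sketch of such a temperature check follows. The default limits are the figures quoted above, while `margin_c` is an assumed safety margin rather than a vendor value; both should be adjusted per GPU model.

```python
def classify_temperature(core_c, vram_c, core_limit_c=105.0,
                         vram_limit_c=85.0, margin_c=10.0):
    """Return 'ok', 'warning', or 'critical' for one temperature sample."""
    # At or above either limit: treat as critical.
    if core_c >= core_limit_c or vram_c >= vram_limit_c:
        return "critical"
    # Within margin_c degrees of either limit: warn early.
    if core_c >= core_limit_c - margin_c or vram_c >= vram_limit_c - margin_c:
        return "warning"
    return "ok"


print(classify_temperature(70, 60))   # ok
print(classify_temperature(96, 60))   # warning
print(classify_temperature(70, 85))   # critical
```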

2. Utilization Metrics

GPU utilization includes compute unit usage, VRAM usage, and encoder/decoder usage. A healthy GPU should maintain stable utilization under high loads without abnormal fluctuations. Unusual utilization patterns may indicate software configuration issues or hardware failures.
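One simple way to quantify "abnormal fluctuations" is the coefficient of variation (standard deviation divided by mean) over a window of utilization samples taken under steady load. This is only a sketch: the `max_cv` cutoff of 0.2 is an assumption and would need tuning per workload.

```python
from statistics import mean, pstdev


def is_unstable(samples, max_cv=0.2):
    """True if utilization samples vary more than max_cv relative to their mean."""
    avg = mean(samples)
    if avg == 0:
        return False  # an idle GPU is not fluctuating
    return pstdev(samples) / avg > max_cv


steady = [97, 99, 98, 96, 99]        # illustrative percentages
fluctuating = [98, 20, 95, 15, 97]   # illustrative percentages
print(is_unstable(steady))        # False
print(is_unstable(fluctuating))   # True
```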

3. Error Detection

ECC (Error-Correcting Code) error counts are critical for assessing GPU health. A rising number of VRAM ECC errors, and any uncorrectable error in particular, may signal an underlying hardware problem that requires further inspection. Xid errors (error codes that the NVIDIA driver writes to the system log) and NVLink errors are also key indicators.
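The sketch below shows two small helpers for this kind of error tracking: one extracts the device address and code from an Xid line in the kernel log, the other computes how many new ECC errors appeared between two counter readings. The sample log line is illustrative, and the exact log format can differ between driver versions, so the pattern should be treated as an assumption.

```python
import re

# Matches the PCI address and numeric code in an NVIDIA driver Xid log line.
XID_PATTERN = re.compile(r"NVRM: Xid \(PCI:([0-9a-fA-F:.]+)\): (\d+)")


def parse_xid(line):
    """Return (pci_address, xid_code) if the line reports an Xid, else None."""
    match = XID_PATTERN.search(line)
    if match is None:
        return None
    return match.group(1), int(match.group(2))


def ecc_delta(previous, current):
    """Number of new ECC errors between two readings of a cumulative counter."""
    # A counter that went down was reset in between, so count from zero.
    return current - previous if current >= previous else current


# Illustrative log line, not captured from real hardware.
sample = "kernel: NVRM: Xid (PCI:0000:3b:00): 79, pid=1234, GPU has fallen off the bus."
print(parse_xid(sample))   # ('0000:3b:00', 79)
print(ecc_delta(12, 15))   # 3
```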

4. Power Consumption Monitoring

GPU power consumption reflects the device’s energy efficiency and operational status. Abnormal power fluctuations may indicate power supply issues or hardware failures. Most GPUs have a predefined power limit; a GPU that constantly runs at that limit is typically held back by lower clock speeds, and a draw beyond what the power supply can deliver can compromise stability.

5. Clock Speeds

Stable core and VRAM clock speeds are a key indicator of GPU health. Clocks that drop while the GPU is under load usually point to overheating or insufficient power supply; lower clocks on an idle GPU are normal power saving.
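A hedged sketch of a clock check: it only reports throttling when the GPU is busy, since idle GPUs lower their clocks by design. The `min_ratio` and `busy_pct` defaults are assumptions, not vendor figures.

```python
def is_throttled(sm_clock_mhz, max_sm_clock_mhz, utilization_pct,
                 min_ratio=0.8, busy_pct=90.0):
    """True if the GPU is busy but running well below its maximum core clock."""
    if utilization_pct < busy_pct:
        return False  # idle or lightly loaded: low clocks are expected
    return sm_clock_mhz < min_ratio * max_sm_clock_mhz


print(is_throttled(1100, 1980, 99))   # True
print(is_throttled(1900, 1980, 99))   # False
print(is_throttled(300, 1980, 2))     # False
```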

6. Link Status

For multi-GPU systems, NVLink and PCIe link status is crucial. A link that drops out, or that negotiates a lower speed or fewer lanes than the hardware supports, severely reduces the efficiency of multi-GPU workloads.
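To make the bandwidth impact concrete, the sketch below estimates how much of its maximum PCIe bandwidth a link currently provides, given the negotiated generation and lane width. The per-lane figures are approximate usable rates per direction. Many GPUs lower the PCIe generation while idle, so a check like this is best run under load.

```python
# Approximate usable bandwidth per lane, per direction, in GB/s.
PCIE_GB_PER_S_PER_LANE = {1: 0.25, 2: 0.5, 3: 0.985, 4: 1.969, 5: 3.938}


def link_bandwidth_gb_per_s(gen, width):
    """Approximate one-direction bandwidth of a PCIe link."""
    return PCIE_GB_PER_S_PER_LANE[gen] * width


def link_fraction(gen_current, width_current, gen_max, width_max):
    """Fraction of the maximum link bandwidth currently available."""
    current = link_bandwidth_gb_per_s(gen_current, width_current)
    maximum = link_bandwidth_gb_per_s(gen_max, width_max)
    return current / maximum


# A Gen4 x16 card that has trained at only x8 has about half its bandwidth.
print(round(link_fraction(4, 8, 4, 16), 2))    # 0.5
print(round(link_fraction(4, 16, 4, 16), 2))   # 1.0
```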

Best Practices for Implementing GPU Health Checks

Regular Monitoring

Collect GPU metrics such as temperature, utilization, and error counts continuously, at roughly one-minute intervals. Cloud service providers typically retrieve this data through GPU driver libraries such as the NVIDIA Management Library (NVML), which is shipped as libnvidia-ml.so.1 on Linux and nvml.dll on Windows.
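As one possible way to collect these metrics without binding to the library directly, the sketch below calls `nvidia-smi` (NVIDIA's command-line tool built on NVML) and parses its CSV output. The chosen fields are an assumption about what to collect, and the sample output is illustrative. Fields a GPU does not support are reported as non-numeric text, which this sketch does not handle.

```python
import csv
import io
import subprocess

FIELDS = ["index", "temperature.gpu", "utilization.gpu", "power.draw"]


def parse_gpu_csv(text):
    """Parse nvidia-smi CSV output (noheader, nounits) into a list of dicts."""
    records = []
    for row in csv.reader(io.StringIO(text), skipinitialspace=True):
        if not row:
            continue  # skip blank lines
        record = {name: float(value) for name, value in zip(FIELDS, row)}
        record["index"] = int(record["index"])
        records.append(record)
    return records


def query_gpus():
    """Run nvidia-smi once; requires an installed NVIDIA driver."""
    cmd = ["nvidia-smi", f"--query-gpu={','.join(FIELDS)}",
           "--format=csv,noheader,nounits"]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_gpu_csv(result.stdout)


# Illustrative output for two GPUs, not captured from real hardware.
sample = "0, 61, 98, 287.45\n1, 58, 97, 279.10\n"
print(parse_gpu_csv(sample))
```

Calling `query_gpus()` from a scheduler or a loop that sleeps for 60 seconds would give the minute-level cadence described above.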

Use Professional Tools

Use specialized tools such as NVIDIA DCGM (Data Center GPU Manager), the NVML library, or vendor-provided monitoring software for comprehensive health checks. These tools offer detailed diagnostic information, including internal GPU status and error logs.

Establish Early Warning Systems

Set reasonable threshold-based alerts to notify administrators promptly when GPU health metrics exceed normal ranges. Common alert triggers include sustained temperatures above 80°C and increasing ECC error counts.
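A "sustained" condition can be sketched as a sliding window that fires only when every recent sample exceeds the threshold, which avoids alerting on a single spike. The class name and the window length of three samples are illustrative assumptions; the 80°C threshold is the one mentioned above.

```python
from collections import deque


class SustainedAlert:
    """Fires when the last `samples_required` readings all exceed the threshold."""

    def __init__(self, threshold, samples_required):
        self.threshold = threshold
        self.window = deque(maxlen=samples_required)

    def update(self, value):
        """Record one sample and return True if the alert condition holds."""
        self.window.append(value)
        window_full = len(self.window) == self.window.maxlen
        return window_full and all(v > self.threshold for v in self.window)


alert = SustainedAlert(threshold=80.0, samples_required=3)
readings = [78, 82, 83, 85, 79]  # illustrative temperatures in °C
print([alert.update(r) for r in readings])
# [False, False, False, True, False]
```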

Logging and Analysis

Record historical health data for analysis to identify long-term trends and potential issues. Comparing data across different time periods helps detect early signs of performance degradation.
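One simple form of trend analysis is a least-squares slope over a series of recorded values, for example daily average temperatures under a comparable workload. The sketch below computes that slope with the standard library only; the data is illustrative, and what counts as a worrying slope is left as a judgment call.

```python
def slope_per_sample(values):
    """Least-squares slope of values against their sample index (0, 1, 2, ...)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples to estimate a trend")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    numerator = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(values))
    denominator = sum((i - x_mean) ** 2 for i in range(n))
    return numerator / denominator


daily_avg_temp_c = [70, 71, 72, 73]  # illustrative data
print(slope_per_sample(daily_avg_temp_c))   # 1.0
print(slope_per_sample([70, 70, 70]))       # 0.0
```

A persistently positive slope for temperature, or a negative one for sustained clock speed, is the kind of early sign of degradation worth investigating.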

Conclusion

GPU health checks are a critical step in ensuring the stable operation of computing systems. By establishing a robust monitoring framework and regularly inspecting key health metrics, potential issues can be detected and resolved promptly, safeguarding GPU performance and reliability. As GPUs become increasingly integral across industries, the importance of health checks will continue to grow. Whether for individual users or enterprise environments, prioritizing GPU health checks is essential to keeping computing resources in optimal condition.