I. Introduction: The Power of Image Inference in Today’s AI

Look around. Artificial intelligence is learning to see. It’s the technology that allows a self-driving car to identify a pedestrian, a factory camera to spot a microscopic defect on a production line, and a medical system to flag a potential tumor in an X-ray. This capability—where AI analyzes and extracts meaning from visual data—is called image inference, and it’s fundamentally changing how industries operate.

Image inference moves AI from the laboratory into the real world, transforming pixels into actionable predictions. However, this power comes with a significant computational demand. Processing high-resolution images or analyzing thousands of video streams in real time requires immense, reliably managed computing power. For businesses, the challenge isn’t just building an accurate model; it’s deploying it in a way that is fast, stable, and doesn’t consume the entire budget. This is where the journey from a promising algorithm to a profitable application begins.

II. What is Image Inference and How Does it Work?

A. The Lifecycle of an Image for Inference

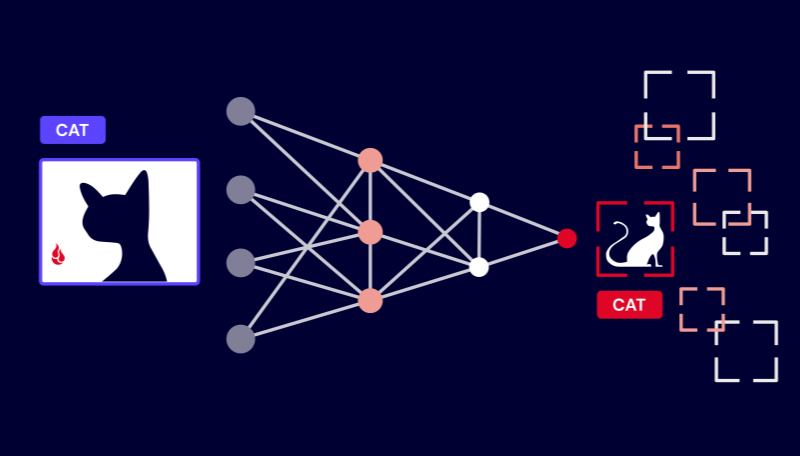

The journey of an image through an AI system is a fascinating, multi-stage process. It begins the moment a picture is captured, whether by a smartphone, a security camera, or a medical scanner. This raw image is then preprocessed—resized, normalized, and formatted into a structure the AI model can understand. Think of this as preparing a specimen for a microscope.
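The preprocessing step described above can be sketched in a few lines. This is a minimal illustration, not any particular framework's pipeline: the 224×224 target size, the 0.5 mean and standard deviation, and the channels-first output layout are assumptions chosen for the example, and real models document their own expected values.

```python
import numpy as np


def preprocess(image, size=(224, 224), mean=0.5, std=0.5):
    """Resize (nearest-neighbor), scale to [0, 1], normalize, and reorder to NCHW.

    `size`, `mean`, and `std` are illustrative assumptions; use the values
    your own model was trained with.
    """
    height, width = image.shape[:2]
    out_h, out_w = size
    # Nearest-neighbor resize: choose a source row/column for each output pixel.
    rows = np.arange(out_h) * height // out_h
    cols = np.arange(out_w) * width // out_w
    resized = image[rows[:, None], cols]
    # Scale 8-bit pixel values to [0, 1], then normalize.
    scaled = resized.astype(np.float32) / 255.0
    normalized = (scaled - mean) / std
    # Height-width-channel -> channel-height-width, plus a batch dimension.
    return normalized.transpose(2, 0, 1)[np.newaxis, ...]


# A random array stands in for a captured 640x480 RGB photo.
raw = np.random.default_rng(0).integers(0, 256, size=(480, 640, 3), dtype=np.uint8)
batch = preprocess(raw)
print(batch.shape, batch.dtype)
```

Production pipelines usually use a library resize with interpolation rather than nearest-neighbor sampling; the simpler method is used here only to keep the sketch dependency-light.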

Next comes the core step: the inference itself. The preprocessed image is fed into a pre-trained deep learning model, such as a Convolutional Neural Network (CNN). The model’s layers of artificial neurons work in concert to detect patterns, features, and objects, ultimately producing an output. This could be a simple label (“cat”), a bounding box around an object of interest, a segmented image highlighting specific areas, or even a new, generated image. This entire pipeline, from upload to insight, must be optimized for speed and efficiency to be useful.

B. Key Requirements for Effective Image Inference

For an image inference system to be successful in a business environment, it must excel in three critical areas:

Speed (Low Latency):

In applications like autonomous driving or interactive video filters, delays are unacceptable. Low latency—the time between receiving an image and delivering a prediction—is non-negotiable for real-time decision-making.

Accuracy:

The entire system is pointless if the predictions are wrong. The deployed model must maintain the high accuracy it achieved during training, which requires stable, consistent computational performance without errors or interruptions.

Cost-Efficiency at Scale:

A model that works perfectly for a hundred images becomes a financial nightmare at a million images. The infrastructure must be designed to process vast quantities of visual data at a sustainable cost-per-image, enabling the business to scale without going bankrupt.
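Of the three requirements above, latency is the easiest to measure directly. The sketch below times individual requests and reports the median and 95th-percentile latency, since averages can hide the slow requests that users actually notice. The `fake_predict` function is a hypothetical stand-in for a real model call.

```python
import statistics
import time


def measure_latencies_ms(predict, inputs):
    """Time each call to `predict` and return per-request latencies in milliseconds."""
    latencies = []
    for item in inputs:
        start = time.perf_counter()
        predict(item)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def summarize(latencies_ms):
    """Report the median, 95th percentile (nearest-rank), and worst-case latency."""
    ordered = sorted(latencies_ms)
    # Nearest-rank p95 with integer arithmetic: ceil(0.95 * n) - 1.
    p95_index = (95 * len(ordered) + 99) // 100 - 1
    return {
        "median_ms": statistics.median(ordered),
        "p95_ms": ordered[p95_index],
        "max_ms": ordered[-1],
    }


def fake_predict(image):
    # Hypothetical stand-in for a model call: sleeps for roughly one millisecond.
    time.sleep(0.001)


samples = measure_latencies_ms(fake_predict, range(20))
print(summarize(samples))
```

Tracking the 95th percentile alongside the median makes latency spikes visible even when typical requests are fast.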

III. The GPU: The Engine of Modern Image Inference

A. Why GPUs are Ideal for Image Workloads

At the heart of most modern image inference systems is the Graphics Processing Unit (GPU). Originally designed for rendering complex video game graphics, GPUs have a particular talent that makes them well suited to AI: parallel processing. Unlike a standard CPU, which excels at running a handful of tasks very quickly, a GPU is designed to perform thousands of simpler calculations simultaneously.

An image is essentially a massive grid of pixels, and analyzing it requires performing similar mathematical operations on each of these data points. A GPU can handle this enormous workload in parallel, dramatically speeding up the image inference process. Trying to run a complex vision model on CPUs alone would be like having a single cashier in a busy supermarket; the line would move impossibly slowly. A GPU, in contrast, opens all the checkouts at once.

B. Matching NVIDIA GPUs to Your Image Inference Needs

Not all visual AI tasks are the same, and fortunately, not all GPUs are either. Selecting the right hardware is crucial for balancing performance and budget:

NVIDIA A100/H100/H200:

These are the data center powerhouses. They are designed for large-scale, complex image inference tasks that require processing huge batches of high-resolution images simultaneously. Think of a medical imaging company analyzing thousands of high-resolution MRI scans overnight. The massive memory and computational throughput of these GPUs make such workloads feasible.

NVIDIA RTX 4090:

This GPU serves as an excellent, cost-effective solution for real-time video streams, prototyping, and deploying smaller models. For a startup building a real-time content moderation system for live video, the RTX 4090 offers a compelling balance of performance and affordability.

IV. Overcoming Bottlenecks in Image Inference Deployment

A. Common Challenges with Image Workloads

Despite having powerful GPUs, companies often hit significant roadblocks when deploying image inference at scale:

Unpredictable Latency Spikes:

When multiple inference requests hit a poorly managed system at once, they can create a traffic jam. A single request for a complex analysis can block others, causing delays that ruin the user experience in real-time applications.

Inefficient GPU Usage and High Costs:

GPUs are expensive, and many companies fail to use them to their full potential. It’s common to see GPUs sitting idle for periods or not processing images at their maximum capacity, leading to a high cost-per-inference without delivering corresponding value.

The Batch vs. Real-Time Dilemma:

Managing the infrastructure for different types of workloads—such as scheduled batch processing of millions of product images versus live analysis of security camera feeds—adds another layer of operational complexity.

B. The WhaleFlux Solution: Smart Management for Visual AI

These bottlenecks aren’t just hardware problems; they are resource management problems. This is precisely the challenge WhaleFlux is built to solve. WhaleFlux is an intelligent GPU resource management platform that acts as a high-efficiency orchestrator for your visual AI workloads, ensuring your computational power is used effectively, not just expensively.

V. How WhaleFlux Optimizes Image Inference Pipelines

A. Intelligent Scheduling for Consistent Performance

WhaleFlux’s core intelligence lies in its smart scheduling technology. Instead of allowing inference requests to collide and create bottlenecks, the platform dynamically allocates them across the entire available GPU cluster. This ensures that no single GPU becomes overwhelmed, maintaining consistently low latency even during traffic spikes. For a real-time application like autonomous vehicle perception, this consistent performance is not just convenient—it’s critical for safety.
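To make the idea of spreading requests across a cluster concrete, here is a generic least-loaded dispatch sketch. It is not WhaleFlux's actual algorithm, which the article does not describe; the function name and the per-request cost estimates (in milliseconds) are assumptions for illustration only.

```python
import heapq


def dispatch(request_costs_ms, gpu_count):
    """Send each request to the GPU with the least queued work so far.

    Returns the chosen GPU for each request and the final queued work per GPU.
    """
    # Heap entries are (queued_work_ms, gpu_id); the lightest-loaded GPU pops first.
    heap = [(0.0, gpu_id) for gpu_id in range(gpu_count)]
    heapq.heapify(heap)
    assignment = []
    for cost in request_costs_ms:
        load, gpu_id = heapq.heappop(heap)
        assignment.append(gpu_id)
        heapq.heappush(heap, (load + cost, gpu_id))
    loads = [0.0] * gpu_count
    for load, gpu_id in heap:
        loads[gpu_id] = load
    return assignment, loads


# Alternating heavy (40 ms) and light (5 ms) requests on two GPUs.
assignment, loads = dispatch([40, 5, 40, 5, 40, 5], gpu_count=2)
print(assignment, loads)
```

With this input, strict alternation between the two GPUs would queue all three heavy requests (120 ms) on one device, whereas the least-loaded rule leaves the busier GPU with 85 ms of work.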

B. A Tailored GPU Fleet for Every Visual Task

We provide access to a curated fleet of industry-leading NVIDIA GPUs, including the H100, H200, A100, and RTX 4090. This allows you to precisely match your hardware to your specific image inference needs. You can deploy powerful A100s for your most demanding batch analysis jobs while using a cluster of cost-effective RTX 4090s for high-volume, real-time video streams.

To provide the stability required for 24/7 image processing services, we offer flexible purchase or rental options with a minimum one-month term. This approach eliminates the cost volatility of per-second cloud billing and provides a predictable infrastructure cost, making financial planning straightforward.

C. Maximizing Throughput and Minimizing Cost

The ultimate financial benefit of WhaleFlux comes from maximizing GPU utilization. By ensuring that every GPU in your cluster is working efficiently and minimizing idle time, WhaleFlux directly drives down your cost-per-image. This “images-per-dollar” metric is crucial for profitability. When you can process more images with the same hardware investment, you unlock new opportunities for scale and growth, making large-scale visual AI projects economically viable.
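The link between utilization and cost-per-image is simple arithmetic, sketched below. The hourly price and peak throughput are made-up numbers for illustration, not WhaleFlux pricing or measured GPU performance.

```python
def cost_per_million_images(gpu_cost_per_hour, peak_images_per_second, utilization):
    """Cost of processing one million images at a given average utilization.

    `utilization` is the fraction of peak throughput actually achieved (0 to 1).
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    images_per_hour = peak_images_per_second * 3600 * utilization
    return gpu_cost_per_hour / images_per_hour * 1_000_000


# Hypothetical figures: a $2.00/hour GPU that peaks at 500 images per second.
for utilization in (0.4, 0.8):
    cost = cost_per_million_images(2.00, 500, utilization)
    print(f"utilization {utilization:.0%}: ${cost:.2f} per million images")
```

Because the hourly cost is fixed, cost-per-image is inversely proportional to utilization: doubling utilization from 40% to 80% halves the cost of each image.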

VI. Conclusion: Building Scalable and Reliable Visual AI with WhaleFlux

The ability to reliably understand and interpret visual data is a transformative competitive advantage. Robust and efficient image inference is the key that unlocks this value, turning passive pixels into proactive insights.

The path to deploying scalable visual AI doesn’t have to be fraught with performance nightmares and budget overruns. WhaleFlux provides the managed GPU infrastructure and intelligent orchestration needed to deploy your image-based models with confidence. We provide the tools to ensure your AI is not only accurate but also fast, stable, and cost-effective.

Ready to power your visual AI applications and build a more intelligent business? Explore how WhaleFlux can optimize your image inference pipeline and help you see the world more clearly.

FAQs

1. What makes image inference a critical but resource-intensive task for business AI applications?

Image inference, the process of using trained models to analyze and extract information from new images, is fundamental to countless business applications, from automated visual quality inspection in manufacturing to real-time product recognition in retail and medical image analysis. However, these tasks are computationally demanding. Models must process high-dimensional pixel data in real time or near real time to deliver business value, placing significant strain on infrastructure. Choosing the right optimization strategy and hardware is therefore not just a technical concern, but a direct driver of operational efficiency, scalability, and cost-effectiveness. The goal for Business AI is to transform raw pixels into reliable predictions as fast and as affordably as possible.

2. What are some key pre-processing and architectural techniques to optimize image inference performance before scaling?

Performance optimization begins long before deployment. Key techniques include:

- Intelligent Pre-processing: Instead of feeding entire images into a model, a common and effective strategy is to first use an object detection network to crop the image around the region of interest. This focuses the model’s computational power on relevant pixels, reduces input size (e.g., cropping can remove over 50% of irrelevant pixels in some medical images), and can significantly improve accuracy by normalizing the scale of the subject.

- Task-Specific Architecture Design: For tasks combining images with other data (like text or sensor readings), feeding raw pixels directly into a standard neural network is inefficient. A better approach is to design specialized model architectures that process image features and other high-level data through separate pathways before combining them, leading to more meaningful learning and better inference.

- Method Selection (Prompt vs. Fine-Tuning): For many business use cases, you may not need to train a model from scratch. For simpler, well-defined tasks, prompt engineering on a powerful pre-trained vision model can be a low-cost, rapid solution. For complex, high-precision, or long-term deployment needs, Supervised Fine-Tuning (SFT) on domain-specific data is necessary to achieve the required accuracy and output stability.
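The region-of-interest cropping described in the first technique above can be sketched as follows. The bounding box is assumed to come from a separate object detector that is not shown, and the 10% margin and image dimensions are illustrative choices rather than recommended values.

```python
def expand_and_clamp(box, image_size, margin=0.1):
    """Grow a detector box by a relative margin and clamp it to the image bounds.

    `box` is (x0, y0, x1, y1) in pixels; `image_size` is (width, height).
    """
    x0, y0, x1, y1 = box
    width, height = image_size
    pad_x = int((x1 - x0) * margin)
    pad_y = int((y1 - y0) * margin)
    return (
        max(0, x0 - pad_x),
        max(0, y0 - pad_y),
        min(width, x1 + pad_x),
        min(height, y1 + pad_y),
    )


def pixels_removed_fraction(crop_box, image_size):
    """Fraction of the original pixels that the crop discards."""
    x0, y0, x1, y1 = crop_box
    width, height = image_size
    return 1 - ((x1 - x0) * (y1 - y0)) / (width * height)


# Hypothetical detector output on a 1024x1024 image.
crop = expand_and_clamp((300, 250, 700, 650), (1024, 1024))
print(crop, f"{pixels_removed_fraction(crop, (1024, 1024)):.0%} of pixels removed")
```

The margin keeps some context around the subject so that a slightly misplaced detection does not cut it off; the resulting crop would then be resized to the model's input size.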

3. What are the main GPU resource management challenges when deploying image inference at scale?

Scaling image inference efficiently is hindered by several key challenges in GPU cluster management:

- Resource Fragmentation and Low Utilization: Static allocation of GPUs often leads to severe inefficiency. While some GPUs are overloaded with inference requests (creating “hot spots”), others sit idle (“cold spots”), dragging down the cluster’s average utilization. This is a common problem, and utilization rates can fall below 50%.

- Diverse and Dynamic Workloads: An inference cluster must handle a mix of tasks with different requirements: high-throughput batch processing, low-latency real-time requests, and varying model sizes. Managing these priorities without intelligent scheduling leads to long job queues, missed latency targets, and wasted resources.

- Complex Orchestration Overhead: Manually managing the lifecycle of hundreds of inference jobs across a multi-GPU cluster, ensuring health checks, load balancing, and recovery from failures, becomes a major operational burden that distracts teams from core AI development.

4. How do advanced scheduling and load balancing strategies address these GPU challenges?

Modern resource management systems employ sophisticated strategies to “tame” the cluster:

- Dynamic, Graph-Based Scheduling: Advanced schedulers treat the cluster’s resources (CPUs, GPUs, memory, network) as an interconnected graph. This allows for fine-grained, topology-aware scheduling, placing tasks on GPUs that are physically or network-optimally close to their data, minimizing communication delays and maximizing throughput.

- Priority and Preemptive Scheduling: Systems can implement weighted fair queueing or priority-based scheduling to ensure critical business inference jobs (e.g., real-time customer-facing apps) are served before less urgent batch jobs. In some cases, low-priority tasks can be preempted to free up resources for high-priority ones, with checkpoints enabling seamless resumption later.

- Intelligent Load Balancing: Beyond simple round-robin, schedulers perform least-load-first allocation, directing new inference requests to the GPU with the most available memory and lowest compute utilization. For distributed inference jobs, they also handle model or data parallelism to split work efficiently across multiple GPUs.
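As a small illustration of the priority-based scheduling described above, the sketch below serves real-time jobs before batch jobs and keeps first-in, first-out order within each class. The class name, priority constants, and job IDs are hypothetical; this shows the general technique, not any specific scheduler's implementation.

```python
import heapq
import itertools

# Lower numbers are served first (illustrative priority classes).
REALTIME, BATCH = 0, 1


class PriorityScheduler:
    """Strict-priority job queue with FIFO ordering inside each priority class."""

    def __init__(self):
        self._heap = []
        # A monotonically increasing counter breaks ties in arrival order.
        self._arrival = itertools.count()

    def submit(self, job_id, priority):
        heapq.heappush(self._heap, (priority, next(self._arrival), job_id))

    def next_job(self):
        """Return the next job ID to run, or None if the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


scheduler = PriorityScheduler()
for job_id, priority in [
    ("batch-1", BATCH),
    ("batch-2", BATCH),
    ("rt-1", REALTIME),
    ("batch-3", BATCH),
    ("rt-2", REALTIME),
]:
    scheduler.submit(job_id, priority)

order = [scheduler.next_job() for _ in range(5)]
print(order)
```

Strict priority like this can starve batch jobs under sustained real-time load, which is one reason schedulers use weighted fair queueing instead of, or alongside, fixed priorities.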

5. How does a specialized platform like WhaleFlux provide an integrated solution for efficient, large-scale image inference?

WhaleFlux is an intelligent GPU resource management tool designed specifically to solve the end-to-end challenges of deploying AI models like image inference systems. It moves beyond mere hardware provision to deliver optimized efficiency.

- Unified Optimization Layer: WhaleFlux integrates the advanced scheduling and load balancing strategies mentioned above into a seamless platform. It actively monitors the cluster, packing inference jobs intelligently to eliminate idle time and resource fragmentation, thereby dramatically increasing the utilization of valuable NVIDIA GPUs (such as the H100, A100, or RTX 4090).

- Stability and Cost Efficiency for Business AI: By ensuring stable, high-throughput execution of inference workloads, WhaleFlux allows businesses to serve more predictions with fewer hardware resources. This directly translates to lower cloud computing costs and a more predictable total cost of ownership. Companies can access these optimized NVIDIA GPU resources through flexible purchase or rental plans tailored to their sustained workload needs.

- Focus on Core Innovation: By abstracting away the immense complexity of cluster orchestration, WhaleFlux allows AI and data science teams to focus entirely on developing and refining their image models and business logic, accelerating the path from prototype to production-scale deployment.