Introduction: The AI Hardware Evolution

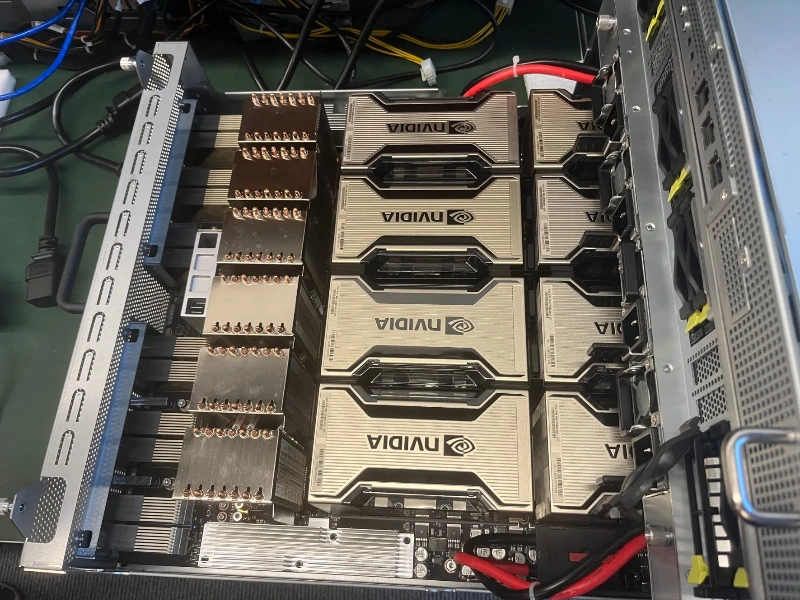

The race for AI supremacy is fueled by ever-more-powerful hardware. NVIDIA’s H800 GPU emerged as a critical workhorse, delivering the computational power needed to train large language models (LLMs) and handle demanding AI workloads. As models grow larger and datasets more complex, demand for these accelerators keeps climbing. However, simply acquiring more H800 GPUs isn’t a sustainable or efficient strategy. Scaling GPU clusters introduces its own challenges: rising cloud costs, intricate management overhead, and constant pressure to maximize the return on large hardware investments. How can enterprises leverage existing H800 investments while preparing for the next generation of AI? The answer lies not just in hardware, but in intelligent orchestration.

The H800 GPU: Strengths and Limitations

There’s no denying the H800’s significant role in advancing AI capabilities. Its high-bandwidth memory and computational throughput made it a cornerstone for many demanding training tasks. Yet, as deployments scale, inherent limitations become apparent:

- Suboptimal Utilization in Multi-GPU Setups: H800 clusters often suffer from poor load balancing. Jobs might saturate some GPUs while others sit idle, or communication bottlenecks slow down distributed training. This inefficiency directly wastes expensive compute resources.

- Hidden Costs of Underused Resources: Paying for H800 instances that aren’t running at peak efficiency is a massive drain. Idle cycles or partially utilized GPUs represent pure financial loss, significantly inflating the total cost of ownership (TCO).

- Scalability Bottlenecks for Growing Models: As model sizes explode (think multi-trillion-parameter LLMs), even large H800 clusters can hit performance ceilings. Scaling further often means complex, error-prone manual cluster expansion and added management work.

While powerful, H800 clusters desperately need intelligent management to overcome these inefficiencies and unlock their true potential. Raw power alone isn’t enough in the modern AI landscape.
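To make the cost of underused resources concrete, here is a minimal sketch of the arithmetic: idle spend is total spend multiplied by the fraction of time GPUs are not doing useful work. The function name `idle_cost`, the cluster size, the hourly rate, and the 60% utilization figure are all hypothetical values chosen for illustration, not measurements or WhaleFlux pricing.

```python
def idle_cost(num_gpus: int, hourly_rate: float, hours: float, utilization: float) -> float:
    """Return the spend attributable to idle GPU time.

    utilization is the average fraction of time the GPUs do useful work (0.0 to 1.0).
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    total_spend = num_gpus * hourly_rate * hours
    return total_spend * (1.0 - utilization)


# Hypothetical inputs: 8 GPUs at $2.00 per GPU-hour over a 720-hour (30-day) month.
wasted = idle_cost(num_gpus=8, hourly_rate=2.0, hours=720, utilization=0.6)
print(f"Idle spend: ${wasted:,.2f}")  # Idle spend: $4,608.00
```

Under these assumed numbers, 40% of an $11,520 monthly bill pays for idle time, which is why utilization matters as much as raw GPU count.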

WhaleFlux: Your AI Infrastructure Amplifier

This is where WhaleFlux transforms the game. WhaleFlux isn’t just another cloud portal; it’s an intelligent GPU resource management platform built specifically for AI enterprises. Think of it as the essential optimization layer that sits on top of your existing GPU fleet, including your valuable H800 investments. Its core mission is simple: maximize the return on investment (ROI) for your current H800 GPUs while seamlessly future-proofing your infrastructure for what comes next.

How does WhaleFlux achieve this?

Intelligent Orchestration:

WhaleFlux dynamically analyzes each workload’s demands (compute, memory, bandwidth) and assigns tasks across your entire mixed GPU cluster. Whether you run H800s, H100s, or a combination, WhaleFlux looks for the optimal placement. It prevents H800 overload (which can cause throttling or instability) and eliminates idle time, so every GPU cycle is productive. This dynamic scheduling drastically improves cluster-wide efficiency.
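The general idea of demand-aware placement can be sketched with a simple greedy heuristic. This is not WhaleFlux’s actual algorithm, which the article does not describe; it is an illustrative sketch that considers only memory, and the `Gpu`, `Job`, and `place_jobs` names and the job sizes are hypothetical. Each job goes to the GPU that can fit it and currently has the lowest memory load.

```python
from dataclasses import dataclass, field


@dataclass
class Gpu:
    name: str
    memory_gb: float          # total device memory
    used_gb: float = 0.0      # memory already committed to jobs
    jobs: list = field(default_factory=list)

    @property
    def free_gb(self) -> float:
        return self.memory_gb - self.used_gb


@dataclass
class Job:
    name: str
    memory_gb: float          # memory the job needs on a single GPU


def place_jobs(jobs, gpus):
    """Assign each job to the fitting GPU with the lowest memory load fraction.

    Returns a dict of job name -> GPU name, or None when no GPU can fit the job.
    """
    placement = {}
    # Place the largest jobs first so small jobs do not crowd them out.
    for job in sorted(jobs, key=lambda j: j.memory_gb, reverse=True):
        candidates = [g for g in gpus if g.free_gb >= job.memory_gb]
        if not candidates:
            placement[job.name] = None
            continue
        target = min(candidates, key=lambda g: g.used_gb / g.memory_gb)
        target.used_gb += job.memory_gb
        target.jobs.append(job.name)
        placement[job.name] = target.name
    return placement


# Hypothetical mixed cluster: two 80 GB H800s and one 24 GB RTX 4090.
gpus = [Gpu("h800-0", 80), Gpu("h800-1", 80), Gpu("rtx4090-0", 24)]
jobs = [Job("train-a", 70), Job("finetune-b", 20), Job("infer-c", 10), Job("train-d", 60)]
print(place_jobs(jobs, gpus))
```

In this example the two large training jobs land on separate H800s, the fine-tuning job goes to the otherwise idle RTX 4090, and the small inference job fills spare capacity on an H800. A production scheduler would also weigh compute, interconnect bandwidth, and job priority.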

Unified Management:

Ditch the complexity of managing different GPU types through separate tools or scripts. WhaleFlux provides a single, intuitive control plane for your entire heterogeneous fleet. Monitor H800s alongside H100s, H200s, A100s, or RTX 4090s. Deploy jobs, track resource usage, and manage configurations seamlessly across all your accelerators from one dashboard. This drastically reduces operational overhead and eliminates compatibility hassles.
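For context, this is roughly what per-host monitoring looks like without a unified control plane. The sketch below uses NVIDIA’s `nvidia-smi` CSV query mode and has nothing to do with WhaleFlux’s own interface, which the article does not document. The helper names `parse_gpu_csv` and `query_local_gpus` and the sample readings are hypothetical.

```python
import csv
import io
import subprocess

QUERY_FIELDS = ["index", "name", "utilization.gpu", "memory.used", "memory.total"]


def parse_gpu_csv(text):
    """Parse `nvidia-smi --format=csv,noheader,nounits` output into dicts."""
    rows = []
    for record in csv.reader(io.StringIO(text), skipinitialspace=True):
        if not record:
            continue  # skip blank lines
        index, name, util, mem_used, mem_total = record
        rows.append({
            "index": int(index),
            "name": name,
            "utilization_pct": float(util),
            "memory_used_mib": float(mem_used),
            "memory_total_mib": float(mem_total),
        })
    return rows


def query_local_gpus():
    """Run nvidia-smi on this host; requires an installed NVIDIA driver."""
    cmd = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(QUERY_FIELDS),
        "--format=csv,noheader,nounits",
    ]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_gpu_csv(result.stdout)


# Illustrative sample output (made-up readings), so the parser runs without a GPU.
sample = "0, NVIDIA H800, 37, 40960, 81559\n1, NVIDIA H800, 0, 12, 81559\n"
idle = [gpu["index"] for gpu in parse_gpu_csv(sample) if gpu["utilization_pct"] == 0]
print("Idle GPUs:", idle)
```

Running a script like this on every node, for every GPU type, is the kind of per-host tooling a single dashboard is meant to replace.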

Cost Control:

WhaleFlux directly attacks the hidden costs of underutilization. By packing workloads efficiently, eliminating idle cycles, and preventing resource contention, it ensures your expensive H800s (and all other GPUs) are working hard when needed. This converts previously wasted capacity into valuable computation, directly lowering your cloud bill. You pay for power, not waste.
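Workload packing can be illustrated with first-fit decreasing, a classic bin-packing heuristic. This is a generic technique shown for intuition, not a description of WhaleFlux’s internals. It assumes workloads can share a GPU and that each one’s demand can be expressed as a fraction of one GPU; the function name and the demand values are hypothetical.

```python
def first_fit_decreasing(demands, capacity=1.0):
    """Pack fractional GPU demands onto GPUs using first-fit decreasing.

    demands: fraction of one GPU each workload needs (each <= capacity).
    Returns a list of bins, one list of demands per GPU.
    """
    bins = []
    for demand in sorted(demands, reverse=True):
        if demand > capacity:
            raise ValueError(f"demand {demand} exceeds a single GPU")
        for gpu in bins:
            # Small tolerance so sums like 0.5 + 0.3 + 0.2 survive float rounding.
            if sum(gpu) + demand <= capacity + 1e-9:
                gpu.append(demand)
                break
        else:
            # No existing GPU has room, so allocate another one.
            bins.append([demand])
    return bins


demands = [0.5, 0.3, 0.7, 0.2, 0.3]  # hypothetical per-workload GPU fractions
packed = first_fit_decreasing(demands)
print(len(demands), "GPUs with one workload each;", len(packed), "after packing")
# 5 GPUs with one workload each; 2 after packing
```

Here five workloads that would otherwise occupy five partly idle GPUs fit on two fully used ones. Real consolidation also has to respect memory limits and interference between co-located jobs.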

Beyond H800: WhaleFlux’s Performance Ecosystem

WhaleFlux’s power isn’t limited to optimizing your existing H800s. It also provides a strategic gateway to the latest NVIDIA GPU technologies, allowing you to augment or gradually transition your infrastructure without disruption.

H100/H200:

For enterprises pushing the boundaries, WhaleFlux provides access to NVIDIA’s flagship Hopper GPUs. The H100 and the newer H200 target the largest training jobs, with Transformer Engine acceleration and high-bandwidth memory (HBM3/HBM3e). WhaleFlux integrates these into your cluster, so you can run your most demanding workloads on the best hardware while offloading less intensive tasks to your H800s or A100s for better cost/performance.

A100:

The NVIDIA A100 remains a versatile and powerful workhorse, excellent for a wide range of training and inference tasks. WhaleFlux makes it easy to incorporate A100s into your cluster as a balanced option that often delivers a compelling price/performance ratio compared to the bleeding edge, especially when optimized by WhaleFlux.

RTX 4090:

Need powerful, cost-effective GPUs for scaling inference, model fine-tuning, or smaller-scale training? WhaleFlux includes the NVIDIA RTX 4090 in its ecosystem. While a consumer card, its raw compute power makes it highly effective for specific AI tasks when managed correctly within an enterprise environment by WhaleFlux.

Crucially, WhaleFlux offers flexible procurement: acquire these GPUs via outright purchase for long-term projects, or rent them with a minimum commitment of one month. Hourly rentals are not available. For sustained workloads, this makes budgeting more predictable than traditional hourly cloud pricing, while WhaleFlux ensures the GPUs are utilized optimally.
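Whether a monthly commitment beats hourly billing depends on how many hours per month the GPU is actually used. The sketch below computes the break-even point. Both prices are hypothetical placeholders, not WhaleFlux or cloud-provider quotes, and `breakeven_hours` is an illustrative helper.

```python
def breakeven_hours(monthly_rate: float, hourly_rate: float) -> float:
    """Hours of use per month at which a flat monthly rental matches hourly billing."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return monthly_rate / hourly_rate


HOURS_PER_MONTH = 730  # average month: 8760 hours / 12

# Hypothetical prices for one GPU: $1,000 per month flat vs $2.50 per hour on demand.
hours = breakeven_hours(monthly_rate=1000.0, hourly_rate=2.5)
print(f"Break-even: {hours:.0f} h/month ({hours / HOURS_PER_MONTH:.0%} utilization)")
# Break-even: 400 h/month (55% utilization)
```

With these assumed prices, a GPU that is busy more than about 55% of the month is cheaper on the monthly plan, which is the sustained-workload case described above. Bursty workloads below that threshold would favor hourly billing.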

Strategic Advantage: Future-Proof AI Operations

Adopting WhaleFlux delivers a powerful dual strategic advantage:

- Extract Maximum Value from Current H800 Investments: Immediately boost the efficiency and ROI of your existing H800 clusters. Reduce waste, accelerate training times, and lower operational costs today.

- Seamlessly Integrate Next-Gen GPUs as Needs Evolve: When the time comes to adopt H100s, H200s, or other architectures, WhaleFlux makes the transition smooth. Integrate new GPUs incrementally into your existing managed cluster. WhaleFlux handles the orchestration and workload distribution across mixed generations, maximizing the value of both old and new hardware without complex re-engineering.

The tangible outcomes are compelling:

- 30-50% Lower Cloud Costs: Through aggressive optimization of utilization and elimination of idle waste across H800s and other GPUs.

- 2x Faster Model Deployment: Automated resource tuning, optimal scheduling, and reduced management friction get models from development to production faster.

- Zero Compatibility Headaches: WhaleFlux’s unified platform and standardized environment management remove the pain of integrating and managing diverse hardware (H800, H100, A100, etc.) and software stacks.

Conclusion: Optimize Today, Scale Tomorrow

The NVIDIA H800 GPU has been instrumental in powering the current wave of AI innovation. However, its raw potential is often hamstrung by management complexity, underutilization, and the relentless pace of hardware advancement. Simply stacking more H800s is not an efficient or future-proof strategy.

WhaleFlux is the essential optimization layer modern AI infrastructure requires. It unlocks the full value trapped within your existing H800 investments by dramatically improving utilization, slashing costs, and simplifying management. Simultaneously, it provides a low-friction path to integrate other NVIDIA GPUs such as the H100, H200, A100, and RTX 4090, ensuring your infrastructure evolves as fast as your AI ambitions.

Don’t let your powerful H800 GPUs operate below their potential or become stranded assets. Maximize your H800 ROI while unlocking access to next-gen GPU power. Explore WhaleFlux Solutions today and transform your AI infrastructure efficiency.

FAQs

1. What is WhaleFlux and how does it relate to optimizing AI infrastructure beyond H800 GPUs?

WhaleFlux is an intelligent GPU resource management tool designed specifically for AI enterprises. It optimizes the utilization efficiency of multi-GPU clusters, helping enterprises reduce cloud computing costs while improving the deployment speed and stability of large language models. The phrase “beyond H800 GPUs” in the title refers to its support for a range of NVIDIA GPU models (not limited to the H800), enabling more comprehensive AI infrastructure optimization across diverse hardware configurations.

2. Which NVIDIA GPU models are available through WhaleFlux for AI infrastructure deployment?

WhaleFlux provides the full range of NVIDIA GPU models, including but not limited to NVIDIA H100, NVIDIA H200, NVIDIA A100, and NVIDIA RTX 4090. Enterprises can select appropriate GPU models based on their specific AI task requirements, such as large language model training, inference, or other high-performance computing needs.

3. Can enterprises buy or rent NVIDIA GPUs via WhaleFlux, and is hourly rental supported?

Yes, customers can choose to buy or rent NVIDIA GPUs through WhaleFlux according to their own needs. Hourly rental is not supported; rentals start at a minimum commitment of one month. This model is better suited to enterprises with long-term or stable GPU resource demands, helping them control their budgets.

4. How does WhaleFlux help AI enterprises reduce cloud computing costs while enhancing LLM deployment performance?

WhaleFlux achieves cost reduction by optimizing the utilization efficiency of multi-GPU clusters, minimizing resource waste caused by underutilization. Meanwhile, its intelligent management capabilities accelerate the deployment speed of large language models and improve operational stability, ensuring that AI tasks can run smoothly without frequent performance bottlenecks or downtime issues.

5. For enterprises currently using NVIDIA H800 GPUs, what benefits can WhaleFlux bring to their AI infrastructure optimization?

For enterprises using NVIDIA H800 GPUs, WhaleFlux can further improve the utilization efficiency of H800-based multi-GPU clusters. Additionally, it allows seamless expansion to other NVIDIA GPU models (such as H100, H200, A100, etc.) based on business development needs, providing more flexible and scalable infrastructure support to adapt to evolving AI task requirements.