1. Introduction: The GPU Power Craze – From Gamers to AI Giants

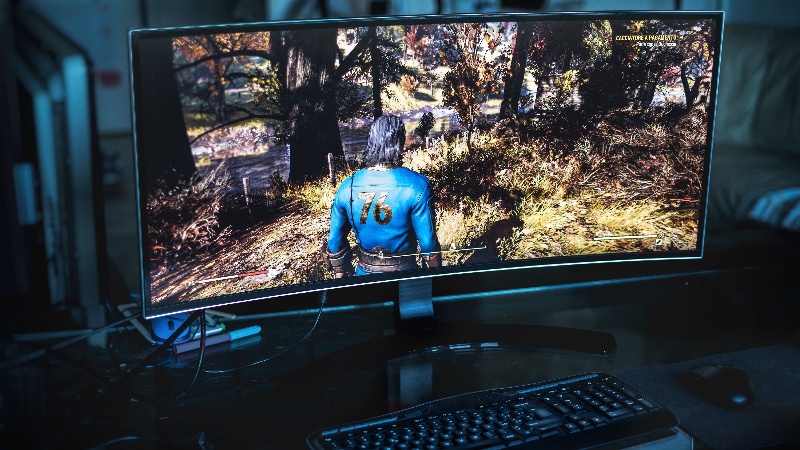

Searching for the “best GPU for 2K gaming” is a rite of passage for PC enthusiasts. Gamers chase high frame rates (144Hz+), buttery-smooth visuals at 1440p, and immersive detail in titles like Cyberpunk 2077 or Elden Ring. But while gamers push pixels, a far more demanding revolution is underway: industrial artificial intelligence. Training large language models (LLMs) like the ones behind ChatGPT, or deploying complex deep learning systems, requires computational muscle that dwarfs even the most hardcore gaming setup. For AI enterprises, the quest isn’t about frames per second; it’s about efficiently harnessing industrial-scale GPU resources. This is where specialized solutions like WhaleFlux become mission-critical, transforming raw hardware into cost-effective, reliable AI productivity.

2. The Gaming Benchmark: What Makes a “Best” GPU for 2K?

For gamers, the “best” GPU balances four key pillars:

- High Frame Rates: Smooth gameplay demands 100+ FPS, especially in competitive shooters or fast-paced RPGs.

- Resolution & Detail: 2K (1440p) with ultra settings is the sweet spot, offering clarity without the extreme cost of 4K.

- VRAM Capacity: 8GB+ is essential for modern textures; 12GB-16GB (e.g., RTX 4070 Ti) future-proofs your rig.

- Price-to-Performance: Value matters. Cards like the RTX 4070 Super deliver excellent 1440p performance without breaking the bank.

These GPUs excel at rendering gorgeous virtual worlds. But shift the workload from displaying complex scenes to creating intelligence, and their limitations quickly become apparent.

3. When Gaming GPUs Aren’t Enough: The AI/ML Reality Check

Imagine trying to train a ChatGPT-class model on a gaming GPU. You’d hit a wall almost immediately. AI workloads demand resources that eclipse gaming needs:

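- Raw Compute Power (TFLOPS): AI training relies on mixed-precision math (FP16 or BF16, with FP32 where accuracy demands it) running on specialized units like NVIDIA’s Tensor Cores. An RTX 4090 (about 82 TFLOPS FP32) is powerful, but industrial AI needs thousands of TFLOPS of sustained throughput, spread across many GPUs.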

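- Massive VRAM (48GB+): A 70-billion-parameter model such as Llama 3 70B needs about 140GB just to hold its weights in 16-bit precision, before activations or optimizer state. Gaming GPUs top out at 24GB (RTX 4090), which is insufficient for models this size or for serious batch sizes.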

- Multi-GPU Scalability: Training happens across clusters. Current consumer cards such as the RTX 4090 lack high-speed interconnects like NVLink, so GPU-to-GPU traffic falls back to the much slower PCIe bus.

- Reliability & Stability: Model training runs for weeks. Gaming GPUs aren’t engineered for 24/7 data center endurance.

- Cost Efficiency at Scale: A single H100 GPU rents for roughly $5/hour in the cloud. Without optimization, the bill for a large cluster spirals into millions of dollars per month.

Even the mighty RTX 4090, while useful for prototyping, becomes a bottleneck in production AI pipelines.
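The bottlenecks above can be put into rough numbers. The Python sketch below is a back-of-envelope calculator, not a benchmark. It assumes 2 bytes per parameter for FP16/BF16 weights, uses the widely cited approximation of about 6 × parameters × tokens floating-point operations for training, and models a bandwidth-only ring all-reduce in which each GPU moves 2(n − 1)/n of the gradient bytes. The function names, the 40% utilization default, the 15-trillion-token dataset, and the link bandwidths (about 25 GB/s effective for PCIe, 450 GB/s per direction for H100 NVLink) are illustrative assumptions.

```python
# Back-of-envelope sizing for the AI bottlenecks described above.
# Every number passed in below is an illustrative assumption, not a benchmark.

SECONDS_PER_DAY = 86_400


def weight_memory_gb(params: float, bytes_per_param: float = 2.0) -> float:
    """GB (1e9 bytes) needed just to hold the weights; FP16/BF16 use 2 bytes each.
    Activations, optimizer state, and KV caches come on top of this."""
    return params * bytes_per_param / 1e9


def training_days(params: float, tokens: float, peak_tflops: float,
                  num_gpus: int = 1, utilization: float = 0.4) -> float:
    """Rough wall-clock days using the ~6 * params * tokens FLOP approximation.
    `utilization` is the assumed fraction of peak throughput actually achieved."""
    total_flops = 6 * params * tokens
    sustained_flops_per_s = peak_tflops * 1e12 * num_gpus * utilization
    return total_flops / sustained_flops_per_s / SECONDS_PER_DAY


def ring_allreduce_seconds(grad_bytes: float, num_gpus: int,
                           link_gb_per_s: float) -> float:
    """Bandwidth-only time for one ring all-reduce: each GPU sends (and receives)
    2 * (n - 1) / n of the gradient bytes over its link. Latency is ignored."""
    traffic_bytes = 2 * (num_gpus - 1) / num_gpus * grad_bytes
    return traffic_bytes / (link_gb_per_s * 1e9)


if __name__ == "__main__":
    # A 70B-parameter model in 16-bit precision vs. a 24GB RTX 4090.
    print(f"70B weights: {weight_memory_gb(70e9):.0f} GB")  # 140 GB

    # An 8B model on an assumed 15T tokens, one RTX 4090 at its ~82 TFLOPS FP32 peak.
    days = training_days(8e9, 15e12, peak_tflops=82)
    print(f"Single RTX 4090: ~{days / 365:,.0f} years")

    # Syncing 16 GB of FP16 gradients (8B params) across 8 GPUs, once per step.
    grads = weight_memory_gb(8e9) * 1e9
    print(f"PCIe (~25 GB/s):    {ring_allreduce_seconds(grads, 8, 25):.2f} s per step")
    print(f"NVLink (~450 GB/s): {ring_allreduce_seconds(grads, 8, 450):.3f} s per step")
```

The exact outputs matter less than the orders of magnitude: under these assumptions the single-card training estimate lands in centuries, and the gradient sync over PCIe is about 18 times slower than over NVLink, which is why production runs spread work across many interconnected data center GPUs.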

4. Enter the Industrial Arena: GPUs Built for AI Workloads

This is where data center-grade GPUs shine:

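- NVIDIA H100: The undisputed AI leader. Its Transformer Engine, FP8 support, and 80GB of high-bandwidth memory deliver what NVIDIA cites as up to 30X faster inference on the largest LLMs than the A100, along with several-fold faster training.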

- NVIDIA H200: Features 141GB of ultra-fast HBM3e memory—critical for inference on trillion-parameter models.

- NVIDIA A100: The battle-tested workhorse. Its 40GB/80GB variants remain vital for inference and mid-scale training.

- (Context) NVIDIA RTX 4090: Useful for small-scale R&D or fine-tuning, but lacks the memory, scalability, and reliability for enterprise deployment.

Owning or renting these GPUs is just step one. The real challenge? Managing them efficiently across dynamic AI workloads. An idle H100 rented at around $5/hour still costs roughly $3,650 a month, wasted potential no business can afford.
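The idle-GPU arithmetic is easy to check. This minimal sketch uses the roughly $5/hour H100 price quoted above and an average of 730 hours per month; the function names and the utilization values are hypothetical inputs chosen for illustration, not measured figures.

```python
HOURS_PER_MONTH = 730  # 8,760 hours per year / 12 months


def monthly_cost(num_gpus: int, usd_per_gpu_hour: float) -> float:
    """Cost of keeping GPUs rented around the clock for one month."""
    return num_gpus * usd_per_gpu_hour * HOURS_PER_MONTH


def idle_spend(num_gpus: int, usd_per_gpu_hour: float, utilization: float) -> float:
    """Portion of the monthly bill paid for GPU-hours that did no useful work."""
    return monthly_cost(num_gpus, usd_per_gpu_hour) * (1 - utilization)


def cost_per_useful_hour(usd_per_gpu_hour: float, utilization: float) -> float:
    """Effective price of one productive GPU-hour at a given utilization."""
    return usd_per_gpu_hour / utilization


print(f"One H100, fully idle: ${idle_spend(1, 5.0, 0.0):,.0f}/month")   # $3,650/month
print(f"1,000 H100s:          ${monthly_cost(1000, 5.0):,.0f}/month")   # $3,650,000/month
for util in (0.4, 0.8):  # hypothetical utilization levels
    print(f"At {util:.0%} utilization: ${cost_per_useful_hour(5.0, util):.2f} per useful GPU-hour, "
          f"${idle_spend(1000, 5.0, util):,.0f}/month idle across 1,000 GPUs")
```

Raising utilization from 40% to 80% halves the effective price of each productive GPU-hour (from $12.50 to $6.25 at $5/hour), which is the lever any orchestration layer pulls.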

5. Optimizing Industrial GPU Power: Introducing WhaleFlux

This is where WhaleFlux transforms the game. Designed specifically for AI enterprises, WhaleFlux is an intelligent GPU resource management platform that turns expensive hardware clusters into streamlined, cost-effective engines. Here’s how:

- Intelligent Orchestration: WhaleFlux dynamically allocates training/inference jobs across mixed clusters (H100, H200, A100, etc.), maximizing utilization. No more idle GPUs while queues back up.

- Cost Reduction: By eliminating wasted cycles and optimizing workload placement, WhaleFlux slashes cloud bills by up to 65%. Rent or purchase H100/H200/A100/RTX 4090 GPUs via WhaleFlux with predictable monthly pricing—no hourly surprises.

- Boosted Deployment Speed: Deploy models 50% faster with automated resource provisioning. WhaleFlux handles the complexity, letting your team focus on innovation.

- Enhanced Stability: Ensure week-long training jobs run uninterrupted. WhaleFlux monitors health, handles failures, and prioritizes critical workloads.

- Scale Without Pain: Manage 10 or 10,000 GPUs seamlessly. WhaleFlux’s platform abstracts away cluster complexity, supporting hybrid fleets (including your existing on-prem hardware).
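To make the orchestration idea concrete, here is a generic greedy best-fit placement heuristic over a mixed cluster. It is a hypothetical illustration of the technique, not WhaleFlux’s actual algorithm or API: the `Gpu` and `Job` classes, the `place_jobs` function, and the job sizes are invented for the example. It weighs only memory and priority, whereas a production scheduler would also consider compute, interconnect topology, and preemption.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Gpu:
    name: str
    model: str          # e.g. "H100", "A100", "RTX 4090"
    memory_gb: float
    free_gb: float = field(init=False)

    def __post_init__(self) -> None:
        self.free_gb = self.memory_gb  # every GPU starts empty


@dataclass
class Job:
    name: str
    memory_gb: float    # memory the job needs on a single GPU
    priority: int       # higher values are placed first


def place_jobs(gpus: List[Gpu], jobs: List[Job]) -> Dict[str, Optional[str]]:
    """Greedy best-fit: place jobs in priority order, each onto the GPU whose
    remaining free memory is smallest but still sufficient. Jobs that fit
    nowhere map to None (left in the queue)."""
    placements: Dict[str, Optional[str]] = {}
    for job in sorted(jobs, key=lambda j: j.priority, reverse=True):
        candidates = [g for g in gpus if g.free_gb >= job.memory_gb]
        if not candidates:
            placements[job.name] = None
            continue
        best = min(candidates, key=lambda g: g.free_gb - job.memory_gb)
        best.free_gb -= job.memory_gb
        placements[job.name] = best.name
    return placements


cluster = [
    Gpu("gpu0", "H200", 141),
    Gpu("gpu1", "H100", 80),
    Gpu("gpu2", "A100", 40),
    Gpu("gpu3", "RTX 4090", 24),
]
queue = [
    Job("llm-train", 120, priority=4),
    Job("finetune", 30, priority=3),
    Job("batch-eval", 70, priority=2),
    Job("inference", 20, priority=1),
    Job("giant-eval", 200, priority=0),
]
print(place_jobs(cluster, queue))
```

With these inputs the fine-tuning job lands on the A100 rather than the H100, which keeps the H100 free for the 70GB batch-evaluation job, and the 200GB job stays queued because no single GPU can hold it. Best-fit is chosen here because it keeps large cards available for large jobs instead of fragmenting them.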

For AI teams drowning in cloud costs and resource fragmentation, WhaleFlux isn’t just convenient—it’s a competitive necessity.

6. Beyond the Single Card: Why Management is Key for AI Success

Procuring an H100 is step one. But true AI ROI comes from orchestrating fleets of them. Think of it like this:

- A lone H100 is a sports car.

- A WhaleFlux-optimized cluster is a bullet train network.

The “best GPU” for AI isn’t any single chip—it’s the system that maximizes their collective power. WhaleFlux provides the management layer that turns capital expenditure into scalable, reliable intelligence.

7. Conclusion: Powering the Future, Efficiently

The search for the “best GPU” reveals a stark divide: gamers optimize for pixels and frames; AI enterprises optimize for petaflops and efficiency. Success in industrial AI hinges not just on buying H100s or A100s, but on intelligently harnessing their potential. As models grow larger and costs soar, WhaleFlux emerges as the critical enabler—transforming raw GPU power into streamlined, cost-effective productivity.

Ready to optimize your AI infrastructure?

Stop overpaying for underutilized GPUs. Discover how WhaleFlux can slash your cloud costs and accelerate deployment:

Explore WhaleFlux GPU Solutions Today

FAQs

1. Can I use the same GPU for both 2K gaming and industrial AI development?

Yes, high-end NVIDIA GeForce GPUs like the RTX 4090 are excellent dual-purpose solutions. They deliver exceptional 2K gaming performance with maxed-out settings while providing substantial computational power for AI development tasks like model fine-tuning and inference. For larger-scale AI training, you would typically leverage dedicated data center GPUs.

2. What’s the main difference between a gaming GPU and an industrial AI GPU?

Gaming GPUs like the RTX 4090 are optimized for real-time graphics rendering and consumer availability. Industrial AI GPUs like NVIDIA’s A100 or H100 are designed for data centers: they feature technologies like error-correcting code (ECC) memory and are optimized for sustained, parallel computational throughput in multi-GPU server environments. Managing those environments is where a platform like WhaleFlux provides crucial value.

3. Which offers better value for AI prototyping: multiple gaming GPUs or one data center GPU?

For initial prototyping, a powerful gaming GPU like the RTX 4090 often provides great value and flexibility. However, for consistent industrial AI work, the stability and software stack of a dedicated data center GPU like the A100 can accelerate development. WhaleFlux solves this dilemma by offering flexible access to both classes of NVIDIA GPUs, allowing teams to rent the right hardware for each project phase without large upfront investments.

4. How can a small AI team access the same powerful GPUs used by large tech companies?

Through specialized GPU infrastructure providers like WhaleFlux. WhaleFlux offers access to the full range of professional NVIDIA GPUs, including the H100, H200, and A100, via monthly rental or purchase plans. This eliminates the high capital expenditure of building a private data center, allowing smaller teams to compete in the industrial AI space by leveraging optimized, managed GPU clusters.

5. Why can’t I just build a server with multiple RTX 4090s for industrial AI?

While technically possible, managing a multi-GPU server for industrial AI requires significant expertise in workload orchestration, cooling, and power delivery to achieve stable performance. WhaleFlux specializes in this exact challenge, providing optimized, pre-configured clusters of NVIDIA GPUs (from GeForce RTX 4090 cards to Hopper-based H100 and H200 GPUs) with the intelligent management software needed to maximize utilization and stability for enterprise AI workloads. The result is a more reliable and cost-efficient solution.