1. Introduction

*“Choosing between 8-core and 10-core GPUs isn’t just about specs: it’s about aligning with your AI goals.”*

For AI teams, every infrastructure decision impacts speed, cost, and scalability. The choice between an 8-core and 10-core GPU often feels like a high-stakes puzzle: Do more cores always mean better performance? Is the extra cost justified? The truth is, core count alone won’t guarantee efficiency. What matters is how well your GPUs match your workloads—and how intelligently you manage them. This is where tools like WhaleFlux transform raw hardware into strategic advantage. By optimizing clusters of any core count, WhaleFlux helps enterprises extract maximum value from every GPU cycle. Let’s demystify the core count debate.

2. Demystifying Core Counts: 8-Core vs. 10-Core GPUs

A. What Core Count Means

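GPU “cores” are the processing units that execute work in parallel. In NVIDIA GPUs, thousands of CUDA cores are grouped into streaming multiprocessors (SMs), so in this article “8-core” and “10-core” serve as shorthand for a lower and a higher compute tier. Think of the cores as workers on an assembly line: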

- More cores = Higher throughput potential for parallel tasks (e.g., training AI models).

- But: Performance depends on other factors like memory bandwidth, power limits, and software optimization.
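The second bullet can be made concrete with the roofline model, a standard way to bound performance by either compute throughput or memory bandwidth. The Python sketch below uses made-up figures for two hypothetical GPUs that share the same 2 TB/s of memory bandwidth, where the second has 25% more compute (as if it had 10 cores instead of 8):

```python
def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float, flops_per_byte: float) -> float:
    """Roofline bound: the lower of the compute ceiling and the memory ceiling."""
    memory_ceiling = bandwidth_tb_s * flops_per_byte  # TB/s x FLOP/byte = TFLOP/s
    return min(peak_tflops, memory_ceiling)

# Two hypothetical GPUs: same memory bandwidth, the second has 25% more compute.
for intensity in (10, 200):  # FLOPs performed per byte moved (low vs high arithmetic intensity)
    small = attainable_tflops(100, 2.0, intensity)
    big = attainable_tflops(125, 2.0, intensity)
    print(f"intensity={intensity}: small={small} TFLOP/s, big={big} TFLOP/s")
```

At low arithmetic intensity both GPUs hit the same 20 TFLOP/s memory ceiling, so the extra cores sit idle; only compute-heavy work (high intensity) sees the 25% gain.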

B. 8-Core GPUs: Strengths & Use Cases

*Example: NVIDIA RTX 4090, A100 40GB configurations.*

Ideal for:

- Mid-scale inference: Deploying chatbots or recommendation engines.

- Budget-sensitive projects: Startups or teams testing new models.

- Smaller LLMs: Fine-tuning models under 7B parameters.

Limits:

- Struggles with massive training jobs (e.g., 100B+ parameter models).

- Lower parallelism for large batch sizes.

C. 10-Core GPUs: Strengths & Use Cases

*Example: NVIDIA H100, H200, high-end A100s.*

Ideal for:

- Heavy training: Training foundation models or complex vision transformers.

- HPC simulations: Climate modeling or genomic analysis.

- Large-batch inference: Real-time processing for millions of users.

Tradeoffs:

- 30–50% higher cost vs. 8-core equivalents.

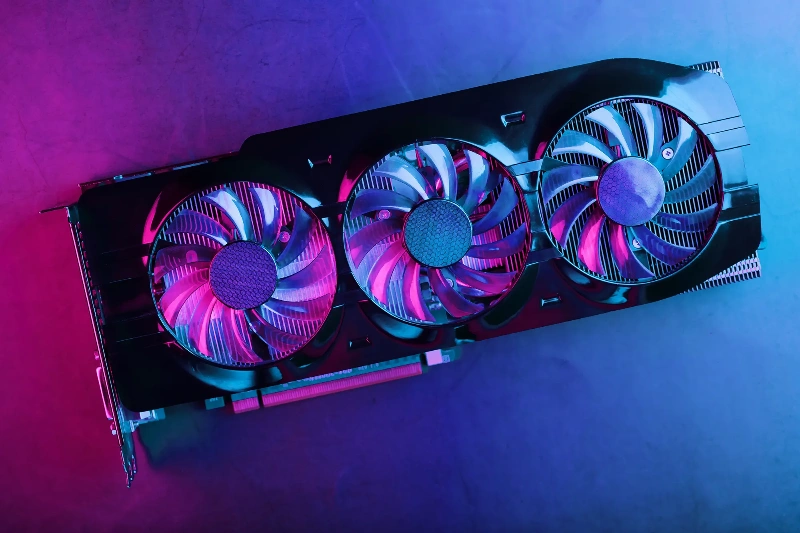

- Power/heat demands: Requires advanced cooling.

- Risk: Idle cores waste money if workloads don’t saturate them.

💡 Key Insight: A 10-core GPU isn’t “better”—it’s different. Mismatching cores to tasks burns budget.

3. Key Factors for AI Teams

A. Performance per Dollar

- The math: A 10-core GPU may offer 25% more cores but cost 40% more than an 8-core.

- Ask: Does your workload need that extra parallelism? For inference or smaller models, 8-core GPUs often deliver better ROI.

- WhaleFlux Impact: By preventing idle cores, WhaleFlux ensures every GPU—8-core or 10-core—runs at peak efficiency, making even “smaller” hardware cost-effective.
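The arithmetic in the first bullet can be made explicit. The Python sketch below compares throughput per dollar of the two tiers; the 40% price premium is the example figure from the text, and the perfect-scaling assumption (throughput grows exactly with core count) is a best case for the 10-core GPU, not a measured result:

```python
def value_ratio(speedup: float, cost_ratio: float) -> float:
    """Throughput per dollar of the pricier GPU relative to the cheaper one (1.0 = parity)."""
    return speedup / cost_ratio

# Best case: throughput scales perfectly with core count (10 / 8 = 1.25x).
ideal_speedup = 10 / 8
cost_ratio = 1.40  # assumed 40% price premium, as in the example above

ideal = value_ratio(ideal_speedup, cost_ratio)
print(f"10-core value vs 8-core: {ideal:.3f}x")  # below 1.0 means worse price-performance

# Break-even: the 10-core GPU must run your job at least cost_ratio times faster.
print(f"Required measured speedup to break even: {cost_ratio:.2f}x")
```

Even under ideal scaling, the 10-core tier delivers about 11% less throughput per dollar in this example, so it pays off only when a benchmark of your own workload shows a speedup above 1.4x.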

B. Workload Alignment

Training vs. Inference:

- Training: Benefits from 10-core brute force (if data/model size justifies it).

- Inference: 8-core GPUs frequently suffice, especially with optimization.

Test before scaling:

Run benchmarks! A 10-core GPU sitting 60% idle is a money pit.
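One way to quantify the “money pit” is the price paid per core-hour that actually does work. The Python sketch below uses hypothetical hourly prices and utilization figures chosen only for illustration; plug in your own benchmark results:

```python
def cost_per_busy_core_hour(price_per_hour: float, cores: int, utilization: float) -> float:
    """Price paid for each core-hour that actually does work."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return price_per_hour / (cores * utilization)

# Hypothetical prices and utilizations for illustration only.
small = cost_per_busy_core_hour(price_per_hour=1.00, cores=8, utilization=0.90)
big = cost_per_busy_core_hour(price_per_hour=1.40, cores=10, utilization=0.40)  # 60% idle
print(f"8-core: ${small:.3f}  10-core: ${big:.3f} per busy core-hour")
```

With these assumed numbers, the mostly idle 10-core GPU costs about 2.5 times as much per useful core-hour as the well-utilized 8-core GPU.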

C. Cluster Scalability

Myth:

“Adding more cores = linear performance gains.”

Reality:

Without smart orchestration, adding GPUs leads to:

- Resource fragmentation: Cores stranded across servers.

- Imbalanced loads: One GPU overwhelmed while others nap.
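The imbalance problem can be illustrated with a simple model of synchronous data-parallel training, where every GPU must finish its share before the next step begins. In this hypothetical Python sketch, relative speeds of 8 and 10 stand in for the two tiers; splitting work equally leaves the faster GPU waiting, while splitting in proportion to speed lets all GPUs finish together:

```python
def step_stats(work: list[float], speed: list[float]) -> tuple[float, float]:
    """Return (step time, average utilization) for one synchronous step."""
    times = [w / s for w, s in zip(work, speed)]
    step_time = max(times)  # every GPU waits for the slowest one
    utilization = sum(times) / (len(times) * step_time)
    return step_time, utilization

speeds = [8.0, 8.0, 8.0, 10.0]  # hypothetical relative throughput per GPU
total_work = 340.0

equal_split = [total_work / len(speeds)] * len(speeds)
proportional_split = [total_work * s / sum(speeds) for s in speeds]

print("equal:       ", step_stats(equal_split, speeds))
print("proportional:", step_stats(proportional_split, speeds))
```

The equal split takes 10.625 time units per step at about 95% average utilization; the proportional split takes 10.0 units at full utilization. Real clusters add communication and fragmentation costs this toy model ignores.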

WhaleFlux Fix:

Intelligently pools all cores (8 or 10) into a unified resource, turning scattered hardware into a supercharged cluster.

4. Beyond Cores: Optimizing Any GPU with WhaleFlux

A. Intelligent Resource Allocation

WhaleFlux dynamically assigns tasks across mixed GPU clusters (H100, H200, A100, RTX 4090), treating 8-core and 10-core units as part of a unified compute pool.

- Example: A training job might split across three 8-core GPUs and one 10-core GPU based on real-time availability—no manual tuning.

- Result: 95%+ core utilization, even in hybrid environments.
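The kind of availability-based split described above can be sketched as a greedy placement over a pool of free capacity. The Python below is a hypothetical illustration, not WhaleFlux's scheduler or API; the GPU names, shard sizes, and the use of free “cores” as the capacity unit are all assumptions:

```python
import heapq

def place_shards(free_cores: dict[str, int], shards: list[int]) -> dict[str, list[int]]:
    """Greedily place each shard (largest first) on the GPU with the most free capacity."""
    heap = [(-free, gpu) for gpu, free in free_cores.items()]
    heapq.heapify(heap)  # max-heap on free capacity via negated values
    placement: dict[str, list[int]] = {gpu: [] for gpu in free_cores}
    for shard in sorted(shards, reverse=True):
        neg_free, gpu = heapq.heappop(heap)
        if -neg_free < shard:
            # The roomiest GPU can't fit this shard, so no GPU can.
            raise RuntimeError(f"no GPU has {shard} free units")
        placement[gpu].append(shard)
        heapq.heappush(heap, (neg_free + shard, gpu))
    return placement

# Hypothetical pool: three 8-core-tier GPUs and one 10-core-tier GPU, all idle.
pool = {"gpu0": 8, "gpu1": 8, "gpu2": 8, "gpu3": 10}
placement = place_shards(pool, shards=[6, 6, 6, 8])
print(placement)
```

The largest shard lands on the 10-core GPU and the rest spread across the three 8-core GPUs, mirroring the example split in the text.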

B. Cost Efficiency

- Problem: Idle cores drain budgets (up to 40% waste in unoptimized clusters).

- WhaleFlux Solution: Analytics identify underused resources → auto-reassign tasks → cut cloud spend by 30%+.

- Real impact: For a team using 10-core GPUs for inference, WhaleFlux might reveal that 8-core GPUs are cheaper and deliver more throughput per dollar.
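The first step of that pipeline, identifying underused resources, can be sketched as a threshold over utilization samples. In this hypothetical Python example, the GPU names, hourly prices, samples, and the 50% threshold are illustrative assumptions, and the reassignment step is not shown:

```python
from statistics import mean

def underused_gpus(samples: dict[str, list[float]], hourly_cost: dict[str, float],
                   threshold: float = 0.5) -> dict[str, float]:
    """Map each GPU whose mean utilization is below `threshold` to its idle spend per hour."""
    report = {}
    for gpu, values in samples.items():
        avg = mean(values)
        if avg < threshold:
            # Share of the hourly price spent while the GPU sits idle.
            report[gpu] = round(hourly_cost[gpu] * (1.0 - avg), 2)
    return report

samples = {
    "h100-0": [0.90, 0.95, 0.85],
    "h100-1": [0.20, 0.30, 0.25],
    "rtx4090-0": [0.40, 0.50, 0.45],
}
hourly_cost = {"h100-0": 3.00, "h100-1": 3.00, "rtx4090-0": 0.80}
report = underused_gpus(samples, hourly_cost)
print(report)
```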

C. Simplified Deployment

- Flexibility: Purchase or lease WhaleFlux-managed GPUs (H100/H200/A100/RTX 4090) based on needs.

- Sustained workloads only: No hourly billing; leases have a 1-month minimum (ideal for training jobs or production inference).

- Zero lock-in: Scale up/down monthly without rearchitecting.

D. Stability for Scaling

- Eliminate bottlenecks: WhaleFlux’s load balancing ensures consistent LLM training/inference speeds—whether using 8-core or 10-core GPUs.

- Zero downtime: Failover protection reroutes jobs if a GPU falters.

- Proven results: Customers deploy models 50% faster with 99.9% cluster uptime.
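Rerouting on failure can be sketched as moving every job off an unhealthy GPU to the least-loaded healthy one. This is a hypothetical Python illustration, not WhaleFlux's failover logic; in practice a moved job would also need to resume from a checkpoint, which the sketch leaves out:

```python
def reroute(assignments: dict[str, str], healthy: set[str]) -> dict[str, str]:
    """Reassign jobs on failed GPUs to the healthy GPU with the fewest jobs (ties by name)."""
    if not healthy:
        raise RuntimeError("no healthy GPUs available")
    load = {gpu: 0 for gpu in sorted(healthy)}
    for gpu in assignments.values():
        if gpu in load:
            load[gpu] += 1
    result = {}
    for job, gpu in sorted(assignments.items()):
        if gpu in load:
            result[job] = gpu  # healthy: leave in place
        else:
            target = min(load, key=load.get)  # dicts keep insertion (sorted) order
            result[job] = target
            load[target] += 1
    return result

jobs = {"job-a": "gpu0", "job-b": "gpu1", "job-c": "gpu1", "job-d": "gpu2"}
rerouted = reroute(jobs, healthy={"gpu0", "gpu2"})  # gpu1 has failed
print(rerouted)
```

The two jobs from the failed GPU are spread across the survivors instead of piling onto one, which keeps the post-failure load balanced.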

5. Verdict: 8-Core or 10-Core?

| Scenario | Choose 8-Core GPU | Choose 10-Core GPU |
|---|---|---|
| Budget | Tight CapEx/OpEx | Ample funding |
| Workload Type | Inference, fine-tuning | Large-model training |
| Batch Size | Small/medium | Massive (e.g., enterprise LLMs) |
| Scalability Needs | Moderate growth | Hyper-scale AI research |

Universal Solution:

With WhaleFlux, you’re not locked into one choice. Mix 8-core and 10-core GPUs in the same cluster. The platform maximizes ROI by:

- Allocating lightweight tasks to 8-core units.

- Reserving 10-core beasts for heavy lifting.

- Ensuring no core goes underutilized.
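The routing rules above, together with the verdict table, can be expressed as a small decision function. This Python sketch is a hypothetical illustration of that policy, not WhaleFlux's implementation; the 70B-parameter and batch-size-256 thresholds are assumptions chosen only to make the example concrete:

```python
from dataclasses import dataclass

@dataclass
class Workload:
    kind: str              # "inference", "fine-tuning", or "training"
    batch_size: int
    params_billion: float

def choose_tier(w: Workload, big_model_b: float = 70.0, big_batch: int = 256) -> str:
    """Route heavy work to the 10-core tier and everything else to the 8-core tier."""
    large_model_training = w.kind == "training" and w.params_billion >= big_model_b
    massive_batch = w.batch_size >= big_batch
    return "10-core" if large_model_training or massive_batch else "8-core"

for w in [Workload("inference", 32, 7), Workload("fine-tuning", 16, 7),
          Workload("training", 512, 175), Workload("inference", 1024, 13)]:
    print(w.kind, w.params_billion, "->", choose_tier(w))
```

Keeping the thresholds as parameters makes it easy to tune the policy against your own benchmarks rather than hard-coding one team's cut-offs.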

6. Conclusion

Core count matters—but cluster intelligence matters more. Whether you deploy 8-core or 10-core GPUs, the real competitive edge lies in optimizing every cycle of your investment. WhaleFlux turns this philosophy into reality: slashing costs by 30%+, accelerating deployments, and bringing enterprise-grade stability to AI teams at any scale. Stop agonizing over core counts. Start optimizing with purpose.

Optimize your 8-core/10-core GPU cluster today. Explore WhaleFlux’s H100, H200 & A100 solutions.

FAQs

1. Is a 10-core GPU always better than an 8-core GPU for AI workloads?

Not necessarily. For NVIDIA GPUs, the number of streaming multiprocessors (SMs), the tensor cores, and memory bandwidth are better indicators of AI performance than a raw core count. A GPU with fewer but more capable SMs and stronger tensor cores can significantly outperform one with a higher core count.

2. What matters more for AI performance: core count or memory bandwidth?

Memory bandwidth is often more critical, especially for large language models. NVIDIA’s data center GPUs like the H100 and H200 pair massive memory bandwidth (4.8 TB/s on the H200) with specialized tensor cores, making them far more effective for AI than consumer GPUs with higher core counts but limited bandwidth.
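A rough way to see why bandwidth dominates LLM inference: when generating one token at a time for a single request, the GPU reads roughly every model weight from memory for each token, so tokens per second are capped near bandwidth divided by model size. The Python sketch below applies that bound to a hypothetical 7B-parameter model stored in FP16 (2 bytes per parameter), using approximate published bandwidths of about 4.8 TB/s for the H200 and about 1 TB/s for the RTX 4090. It ignores KV-cache traffic and batching (which lets many requests share each weight read), so treat the results as ceilings, not predictions:

```python
def max_decode_tokens_per_s(bandwidth_bytes_per_s: float, params: float,
                            bytes_per_param: float = 2.0) -> float:
    """Ceiling for single-request decoding: each token streams all weights once."""
    model_bytes = params * bytes_per_param
    return bandwidth_bytes_per_s / model_bytes

# Approximate published memory bandwidths (bytes per second).
gpus = {"H200": 4.8e12, "RTX 4090": 1.008e12}
for name, bandwidth in gpus.items():
    print(name, round(max_decode_tokens_per_s(bandwidth, params=7e9)), "tokens/s max")
```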

3. Can I combine multiple 8-core and 10-core GPUs for larger AI models?

Yes, but managing heterogeneous GPU clusters requires sophisticated orchestration. WhaleFlux solves this by intelligently distributing AI workloads across mixed NVIDIA GPU setups, automatically optimizing for each GPU’s capabilities whether you’re using RTX 4090s, A100s, or H100s in the same cluster.

4. When should we consider upgrading from consumer to data center GPUs?

When your AI models exceed available VRAM or when you need features like ECC memory for production reliability. WhaleFlux provides seamless access to NVIDIA data center GPUs through monthly rental plans, allowing you to scale from RTX 4090s to H100 systems without infrastructure overhead.
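A first check for the VRAM question is to estimate the memory a model's weights need: parameter count times bytes per parameter. The Python sketch below assumes FP16 weights (2 bytes each) and a hypothetical 1.2x overhead for activations and KV cache at inference; training needs substantially more for gradients and optimizer states. The 24 GB (RTX 4090) and 80 GB (H100) figures are the cards' published memory sizes:

```python
def est_vram_gb(params_billion: float, bytes_per_param: float = 2.0,
                overhead: float = 1.2) -> float:
    """Rough inference footprint in GB: weights padded by an assumed overhead factor."""
    return params_billion * bytes_per_param * overhead

def fits(params_billion: float, vram_gb: float) -> bool:
    """True if the rough estimate fits within a single GPU's memory."""
    return est_vram_gb(params_billion) <= vram_gb

for size in (7, 13, 70):
    print(f"{size}B: ~{est_vram_gb(size):.1f} GB | RTX 4090 (24 GB): {fits(size, 24)} "
          f"| H100 (80 GB): {fits(size, 80)}")
```

Under these assumptions a 13B model outgrows a single RTX 4090 but fits an H100, while a 70B model needs multiple GPUs or lower-precision weights.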

5. How can we maximize AI performance without over-investing in hardware?

WhaleFlux enables optimal resource utilization through intelligent GPU management. Our platform automatically routes workloads to the most suitable NVIDIA GPUs in your cluster – whether 8-core or 10-core architectures – ensuring maximum throughput while providing flexible access to the latest H100 and H200 systems via monthly commitments.